TL;DR: why is the number of connections to the web UI status pages so limited?

Hi, I just installed Rockstor and purchased the 5-year support. It's fun to play with. I'm running it inside a VirtualBox VM with 4 GB RAM, 2 CPUs and 1 physical disk mapped. The host has 16 GB of physical RAM and a 4-core i7 with multithreading and VT-d. I'm running Rockstor 3.8.10 and I'm logged in with my non-root account.

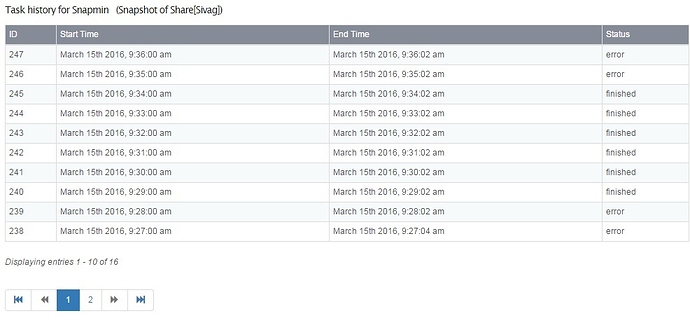

I connect to the web interface on my local network and everything works (https://10.1.2.3 or whatever). The problem is that I connect a few times a day from different systems, and after a while I started getting error messages and the UI wouldn't load. I love the ability to get that error.tar file.

I don't think I'm opening even 30 different connections. It seems strangely fragile that I can't open, say, 5 or 10 different connections.
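To convince myself that a handful of browsers could still hit a limit, here is a rough model. It assumes (my assumption, not anything I've confirmed in Rockstor) that the backend database enforces a fixed cap on open connections, that I've set to 100 here because that is PostgreSQL's default `max_connections`, and that some page loads never close the connection they opened. The class and function names are made up for illustration.

```python
"""Hypothetical model: a fixed connection cap plus a per-request leak.

Assumptions (not taken from Rockstor): the server allows `limit`
concurrent database connections, and every `leak_every`-th page load
never releases the connection it opened.
"""

MAX_CONNECTIONS = 100  # PostgreSQL's default max_connections; Rockstor's value may differ


class TooManyClients(Exception):
    """Stands in for 'FATAL: sorry, too many clients already'."""


class ConnectionCap:
    """Tracks how many connections are currently open against a hard limit."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.open = 0

    def acquire(self) -> None:
        # Refuse a new connection once the cap is reached.
        if self.open >= self.limit:
            raise TooManyClients("sorry, too many clients already")
        self.open += 1

    def release(self) -> None:
        self.open -= 1


def requests_until_failure(limit: int, leak_every: int) -> int:
    """Count page loads served before the cap is hit.

    Every request opens a connection; every `leak_every`-th request
    (hypothetical) forgets to close it, so it stays open until a restart.
    """
    cap = ConnectionCap(limit)
    served = 0
    while True:
        try:
            cap.acquire()
        except TooManyClients:
            return served
        served += 1
        if served % leak_every != 0:
            cap.release()  # a well-behaved request returns its connection


if __name__ == "__main__":
    print(requests_until_failure(MAX_CONNECTIONS, leak_every=10))
```

With a cap of 100 and one leak per 10 page loads, the model serves 1000 requests and then refuses every new one, no matter how few browsers are involved. Only resetting the counter (a restart, in real life) recovers, which would match what I see when I reboot the VM.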

In the error file, I see `FATAL: sorry, too many clients already`. Once the problem starts, I get it on every page I load from my local network. Restarting the VM fixes it. Here's the top of one of the stack traces:

```
[11/Jan/2016 09:10:40] ERROR [storageadmin.middleware:35] Exception occured while processing a request. Path: / method: GET
[11/Jan/2016 09:10:40] ERROR [storageadmin.middleware:36] FATAL: sorry, too many clients already

Traceback (most recent call last):
  File "/opt/rockstor/eggs/Django-1.6.11-py2.7.egg/django/core/handlers/base.py", line 112, in get_response
    response = wrapped_callback(request, *callback_args, **callback_kwargs)
  File "/opt/rockstor/src/rockstor/storageadmin/views/home.py", line 59, in home
    setup = Setup.objects.all()[0]
  File "/opt/rockstor/eggs/Django-1.6.11-py2.7.egg/django/db/models/query.py", line 132, in __getitem__
)
)
```
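To see when the errors begin relative to my connections, a small script could pull the timestamps of every "too many clients" line out of the log in the error tarball. This is a sketch that assumes every log line starts with the `[timestamp] LEVEL [source]` prefix seen in the log lines above; I haven't checked the exact file name inside the archive, and the function name is my own.

```python
import re
from datetime import datetime
from typing import Iterable, List

# Matches the prefix seen in the Rockstor log lines, e.g.
# "[11/Jan/2016 09:10:40] ERROR [storageadmin.middleware:36] FATAL: ..."
LINE_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\] (?P<level>\w+) \[(?P<src>[^\]]+)\] (?P<msg>.*)$"
)


def too_many_clients_times(lines: Iterable[str]) -> List[datetime]:
    """Return the timestamp of every 'too many clients' error, in file order."""
    hits = []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m and "too many clients" in m.group("msg"):
            hits.append(datetime.strptime(m.group("ts"), "%d/%b/%Y %H:%M:%S"))
    return hits


if __name__ == "__main__":
    sample = [
        "[11/Jan/2016 09:10:40] ERROR [storageadmin.middleware:35] "
        "Exception occured while processing a request. Path: / method: GET",
        "[11/Jan/2016 09:10:40] ERROR [storageadmin.middleware:36] "
        "FATAL: sorry, too many clients already",
    ]
    times = too_many_clients_times(sample)
    print(len(times), times[0])  # 1 2016-01-11 09:10:40
```

Run against the real log (e.g. `too_many_clients_times(open(path))`), the first timestamp shows roughly how long the VM had been up, and how many UI visits I'd made, before the limit was hit.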

and snapshots seem all ok)
