3.9.2-18 is now available. This stable release enhancement adds the much-anticipated “Disable/Enable Quotas” capability. Thanks to @maxhq and @Dragon2611 for helping to inform this feature, and apologies to all who have been waiting patiently. Quotas Enabled is still the default and recommended setting, but all functionality except share usage reporting (which shows 0 bytes) is expected to work with Quotas Disabled.

https://github.com/rockstor/rockstor-core/issues/1592

Please note that, for the time being, disabled quotas are still reported as an Error state in our logs, but log spam regarding “quotas not enabled” should only be expected during Web-UI activity. We can revisit these behaviours going forward.

A quota rescan is automatically initiated for a given pool whenever its quotas are re-enabled. Please allow around 1 minute per TB of data for the share usage figures to return to normal. As always, there are improvements to be had, so feel free to start a new forum thread with the details of any issue you experience.
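To put the rough "1 minute per TB" figure into numbers, here is a minimal Python sketch that scales it by the amount of data in a pool. The function and constant names are hypothetical (not part of Rockstor), and the rate is only the rule of thumb quoted above; actual rescan times will vary with hardware, pool layout and load.

```python
# Hypothetical helper: rule-of-thumb estimate of btrfs quota rescan time.
# Assumes the ~1 minute per TB figure from this release note; real times vary.
MINUTES_PER_TB = 1.0


def estimate_rescan_minutes(data_tb: float, minutes_per_tb: float = MINUTES_PER_TB) -> float:
    """Return an approximate quota rescan duration, in minutes, for data_tb terabytes."""
    if data_tb < 0:
        raise ValueError("data_tb must be non-negative")
    return data_tb * minutes_per_tb


if __name__ == "__main__":
    # Example: a pool holding 4.5 TB of data.
    print(f"~{estimate_rescan_minutes(4.5):.1f} minutes")  # prints "~4.5 minutes"
```

Treat the result as a ballpark for when share usage figures should settle, not as a guarantee.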

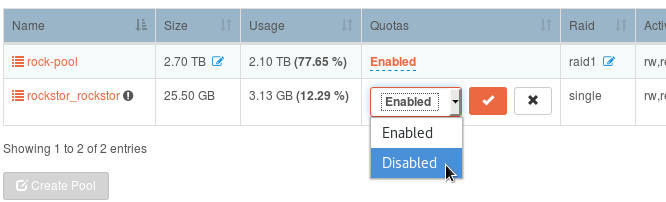

Quick how-to, pending a docs update:

Click the current setting, select the new value from the dropdown, then tick to confirm.

Refresh the page to see the current setting. See the mouse-over tooltip for usage context. The setting persists across reboots and power cycles.

This inline edit widget is available both in the Pool overview table (as indicated) and on each Pool’s details page.