@freaktechnik Re:

A backup is, as always, advised; RAID is not a backup. OK, with that out of the way: I’m not entirely sure of your situation, but I can address what a pre-3.9.2-58 system share looks like after updating to 3.9.2-58, and take it from there.

In the following example, sys-share and another-sys-share were created on the ROOT pool under our prior ‘wrong’ treatment of the system pool, on a ‘Built on openSUSE’ boot-to-snap config (the default when the system drive is >17.5 GB, or when installing with the yet-to-be-publicly-released new Rockstor installer).

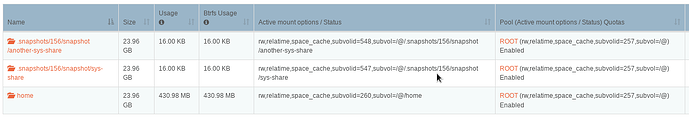

As the Web-UI indicates, these were created within the snapshot that is the current boot-to-snap target (it starts out as 1, but this system had been rolled back prior to making these shares):

```
tumbleweed:~ # cat /proc/mounts | grep " / "
/dev/vda3 / btrfs rw,relatime,space_cache,subvolid=440,subvol=/@/.snapshots/156/snapshot 0 0
```
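To check which snapshot a system is currently booted into, the subvol= option of the "/" mount can be pulled apart. The following is a minimal sketch, assuming the snapper-style layout shown above (subvol=/@/.snapshots/<N>/snapshot); the function name boot_snapshot_number is hypothetical and not part of Rockstor.

```shell
# Hypothetical helper, not part of Rockstor: print the snapshot number that
# "/" is booted from, assuming the layout subvol=/@/.snapshots/<N>/snapshot.
# Reads /proc/mounts-format lines on stdin and prints nothing if "/" is not
# such a btrfs snapshot mount.
boot_snapshot_number() {
  awk '$2 == "/" && $3 == "btrfs" {
    n = split($4, opts, ",")
    for (i = 1; i <= n; i++) {
      # Only the subvol= option matches here; subvolid= does not.
      if (opts[i] ~ /^subvol=\/@\/\.snapshots\/[0-9]+\/snapshot$/) {
        # "subvol=/@/.snapshots/156/snapshot" splits on "/" into
        # "subvol=", "@", ".snapshots", "156", "snapshot".
        split(opts[i], parts, "/")
        print parts[4]
      }
    }
  }'
}
```

On the system above, `boot_snapshot_number < /proc/mounts` would be expected to print 156.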

And the new (3.9.2-58+) Rockstor system pool (ROOT) mount now specifies, in a boot-to-snap config, a mount one level up from this, at “subvol=/@”.

This was important because without it we can’t do a share rollback. It was an oversight on my part, closely linked to our having, for the last 2 years now, to work with both CentOS’s and openSUSE’s default system pools, which are as different as can be. The main casualty was our inability to maintain a system pool share within the Web-UI: once created on the system pool, a share would disappear upon a page refresh (which is also why I’m confused as to how you were using these shares, but I’ll try not to digress). We had a GitHub issue open for this lack of feature parity with our CentOS offering.

Anyway, the pull request linked in that issue formed part of 3.9.2-58. While experimenting with our options for system pool shares in a boot-to-snap config, it became apparent that the best user experience, and the only way I could get all our usual features to work, was to create system pool shares at the same level as the default home subvol. Otherwise, when folks did a boot-to-snap rollback, their shares would again be lost to the Rockstor Web-UI with no easy way to regain them. That was obviously a bad user experience, so creating them outside (read: above) all of that snapshot business ended up being the way to go.

So back to my example:

We have transient (to the Web-UI) Rockstor shares, created on a 3.9.2-57 or earlier ‘Built on openSUSE’ testing channel install, now showing as ‘all wrong’ after our update and reboot, with its consequent ‘more correct’ system pool mount:

```
cat /proc/mounts | grep "sys-share"
/dev/vda3 /mnt2/.snapshots/156/snapshot/another-sys-share btrfs rw,relatime,space_cache,subvolid=548,subvol=/@/.snapshots/156/snapshot/another-sys-share 0 0
/dev/vda3 /mnt2/.snapshots/156/snapshot/sys-share btrfs rw,relatime,space_cache,subvolid=547,subvol=/@/.snapshots/156/snapshot/sys-share 0 0
```

But we now also have a higher-level system pool mount at /mnt2/ROOT:

```
tumbleweed:~ # cat /proc/mounts | grep "ROOT"
/dev/vda3 /mnt2/ROOT btrfs rw,relatime,space_cache,subvolid=257,subvol=/@ 0 0
```
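Spotting which shares are in this transient state amounts to finding btrfs mounts whose subvol sits inside a boot-to-snap snapshot. A minimal sketch, assuming the same /@/.snapshots/<N>/snapshot/<share> layout as above; list_transient_shares is a hypothetical name, not a Rockstor command.

```shell
# Hypothetical helper, not part of Rockstor: list btrfs mounts whose subvol
# lives inside a boot-to-snap snapshot, i.e.
# subvol=/@/.snapshots/<N>/snapshot/<share>. Reads /proc/mounts-format lines
# on stdin and prints "<mount point> <subvol path>" for each match.
list_transient_shares() {
  awk '$3 == "btrfs" {
    n = split($4, opts, ",")
    for (i = 1; i <= n; i++) {
      # Require something after ".../snapshot/" so the booted "/" is skipped.
      if (opts[i] ~ /^subvol=\/@\/\.snapshots\/[0-9]+\/snapshot\/./) {
        # substr from position 8 drops the 7-character "subvol=" prefix.
        print $2, substr(opts[i], 8)
      }
    }
  }'
}
```

Run as `list_transient_shares < /proc/mounts`, on the example system this would be expected to list both sys-share and another-sys-share, while skipping "/" and /mnt2/ROOT.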

We can hopefully move these prior transient subvols (shares), within the same pool, to where they would be had Rockstor created them afresh, as with this share created post-3.9.2-58:

```
tumbleweed:~ # cat /proc/mounts | grep "a-fresh"
/dev/vda3 /mnt2/a-fresh-share-post-3.9.2-58 btrfs rw,relatime,space_cache,subvolid=549,subvol=/@/a-fresh-share-post-3.9.2-58 0 0
```

And given that our /mnt2/ROOT is mounted at subvol=“/@”, we can first stop the Rockstor services, so that they don’t concurrently meddle (i.e. re-mount these shares) while we meddle:

```
systemctl stop rockstor rockstor-pre rockstor-bootstrap
```

and then unmount and move the shares (or rather their associated subvols):

```
umount /mnt2/.snapshots/156/snapshot/sys-share
mv /mnt2/ROOT/.snapshots/156/snapshot/sys-share /mnt2/ROOT/
```

and then restart all Rockstor services:

```
systemctl start rockstor
```

(the rockstor service pulls in the other two as prerequisite services before starting itself)
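The stop / unmount / move / restart sequence above can be wrapped in a small dry-run sketch, so the exact commands can be reviewed before anything is touched. This assumes ROOT is mounted at /mnt2/ROOT with subvol=/@ (as on 3.9.2-58+); the run and move_transient_share functions and the DRY_RUN variable are hypothetical, not part of Rockstor.

```shell
# Hypothetical helpers, not part of Rockstor. Commands are only printed
# unless DRY_RUN=0 is set in the environment.
run() {
  if [ "${DRY_RUN:-1}" = 0 ]; then
    "$@"
  else
    echo "$*"
  fi
}

# $1: the share's current mount point, e.g. /mnt2/.snapshots/156/snapshot/sys-share
# $2: its subvol= path, e.g. /@/.snapshots/156/snapshot/sys-share
# Assumes the ROOT pool is mounted at /mnt2/ROOT with subvol=/@.
move_transient_share() {
  local mnt="$1" subvol="$2" root_mnt=/mnt2/ROOT
  case "$subvol" in
    /@/*) ;;
    *) echo "refusing: $subvol is not below /@" >&2; return 1 ;;
  esac
  # The same subvol as seen through the /mnt2/ROOT (subvol=/@) mount.
  local src="$root_mnt${subvol#/@}"
  # Stop Rockstor so it does not re-mount the share while we work.
  run systemctl stop rockstor rockstor-pre rockstor-bootstrap || return 1
  run umount "$mnt" || return 1
  # Per the procedure above: mv within the same pool re-positions the subvol.
  run mv "$src" "$root_mnt/" || return 1
  # rockstor brings up rockstor-pre and rockstor-bootstrap first.
  run systemctl start rockstor
}
```

For example, `move_transient_share /mnt2/.snapshots/156/snapshot/sys-share /@/.snapshots/156/snapshot/sys-share` should print the same four commands used in this post. Note that if a step fails part-way, the Rockstor services are left stopped and need restarting by hand.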

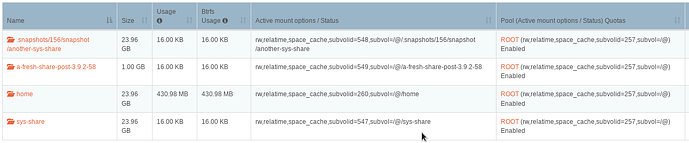

And a browser page refresh or two later and we have:

As can be seen, our moved btrfs subvol “sys-share” (a ‘share’ in Rockstor parlance), or more exactly “subvolid=547”, now sits, pool-hierarchy-wise, alongside ‘home’ (fstab mounted) and our “a-fresh-share-post-3.9.2-58”.

And doing the same for our other transient, pre-3.9.2-58-created share:

```
systemctl stop rockstor rockstor-pre rockstor-bootstrap
umount /mnt2/.snapshots/156/snapshot/another-sys-share
mv /mnt2/ROOT/.snapshots/156/snapshot/another-sys-share /mnt2/ROOT/
```

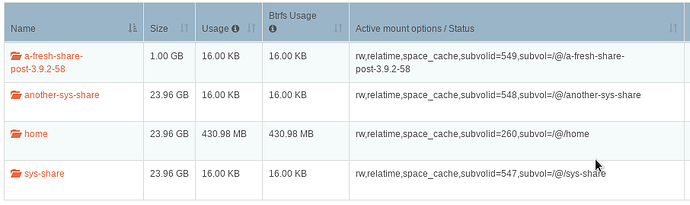

we have:

Now, in the case of a Rock-ons root share (http://rockstor.com/docs/docker-based-rock-ons/overview.html#the-rock-ons-root), you would first have to disable the docker service within Rockstor’s Web-UI and then, post-move, re-configure that service to use the share at its new location before re-enabling it. That said, given all of the above, I still have no idea how you were ‘using’ shares within Rockstor’s Web-UI that only appear to (are known to) the Web-UI for a few seconds before the next pool/share/snapshot refresh. But I still thought it might be useful to point out a method for moving a prior share, perhaps created by Rockstor on the ROOT pool and then configured manually outside of Rockstor, to the new ‘proper’ location, so that its use can continue, but now from within the Web-UI as well.

So, in short, one can move a subvol (within its parent pool only, and given an adequate mount of that pool) using ‘mv’, and thus re-position it to Rockstor’s new expected ROOT share location, alongside ‘home’. This way shares survive boot-to-snap rollback events, as folks might reasonably expect (at least that was my guess).

Hope that helps. And do keep in mind the current “(early-adopters/developers only)” caveat for the ‘Built on openSUSE’ testing channel. During alpha/beta trials, and really for anything that appears in the testing channel, expect that anything up to and including a re-install may be required from time to time.

I’ll address your 3.9.2-58 testing contribution in its relevant thread to try and keep this one on topic.

Cheers.

OK, it looks like I took too long working through your questions, as we have cross-posted. Sorry to have taken so long here.