Hello,

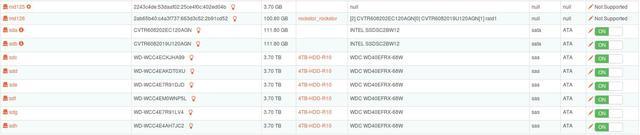

My root drive has an additional btrfs partition alongside the root partition:

sdb 8:16 0 119.2G 0 disk

├─sdb1 8:17 0 500M 0 part /boot

├─sdb2 8:18 0 7.3G 0 part /mnt2/rockstor_rockstor

├─sdb3 8:19 0 500M 0 part [SWAP]

└─sdb4 8:20 0 111G 0 part /mnt2/Rockstor_Data

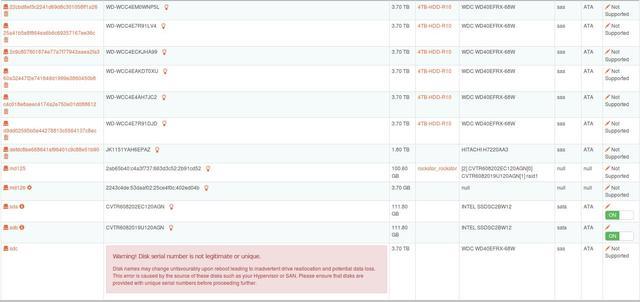

When scanning the disks, I get this error message:

Warning! Disk serial number is not legitimate or unique.

I am aware that Rockstor does not support partitions, but is there any workaround I could use?

Below is the log.

Regards,

Hendrik

[16/Feb/2016 22:10:00] INFO [storageadmin.views.disk:81] Deleting duplicate or fake (by serial) Disk db entry. Serial = fake-serial-bc2571c9-cbe2-4b99-a043-48796299d224

[16/Feb/2016 22:10:00] ERROR [storageadmin.views.command:75] Exception while refreshing state for Pool(rockstor_rockstor). Moving on: Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/f18dd2210a024a90808a8955cb10f9d2']. rc = 1. stdout = ['']. stderr = ['']

[16/Feb/2016 22:10:00] ERROR [storageadmin.views.command:76] Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/f18dd2210a024a90808a8955cb10f9d2']. rc = 1. stdout = ['']. stderr = ['']

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/command.py", line 67, in _refresh_pool_state

pool_info = get_pool_info(fd.name)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 71, in get_pool_info

o, e, rc = run_command(cmd)

File "/opt/rockstor/src/rockstor/system/osi.py", line 89, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/f18dd2210a024a90808a8955cb10f9d2']. rc = 1. stdout = ['']. stderr = ['']

[16/Feb/2016 22:11:02] INFO [storageadmin.views.disk:81] Deleting duplicate or fake (by serial) Disk db entry. Serial = fake-serial-27adeb8f-71c1-47d7-a0bc-936acb148570

[16/Feb/2016 22:11:03] ERROR [storageadmin.views.command:75] Exception while refreshing state for Pool(rockstor_rockstor). Moving on: Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/036535da54734e58b3db38bc9c68c604']. rc = 1. stdout = ['']. stderr = ['']

[16/Feb/2016 22:11:03] ERROR [storageadmin.views.command:76] Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/036535da54734e58b3db38bc9c68c604']. rc = 1. stdout = ['']. stderr = ['']

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/command.py", line 67, in _refresh_pool_state

pool_info = get_pool_info(fd.name)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 71, in get_pool_info

o, e, rc = run_command(cmd)

File "/opt/rockstor/src/rockstor/system/osi.py", line 89, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = ['/sbin/btrfs', 'fi', 'show', u'/dev/036535da54734e58b3db38bc9c68c604']. rc = 1. stdout = ['']. stderr = ['']

[16/Feb/2016 22:11:27] INFO [storageadmin.views.disk:81] Deleting duplicate or fake (by serial) Disk db entry. Serial = fake-serial-1395f016-c33d-4a83-a030-69f32a8404dd

[16/Feb/2016 22:11:48] ERROR [storageadmin.util:46] request path: /api/disks/sdd/btrfs-disk-import method: POST data: <QueryDict: {}>

[16/Feb/2016 22:11:48] ERROR [storageadmin.util:47] exception: Failed to import any pool on this device(sdd). Error: 'unicode' object has no attribute 'disk_set'

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/disk.py", line 280, in _btrfs_disk_import

import_shares(po, request)

File "/opt/rockstor/src/rockstor/storageadmin/views/share_helpers.py", line 106, in import_shares

cshare.pool.name))

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 261, in shares_info

mnt_pt = mount_root(pool)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 125, in mount_root

device = pool.disk_set.first().name

AttributeError: 'unicode' object has no attribute 'disk_set'

[16/Feb/2016 22:11:48] DEBUG [storageadmin.util:48] Current Rockstor version: 3.8-11.10
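
As far as I can tell from the last traceback, `mount_root` received the pool's name (a plain string) where it expected a pool object, which would explain why `.disk_set` is missing. Below is a minimal sketch that reproduces that kind of error. It is not Rockstor's code: `FakeDisk`, `FakeDiskSet`, `FakePool`, `first_device_name`, `describe`, and the pool name `example_pool` are all hypothetical stand-ins, and only the attribute chain `pool.disk_set.first().name` is taken from the traceback.

```python
# Hypothetical stand-ins for illustration only; these are NOT Rockstor's models.
class FakeDisk:
    def __init__(self, name):
        self.name = name


class FakeDiskSet:
    """Mimics just the .first() call seen in the traceback."""

    def __init__(self, disks):
        self._disks = list(disks)

    def first(self):
        return self._disks[0] if self._disks else None


class FakePool:
    def __init__(self, name, disks):
        self.name = name
        self.disk_set = FakeDiskSet(disks)


def first_device_name(pool):
    # Same attribute chain as the failing line in the traceback.
    return pool.disk_set.first().name


def describe(pool):
    """Return the first device name, or the error text if the lookup fails."""
    try:
        return first_device_name(pool)
    except AttributeError as exc:
        return "error: %s" % exc


pool = FakePool("example_pool", [FakeDisk("sdd")])
print(describe(pool))       # called with a pool object
print(describe(pool.name))  # called with only the pool's name (a string)
```

The first call works because it gets an object carrying `disk_set`; the second fails with an `AttributeError` because a string has no such attribute. On the Python 2 that Rockstor 3.8 runs, the type in the message is `unicode` rather than `str`.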