@vesper1978 Hello again and thanks for asking.

Ah yes. We had a little bit of a misfire on the build / release of the 3.9.2-35 rpm: it was actually identical, code-wise, to 3.9.2-34. And as some machines had already installed it, the simplest solution was to build again with a newer rpm number, hence the 3.9.2-36 rpm release.

In summary:

3.9.2-35 rpm = 3.9.2-34 rpm and code tag re-badged (Oops!!)

3.9.2-36 rpm = the real 3.9.2-35 code tag, this time actually including the change indicated in:

https://github.com/rockstor/rockstor-core/issues/1532

Sorry, folks. I really need to start a separate release-notes thread where we can state / clarify such hiccups.

And in the spirit of our prior release thread posts, I’ll include a few before and after screen grabs of some of the user-visible aspects of the ‘real’ 3.9.2-35 code tag (actually the 3.9.2-36 rpm):

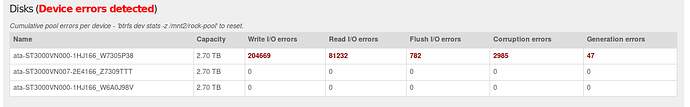

Pool Details page “Disks” subsection

Before code change:

After (real-life example on a test machine of mine):

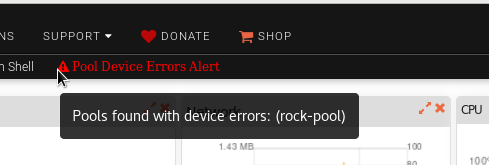

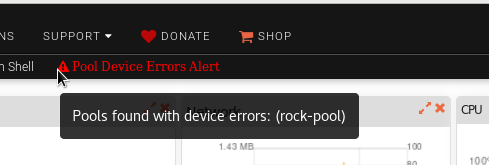

And we also now show a real-time (no refresh required) Web-UI header alert indicating the pools that have known cumulative errors:

A complete description of all changes, with images, is given in the linked issue:

https://github.com/rockstor/rockstor-core/issues/1532

and its associated PR:

https://github.com/rockstor/rockstor-core/pull/1958

Incidentally, the errors in the 3-disk ‘rock-pool’ example above seem to have resulted from what looked like a disk controller driver hanging one evening! Each disk was connected to a different controller, and there were only 2 disks at the time. However, no service interruption was experienced. The next day a fresh boot returned the driver and its attached drive to normal service, and one scrub later:

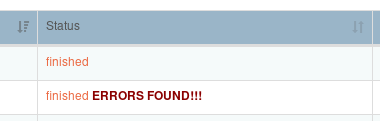

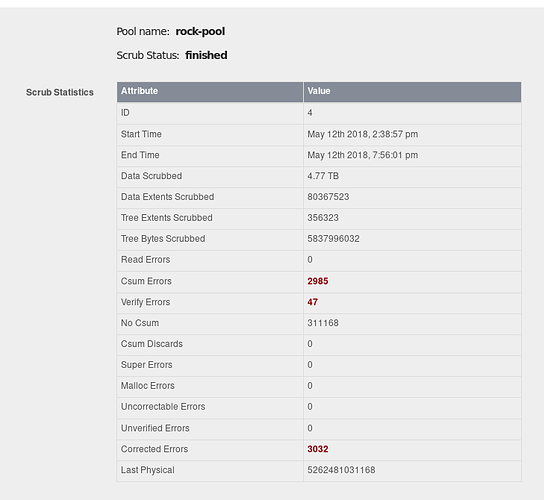

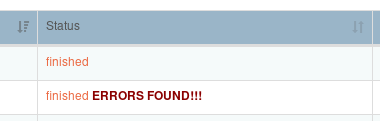

with the following details for the scrub with errors:

and all was repaired. A subsequent scrub (indicated) came up all rosy, and that pool has been fine since, with no additional errors.

Hope that answers your question, if not concisely; I thought some might enjoy the included ‘story’. And, as indicated, that pool has since had an additional drive added, as it was getting a little full.