Hi @phillxnet!

That’s how I understood it as well, but since the Radarr and Sonarr Rock-ons didn’t allow me to specify a config share, I assumed (which you never should do) that these were different.

Hi @Flox!

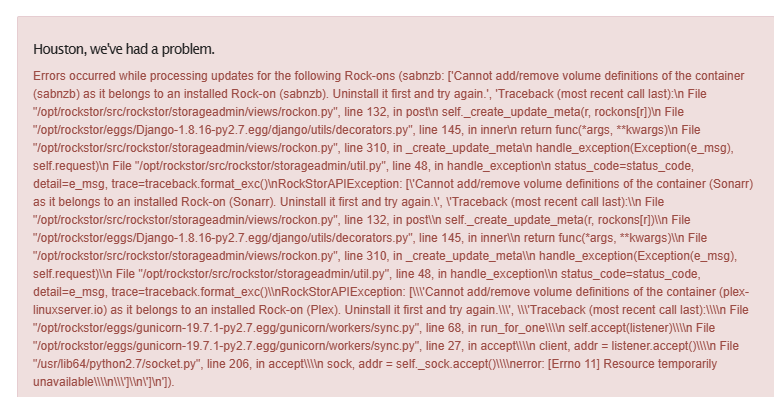

Nope, that’s all there is. There’s a bigger issue, though. This is the result I got when I updated the Rock-ons to make sure I had the latest versions:

Traceback:

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 132, in post

self._create_update_meta(r, rockons[r])

File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner

return func(*args, **kwargs)

File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta

handle_exception(Exception(e_msg), self.request)

File "/opt/rockstor/src/rockstor/storageadmin/util.py", line 48, in handle_exception

status_code=status_code, detail=e_msg, trace=traceback.format_exc()

RockStorAPIException: ['Cannot add/remove volume definitions of the container (sabnzb) as it belongs to an installed Rock-on (sabnzb). Uninstall it first and try again.', 'Traceback (most recent call last):\n File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 132, in post\n self._create_update_meta(r, rockons[r])\n File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner\n return func(*args, **kwargs)\n File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta\n handle_exception(Exception(e_msg), self.request)\n File "/opt/rockstor/src/rockstor/storageadmin/util.py", line 48, in handle_exception\n status_code=status_code, detail=e_msg, trace=traceback.format_exc()\nRockStorAPIException: ['Cannot add/remove volume definitions of the container (Sonarr) as it belongs to an installed Rock-on (Sonarr). Uninstall it first and try again.', 'Traceback (most recent call last):\n File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 132, in post\n self._create_update_meta(r, rockons[r])\n File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner\n return func(*args, **kwargs)\n File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta\n handle_exception(Exception(e_msg), self.request)\n File "/opt/rockstor/src/rockstor/storageadmin/util.py", line 48, in handle_exception\n status_code=status_code, detail=e_msg, trace=traceback.format_exc()\nRockStorAPIException: [\'Cannot add/remove volume definitions of the container (plex-linuxserver.io) as it belongs to an installed Rock-on (Plex). Uninstall it first and try again.\', \'Traceback (most recent call last):\\n File "/opt/rockstor/eggs/gunicorn-19.7.1-py2.7.egg/gunicorn/workers/sync.py", line 68, in run_for_one\\n self.accept(listener)\\n File "/opt/rockstor/eggs/gunicorn-19.7.1-py2.7.egg/gunicorn/workers/sync.py", line 27, in accept\\n client, addr = listener.accept()\\n File "/usr/lib64/python2.7/socket.py", line 206, in accept\\n sock, addr = self._sock.accept()\\nerror: [Errno 11] Resource temporarily unavailable\\n\']\n']\n']
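The RockStorAPIException above spells out the rule that blocked the update: a Rock-on's volume definitions can't gain or lose entries while that Rock-on is installed, and it fired for sabnzb, Sonarr and Plex in turn. The Python below is a minimal, hypothetical sketch of that guard, not Rockstor's actual `_create_update_meta` code. The `RockOn` class, `update_meta()` function, `VolumeChangeError` exception and the volume paths are all made up for illustration, and the sketch assumes volumes are compared by their in-container destination paths.

```python
from dataclasses import dataclass, field


@dataclass
class RockOn:
    """Hypothetical stand-in for a Rock-on record (not Rockstor's model)."""
    name: str
    installed: bool
    # container name -> set of volume destination paths inside that container
    volumes: dict = field(default_factory=dict)


class VolumeChangeError(Exception):
    """Hypothetical error mirroring the message in the traceback."""


def update_meta(rockon: RockOn, new_volumes: dict) -> None:
    """Apply new volume definitions, refusing changes to installed Rock-ons."""
    for container, new_dests in new_volumes.items():
        old_dests = rockon.volumes.get(container, set())
        # Any added or removed volume counts as a change in this sketch.
        if rockon.installed and set(new_dests) != set(old_dests):
            raise VolumeChangeError(
                f"Cannot add/remove volume definitions of the container ({container}) "
                f"as it belongs to an installed Rock-on ({rockon.name}). "
                "Uninstall it first and try again."
            )
    # Only reached when nothing changed or the Rock-on is not installed.
    rockon.volumes = {c: set(d) for c, d in new_volumes.items()}


# Example: an updated definition that adds a (hypothetical) /config volume.
sonarr = RockOn("Sonarr", installed=True, volumes={"Sonarr": {"/tv", "/downloads"}})
try:
    update_meta(sonarr, {"Sonarr": {"/tv", "/downloads", "/config"}})
except VolumeChangeError as err:
    print(err)
```

If the real check behaves roughly like this, an updated definition that adds a config volume would be rejected for an installed Rock-on but accepted once it is uninstalled, which would fit a re-install being the point where the config folder can be chosen.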

I’ll run the “Clean database of broken Rock-ons” script once more; I probably missed something when cleaning up after my broken filesystem.

Thanks for all the help so far. I’ll get back to you once I’ve cleaned up the database.

[Edit]

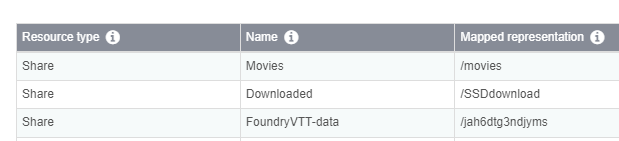

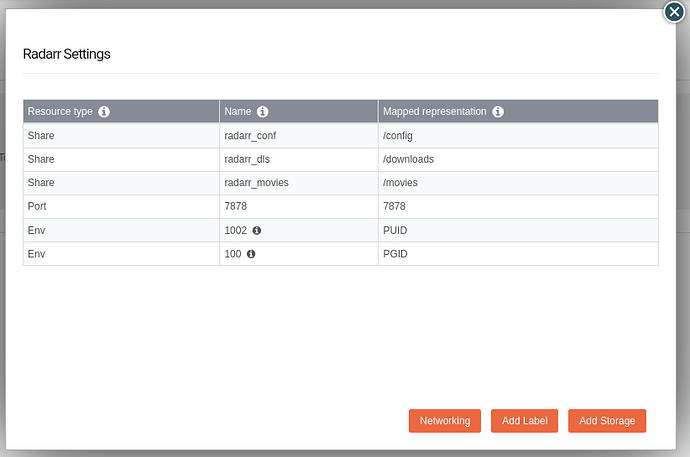

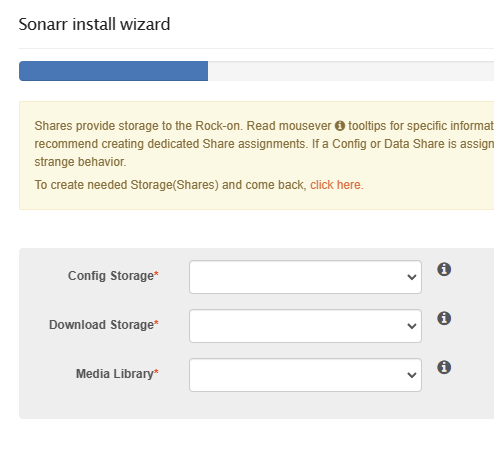

The “Rock-ons database” was already clean, so running the script didn’t do anything. Something did change, though: when I reinstalled Radarr, I did get the chance to specify the correct folders, and this is how the mapping looks now:

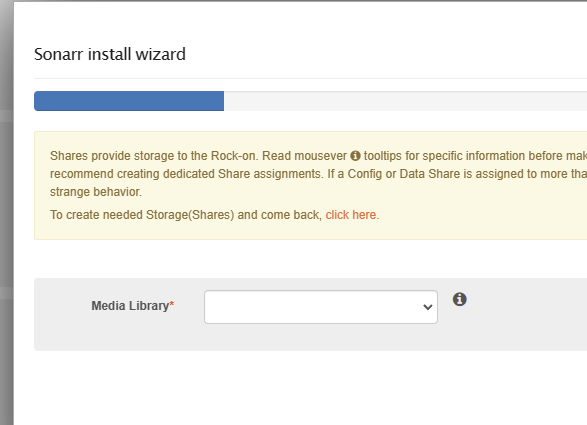

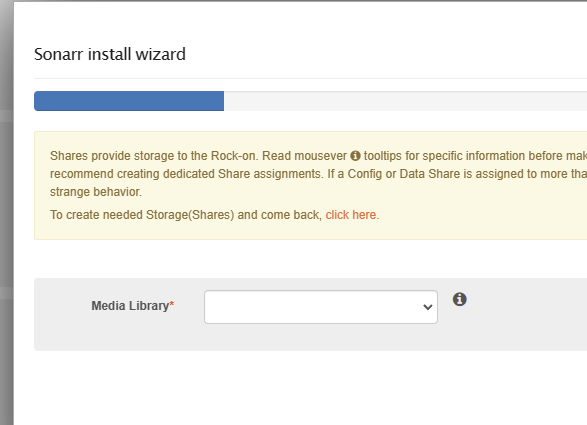

Installing Sonarr is still broken, unfortunately:

I’ll configure Radarr and see if it behaves as expected, but since Sonarr can’t even be installed properly, I’ll leave it alone until we figure out why it’s acting up.