I am trying to build a 4.1.0-0 installer following the GitHub instructions, on a brand new Leap 15.3 virtual machine (basic development tools installed via YaST) with the kernel backport repos uncommented. The ISO is created successfully, with no obvious errors reported during the build.

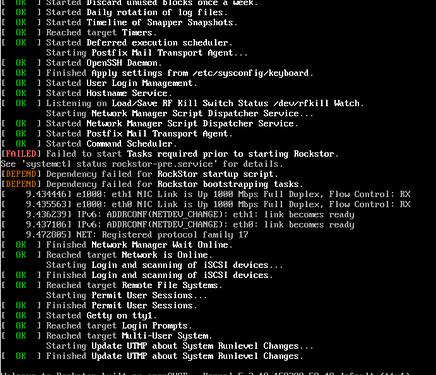

However, when I do a test install, Rockstor fails to start, even though the initial configuration completes successfully and I can log in.

Looking at the messages, the trouble starts with the avahi service and goes downhill from there. Some of the error messages indicate that no user was found for a given service (e.g. avahi). When I then checked my existing 4.1 install, it has many more users set up than I can see on the test installations (e.g. avahi, postfix, postgres).
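To make that user comparison concrete, a small script can list the accounts present on the working install but missing from the test install. This is only a sketch: it assumes you have copied `/etc/passwd` from each machine into local files (the script and file names are hypothetical), and it compares usernames only, not UIDs or groups.

```python
"""Compare user accounts between two systems using copies of their /etc/passwd.

Hypothetical helper: the script name and input file names are assumptions.
Written to run on older Python 3 releases too (no built-in generic annotations).
"""
import sys
from typing import List, Set


def usernames(passwd_text: str) -> Set[str]:
    """Return the set of usernames found in /etc/passwd-formatted text."""
    names = set()
    for line in passwd_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments; the username is the first colon-separated field.
        if not line or line.startswith("#"):
            continue
        names.add(line.split(":", 1)[0])
    return names


def missing_users(reference_text: str, test_text: str) -> List[str]:
    """Usernames present on the reference system but absent on the test system, sorted."""
    return sorted(usernames(reference_text) - usernames(test_text))


if __name__ == "__main__":
    # Usage: python3 compare_users.py passwd.reference passwd.test
    if len(sys.argv) == 3:
        with open(sys.argv[1], encoding="utf-8") as ref, open(sys.argv[2], encoding="utf-8") as test:
            for name in missing_users(ref.read(), test.read()):
                print(name)
    else:
        print("usage: compare_users.py REFERENCE_PASSWD TEST_PASSWD")
```

The printed list shows which service accounts (avahi, postfix, postgres and so on) never got created on the test install, which may help narrow down which package or setup step did not run during the image build.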

To check whether my kiwi file edits caused any issues, I re-pulled the installer repo and only updated the version to 4.1.0-0 where indicated. Again, the build went through and the ISO was created, but the install showed the same symptoms as above.

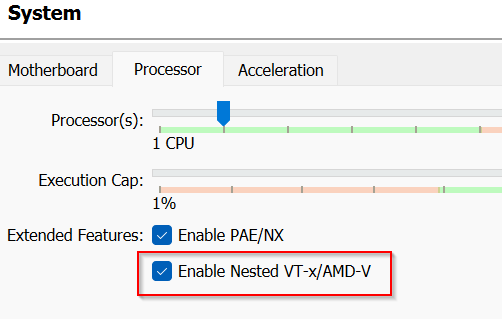

Finally, though it shouldn't matter, I made another installation attempt using the EFI option in VirtualBox, with the same result.

I am wondering where else I can look to see what might have gone wrong during the ISO build. The kiwi result files don't show me an obvious culprit.
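When reading long build logs by eye, it can help to filter them for lines that mention likely trouble. The sketch below is a generic keyword filter, not a tool that understands kiwi's log format; the keyword list and the log file names passed on the command line are assumptions to adjust to whatever your build actually produced.

```python
"""Print lines from one or more log files that mention likely trouble.

A minimal sketch: the keywords are hypothetical choices based on the symptoms
(missing service users), not a known kiwi log format.
"""
import re
import sys
from typing import Iterable, List, Tuple

# Hypothetical keyword list; extend it with whatever the failing services report.
PATTERN = re.compile(
    r"error|warning|fail|useradd|groupadd|avahi|postgres|postfix",
    re.IGNORECASE,
)


def suspicious_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (1-based line number, text without trailing newline) for each matching line."""
    return [
        (n, line.rstrip("\n"))
        for n, line in enumerate(lines, start=1)
        if PATTERN.search(line)
    ]


if __name__ == "__main__":
    # Usage: python3 scan_log.py build.log [more.log ...]  (file names are placeholders)
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for n, text in suspicious_lines(fh):
                print(f"{path}:{n}: {text}")
```

Matching on package and service names as well as generic words like "error" is a deliberate trade-off: it produces more noise, but it may surface the point where the avahi, postfix or postgres packages (and the users they create) were handled during the build.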