Failing, so does manual start. Everything is now mounting correctly.

Aug 17 22:18:49 rockstor docker-wrapper: Traceback (most recent call last):

phillxnet

August 17, 2018, 7:55pm

22

@Jorma_Tuomainen Hello again, as per:

I opened the following issue:

opened 06:22PM - 10 Aug 18 UTC

closed 12:26PM - 16 Aug 18 UTC

Thanks to forum members Jorma_Tuomainen and Flox in the following thread for highlighting this regression. As from 3.9.2-31 (pr #1946), nvme system disks were no longer identified as attached. As a result, post update, the system disk showed as detached and consequently its default surfaced share 'home', and any shares created post install, failed to be available.

Associated log entry:

```
ERROR [storageadmin.views.command:70] Skipping Pool (rockstor_rockstor00) mount as there are no attached devices. Moving on.
```

Where the Pool name may vary from install to install.

Non system pools / shares were not affected.

Please update the following forum thread with this issue's resolution:

https://forum.rockstor.com/t/cant-start-rock-ons/5065

and addressed it via the following pull request:

rockstor:master ← phillxnet:1951_regression_-_nvme_system_disk_not_identified

opened 07:11PM - 14 Aug 18 UTC

Address nvme system disk regression, not recognised as attached, by improving the partition regex mechanism initially enhanced in commit acbd6a5 (pr #1946) which created the indicated regression.

Summary:

- Unit test added to instantiate observed nvme system disk regression and expected behaviour.

- Simplify regex match conditional, for root partition identification, so that it again incorporates nvme device names: with root in partition.

- Establish conditional partition regex prior to above match.

- Enhance existing btrfs-in-partition unit test to catch a regression, observed prior to establishing the above conditional regex, that affected the 42nd scsi type drive 'sdap' if that drive had a btrfs partition concurrent with an 'sda' root drive name (and the prior regexes were 'or' combined).

- Add debug logging of sys partition info inheritance from base device.

- Add debug logging of btrfs in partition device skip: we only surface the base device.

- Minor cosmetic bracket changes and comment updates.

- Added TODO: on future option re base device identification from partition device name.
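To illustrate the 'sdap' case in the summary above, here is a rough sketch of why a single catch-all partition pattern can misread a device name, and why choosing the pattern by device type first avoids that. The patterns and the names `base_device` and `naive_base_device` are assumptions made up for this example; they are not taken from the Rockstor source.

```python
import re

# Hypothetical patterns for illustration only; not copied from Rockstor.
NVME_PART = re.compile(r"^(nvme\d+n\d+)p\d+$")  # e.g. nvme0n1p3
SCSI_PART = re.compile(r"^([sv]d[a-z]+)\d+$")   # e.g. sda3, sdap1


def base_device(part_name):
    """Pick the pattern by device type first, then strip the partition suffix."""
    pattern = NVME_PART if part_name.startswith("nvme") else SCSI_PART
    match = pattern.match(part_name)
    return match.group(1) if match else None


def naive_base_device(part_name):
    """One catch-all pattern with an optional 'p' separator for every name."""
    match = re.match(r"^(.+?)p?\d+$", part_name)
    return match.group(1) if match else None


for name in ("sda3", "sdap1", "nvme0n1p3"):
    print(name, base_device(name), naive_base_device(name))
```

With the catch-all pattern, the trailing 'p' of 'sdap1' is taken as an nvme style partition separator, so the partition is attributed to 'sda' rather than 'sdap'. The conditional version keeps the two naming schemes apart.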

Fixes #1951

Please see issue text for further context.

@schakrava Ready for review.

Testing:

All existing osi and btrfs tests return OK:

extended / modified tests:

test_scan_disks_btrfs_in_partition (system.tests.test_osi.OSITests) ... ok

new tests:

test_scan_disks_nvme_sys_disk (system.tests.test_osi.OSITests) ... ok

Caveats:

This pr and #1946 introduce a white list approach to dev name regex re system partition identification, but this was deemed necessary given the related issues observed. Ideally in time we can remove the 'special treatment' required for the system disk by moving its associated mechanisms over to using the redirect role, which is currently only associated with non system disk btrfs in partition disks. This move should help to simplify the code and normalise the partitions element of the Disk collection across all drives, i.e. move to surfacing the system disk as per non system disks: via the base device.

which in turn has now been reviewed, and released as of version 3.9.2-33:

release 4 hours ago.

Could you clarify that a little? I’m not quite sure what you mean. I’d also like to know if the fix for the nvme system disk showing as missing has worked for you, at your convenience of course. I.e. is your nvme sys disk now showing up ok and not as detached?

As per the fix, it may only take effect after a full reboot, and as I have no real nvme system here I unfortunately couldn’t fully replicate your setup. But I did add a unit test to help guard against this particular regression resurfacing and based it on the command output you provided (thanks for that).

Hope that helps, and let us know what your current system’s state is so we can pick it up from there.

Thanks again for reporting this and I hope that this newer version has at least moved us forward a tad. The system disk detection is a bit of a tricky area for us currently and is in need of some cleanup so we will just have to improve that area bit by bit. It still has some legacy mechanisms that need to be moved to a newer system we established for the non system drives.

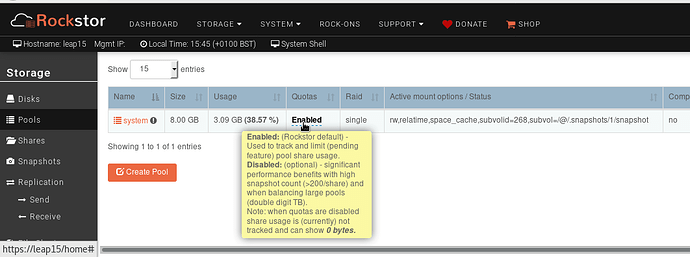

Yes, on reboot it mounted everything ok, I enabled quotas manually:

btrfs quota enable /mnt2/home00/

So those showed up ok too. Only docker now gives that error.

Also manually starting now gives an error:

Not docker, straced what it does and:

    # chmod 711 /mnt2/rockons
    chmod: changing permissions of ‘/mnt2/rockons/’: Operation not permitted

Fixed it, rogue immutable flag had appeared. So I just did:

chattr -i /mnt2/rockstor_rockstor00/rockons/

and reboot, and everything works.

phillxnet

August 18, 2018, 2:59pm

27

@Jorma_Tuomainen Well done on the sort:

Jorma_Tuomainen:

Fixed it, rogue immutable flag had appeared. So I just did:

chattr -i /mnt2/rockstor_rockstor00/rockons/

and reboot, and everything works.

Yes, I was going to suggest the immutable flag, as we still have a bug or two around its use. We use it to avoid writes when a mount fails ‘silently’, i.e. so that nothing is inadvertently written to the bare mount point instead of the usual share (when it’s not mounted).
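For anyone who hits the same thing, the flag shows up as an `i` in the attribute field printed by `lsattr -d` for the directory. Below is a minimal sketch of checking for it, assuming the usual `lsattr` line layout of an attribute field followed by the path. `has_immutable_flag` is a hypothetical helper written for this post, not part of Rockstor, and the sample line is illustrative rather than captured from a real system.

```python
def has_immutable_flag(lsattr_line):
    """Return True if the attribute field of one lsattr output line contains 'i'."""
    # The attribute field comes first, then whitespace, then the path.
    fields = lsattr_line.split(None, 1)
    return bool(fields) and "i" in fields[0]


# Illustrative line only, not captured from a real system.
sample = "----i---------e---- /mnt2/rockons"
print(has_immutable_flag(sample))
```

Only the first field is inspected, so an `i` in the path itself does not count as the flag.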

Anyway definitely a bug or two left but I did sort one like this recently:

opened 01:38PM - 22 Jan 18 UTC

closed 02:41AM - 28 Jan 18 UTC

Thanks to forum members Haioken and Rene_Castberg for highlighting this issue (and its current workaround). Under as yet undetermined circumstances a share (btrfs subvol) can have its immutable flag set. The consequence of this is a failure to be able to delete the affected share. Given that Rockstor currently has no immutable flag observance and that the cause of this flag having been set is unknown, it seems prudent for the time being to be robust in this scenario and simply remove the flag prior to a share's delete (which is itself accompanied by a GUI warning of relevant data loss).

This issue was also observed to randomly break replication whilst working on #1853, as the receiver periodically removes and supplants a share with a received snapshot. As and when the true cause of this flag's having been set is identified we can more correctly observe its use project wide (along with appropriate user messaging), but currently our base ability to manage shares and have more robust replication is judged as taking priority. This element is a key factor in breaking out this change from #1853, as it is important that it be revisited in due course in its own right.

Pull request of tested fix to follow shortly.

Please update the following forum thread with this issue's resolution:

https://forum.rockstor.com/t/solved-btrfs-subvolume-create-delete-fail/3943

I’ll try and do a code audit of our immutable flag use and get this in better shape, and if not at least create a technical wiki entry on our use of it to help clear things up going forward.

Thanks for your patience and perseverance; and I hope you enjoy your newly non-detached nvme system disk, complete with resident rockon share.

By the way, did you know you can enable / disable quotas from a Pool’s details page or the Pools page:

Was too busy on the command line to check that