I am trying to move a pair of disks out of a pool so I can use them in a new pool. I have 6 disks: a pair of 2 TB, a pair of 3 TB and a pair of 14 TB. They were all in one RAID 10 pool that was presented as 3 different shares on the network. The available size reported for the pool was much lower than I expected, so I decided to break up the pool into 3 RAID 1 pools instead. I removed the 2 TB disks from the pool with no problem, but when I try to remove the 3 TB disks I get the following error:

    Traceback (most recent call last):
      File "/opt/rockstor/.venv/lib/python2.7/site-packages/huey/api.py", line 360, in _execute
        task_value = task.execute()
      File "/opt/rockstor/.venv/lib/python2.7/site-packages/huey/api.py", line 724, in execute
        return func(*args, **kwargs)
      File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 2059, in start_resize_pool
        raise e
    CommandException: Error running a command. cmd = /usr/sbin/btrfs device delete /dev/disk/by-id/ata-ST33000651NS_Z2931VBG /dev/disk/by-id/ata-ST33000651NS_Z2936049 /mnt2/Share1. rc = 1. stdout = ['']. stderr = ["ERROR: error removing device '/dev/disk/by-id/ata-ST33000651NS_Z2931VBG': unable to go below four devices on raid10", "ERROR: error removing device '/dev/disk/by-id/ata-ST33000651NS_Z2936049': unable to go below four devices on raid10", '']

What stood out to me was "unable to go below four devices on raid10", so I changed the pool to RAID 1, but I got the same error. I then changed the pool's RAID level again, to "single" this time, and I still get the "unable to go below four devices on raid10" error when I try to remove a disk from the pool.

Is there a way to remove a disk from the pool while ignoring whatever label is stuck on RAID 10? Or is there a way to correct whatever still thinks the pool is RAID 10?

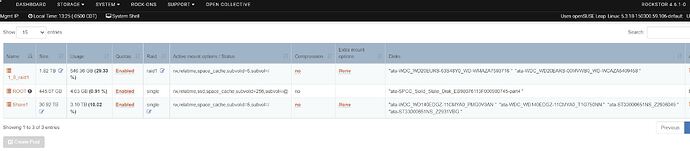

The UI and the available storage both indicate that the pool was converted successfully.
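In case it helps whoever answers: my unconfirmed guess is that some chunk types (for example metadata or system) might still be RAID10 even though the UI shows the new level. Below is a minimal Python sketch for checking that, assuming the usual `Type, PROFILE: total=..., used=...` line format printed by `btrfs filesystem df /mnt2/Share1`. The function name `types_with_profile` and the sample output are made up for illustration; they are not from my system or from Rockstor.

```python
from typing import List


def types_with_profile(df_output: str, profile: str = "RAID10") -> List[str]:
    """Return the chunk types (Data, System, Metadata, ...) that have at least one
    line in `btrfs filesystem df` output using the given profile.

    Assumes lines shaped like "Data, RAID1: total=2.00TiB, used=1.50TiB".
    A chunk type can appear on several lines mid-conversion, so every line is checked.
    """
    found = set()
    for line in df_output.splitlines():
        head, sep, _ = line.partition(":")
        # Skip lines without the "Type, PROFILE:" prefix (blank lines, warnings, etc.).
        if not sep or "," not in head:
            continue
        chunk_type, chunk_profile = (part.strip() for part in head.split(",", 1))
        if chunk_profile.upper() == profile.upper():
            found.add(chunk_type)
    return sorted(found)


# Hypothetical sample output, NOT taken from my pool.
sample = """Data, RAID1: total=3.00TiB, used=2.50TiB
System, RAID10: total=64.00MiB, used=400.00KiB
Metadata, RAID10: total=6.00GiB, used=4.20GiB
GlobalReserve, single: total=512.00MiB, used=0.00B"""

print(types_with_profile(sample))  # ['Metadata', 'System']
```

If something like this reported leftover RAID10 chunk types on the real pool, that would at least explain why `btrfs device delete` still refuses to go below four devices.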