@hscott Re:

This password is the one for the ‘root’ user and was set during a v4 install:

‘Rockstor’s “Built on openSUSE” installer’: https://rockstor.com/docs/installation/installer-howto.html

in the following install stages:

‘Enter Desired root User Password’: https://rockstor.com/docs/installation/installer-howto.html#enter-desired-root-user-password

‘Confirm root User Password’: https://rockstor.com/docs/installation/installer-howto.html#confirm-root-user-password

That may help to jog your memory, assuming this is a v4 install.

If you have a relatively simple setup and have no data on the system disk (the “ROOT” pool) then you could just re-install and then try importing the pool via the “poorly pool route” documented here:

‘Import unwell Pool’: https://rockstor.com/docs/interface/storage/disks.html#import-unwell-pool

As your pool is missing a member, it will be classed as unwell and so will require a degraded mount option, such as the one used in that example.

It’s always a good idea to take a note of the root password set during install as it will always be required for more system critical maintenance. There are procedures to reset it if you have local access, but a re-install may just be simpler. Plus, if you are still on v3 then it is best you move to v4 anyway, as the btrfs code in our new upstream is far more mature.

If you do have data on the current system disk then that complicates things, hence our Web-UI warnings against creating shares on the system disk: it violates system/data separation. But if this is the case your fresh install could be to a new system disk. Once the pool is repaired via the Web-UI advice given and that data loss prevention guide, you can always move the pool back to the original system disk, copy the system disk resident data over to the data pool, and then move the pool back to the new install.

Let us know how it goes. Without root access the system is just not fully accessible to you. However, you can still enable the ro,degraded mount options I referenced from within the Web-UI and follow the Pool specific “Maintenance required” context sensitive advice given there, all without the root password.

In short, we must know if you are on v3 testing, v3 stable (and updated), or v4. And you really must maintain root password awareness to leave your options open. But the re-install route is really only a few minutes on most basic home setups. Plus, if you have a recent config save file you can use this facility to aid the re-setup of any re-install.

‘Configuration Backup and Restore’: https://rockstor.com/docs/interface/system/config_backup.html

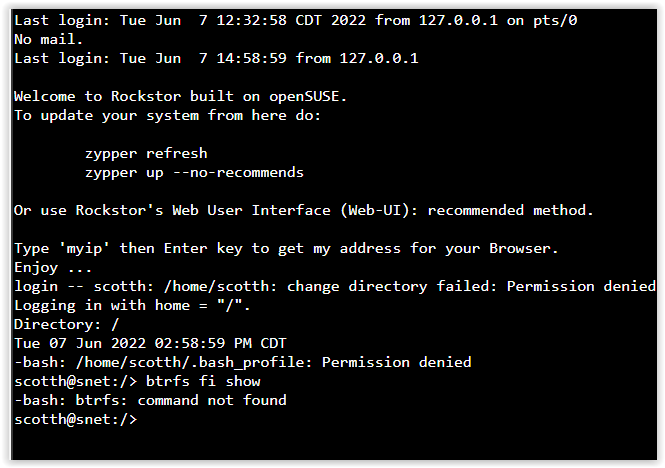

Throw yourself a bone here and give us some more info on this setup; others here are then more likely to be able to help. I.e. we currently know nothing about the raid level used, the Rockstor version, the update channel, or the number of disks before or after, but we can assume one disk was involved that is now missing. It was new to the pool, but not necessarily new hardware, as it has been reported as failed on adding. But was that a failed add, or an interrupted balance thereafter? If it was the latter, then the poorly pool import advice above would also require the given example option of skip_balance. This way you can help with resourcing the knowledge here that may help get you up and running again. The btrfs command was to get some of this info, but it is all available within the Web-UI and from your knowledge of this system’s history. Without the root password you are still limited in your options, so there is that. And such command line facilities are quicker and easier than multiple screenshots in some cases.
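
To make the mount option choice above a little more concrete, here is a minimal sketch of how the answers to those questions map onto the options string you would enter. The function name and its parameters are purely illustrative (they are not part of Rockstor); only the ro, degraded, and skip_balance options themselves come from the advice above.

```python
def pool_mount_options(missing_member: bool,
                       interrupted_balance: bool,
                       read_only: bool = True) -> str:
    """Build a comma-separated btrfs mount options string.

    Hypothetical helper for illustration only, not a Rockstor function.
    """
    options = []
    if read_only:
        # Mount read-only first so nothing is written to an unwell pool.
        options.append("ro")
    if missing_member:
        # A pool with a missing disk needs 'degraded' to mount at all.
        options.append("degraded")
    if interrupted_balance:
        # Stops an interrupted balance from resuming at mount time.
        options.append("skip_balance")
    return ",".join(options)


# Missing disk, no interrupted balance.
print(pool_mount_options(missing_member=True, interrupted_balance=False))
# Missing disk and an interrupted balance.
print(pool_mount_options(missing_member=True, interrupted_balance=True))
```

Which of these applies to you depends on exactly the details asked for above, hence the request for more info.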

Hope that helps.