Thank you, Flox, for the quick answer.

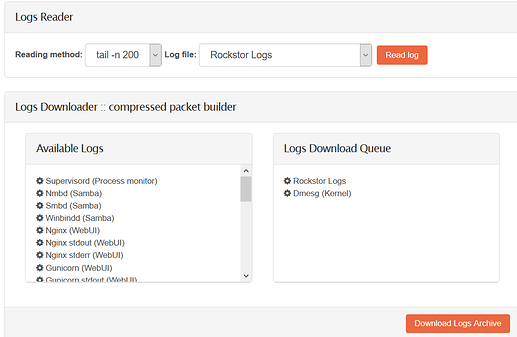

I had a look at the logs as suggested. The last few lines are posted below.

As far as I can tell, there is nothing useful here, but as I said, I have a limited understanding of these systems, so others might see more.

And as you stated, I know one can do more harm than good by just trying things in a situation like this. Any help is appreciated.

However, this is not a production system, so there is nothing critical on it. It does hold some backup files (remote backups from another site; the on-site backups still exist) and private files, so I would rather save it than rebuild the system. It would especially suck to lose the private files.

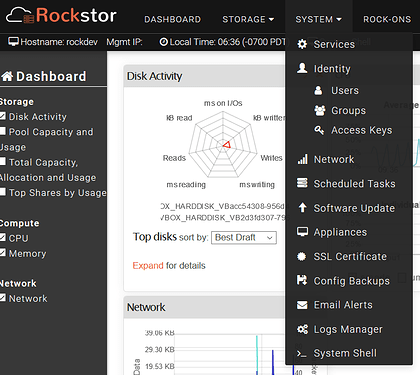

Rockstor logs:

CommandException: Error running a command. cmd = /bin/mount /dev/disk/by-label/BigHomeDisk /mnt2/BigHomeDisk. rc = 32. stdout = ['']. stderr = ['mount: wrong fs type, bad option, bad superblock on /dev/sdc,', ' missing codepage or helper program, or other error', '', ' In some cases useful info is found in syslog - try', ' dmesg | tail or so.', '']

[13/Jul/2019 14:41:39] ERROR [storageadmin.util:44] exception: Failed to import any pool on this device(8). Error: Error running a command. cmd = /bin/mount /dev/disk/by-label/BigHomeDisk /mnt2/BigHomeDisk. rc = 32. stdout = ['']. stderr = ['mount: wrong fs type, bad option, bad superblock on /dev/sdc,', ' missing codepage or helper program, or other error', '', ' In some cases useful info is found in syslog - try', ' dmesg | tail or so.', '']

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/disk.py", line 700, in _btrfs_disk_import

mount_root(po)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 252, in mount_root

run_command(mnt_cmd)

File "/opt/rockstor/src/rockstor/system/osi.py", line 115, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = /bin/mount /dev/disk/by-label/BigHomeDisk /mnt2/BigHomeDisk. rc = 32. stdout = ['']. stderr = ['mount: wrong fs type, bad option, bad superblock on /dev/sdc,', ' missing codepage or helper program, or other error', '', ' In some cases useful info is found in syslog - try', ' dmesg | tail or so.', '']

dmesg:

input10

[ 5.205232] input: HDA Intel Line Out Surround as /devices/pci0000:00/0000:00:1b.0/sound/card0/input11

[ 5.205282] input: HDA Intel Line Out CLFE as /devices/pci0000:00/0000:00:1b.0/sound/card0/input12

[ 5.205334] input: HDA Intel Line Out Side as /devices/pci0000:00/0000:00:1b.0/sound/card0/input13

[ 5.205382] input: HDA Intel Front Headphone as /devices/pci0000:00/0000:00:1b.0/sound/card0/input14

[ 5.205433] input: HDA Intel HDMI/DP,pcm=3 as /devices/pci0000:00/0000:00:1b.0/sound/card0/input15

[ 5.259851] ACPI Warning: SystemIO range 0x0000000000000828-0x000000000000082F conflicts with OpRegion 0x0000000000000800-0x000000000000084F (\PMRG) (20160930/utaddress-247)

[ 5.259857] ACPI: If an ACPI driver is available for this device, you should use it instead of the native driver

[ 5.259859] ACPI Warning: SystemIO range 0x0000000000000530-0x000000000000053F conflicts with OpRegion 0x0000000000000500-0x000000000000053F (\GPS0) (20160930/utaddress-247)

[ 5.259862] ACPI: If an ACPI driver is available for this device, you should use it instead of the native driver

[ 5.259862] ACPI Warning: SystemIO range 0x0000000000000500-0x000000000000052F conflicts with OpRegion 0x0000000000000500-0x000000000000053F (\GPS0) (20160930/utaddress-247)

[ 5.259865] ACPI: If an ACPI driver is available for this device, you should use it instead of the native driver

[ 5.259865] lpc_ich: Resource conflict(s) found affecting gpio_ich

[ 5.264751] ACPI Warning: SystemIO range 0x0000000000000400-0x000000000000041F conflicts with OpRegion 0x0000000000000400-0x000000000000040F (\SMRG) (20160930/utaddress-247)

[ 5.264756] ACPI: If an ACPI driver is available for this device, you should use it instead of the native driver

[ 5.300839] sd 2:0:0:0: Attached scsi generic sg0 type 0

[ 5.300922] sd 2:0:1:0: Attached scsi generic sg1 type 0

[ 5.300955] sd 4:0:0:0: Attached scsi generic sg2 type 0

[ 5.300990] sd 4:0:1:0: Attached scsi generic sg3 type 0

[ 5.301454] sd 3:0:0:0: Attached scsi generic sg4 type 0

[ 5.301490] sd 5:0:0:0: Attached scsi generic sg5 type 0

[ 5.301520] sd 8:0:0:0: Attached scsi generic sg6 type 0

[ 5.301933] sd 9:0:0:0: Attached scsi generic sg7 type 0

[ 5.407496] input: PC Speaker as /devices/platform/pcspkr/input/input16

[ 5.483730] intel_powerclamp: No package C-state available

[ 5.602121] ppdev: user-space parallel port driver

[ 5.639484] Adding 4064252k swap on /dev/sdh2. Priority:-1 extents:1 across:4064252k SSFS

[ 5.663514] iTCO_vendor_support: vendor-support=0

[ 5.665138] iTCO_wdt: Intel TCO WatchDog Timer Driver v1.11

[ 5.665183] iTCO_wdt: Found a ICH10 TCO device (Version=2, TCOBASE=0x0860)

[ 5.665275] iTCO_wdt: initialized. heartbeat=30 sec (nowayout=0)

[ 5.864173] EXT4-fs (sdh1): mounted filesystem with ordered data mode. Opts: (null)
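
Since the mount error points at `dmesg`, and the excerpt above is mostly boot noise, here is a minimal Python sketch for narrowing kernel messages down to the lines that might matter. The function name `filter_kernel_lines` and the keyword list are my own assumptions (`btrfs` for the filesystem, `sdc` for the device named in the mount error); nothing here comes from Rockstor itself.

```python
import re

# Assumed keywords: "btrfs" for the filesystem driver and "sdc" for the
# device named in the mount error. Adjust them to your own setup.
KEYWORDS = ("btrfs", "sdc")


def filter_kernel_lines(lines, keywords=KEYWORDS):
    """Return only the lines that mention one of the keywords (case-insensitive)."""
    pattern = re.compile("|".join(re.escape(k) for k in keywords), re.IGNORECASE)
    return [line for line in lines if pattern.search(line)]


# Two lines copied from the dmesg excerpt in this post.
sample = [
    "[ 5.300839] sd 2:0:0:0: Attached scsi generic sg0 type 0",
    "[ 5.864173] EXT4-fs (sdh1): mounted filesystem with ordered data mode. Opts: (null)",
]

# Neither line mentions btrfs or sdc, so this prints an empty list.
print(filter_kernel_lines(sample))
```

Feeding it the full `dmesg` output, split into lines, instead of the two sample lines should show whether the kernel logged anything about the failed mount.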