@henfri Sorry, this post is a bit of a ramble, but I thought I’d leave it as is. It’s best read all the way through, I’m afraid, as it’s a little bit ‘thinking aloud’; I just wanted to get back to you in the hope of giving some context to help. The short answer is unsupported system drive partitioning (details below). @elmcrest good points.

@henfri I suspect that what you have is essentially a cousin of issue #1043 which has been a particularly tricky one.

ie dev name change breaking mounts

Especially with regard to repeatability. I believe the ball is back in my court on this one but I haven’t gotten back to it.

But your situation differs in an important respect: your logs indicate that a mount is attempted on a missing device (the really long dev names are, funnily enough, an internal shorthand for missing device names). This complicates your diagnosis. I would suggest that you remove all missing devices via the Disk page and continue from there. However, given your non-standard partitioning, this ‘missing’ drive may simply re-appear on the next rescan; see the comments further down on non-standard partitioning.

The indicated issue as I remember it has extensive logs that seem to me to indicate an issue with mounts failing (but only in very specific instances) when the system drive and a prior data drive switch their names. Hence me chipping in here due to your:

Note however that on a standard Rockstor install this always corrects itself on a subsequent reboot and happens (at least to me) very rarely; hence the difficulty in sorting it. That is also why your situation is only distantly related.

That is, a pool is mounted via one of its member devices. In the specific instance where the system drive’s and a pool member’s drive names get reversed, there is a call to mount using the member’s old name, which in that situation is actually the system drive, and consequently it fails. Given it’s a one-shot deal during boot, the system is then unable to remount until a reboot.
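To make that failure mode a bit more concrete, here is a minimal sketch in Python. It is not Rockstor’s actual code: the device names, serials, and both helper functions are hypothetical, and it only models how a remembered name goes stale when two drives swap names between boots.

```python
# Hypothetical model: which drive serial sits behind each device name on a boot.
boot_1 = {"sda": "SERIAL-SYSTEM", "sdb": "SERIAL-DATA"}
boot_2 = {"sdb": "SERIAL-SYSTEM", "sda": "SERIAL-DATA"}  # names reversed


def device_by_remembered_name(remembered_name, current_boot):
    """Return the serial actually found at a name remembered from an earlier boot."""
    return current_boot.get(remembered_name)


def device_by_serial(serial, current_boot):
    """Return the current name for a serial, or None if it is not attached."""
    for name, found_serial in current_boot.items():
        if found_serial == serial:
            return name
    return None


# The pool was last mounted via "sdb" (the data drive on boot 1).
remembered = "sdb"
pool_member_serial = boot_1[remembered]

# On boot 2 the remembered name points at the system drive, so a mount
# attempt against it would target the wrong device.
stale_target = device_by_remembered_name(remembered, boot_2)
mount_would_hit_pool_member = stale_target == pool_member_serial

# Looking the member up by serial finds its new name instead.
fresh_name = device_by_serial(pool_member_serial, boot_2)
```

In this toy model the stale name resolves to the system drive, while the serial lookup lands on the pool member under its new name.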

This situation has become very difficult to reproduce due to some improvements along the way, but I suspect it still exists. As I say, though, your situation is complicated log-wise by missing drives still in the db (judging from the logs only). Start there by removing these missing devices (see the later comment in this post first).

I would also note that you do not have a standard install, so you are essentially out in the wild on a system that is itself still very much in development. That is obviously another concern, and one that makes it rather tricky to reproduce / help with within the confines of working on Rockstor rather than on your particular custom Linux install.

On the other hand, such things can help to expose problems (your point, presumably), hence me suggesting the device removal and taking a peek at that issue (see below for further thoughts). But do keep in mind that deviating from standard system drive partitioning is definitely asking for trouble: Rockstor is much more an appliance than a program, as it touches quite a few parts of the underlying system and makes assumptions about partitioning, especially of the system drive. Given your valuable contributions here already, I know I’m not saying anything you don’t already know. I’m just trying to narrow down the things that may be tripping you up, ie the 4th partition on the system drive not fitting Rockstor’s current understanding of system drive partitioning.

Please note, though, that the referenced issue can only really deal with standard installs, but I thought it might help with some context. The problem it describes may not even exist, as I have yet to get back and produce a reliable replication of it.

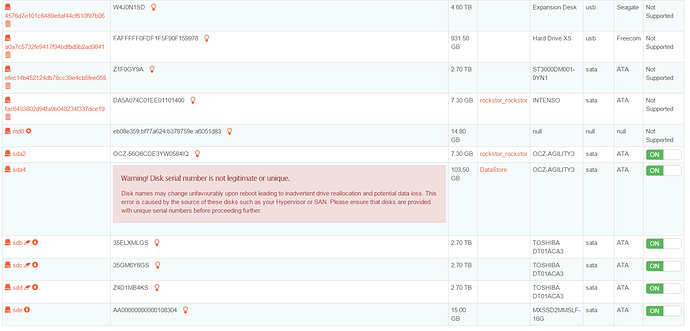

Could you send in a picture of your disks page as it is, before you do anything else, as that may also be helpful? I suspect your non-standard sda4 partition as a btrfs member is your problem, and that the drive name switch led to the problem you now have, as it’s not an expected or supported scenario. Even in expected scenarios there can still be some tricky things to work out (the referenced issue as context) when drives switch names between boots. They used to stay where they were cable-wise, but alas no longer.

Hope that helps and please don’t take my comments in a negative light.

It’s just occurred to me that your ‘missing’ drive (long dev name) is most likely a consequence of Rockstor’s inability to deal with your system drive partitioning. If you remove the sda4 partition (ie a second btrfs device on the system drive) your experience may well be a lot smoother. There is simply no provision for dealing with btrfs in partitions, with the one exception of a single partition for the system btrfs volume. Additional btrfs volumes created on the system drive are just going to break stuff, especially over reboots. That is, each btrfs device (or, in the specific case of the system drive, its single btrfs partition) is tracked via the serial number of the base device. So 2 btrfs partitions on the same system drive will result in one overwriting the other, which I’m thinking results in the phantom ‘missing’ long dev name. Repeats of a serial are not allowed, as it’s the only unique element of a drive; remember we have to deal with drives prior to any uuid additions via partitioning / formatting, so everything is guided by the base serial numbers of devices.
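As a rough illustration of why a repeated serial causes trouble, here is a small Python sketch. It is not how Rockstor stores its disk records; the `build_registry` helper, the serial strings, and the `sda3` system partition name are all made up for the example. It only shows that a table keyed on base-device serial can hold one entry per drive.

```python
def build_registry(scanned_devices):
    """Key each scanned btrfs device by its base-drive serial (illustrative only)."""
    registry = {}
    for dev in scanned_devices:
        # A later entry with the same serial silently replaces the earlier one.
        registry[dev["serial"]] = dev["name"]
    return registry


# Hypothetical scan result: two btrfs partitions share the system drive's serial.
scan = [
    {"name": "sda3", "serial": "SERIAL-SYSTEM"},  # assumed system btrfs partition
    {"name": "sda4", "serial": "SERIAL-SYSTEM"},  # second btrfs partition, same drive
    {"name": "sdb", "serial": "SERIAL-DATA"},     # whole-disk pool member
]

registry = build_registry(scan)

# Devices that were scanned but no longer have their own record.
lost = [d["name"] for d in scan if registry[d["serial"]] != d["name"]]
```

Three devices go in but only two records come out, and the partition that lost its record is the sort of thing that could then show up as ‘missing’.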

Short of it is if you remove the sda4 partition you may well be back in business and also closer to what the Rockstor code can (currently at least) deal with or expects to see.

Hope that makes sense.