@phillxnet any idea?

@suman want to chip in here as well? I know that this might not be related to Rockstor, but two machines upgraded on the same day corrupted themselves: one corrupted its root FS and now can’t mount its pool, and the second one (separate thread that you’re in) seems to have somehow tangled its config …

FYI I’ve just completely wiped out the VM and started fresh from .15 .iso - same thing…

I’m not familiar with Proxmox, but the disk names (i.e. the by-id names assigned by udev) are of type virtio, which I wouldn’t imagine is a proper pass-through. If one creates virtual disks (backed by a file) in VMM (KVM), the drives are virtual block devices, not actual devices as such (confirmed by the vendor of 0x1af4 and the /dev/vdX names). Are we sure these devices are passed through raw, i.e. ignored by Proxmox, and not just translated via the virtio layer as it would seem? Also, if they were passed through ‘raw’ then the serial should be accessible and so shouldn’t need to be jury-rigged on afterwards.

Consequently it looks to me like these are not real drive pass-throughs. Also, SMART is automatically disabled for virtio disks, and with a real pass-through SMART should be accessible.
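The two hints mentioned above (a /dev/vdX name and the 0x1af4 vendor) can be turned into a quick check. This is only a minimal sketch: `looks_like_virtio` is a hypothetical helper, not part of Rockstor, and it assumes the caller has already read the vendor string for the disk from udev or sysfs.

```python
import re

# Vendor ID quoted above for the virtual (virtio) disks.
VIRTIO_VENDOR_ID = "0x1af4"


def looks_like_virtio(dev_name: str, vendor_id: str = "") -> bool:
    """Heuristic: True if the name or vendor suggests a virtio block device.

    dev_name may be a bare name ("vda") or a path ("/dev/vda").
    vendor_id is whatever vendor string the caller obtained for the disk
    (assumption: it is passed in, this sketch does not read it itself).
    """
    base = dev_name.rsplit("/", 1)[-1]
    # vda, vdb, ... optionally followed by a partition number.
    has_vd_name = re.fullmatch(r"vd[a-z]+\d*", base) is not None
    has_virtio_vendor = vendor_id.strip().lower() == VIRTIO_VENDOR_ID
    return has_vd_name or has_virtio_vendor
```

A disk that shows up as /dev/sdX with no virtio vendor is not flagged, so a negative result only means neither of the two hints was present.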

Hope that helps but again, I am not familiar with Proxmox and I personally wouldn’t recommend using Rockstor inside of Proxmox, especially given we use newer kernel versions (assumption on my part) and that may ultimately trip up Proxmox’s ability to fake a machine to Rockstor’s kernel, but I don’t actually know if that is a real issue / concern.

When I use the SCSI controller I get more reasonable results:

still it refuses to import anything …

@suman ?

Update:

OK, screw this … just to establish a baseline I’ll install Rockstor on bare metal to see whether it can import the pool itself …

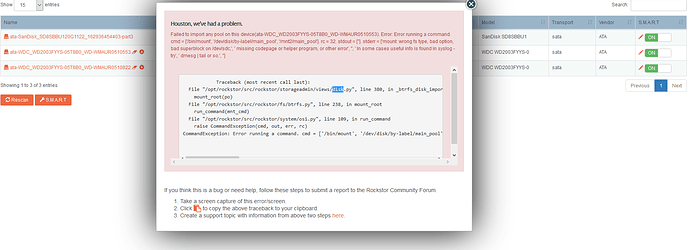

Sooooo … no cigar … a bare metal installation and:

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/storageadmin/views/disk.py", line 380, in _btrfs_disk_import

mount_root(po)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 238, in mount_root

run_command(mnt_cmd)

File "/opt/rockstor/src/rockstor/system/osi.py", line 109, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = ['/bin/mount', '/dev/disk/by-label/main_pool', '/mnt2/main_pool']. rc = 32. stdout = ['']. stderr = ['mount: wrong fs type, bad option, bad superblock on /dev/sdc,', ' missing codepage or helper program, or other error', '', ' In some cases useful info is found in syslog - try', ' dmesg | tail or so.', '']

nothing interesting in dmesg:

[root@tevva-server ~]# dmesg | tail -n300

[ 95.586609] ata1: SATA link up 3.0 Gbps (SStatus 123 SControl 300)

[ 95.595407] ata1.00: ATA-8: WDC WD2003FYYS-05T8B0, 00.0NS02, max UDMA/133

[ 95.595411] ata1.00: 3907029168 sectors, multi 0: LBA48 NCQ (depth 31/32), AA

[ 95.599036] ata1.00: configured for UDMA/133

[ 95.599046] ata1: EH complete

[ 95.599316] scsi 0:0:0:0: Direct-Access ATA WDC WD2003FYYS-0 NS02 PQ: 0 ANSI: 5

[ 95.611160] sd 0:0:0:0: Attached scsi generic sg3 type 0

[ 95.611193] sd 0:0:0:0: [sdc] 3907029168 512-byte logical blocks: (2.00 TB/1.82 TiB)

[ 95.611406] sd 0:0:0:0: [sdc] Write Protect is off

[ 95.611411] sd 0:0:0:0: [sdc] Mode Sense: 00 3a 00 00

[ 95.611595] sd 0:0:0:0: [sdc] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

[ 95.611793] systemd-journald[1694]: no db file to read /run/udev/data/+scsi:0:0:0:0: No such file or directory

[ 95.611955] systemd-journald[1694]: no db file to read /run/udev/data/+scsi:0:0:0:0: No such file or directory

[ 95.612087] systemd-journald[1694]: no db file to read /run/udev/data/+scsi:0:0:0:0: No such file or directory

[ 95.612235] systemd-journald[1694]: no db file to read /run/udev/data/+scsi:0:0:0:0: No such file or directory

[ 95.616215] sd 0:0:0:0: [sdc] Attached SCSI disk

[ 98.710435] systemd-udevd[1729]: cleanup idle workers

[ 98.710490] systemd-udevd[1729]: Validate module index

[ 98.710554] systemd-udevd[1729]: Check if link configuration needs reloading.

[ 98.710629] systemd-udevd[12940]: Unload module index

[ 98.710631] systemd-udevd[12941]: Unload module index

[ 98.710636] systemd-udevd[12946]: Unload module index

[ 98.710645] systemd-udevd[12945]: Unload module index

[ 98.710648] systemd-udevd[12957]: Unload module index

[ 98.710685] systemd-udevd[12940]: Unloaded link configuration context.

[ 98.710698] systemd-udevd[12957]: Unloaded link configuration context.

[ 100.109378] udevadm[12967]: calling: info

[ 100.127818] udevadm[12968]: calling: info

[ 100.138837] udevadm[12969]: calling: info

[ 100.387575] udevadm[12981]: calling: info

[ 114.922148] systemd[1]: Got notification message for unit systemd-logind.service

[ 114.922158] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 114.922166] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 114.922544] systemd[1]: Got notification message for unit systemd-journald.service

[ 114.922554] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 114.922561] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 123.786505] random: crng init done

[ 129.647895] udevadm[13001]: calling: info

[ 129.665312] udevadm[13002]: calling: info

[ 129.678504] udevadm[13003]: calling: info

[ 154.921548] systemd[1]: Got notification message for unit systemd-logind.service

[ 154.921558] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 154.921565] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 154.921637] systemd[1]: Got notification message for unit systemd-journald.service

[ 154.921643] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 154.921648] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 160.593310] udevadm[13039]: calling: info

[ 160.613130] udevadm[13040]: calling: info

[ 160.628072] udevadm[13041]: calling: info

[ 160.876903] udevadm[13053]: calling: info

[ 180.171219] systemd[1]: Got notification message for unit systemd-logind.service

[ 180.171230] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 180.171237] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 180.171591] systemd[1]: Got notification message for unit systemd-journald.service

[ 180.171600] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 180.171606] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 196.989756] udevadm[13112]: calling: info

[ 196.990404] systemd[1]: Got notification message for unit systemd-journald.service

[ 196.990432] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 196.990438] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 197.003490] udevadm[13113]: calling: info

[ 197.051029] BTRFS: device label main_pool devid 2 transid 184377 /dev/sdc

[ 197.051449] systemd-udevd[1729]: Validate module index

[ 197.051472] systemd-udevd[1729]: Check if link configuration needs reloading.

[ 197.051542] systemd-udevd[1729]: seq 3576 queued, 'add' 'bdi'

[ 197.051670] BTRFS info (device sdc): disk space caching is enabled

[ 197.051673] BTRFS info (device sdc): has skinny extents

[ 197.051746] systemd-udevd[1729]: seq 3576 forked new worker [13118]

[ 197.051973] systemd-udevd[13118]: seq 3576 running

[ 197.052062] systemd-udevd[13118]: no db file to read /run/udev/data/+bdi:btrfs-2: No such file or directory

[ 197.052086] systemd-udevd[13118]: RUN '/bin/mknod /dev/btrfs-control c 10 234' /etc/udev/rules.d/64-btrfs.rules:1

[ 197.052464] systemd-udevd[13125]: starting '/bin/mknod /dev/btrfs-control c 10 234'

[ 197.053056] BTRFS error (device sdc): failed to read the system array: -5

[ 197.053697] systemd-udevd[1729]: seq 3577 queued, 'remove' 'bdi'

[ 197.053822] systemd-udevd[13118]: '/bin/mknod /dev/btrfs-control c 10 234'(err) '/bin/mknod: '/dev/btrfs-control''

[ 197.076339] BTRFS: open_ctree failed

[ 216.368701] systemd[1]: Got notification message for unit systemd-journald.service

[ 216.368711] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 216.368718] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 221.116248] udevadm[13143]: calling: info

[ 221.137618] udevadm[13144]: calling: info

[ 221.154239] udevadm[13145]: calling: info

[ 221.447413] udevadm[13157]: calling: info

[ 224.920542] systemd[1]: Got notification message for unit systemd-logind.service

[ 224.920552] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 224.920560] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 254.920050] systemd[1]: Got notification message for unit systemd-journald.service

[ 254.920061] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 254.920070] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 264.919913] systemd[1]: Got notification message for unit systemd-logind.service

[ 264.919922] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 264.919929] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 281.713500] udevadm[13179]: calling: info

[ 281.714195] systemd[1]: Got notification message for unit systemd-journald.service

[ 281.714202] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 281.714208] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 281.738585] udevadm[13180]: calling: info

[ 281.757603] udevadm[13181]: calling: info

[ 282.039067] udevadm[13193]: calling: info

[ 304.419400] systemd[1]: Got notification message for unit systemd-journald.service

[ 304.419410] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 304.419417] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 304.919369] systemd[1]: Got notification message for unit systemd-logind.service

[ 304.919378] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 304.919384] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 342.316629] udevadm[13215]: calling: info

[ 342.317393] systemd[1]: Got notification message for unit systemd-journald.service

[ 342.317401] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 342.317407] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 342.336950] udevadm[13216]: calling: info

[ 342.354045] udevadm[13217]: calling: info

[ 342.666417] udevadm[13229]: calling: info

[ 344.918875] systemd[1]: Got notification message for unit systemd-logind.service

[ 344.918885] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 344.918892] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 379.026875] systemd[1]: Got notification message for unit systemd-journald.service

[ 379.026885] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 379.026892] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 379.051372] systemd[1]: Got message type=signal sender=org.freedesktop.DBus destination=n/a object=/org/freedesktop/DBus interface=org.freedesktop.DBus member=NameOwnerChanged cookie=33 reply_cookie=0 error=n/a

[ 379.051421] systemd-logind[3150]: Got message type=signal sender=org.freedesktop.DBus destination=n/a object=/org/freedesktop/DBus interface=org.freedesktop.DBus member=NameOwnerChanged cookie=33 reply_cookie=0 error=n/a

[ 379.051530] systemd[1]: Got notification message for unit systemd-logind.service

[ 379.051537] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 379.051543] systemd[1]: systemd-logind.service: got WATCHDOG=1

[ 379.051680] systemd-logind[3150]: Got message type=method_call sender=:1.18 destination=org.freedesktop.login1 object=/org/freedesktop/login1 interface=org.freedesktop.login1.Manager member=CreateSession cookie=2 reply_cookie=0 error=n/a

[ 379.051740] systemd-logind[3150]: Sent message type=method_call sender=n/a destination=org.freedesktop.DBus object=/org/freedesktop/DBus interface=org.freedesktop.DBus member=GetConnectionUnixUser cookie=15 reply_cookie=0 error=n/a

[ 379.052484] systemd-logind[3150]: New user root logged in.

[ 379.052638] systemd-logind[3150]: Sent message type=method_call sender=n/a destination=org.freedesktop.systemd1 object=/org/freedesktop/systemd1 interface=org.freedesktop.systemd1.Manager member=StartUnit cookie=16 reply_cookie=0 error=n/a

[ 379.054390] systemd[1]: run-user-0.mount changed dead -> mounted

[ 379.054468] systemd[1]: Sent message type=signal sender=n/a destination=n/a object=/org/freedesktop/systemd1 interface=org.freedesktop.systemd1.Manager member=UnitNew cookie=2943 reply_cookie=0 error=n/a

[ 379.054510] systemd[1]: Sent message type=signal sender=n/a destination=n/a object=/org/freedesktop/systemd1/unit/dev_2dsda1_2edevice interface=org.freedesktop.DBus.Properties member=PropertiesChanged cookie=2944 reply_cookie=0 error=n/a

[ 379.056149] systemd-logind[3150]: Sent message type=signal sender=n/a destination=n/a object=/org/freedesktop/login1 interface=org.freedesktop.login1.Manager member=UserNew cookie=17 reply_cookie=0 error=n/a

[ 379.056195] systemd-logind[3150]: Sent message type=method_call sender=n/a destination=org.freedesktop.systemd1 object=/org/freedesktop/systemd1 interface=org.freedesktop.systemd1.Manager member=StartTransientUnit cookie=18 reply_cookie=0 error=n/a

[ 379.062200] systemd-logind[3150]: New session 1 of user root.

[ 379.062408] systemd-logind[3150]: Sent message type=signal sender=n/a destination=n/a object=/org/freedesktop/login1 interface=org.freedesktop.login1.Manager member=SessionNew cookie=19 reply_cookie=0 error=n/a

[ 379.062473] systemd-logind[3150]: Sent message type=signal sender=n/a destination=n/a object=/org/freedesktop/login1/user/_0 interface=org.freedesktop.DBus.Properties member=PropertiesChanged cookie=20 reply_cookie=0 error=n/a

[ 402.933017] udevadm[13271]: calling: info

[ 402.933548] systemd[1]: Got notification message for unit systemd-journald.service

[ 402.933569] systemd[1]: systemd-journald.service: Got notification message from PID 1694 (WATCHDOG=1)

[ 402.933575] systemd[1]: systemd-journald.service: got WATCHDOG=1

[ 402.956080] udevadm[13272]: calling: info

[ 402.973002] udevadm[13273]: calling: info

[ 403.269617] udevadm[13285]: calling: info

[ 414.917937] systemd[1]: Got notification message for unit systemd-logind.service

[ 414.917949] systemd[1]: systemd-logind.service: Got notification message from PID 3150 (WATCHDOG=1)

[ 414.917958] systemd[1]: systemd-logind.service: got WATCHDOG=1

but I think I’ve got my error from /var/log/messages:

Dec 7 16:03:36 tevva-server kernel: ata4: exception Emask 0x10 SAct 0x0 SErr 0x4050000 action 0xe frozen

Dec 7 16:03:36 tevva-server kernel: ata4: irq_stat 0x00400040, connection status changed

Dec 7 16:03:36 tevva-server kernel: ata4: SError: { PHYRdyChg CommWake DevExch }

Dec 7 16:03:36 tevva-server kernel: ata4: hard resetting link

Dec 7 16:03:40 tevva-server kernel: ata4: SATA link down (SStatus 0 SControl 300)

Dec 7 16:03:40 tevva-server kernel: ata4: EH complete

Dec 7 16:03:40 tevva-server kernel: ata4: exception Emask 0x10 SAct 0x0 SErr 0x4050002 action 0xe frozen

Dec 7 16:03:40 tevva-server kernel: ata4: irq_stat 0x00400040, connection status changed

Dec 7 16:03:40 tevva-server kernel: ata4: SError: { RecovComm PHYRdyChg CommWake DevExch }

Dec 7 16:03:40 tevva-server kernel: ata4: hard resetting link

Dec 7 16:03:40 tevva-server kernel: ata1: exception Emask 0x10 SAct 0x0 SErr 0x4040000 action 0xe frozen

Dec 7 16:03:40 tevva-server kernel: ata1: irq_stat 0x00000040, connection status changed

Dec 7 16:03:40 tevva-server kernel: ata1: SError: { CommWake DevExch }

Dec 7 16:03:40 tevva-server kernel: ata1: hard resetting link

Dec 7 16:03:46 tevva-server kernel: ata1: link is slow to respond, please be patient (ready=0)

Dec 7 16:03:46 tevva-server kernel: ata4: link is slow to respond, please be patient (ready=0)

Dec 7 16:03:50 tevva-server kernel: ata4: COMRESET failed (errno=-16)

Dec 7 16:03:50 tevva-server kernel: ata4: hard resetting link

Dec 7 16:03:50 tevva-server kernel: ata1: COMRESET failed (errno=-16)

Dec 7 16:03:50 tevva-server kernel: ata1: hard resetting link

Dec 7 16:03:52 tevva-server kernel: ata4: SATA link up 3.0 Gbps (SStatus 123 SControl 300)

Dec 7 16:03:52 tevva-server kernel: ata4.00: ATA-8: WDC WD2003FYYS-05T8B0, 00.0NS01, max UDMA/133

Dec 7 16:03:52 tevva-server kernel: ata4.00: 3907029168 sectors, multi 0: LBA48 NCQ (depth 31/32), AA

Dec 7 16:03:52 tevva-server kernel: ata4.00: configured for UDMA/133

Dec 7 16:03:52 tevva-server kernel: ata4: EH complete

Dec 7 16:03:52 tevva-server kernel: scsi 3:0:0:0: Direct-Access ATA WDC WD2003FYYS-0 NS01 PQ: 0 ANSI: 5

Dec 7 16:03:52 tevva-server kernel: sd 3:0:0:0: [sdb] 3907029168 512-byte logical blocks: (2.00 TB/1.82 TiB)

Dec 7 16:03:52 tevva-server kernel: sd 3:0:0:0: Attached scsi generic sg2 type 0

Dec 7 16:03:52 tevva-server kernel: sd 3:0:0:0: [sdb] Write Protect is off

Dec 7 16:03:52 tevva-server kernel: sd 3:0:0:0: [sdb] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

Dec 7 16:03:52 tevva-server kernel: sd 3:0:0:0: [sdb] Attached SCSI disk

Dec 7 16:03:54 tevva-server kernel: ata1: SATA link up 3.0 Gbps (SStatus 123 SControl 300)

Dec 7 16:03:54 tevva-server kernel: ata1.00: ATA-8: WDC WD2003FYYS-05T8B0, 00.0NS02, max UDMA/133

Dec 7 16:03:54 tevva-server kernel: ata1.00: 3907029168 sectors, multi 0: LBA48 NCQ (depth 31/32), AA

Dec 7 16:03:54 tevva-server kernel: ata1.00: configured for UDMA/133

Dec 7 16:03:54 tevva-server kernel: ata1: EH complete

Dec 7 16:03:54 tevva-server kernel: scsi 0:0:0:0: Direct-Access ATA WDC WD2003FYYS-0 NS02 PQ: 0 ANSI: 5

Dec 7 16:03:54 tevva-server kernel: sd 0:0:0:0: Attached scsi generic sg3 type 0

Dec 7 16:03:54 tevva-server kernel: sd 0:0:0:0: [sdc] 3907029168 512-byte logical blocks: (2.00 TB/1.82 TiB)

Dec 7 16:03:54 tevva-server kernel: sd 0:0:0:0: [sdc] Write Protect is off

Dec 7 16:03:54 tevva-server kernel: sd 0:0:0:0: [sdc] Write cache: disabled, read cache: enabled, doesn't support DPO or FUA

Dec 7 16:03:54 tevva-server kernel: sd 0:0:0:0: [sdc] Attached SCSI disk

Dec 7 16:04:23 tevva-server kernel: random: crng init done

Dec 7 16:05:36 tevva-server kernel: BTRFS: device label main_pool devid 2 transid 184377 /dev/sdc

Dec 7 16:05:36 tevva-server kernel: BTRFS info (device sdc): disk space caching is enabled

Dec 7 16:05:36 tevva-server kernel: BTRFS info (device sdc): has skinny extents

Dec 7 16:05:36 tevva-server kernel: BTRFS error (device sdc): failed to read the system array: -5

Dec 7 16:05:36 tevva-server kernel: BTRFS: open_ctree failed

Dec 7 16:05:55 tevva-server chronyd[3154]: Selected source 213.171.220.65

Dec 7 16:08:38 tevva-server systemd-logind[3150]: New session 1 of user root.
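The `failed to read the system array` line is also present in the dmesg output further up, buried between the udev and systemd chatter. A small filter makes lines like that easier to spot. This is a sketch only; `btrfs_problems` is a hypothetical helper name, and the pattern is an assumption based on the messages quoted in this thread.

```python
import re

# Kernel btrfs messages that mention an error or a failure,
# e.g. "BTRFS error (device sdc): ..." or "BTRFS: open_ctree failed".
BTRFS_PROBLEM = re.compile(r"BTRFS.*(error|failed)")


def btrfs_problems(lines):
    """Return only the log lines that look like btrfs errors or failures."""
    return [line.rstrip("\n") for line in lines if BTRFS_PROBLEM.search(line)]
```

It could be fed the lines of /var/log/messages or the saved output of dmesg; informational lines such as "has skinny extents" and the unrelated ata link resets are dropped.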

And what is really making me want to punch somebody in the face is that when I mount btrfs by hand:

mount /dev/sdb /mnt2/main_pool

mount /dev/sdc /mnt2/main_pool

Everything WORKS OK !

Dec 7 16:05:36 tevva-server kernel: BTRFS: device label main_pool devid 2 transid 184377 /dev/sdc

Dec 7 16:05:36 tevva-server kernel: BTRFS info (device sdc): disk space caching is enabled

Dec 7 16:05:36 tevva-server kernel: BTRFS info (device sdc): has skinny extents

Dec 7 16:05:36 tevva-server kernel: BTRFS error (device sdc): failed to read the system array: -5

Dec 7 16:05:36 tevva-server kernel: BTRFS: open_ctree failed

Dec 7 16:05:55 tevva-server chronyd[3154]: Selected source 213.171.220.65

Dec 7 16:08:38 tevva-server systemd-logind[3150]: New session 1 of user root.

Dec 7 16:17:24 tevva-server kernel: systemd-tmpfile: 121 output lines suppressed due to ratelimiting

Dec 7 16:19:17 tevva-server kernel: BTRFS: device label main_pool devid 1 transid 184377 /dev/sdb

Dec 7 16:19:17 tevva-server kernel: BTRFS info (device sdb): disk space caching is enabled

Dec 7 16:19:17 tevva-server kernel: BTRFS info (device sdb): has skinny extents

Dec 7 16:19:34 tevva-server yum[13700]: Installed: gpm-libs-1.20.7-5.el7.x86_64

Dec 7 16:19:34 tevva-server yum[13700]: Installed: 1:mc-4.8.7-8.el7.x86_64

Dec 7 16:27:11 tevva-server kernel: BTRFS info (device sdb): disk space caching is enabled

Dec 7 16:27:11 tevva-server kernel: BTRFS info (device sdb): has skinny extents

There is something very wrong with the ins and outs of pool import …

Ok,

@Flyer @phillxnet @suman

Before I start doing anything stupid: could any of you try compressing a whole pool and then importing it on a brand new installation? The problem that I’m having might be related to the fact that the whole pool is compressed.

@Tomasz_Kusmierz OK, I have an idea.

It may be that you are experiencing a known issue with btrfs when mounting by label; however, I have only seen this when attaching the disks after boot. I’m assuming you are not doing this, as there has been no mention of it. But it would be consistent with your reports and the error you received via the Web-UI, i.e. that of "… wrong fs type, bad option, bad superblock on /dev/sdc… ".

We have an open issue that references a btrfs wiki entry on the same:

where if one adds drives after boot and then tries to mount by label it can result in the message you received.

You could double check this as the cause by first executing the following command prior to attempting import:

btrfs device scan

As you see all Rockstor does is execute a:

/bin/mount /dev/disk/by-label/main_pool /mnt2/main_pool

command.

The issue has a more detailed breakdown of the cause though.
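The order of operations suggested here (scan first, then the by-label mount) can be sketched as below. This is not Rockstor’s actual code: `import_commands` and `scan_then_mount` are hypothetical names, and the only details taken from this thread are the two commands themselves and the /mnt2 mount root.

```python
import subprocess
from typing import Callable, List


def import_commands(label: str, mnt_root: str = "/mnt2") -> List[List[str]]:
    """Build the scan and by-label mount commands for a pool label."""
    return [
        # Make the kernel aware of all btrfs member devices first.
        ["btrfs", "device", "scan"],
        # Same form as the mount command shown in the traceback above.
        ["/bin/mount", f"/dev/disk/by-label/{label}", f"{mnt_root}/{label}"],
    ]


def scan_then_mount(
    label: str, run: Callable[[List[str]], object] = subprocess.check_call
) -> None:
    """Run the scan, then the mount; the default runner raises on failure."""
    for cmd in import_commands(label):
        run(cmd)
```

Passing a different `run` callable (for example one that only prints the command) allows a dry run without touching the system; actually running it needs root and an existing mount point.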

called:

btrfs device scan

import:

but fs is fully mounted:

[root@tevva-server ~]# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

devtmpfs on /dev type devtmpfs (rw,nosuid,size=8175012k,nr_inodes=2043753,mode=755)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,mode=755)

tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/usr/lib/systemd/systemd-cgroups-agent,name=systemd)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

configfs on /sys/kernel/config type configfs (rw,relatime)

/dev/sda3 on / type btrfs (rw,noatime,ssd,space_cache,subvolid=257,subvol=/root)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=31,pgrp=1,timeout=300,minproto=5,maxproto=5,direct,pipe_ino=13014)

mqueue on /dev/mqueue type mqueue (rw,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime)

debugfs on /sys/kernel/debug type debugfs (rw,relatime)

tmpfs on /tmp type tmpfs (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw,relatime)

nfsd on /proc/fs/nfsd type nfsd (rw,relatime)

/dev/sda3 on /home type btrfs (rw,noatime,ssd,space_cache,subvolid=258,subvol=/home)

/dev/sda1 on /boot type ext4 (rw,noatime,data=ordered)

/dev/sda3 on /mnt2/rockstor_tevva-server type btrfs (rw,relatime,ssd,space_cache,subvolid=5,subvol=/)

/dev/sda3 on /mnt2/home type btrfs (rw,relatime,ssd,space_cache,subvolid=258,subvol=/home)

/dev/sda3 on /mnt2/root type btrfs (rw,relatime,ssd,space_cache,subvolid=257,subvol=/root)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=1637176k,mode=700)

/dev/sdb on /mnt2/main_pool type btrfs (rw,relatime,space_cache,subvolid=5,subvol=/)

/dev/sdb on /mnt2/syncthing_config type btrfs (rw,relatime,space_cache,subvolid=5725,subvol=/syncthing_config)

/dev/sdb on /mnt2/share type btrfs (rw,relatime,space_cache,subvolid=260,subvol=/share)

/dev/sdb on /mnt2/gitlab_config_storage type btrfs (rw,relatime,space_cache,subvolid=4112,subvol=/gitlab_config_storage)

/dev/sdb on /mnt2/stash type btrfs (rw,relatime,space_cache,subvolid=3955,subvol=/stash)

/dev/sdb on /mnt2/rockon_master_storage type btrfs (rw,relatime,space_cache,subvolid=5280,subvol=/rockon_master_storage)

/dev/sdb on /mnt2/syncthing_data type btrfs (rw,relatime,space_cache,subvolid=5726,subvol=/syncthing_data)

/dev/sdb on /mnt2/gitlab_config_logs type btrfs (rw,relatime,space_cache,subvolid=4111,subvol=/gitlab_config_logs)

/dev/sdb on /mnt2/mariadb type btrfs (rw,relatime,space_cache,subvolid=4388,subvol=/mariadb)

/dev/sdb on /mnt2/owncloud-official type btrfs (rw,relatime,space_cache,subvolid=4387,subvol=/owncloud-official)

/dev/sdb on /mnt2/gitlab_repository_storage type btrfs (rw,relatime,space_cache,subvolid=4113,subvol=/gitlab_repository_storage)

[root@tevva-server ~]#

Still this points me towards the compression ?!

@Tomasz_Kusmierz

So that we can try to narrow this down, can you try the import without first having mounted via the CLI? I.e. fresh boot and then try an import (after only having done a btrfs device scan, although after a fresh boot with drives connected it shouldn’t be necessary). Also note that it doesn’t harm to refresh the web page, just to make sure the info displayed is current.

And to be clear, could you state the pool mount status directly after a fresh boot, prior to any rescan or import attempt?

Cheers.

Anyway, I’ve just tried on my VMware to see whether there is a problem with an LZO-compressed pool being imported. No cigar … everything works OK … $%&#@%$^%^@#&

Ok, so fresh reboot with drives in

main_pool not mounted

btrfs device scan (did not return any error, /var/log/messages empty and dmesg empty)

freshly going to disks tab and hitting import:

Clicked import and got a question: “do you want to ?”

Got the waiting arrows for a fair amount of time.

A quick look at the mount output (while the waiting arrows keep on going): everything mounted properly.

And magically I’ve got:

theory number 432532:

maybe it’s down to crazy amount of snapshots that I’ve got ?

Yep … after removing all the snapshots the pool imported itself without any delay…

£$%&"%^"*^$%%"£%&

Conclusion is that the crawler that harvests all snapshots for the list in Rockstor definitely has a problem and times out on a large snapshot set (6000-ish), and it might be what was causing the original problem with Samba in the first place?
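For anyone wanting to check whether their own pool is in the same territory before importing, counting the snapshots is enough. A rough sketch follows; it assumes that `btrfs subvolume list -s <mount point>` prints one line per snapshot starting with `ID `, and both helper names and the default `limit` are made up for illustration (the only number from this thread is the roughly 6000 snapshots that caused trouble here).

```python
import subprocess


def count_snapshots(listing: str) -> int:
    """Count snapshot entries in the text output of 'btrfs subvolume list -s'."""
    return sum(1 for line in listing.splitlines() if line.startswith("ID "))


def too_many_snapshots(listing: str, limit: int = 1000) -> bool:
    """True if the snapshot count exceeds an (arbitrary, illustrative) limit."""
    return count_snapshots(listing) > limit


def list_snapshots(mount_point: str) -> str:
    """Fetch the snapshot listing for a mounted pool (needs root)."""
    result = subprocess.run(
        ["btrfs", "subvolume", "list", "-s", mount_point],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `too_many_snapshots(list_snapshots("/mnt2/main_pool"))` on a pool that has been mounted by hand.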

@Flyer could you please tell me which mode in Proxmox is preferred for connecting drives to Rockstor?

virtio - have to emulate everything

scsi (seems to be virtio_scsi) - drives come up as model = qemu but serial numbers seem to be OK out of the box and there is an indication that SMART might be working

sata - there are only 5 available so it’s a no go for larger arrays.

Also, how can I remove drives on the fly from a working VM?

Hi @Tomasz_Kusmierz,

my suggestion (and the Proxmox docs too) is to have them virtio

M.

@Flyer is this based on performance, functionality or failure modes?

Also, how do you see pfSense on Proxmox? Or should I get a separate machine? (I can get a spare gen6 ProLiant.)

Also, how is performance under Proxmox? I’m considering putting a CCTV server under Proxmox (standalone) just to ease remote administration … and when I run CCTV on ZoneMinder in a Rock-on container I get roughly 25% CPU utilization (on 2 e5620L Xeons); how much overhead could I expect?

Update:

@Flyer I’ve got the main server running … and performance is appalling with virtio and virtio_scsi … before, I could push 112MB/s from a Windows machine to this server over Samba; now, after an initial spike of 20MB/s, it falls to 6MB/s on a long ISO upload …

To be clear, at the same time, when I upload an ISO to the Proxmox LVM storage for installation I still get 112MB/s, so there is nothing wrong with the host setup.

Another thing is that I’ve got the VM connected to a bridge that has 6 x 1Gb Ethernet ports in 802.3ad … so 1Gb should always be available …

OK, since nobody wanted to help, I’ve had to do a few days of digging … on how to make qemu, KVM, virtio etc. work properly with Rockstor (or any system for that matter) … I’m thinking of doing a write-up and making it sticky. @phillxnet @suman, care to make my write-up sticky? (Don’t care how many innuendoes were here.)

!

My experience is that as long as you give virtio drives a serial then they will be recognised and configurable by Rockstor. It is how I do a lot of my Rockstor development, hence my exclamation on your statement.

Also, it is difficult to establish the relevance of a non-existent write-up, and given that, to date, only release announcements have been sticky (from memory), and then only for a short time, that would seem overkill. However, if you have valuable performance-enhancing config customisation that is consequently useful to other Rockstor users, then great. Still not sure if that warrants a sticky though, especially given the serial caveat mentioned and of course the specific relevance of:

Are you talking about Proxmox-specific configs here? I use Virtual Machine Manager (VMM) for my Rockstor KVM management, using a Linux desktop of course.

Way to go on the encouragement front there.

Had you considered a contribution to the official Rockstor docs via the rockstor-doc GitHub repo? Maybe your findings re virtio optimisation or Proxmox or whatever would make a valuable addition there, i.e. maybe in the form of a new entry in the Howtos section. The forum has had a number of Proxmox mentions, so maybe it’s ‘high time’ we had a howto such as your findings may warrant. What do you think?

Hopefully the other howtos and doc content can act as a guide to the presentation / writing style deemed appropriate.
