Welcome back to Linux :)))))

So, first:

f**** what a system for storage: e5v3


I’m not complaining, it’s just absolutely amazing to see so much horsepower for just storage.


Second,

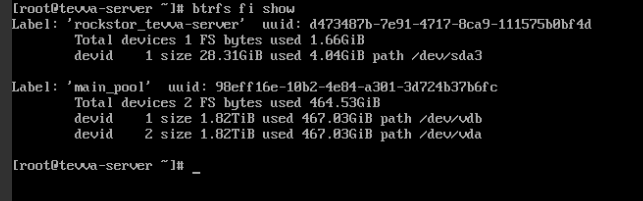

As Flyer said, 120GB is a lot. Personally, when setting up a new installation of Rockstor I don’t go higher than 32GB. So what I would like to propose is this: btrfs gives you a fantastic profile (sort of a RAID level) called “dup”, which means that your data will be duplicated on the same disk = if a single sector dies, then you’ve got a backup in a different place. This is veeeery useful!!! The price is that everything takes twice the space. It will require you to use the console (the command is “btrfs fi balance start -dconvert=dup -mconvert=dup -sconvert=dup -f /”; the -f is needed because btrfs refuses to act on system chunks via -sconvert without it).
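To make the dup conversion a bit less scary, here is a minimal sketch of how it could be wrapped. It assumes the Rockstor root filesystem is mounted at `/` and that your kernel and btrfs-progs accept dup for data chunks. The `run` and `convert_to_dup` helpers and the `DRY_RUN` switch are names I made up for illustration, and by default the script only prints what it would do. It leaves out `-sconvert` because system chunks are converted together with metadata when only `-mconvert` is given.

```shell
#!/bin/sh
# Sketch: convert a single-disk btrfs filesystem to the "dup" profile.
# Assumptions (not from the original post): MOUNTPOINT defaults to "/",
# DRY_RUN=1 only prints the commands. Set DRY_RUN=0 and run as root to apply.
MOUNTPOINT="${MOUNTPOINT:-/}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

convert_to_dup() {
    # Show the current data/metadata/system profiles.
    run btrfs filesystem df "$1"
    # Rewrite data and metadata chunks as dup (system chunks follow metadata).
    run btrfs balance start -dconvert=dup -mconvert=dup "$1"
    # Show the profiles again to confirm the result.
    run btrfs filesystem df "$1"
}

convert_to_dup "$MOUNTPOINT"
```

Run it once as-is to see the three commands, then again with `DRY_RUN=0` as root when you are happy with them. The balance rewrites every chunk, so it can take a while on a full disk.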


Third,

Your actual question:

a) +1 to @Flyer: RAID 5 & 6 on btrfs is broken right now (it will eat your data!!!)

b) +1 to @Flyer on disk speed, BUT from my personal experience, slower-spinning disks (rpm-wise) are actually less prone to failure. Another thing: slower-spinning disks are cheaper = you can buy more of them and get the same performance by simply putting more disks into your array.

c) In storage technology it’s best to steer away from “revolutionary technologies”, so with large disks (4TB and more) make sure that the sector size is still 4KB and not some exotic figure (like Seagate tries to push down the throats of desktop users). There is a proven record of “revolutionary technologies” backfiring in people’s faces (the IBM Deskstar with its revolutionary head technology that kept crashing into the disk platter, then the Hitachi Deskstar with platter paint that was flaking off … yeah, Hitachi bought the Deskstar department from IBM and paid the price for it)

Fourth,

High five for ECC RAM!

Fifth,

I would go with 4x6TB in your case (unless they’re some exotic drives), BUT remember that raid10 has a minimum requirement of 4 drives, so if one of your drives dies you will not be able to simply rebuild your drive pool into whatever you want. It’s possible, but you will have to jump through a few hoops for that and it will take some time. For that reason, if you don’t care about speed I would suggest going for raid1 (I personally start raid10 at six drives or more). Raid1 will give you good performance on large sequential file reads due to the nature of btrfs, but it will be lacking in some scenarios.
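To put rough numbers on that choice, here is a small sketch of the capacity arithmetic. It assumes equal-sized disks and two copies of everything for dup, raid1 and raid10, and it uses the 4-drive minimum for raid10 mentioned above. `usable_tb` is a made-up helper name, not a btrfs tool, and the real figure will be a little lower once metadata is accounted for.

```shell
#!/bin/sh
# usable_tb PROFILE NDISKS SIZE_TB
# Rough usable capacity for equal-sized disks (hypothetical helper).
usable_tb() {
    profile="$1"; n="$2"; size="$3"
    case "$profile" in
        single) min=1; copies=1 ;;   # one copy, no redundancy
        dup)    min=1; copies=2 ;;   # two copies on the same disk
        raid1)  min=2; copies=2 ;;   # two copies on different disks
        raid10) min=4; copies=2 ;;   # minimum of 4 drives, as stated in the post
        *) echo "unknown profile: $profile" >&2; return 2 ;;
    esac
    if [ "$n" -lt "$min" ]; then
        echo "$profile needs at least $min disks, got $n" >&2
        return 1
    fi
    echo $(( n * size / copies ))
}

usable_tb raid1 4 6     # prints 12
usable_tb raid10 4 6    # prints 12
usable_tb raid10 3 6 || echo "raid10 cannot be kept on 3 disks, raid1 can"
```

So with 4x6TB both profiles give about 12TB. The difference only shows up when a drive dies: raid1 still fits on the three survivors, raid10 does not.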

Sixth,

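btrfs allows you to change a “profile” (which is sort of a RAID level; possible profiles are: single, dup, raid0, raid1, raid5, raid6, raid10) on the fly (unlike zfs). Also, in btrfs you can have a different profile for data than for metadata and system chunks. Keep in mind that profiles apply to a whole pool, not to individual folders, so a different profile per kind of data means a separate pool for each.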

So here is where btrfs shines, in your setup you could have:

metadata + system = raid1

sensitive data = raid1

senseless data = single

media data = raid0

and with that you can save precious space. Honestly, I have never run different levels myself; I just pop more storage in or remove data that I don’t care about. Anyway, read up on btrfs, and don’t ignore the warnings … those are meant pretty seriously!
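Changing a profile on the fly comes down to a balance with convert filters on the mounted pool. The sketch below only builds and prints the command so you can look at it before running it as root. `balance_cmd` and `is_valid_profile` are helper names I made up, and `/mnt2/mypool` is an assumed mount point, so use the path of your own pool.

```shell
#!/bin/sh
# Profiles listed in the post.
VALID_PROFILES="single dup raid0 raid1 raid5 raid6 raid10"

# Return 0 if the given name is one of the known profiles.
is_valid_profile() {
    for p in $VALID_PROFILES; do
        [ "$p" = "$1" ] && return 0
    done
    return 1
}

# balance_cmd DATA_PROFILE METADATA_PROFILE MOUNTPOINT
# Prints the btrfs command that converts data and metadata chunks.
balance_cmd() {
    is_valid_profile "$1" || { echo "bad data profile: $1" >&2; return 1; }
    is_valid_profile "$2" || { echo "bad metadata profile: $2" >&2; return 1; }
    echo "btrfs balance start -dconvert=$1 -mconvert=$2 $3"
}

# Example: raid10 for data, raid1 for metadata on a hypothetical pool.
balance_cmd raid10 raid1 /mnt2/mypool
```

The pool stays mounted and usable while the balance runs, it is just slower until the conversion finishes.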

e5v3: when I need to run another machine I just buy another DL180 G6 with two Xeon 5520s and 24GB of RAM for 90 pounds and roll with it :)))))


(serial option in Proxmox dashboard is a really small one, just need time to check it after some major Rockstor fixes)
