@PhillVS First off: welcome to the Rockstor community forum.

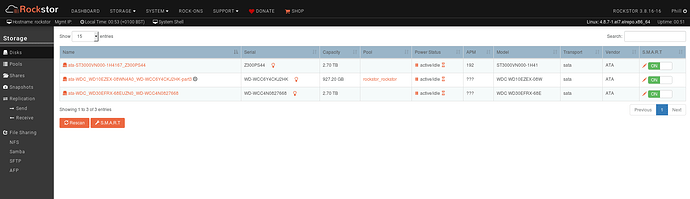

Yes, this does look very much like your drives are blank. So, as @paulsmyth states, if you suspect they contain data you should take no further Disk or Pool action within the Rockstor UI. This is how blank drives show up, so Rockstor will treat them as blank, which is obviously not what you believe them to be.

Now on to whether they are actually blank, and whether we are looking at a Rockstor bug. That would be good to know, of course, and your help in diagnosing it would be much appreciated.

Could you ssh into your Rockstor box as the root user (or, if you use the ‘System - System Shell’, log in as admin first and then run ‘su root’), then execute the following commands and paste their output here, so that forum members can assess what state your drives are actually in:

```
btrfs fi show
```

and

```
lsblk -P -o NAME,MODEL,SERIAL,SIZE,TRAN,VENDOR,HCTL,TYPE,FSTYPE,LABEL,UUID
```

If you precede and follow your pasted output with ``` on lines of their own, the output will be much easier to read (as I have done in this post for the commands).

These are the commands that Rockstor uses internally to assess drives and their partitions / filesystems.

All I can think of initially is that your drives are using some kind of LVM arrangement, which is not something Rockstor knows anything about.
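For anyone reading along, here is a rough way to summarise what `lsblk` reports as the filesystem signature on each device, including whether any of them are LVM physical volumes (these show up with an FSTYPE of `LVM2_member`). This is only a sketch: `summarise_fstypes` is a hypothetical helper, not something Rockstor ships, and it assumes the util-linux `lsblk` with `-P` (KEY="value" pairs) output. Note that a whole disk carrying a partition table also reports an empty FSTYPE, so check its partitions too before concluding it is blank.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rockstor): summarise the filesystem
# signature lsblk reports for each block device.
# Expects `lsblk -P -o NAME,FSTYPE` style lines (KEY="value" pairs) on stdin.
summarise_fstypes() {
  while IFS= read -r line; do
    # NAME is the first field, so anchor on the start of the line.
    name=$(printf '%s\n' "$line" | sed -n 's/^NAME="\([^"]*\)".*/\1/p')
    fstype=$(printf '%s\n' "$line" | sed -n 's/.*FSTYPE="\([^"]*\)".*/\1/p')
    case "$fstype" in
      "")          echo "$name: no filesystem signature reported" ;;
      LVM2_member) echo "$name: LVM physical volume" ;;
      *)           echo "$name: $fstype" ;;
    esac
  done
}

# Usage on a live system (as root, so all devices are visible):
#   lsblk -P -o NAME,FSTYPE | summarise_fstypes
```

The raw `lsblk` output requested above is still what we need posted here; this is just a quick way to eyeball it.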

You could then see if the outputs change at all after a:

```
btrfs dev scan
```

Thanks, and let's see what's going on.

As @paulsmyth has already covered, it is not advisable to make any OS or hardware changes involving devices that hold your only copy of the data. OS installs are particularly risky in this sense.

For the time being, stay away from all Disk, Pool, Share, and Export parts of the UI and don’t attempt to create anything new: from Rockstor’s point of view those drives are blank, so it sees no risk in any further action.

Hope we can help here, and thanks for reporting your findings. Once we have the output of those commands we can better assess what’s going on.

Thanks.