@dont Well done again. But this one is a little more curious, as that kernel is available in the common repo to both stable and testing subscribed systems, so the switch should not have been necessary.

Also, switching from stable to testing, although fine in the CentOS variant, will soon be ill-advised on our openSUSE variant, especially when followed by the ‘yum update rockstor’ command. That will move folks to what is about to become pretty experimental on the openSUSE side as we start to work on our technical debt, i.e. the Python 2 to 3 move, once we have our “Built on openSUSE” stable rpms out.

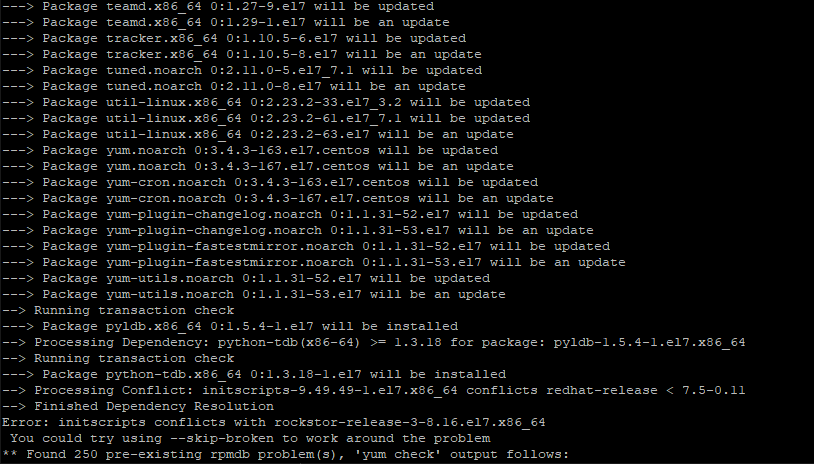

So this again points towards a corrupted rpm db scenario, as a normal stable or testing channel subscription also has access to a legacy repo that contains this kernel. The fact that your system did not pick this up suggests it failed to get access to that repo. Hence the ‘repair’ potentially having corner cases such as these.

All down to our super old installer and us relying on progressively larger updates to get folks updated. But this should all change very shortly hopefully. We just have to get to feature parity and we can release a new ‘Built on openSUSE’ installer and get folks fully updated from the get-go.

Thanks again for sharing your experience. I just wanted to establish that this is not a normal experience and you definitely had rpm db issues there. It is good to work through them, but it is often easier and quicker to get a clean install/update from the start. However, the challenge of the fix is attractive.

So a normal Stable subscription install has the following repos:

    yum repolist
    repo id            repo name                                          status
    Rockstor-Stable    Subscription channel for stable updates                72
    base/x86_64        CentOS-7 - Base                                    10,070
    epel/x86_64        Extra Packages for Enterprise Linux 7 - x86_64     13,250
    extras/x86_64      CentOS-7 - Extras                                     392
    rockstor           Rockstor 3 - x86_64                                    55
    updates/x86_64     CentOS-7 - Updates                                    240
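As a quick way to spot a system that has lost access to one of these repos, the listing can be checked mechanically. The following is only a minimal sketch: `missing_repos` is a hypothetical helper name, and it assumes the repo id sits in the first column of the `yum repolist` output, optionally followed by `/arch`.

```shell
#!/bin/sh
# Hypothetical helper (not part of yum or Rockstor): reads `yum repolist`
# output on stdin and prints every expected repo id that is NOT listed.
missing_repos() {
    listing=$(cat)
    for repo in "$@"; do
        # Take the first column, drop any leading status marker (! or *)
        # and any /arch suffix, then look for an exact match.
        if ! printf '%s\n' "$listing" \
            | awk '{print $1}' \
            | sed 's|^[!*]*||; s|/.*||' \
            | grep -qxF -- "$repo"; then
            printf '%s\n' "$repo"
        fi
    done
}

# Usage on a live system, with repo ids taken from the listing above:
#   yum repolist | missing_repos Rockstor-Stable rockstor base updates
```

No output means all the named repos were found; any repo id printed is one the system could not see.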

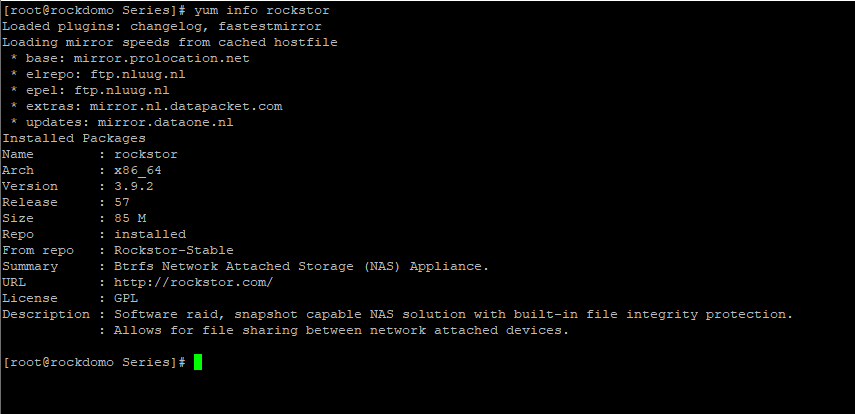

And we see the 4.12 kernel available via the common ‘rockstor’ repo via:

    yum repo-pkgs rockstor list
    ...

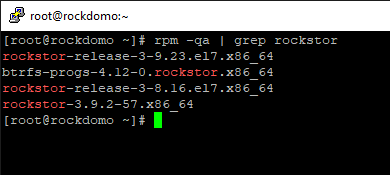

    rockstor-release.x86_64          3-9.23.el7               @rockstor
    Available Packages
    docker-engine.x86_64             1.9.1-1.el7.centos       rockstor
    docker-engine-selinux.noarch     1.9.1-1.el7.centos       rockstor
    kernel-ml-devel.x86_64           4.12.4-1.el7.elrepo      rockstor
    python-devel.x86_64              2.7.5-18.el7_1.1         rockstor
    rockstor-logos.noarch            1.0.0-3.fc19             rockstor

Hence no requirement to switch to testing to pick up the 4.12 kernel.
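To pull just the kernel entries out of that package listing, something like the following may help. It is a sketch only: `kernel_ml_versions` is a hypothetical name, and it assumes the usual three-column layout (package, version, repo) shown above.

```shell
#!/bin/sh
# Hypothetical filter: reads a yum package listing on stdin and prints the
# name and version of every package whose name starts with "kernel-ml".
kernel_ml_versions() {
    awk '$1 ~ /^kernel-ml/ {print $1, $2}'
}

# Usage on a live system:
#   yum repo-pkgs rockstor list | kernel_ml_versions
```

If this prints nothing on a system subscribed to either channel, that would be consistent with the ‘rockstor’ repo not being reachable.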

Just wanted to clear that up, as otherwise folks experiencing similar issues in the future may skim-read this thread and end up jumping to Testing channel rpms which, soon, will be far more experimental than stable. For our CentOS variants, though, this is entirely safe as we no longer publish testing rpms on that side (the last ones are now 2.5 years old).

A scenario that has worked for me in the past to rebuild a very poorly CentOS based rpm db is as follows:

    # If our db becomes corrupt we can initiate a rebuild via:
    mkdir /var/lib/rpm/backup
    cp -a /var/lib/rpm/__db* /var/lib/rpm/backup/
    rm -f /var/lib/rpm/__db.[0-9][0-9]*
    rpm --quiet -qa
    rpm --rebuilddb
    yum clean all
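For anyone who would rather review the steps before running them, here is a rough wrapper around the same commands. It is a sketch under stated assumptions, not a tested repair tool: `RPM_DB_DIR`, `DRY_RUN`, `run` and `rebuild_rpm_db` are names made up for this example, and it stops at the first failing step rather than carrying on as the manual commands would.

```shell
#!/bin/sh
# Sketch only. RPM_DB_DIR and DRY_RUN are hypothetical knobs introduced
# here; they are not rpm or yum settings.
RPM_DB_DIR="${RPM_DB_DIR:-/var/lib/rpm}"
DRY_RUN="${DRY_RUN:-1}"   # default: only print the commands

# Either print the command (dry run) or execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

rebuild_rpm_db() {
    backup="$RPM_DB_DIR/backup"
    # -p so that a re-run does not fail on an existing backup directory.
    run mkdir -p "$backup" || return 1
    # Globs are expanded by the shell before run() sees them; if nothing
    # matches, the pattern is passed through literally.
    run cp -a "$RPM_DB_DIR"/__db* "$backup"/ || return 1
    run rm -f "$RPM_DB_DIR"/__db.[0-9][0-9]* || return 1
    run rpm --quiet -qa || return 1
    run rpm --rebuilddb || return 1
    run yum clean all
}

# Usage: call rebuild_rpm_db to preview the commands; set DRY_RUN=0 (as
# root) before calling it to actually execute them.
```

Defaulting to a dry run is a deliberate choice here, since these commands delete files under the rpm db directory.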

And a good refresh procedure is as follows:

    yum clean expire-cache
    yum check-update
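When scripting that refresh, note that `yum check-update` reports its result through the exit status: 0 when nothing is pending, 100 when updates are available, and 1 on error. A small sketch of reading it follows; `interpret_check_update` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: turn the exit status of `yum check-update` into a
# short human-readable message.
interpret_check_update() {
    case "$1" in
        0)   echo "up to date" ;;
        100) echo "updates available" ;;
        *)   echo "error" ;;
    esac
}

# Usage on a live system:
#   yum clean expire-cache
#   yum check-update; interpret_check_update $?
```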

However, for most folks a simple re-install is best, as it can end up being simpler and often faster. Our new installer, currently in private alpha testing, takes under 5 minutes from blank-system power-on to first Rockstor Web-UI on 5-year-old hardware, so I think sticking to as much system / data separation as possible is good in this regard. But the facility of system drive shares is also rather nice. Always a balance I guess.

Again thanks for sharing your findings.
