I’ve run FreeNAS for several years, and it has been a pain. A few months ago I started looking for a different solution and came across Rockstor. With the FreeNAS server dying, my need to migrate became more urgent.

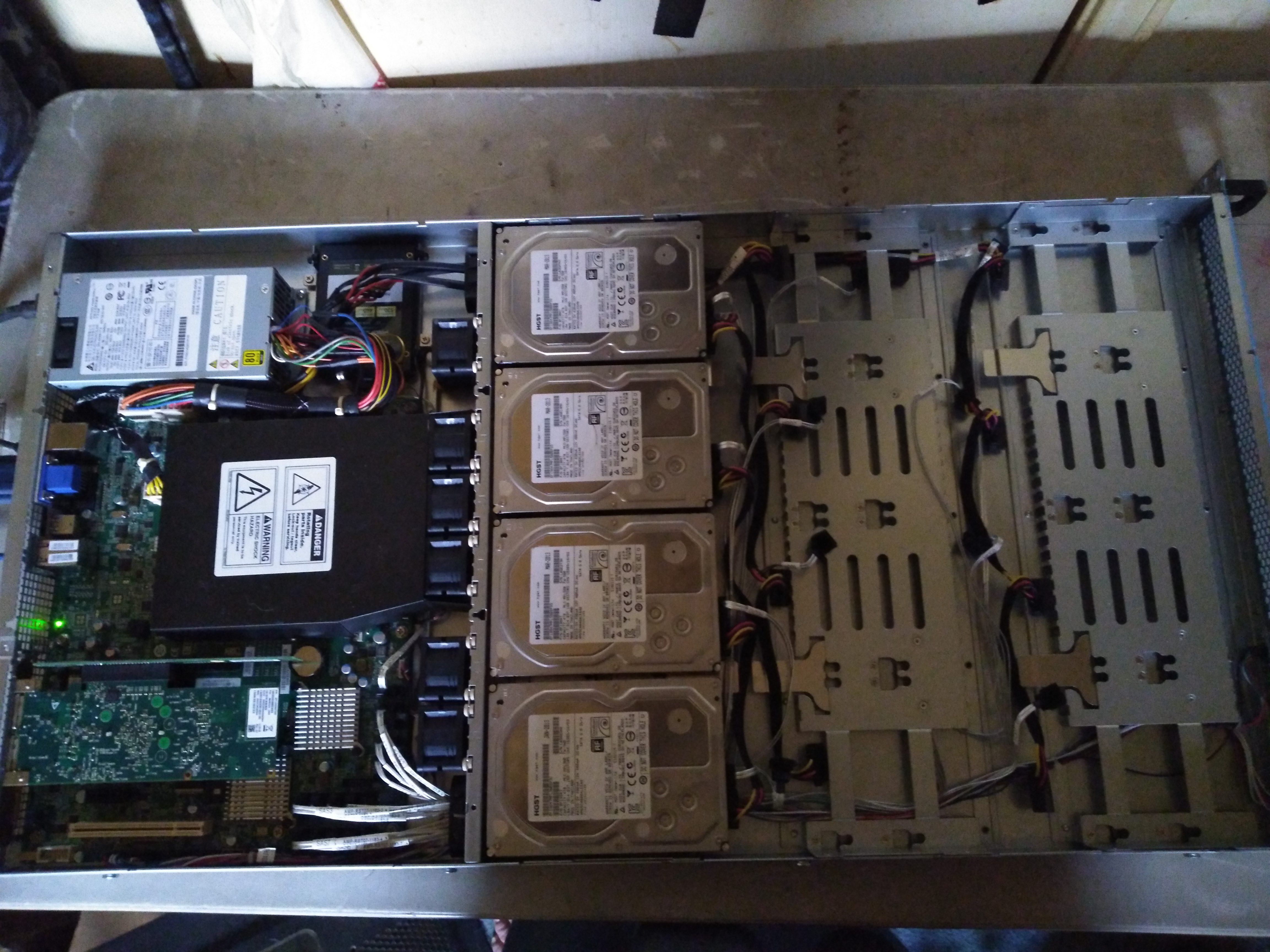

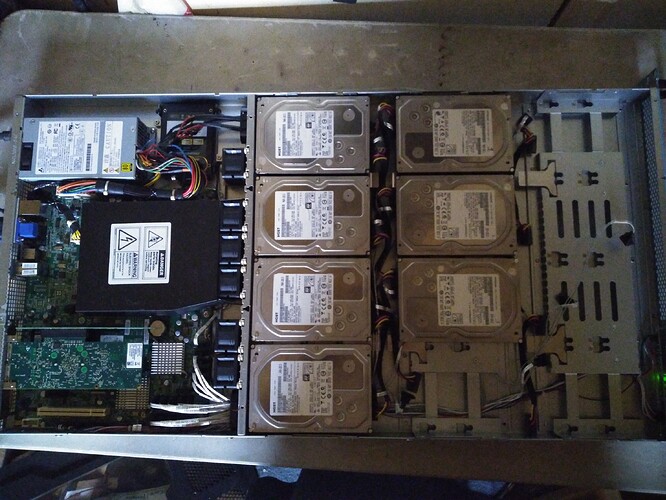

First, I ordered a new server, a 250 GB SSD boot drive, four 4 TB disks, and a 40 Gb InfiniBand card.

I built the Rockstor ISO (4.0.4) from openSUSE, deployed it to the server, and configured the four 4 TB disks as a single RAID0 array.

Once it was set up, I installed the ZFS drivers on the system.

I shut down the system and installed the first three ZFS disks from the FreeNAS server.

From the command line I ran

`zpool import`

to list the ZFS pool on the drives. This was followed by

`zpool import -f <PoolName>`

which imported the pool into the system (`-f` forces the import, since the pool was last in use on the FreeNAS server). After that I ran

`zfs mount -a`

to have ZFS mount the pool and all of its datasets (the ZFS term for what Rockstor calls a share).
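
For anyone who wants to script this step, here is a minimal Python sketch that wraps the three commands above with `subprocess`. It assumes the ZFS tools are installed and on the PATH and that it runs as root; the function name `import_zfs_pool` and the pool name `tank` are hypothetical. By default it is a dry run and only returns the commands it would execute.

```python
import subprocess

def import_zfs_pool(pool_name, dry_run=True):
    """Return (and optionally run) the commands that bring a FreeNAS pool online.

    pool_name is the name `zpool import` reports; with dry_run=True nothing
    is executed, so the disks are not touched.
    """
    commands = [
        ["zpool", "import"],                   # list pools found on the attached disks
        ["zpool", "import", "-f", pool_name],  # force import; pool was last used on FreeNAS
        ["zfs", "mount", "-a"],                # mount the pool and all of its datasets
    ]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError at the first failing command
            subprocess.run(cmd, check=True)
    return commands

# Dry run with a hypothetical pool name: print what would be executed
for cmd in import_zfs_pool("tank"):
    print(" ".join(cmd))
```

Running the first command by hand is still worthwhile, since its output is where the real pool name comes from.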

I then went into Rockstor and created a share (on the 4 TB drives) for each ZFS dataset.

Once the shares were created, I copied the data from each ZFS dataset to its Rockstor share. This served two purposes: it got the data onto the Rockstor shares, and it put the 4 TB drives through their paces so I could make sure they were not going to fail on me.
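
One way to confirm that a copy like this arrived intact is to compare checksums of the source dataset and the destination share. The Python sketch below is an illustration, not the procedure used here: it hashes every regular file under each tree with SHA-256 and reports files that are missing or differ. The function names and any paths you pass in are hypothetical.

```python
import hashlib
import os

def tree_checksums(root):
    """Map each regular file's path (relative to root) to its SHA-256 digest."""
    sums = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # compare file contents only; symlinks are skipped
            digest = hashlib.sha256()
            with open(path, "rb") as f:
                # read in 1 MiB chunks so large media files don't fill memory
                for chunk in iter(lambda: f.read(1 << 20), b""):
                    digest.update(chunk)
            sums[os.path.relpath(path, root)] = digest.hexdigest()
    return sums

def compare_trees(src, dst):
    """Return (missing_in_dst, mismatched) as sorted lists of relative paths."""
    a, b = tree_checksums(src), tree_checksums(dst)
    missing = sorted(set(a) - set(b))
    mismatched = sorted(p for p in a.keys() & b.keys() if a[p] != b[p])
    return missing, mismatched
```

For example, `compare_trees("/path/to/zfs/dataset", "/path/to/rockstor/share")` (placeholder paths) should return two empty lists when the copy is complete.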

After the data was migrated, I added the next three drives and repeated the procedure. At that point all of the data had been moved to Rockstor’s Btrfs pool. Just an FYI, this took three days of copying. ZFS uses memory for caching, so the copies were slow, though that was not a problem.

Once the data was in place, I put the USB key back in the system, did a fresh install of Rockstor, and imported the pool from the initial four-disk array. Note: up to this point I had not wiped the old disks, because I wanted to make sure everything worked. Once I was satisfied it was all good, I began wiping the old disks and creating new pools and shares.

Moving data around in Rockstor

In my opinion, this is one of Rockstor’s shortcomings: the web interface offers no way to rename a pool, rename a share, or move a share to a different pool. So I did it from the command line.

The first step was to rename the pool. I had named the initial pool “Space”, and that needed to change. The command is

`btrfs filesystem label <mountpoint> <newlabel>`
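
As a hedged illustration, the Python sketch below wraps the same command. Run without a new label, `btrfs filesystem label <mountpoint>` prints the current label, so the sketch shows the old name before changing it. The function name, the `/mnt2/Space` mountpoint, and the `NewName` label are assumptions for the example; by default it is a dry run that only returns the commands.

```python
import subprocess

def relabel_pool(mountpoint, new_label, dry_run=True):
    """Show and then change the label of a mounted Btrfs filesystem."""
    show = ["btrfs", "filesystem", "label", mountpoint]               # prints current label
    change = ["btrfs", "filesystem", "label", mountpoint, new_label]  # sets the new label
    if not dry_run:
        current = subprocess.run(show, check=True, capture_output=True, text=True)
        print(f"current label: {current.stdout.strip()}")
        subprocess.run(change, check=True)
    return [show, change]

# Dry run with a hypothetical mountpoint and label
for cmd in relabel_pool("/mnt2/Space", "NewName"):
    print(" ".join(cmd))
```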

I then moved on to wiping the first set of old disks and creating a new pool.

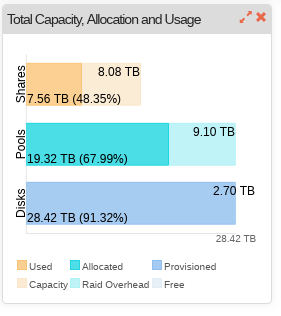

I created the following pool layout:

- Cloud (3 x 3 TB drives in a RAID 5 array)

- Home-Dirs (2 x 3 TB drives in a RAID 1 array)

- Media (4 x 4 TB drives in a RAID 5 array)

- Unused (1 x 3 TB drive)
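
To see what this layout gives in usable space, here is a rough Python sketch of the standard capacity arithmetic for equal-size drives: RAID 5 gives up one drive’s worth of space to parity, and Btrfs RAID 1 keeps two copies of every block, so it halves the raw total. The figures are in drive-label (decimal) terabytes and ignore filesystem overhead. The function and profile names are made up for the example.

```python
def usable_tb(level, drives, size_tb):
    """Approximate usable TB for equal-size drives, before filesystem overhead."""
    if level == "raid0":
        return drives * size_tb        # striping only, no redundancy
    if level == "raid1":
        return drives * size_tb / 2    # Btrfs raid1 stores two copies of every block
    if level == "raid5":
        return (drives - 1) * size_tb  # one drive's worth of space holds parity
    raise ValueError(f"unsupported profile: {level}")

# Pool layout from the list above: (profile, number of drives, drive size in TB)
layout = {
    "Cloud": ("raid5", 3, 3),
    "Home-Dirs": ("raid1", 2, 3),
    "Media": ("raid5", 4, 4),
}

for pool, (level, n, size) in layout.items():
    print(f"{pool}: ~{usable_tb(level, n, size):g} TB usable")
# Cloud: ~6 TB usable
# Home-Dirs: ~3 TB usable
# Media: ~12 TB usable
```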

Moving the shares was a bit different. You can rename a share with the `mv` command within the same pool, but you cannot move it to a different pool that way, because each pool is a separate Btrfs filesystem and a share is a subvolume inside it. To work around this, I first renamed each existing share with an `-old` suffix within its existing pool. I then created a new share in the new pool and used `cp -a` to copy the data from the old share to the new one. This put the data where I wanted it. After the first copy, I ran a scrub on the new pool to make sure the data was good, after which I went ahead and migrated the rest of the data to its new home.
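
The copy-then-scrub step can be sketched in Python as follows. This is an approximation, not what was run here: `shutil.copytree` with `symlinks=True` copies links as links and, through its default `shutil.copy2`, keeps permission bits and timestamps, which is roughly `cp -a src/. dst/`. Unlike `cp -a` run as root, it does not preserve file ownership. `dirs_exist_ok` needs Python 3.8 or later. `btrfs scrub start -B` keeps the scrub in the foreground and prints statistics when it finishes. The function names and paths are hypothetical, and the scrub helper is a dry run by default.

```python
import shutil
import subprocess

def copy_share(src, dst):
    """Copy everything under src into the already-created share directory dst."""
    # dirs_exist_ok=True lets copytree fill an existing (empty) share directory
    return shutil.copytree(src, dst, symlinks=True, dirs_exist_ok=True)

def scrub_pool(pool_mountpoint, dry_run=True):
    """Return (and optionally run) a foreground scrub of a Btrfs pool."""
    cmd = ["btrfs", "scrub", "start", "-B", pool_mountpoint]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd
```

A session might look like `copy_share("/path/to/share-old", "/path/to/new-share")` followed by `scrub_pool("/path/to/new-pool", dry_run=False)`, with placeholder paths standing in for the real mountpoints.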

When all was said and done, everything was in place and working well. (Yes, that is 32 TB of disk space!)

This is the point at which I buttoned up the server and moved it to the closet with my blade server. It is also when I found out the 40 Gb InfiniBand card was bad, so I have a new one on order.

So, this Rockstor box is the backend NAS for my cloud. The cloud is a four-blade server in which each blade has 24 cores and 128 GB of RAM. It uses libvirt and QEMU for virtualization, and on top of that I run 20 Docker VMs in a Docker Swarm.

I did notice one problem with the deployment. There are 68 containers in the cloud, and one of them is a logging system. The logging data seemed to overload the Rockstor NAS, causing excessive I/O wait on drive access, even though the log system only processes about 700 GB a day. While tracking the issue down, I found the network (1 Gb) was only running at 26 MB/s, well below the roughly 125 MB/s a 1 Gb link can carry. The drives seemed a bit slow, and these were the same drives that had previously been in a ZFS RAID 5 array. The only explanation I can come up with is that Btrfs overhead was the bottleneck. This is not a real problem: I have a second NAS on the 40 Gb InfiniBand network that runs all-SSD storage, and I can migrate the log services over later.
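
A quick back-of-the-envelope check of those numbers in Python, using decimal units (1 GB = 1,000 MB) and assuming the 700 GB is spread evenly over the day; real log traffic is burstier, so peaks will be higher than this average.

```python
SECONDS_PER_DAY = 24 * 60 * 60

log_gb_per_day = 700   # from the text: about 700 GB of logs per day
observed_mb_s = 26     # from the text: measured network throughput
link_mb_s = 1_000 / 8  # a 1 Gb/s link is 125 MB/s before protocol overhead

average_log_mb_s = log_gb_per_day * 1_000 / SECONDS_PER_DAY
print(f"average log rate: {average_log_mb_s:.1f} MB/s")            # 8.1 MB/s
print(f"observed share of link: {observed_mb_s / link_mb_s:.0%}")  # 21%
```

Both figures point the same way as the text: neither the average log volume nor the measured throughput comes close to saturating the 1 Gb link, which leaves the storage side as the likely bottleneck.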