I do actually see the lines of my SMB custom configuration, although they are spread out over multiple JSON objects (I'm not sure of the proper term, but each line of the same config sits in its own {} block).

{"model": "storageadmin.sambashare", "pk": 1, "fields": {"share": 39, "path": "/mnt2/scanner", "comment": "SMB share for scanner", "browsable": "yes", "read_only": "no", "guest_ok": "no", "shadow_copy": false, "time_machine": false, "snapshot_prefix": null}}, {"model": "storageadmin.sambashare", "pk": 2, "fields": {"share": 30, "path": "/mnt2/old-photos", "comment": "Samba-Export", "browsable": "yes", "read_only": "no", "guest_ok": "no", "shadow_copy": false, "time_machine": false, "snapshot_prefix": null}}, {"model": "storageadmin.sambacustomconfig", "pk": 1, "fields": {"smb_share": 1, "custom_config": "valid users = @scanner"}}, {"model": "storageadmin.sambacustomconfig", "pk": 3, "fields": {"smb_share": 1, "custom_config": "directory mask = 0775"}}, {"model": "storageadmin.sambacustomconfig", "pk": 4, "fields": {"smb_share": 1, "custom_config": "force group = scanner"}}, {"model": "storageadmin.sambacustomconfig", "pk": 6, "fields": {"smb_share": 2, "custom_config": "valid users = @family"}}, {"model": "storageadmin.sambacustomconfig", "pk": 10, "fields": {"smb_share": 2, "custom_config": "force user = admin"}}, {"model": "storageadmin.sambacustomconfig", "pk": 11, "fields": {"smb_share": 2, "custom_config": "force group = users"}}, {"model": "storageadmin.sambacustomconfig", "pk": 12, "fields": {"smb_share": 1, "custom_config": "create mask = 0664"}}, {"model": "storageadmin.sambacustomconfig", "pk": 15, "fields": {"smb_share": 2, "custom_config": "directory mask = 0755"}}, {"model": "storageadmin.sambacustomconfig", "pk": 16, "fields": {"smb_share": 2, "custom_config": "create mask = 0644"}},

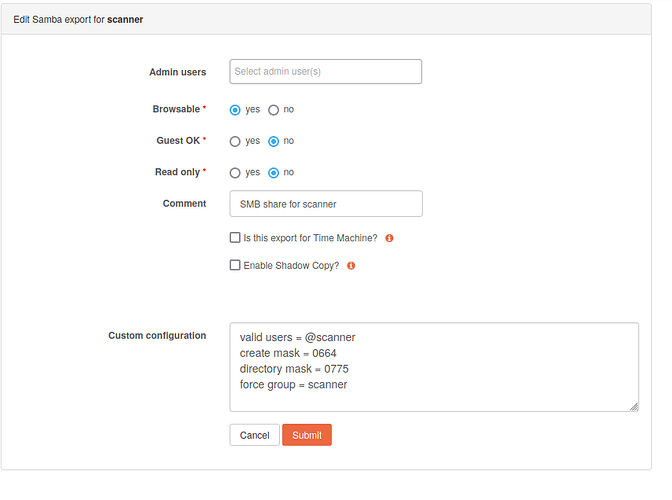

For reference, this is a rockstor screenshot of the “scanner” share:

Interestingly enough, there is an “editable” field in the JSON file which is set to “rw” …

And the Host String was restored correctly to 10.71.128.0/24 in Rockstor.

{"model": "storageadmin.nfsexportgroup", "pk": 2, "fields": {"host_str": "10.71.128.0/24", "editable": "rw", "syncable": "async", "mount_security": "insecure", "nohide": false, "enabled": true, "admin_host": null}},

Note: the JSON file is exported from my old Rockstor 4.6.1 installation.