@Flox Re:

Yes, shellinabox does have a personality of its own, but it's good to know how it's affecting us in this case. We likely want to update our docs on this front. I think we should try to avoid reaching into shellinabox, at least for the time being.

There may be a difference between using `su` to root within shellinabox and using the following option:

Shell connection service: SSH

But it seems we have an as-yet unreported issue: this option fails to allow login. This is likely due to some recent OpenSSH changes.

```
buildvm login: root
command-line line 0: Unsupported option "rhostsrsaauthentication"
command-line line 0: Unsupported option "rsaauthentication"
Session closed.
```

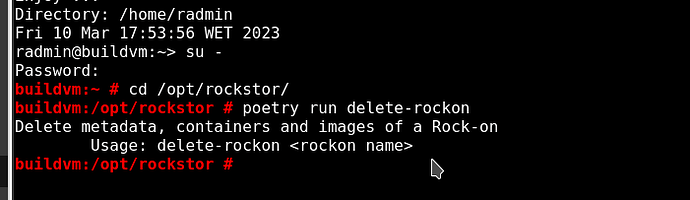

I think what is required here, if shellinabox is being used, is to request the target user's environment:

```
Directory: /home/radmin
Fri 10 Mar 17:49:01 WET 2023
radmin@buildvm:~> su -
Password:
buildvm:~ # echo $PATH
/sbin:/usr/sbin:/usr/local/sbin:/root/.local/bin:/root/bin:/usr/local/bin:/usr/bin:/bin:/usr/lib/mit/bin:/usr/lib/mit/sbin
```

Note the use of `-` after `su` to request the target user's environment (a login shell), rather than just their privileges:

Normal Web-UI user (radmin) login (shellinabox defaults), then `su -` to the root user with root's environment.

And so in the above we get our root user's 'special' `/root/.local/bin` directory on the PATH, and hence have access to the poetry binary.
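As a quick way to check which situation you are in, here is a minimal POSIX shell sketch. The `on_path` function is a hypothetical helper for illustration, not part of Rockstor or shellinabox. It assumes `HOME` is `/root` after switching, which util-linux `su` sets by default with or without `-`, and reports whether `$HOME/.local/bin` is on the current PATH and whether `poetry` resolves.

```shell
#!/bin/sh
# Hypothetical helper: succeed (status 0) if DIR is an exact entry in $PATH.
# Wrapping PATH in colons avoids partial matches such as ".../bin2".
on_path() {
    case ":${PATH}:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# After "su -" (login shell) root's profile is read, so ~/.local/bin is
# expected here; after a plain "su" it typically is not.
if on_path "$HOME/.local/bin"; then
    echo "$HOME/.local/bin is on PATH"
else
    echo "$HOME/.local/bin is NOT on PATH"
fi

# command -v prints the resolved path of poetry, or nothing if not found.
if command -v poetry >/dev/null 2>&1; then
    echo "poetry found at: $(command -v poetry)"
else
    echo "poetry not found on PATH"
fi
```

Running it once after a plain `su` and once after `su -` should show the difference; on the buildvm session above, only the `su -` case would be expected to list `/root/.local/bin`.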

[EDIT]

From `man su` (not run on a Rockstor instance: as we are JeOS-based, man pages are not available there):

> `-`, `-l`, `--login`: Start the shell as a login shell with an environment similar to a real login:

Hope that helps.