I am running rockstor 3.8.16-8. This morning I restarted the system and I noticed that NFS did not work afterwards. The service is apparently running (nfsd is working) but none of the clients can mount the shares.

Attempting to diagnose, I looked at dmesg and saw a bunch of:

BTRFS error (device sda5): parent transid verify failed on 1721409388544 wanted 19188 found 83121

So I immediately checked the drives:

btrfs device stats /dev/sda5

[/dev/sda5].write_io_errs 0

[/dev/sda5].read_io_errs 0

[/dev/sda5].flush_io_errs 0

[/dev/sda5].corruption_errs 0

[/dev/sda5].generation_errs 0

They all came up the same, no errors in SMART.

After searching for the transid error online, I’m finding horror stories, but they all seem to be saying that the filesystem would not mount. Here it appears that it is mounting as SAMBA is working just fine and I can apparently get to all my files through it.

My setup is a little unusual, I have two 3TB disks that are divided into two pools:

btrfs fi show

Label: 'Primary' uuid: 21e09dd8-a54d-49ec-95cb-93fdd94f0c17

Total devices 2 FS bytes used 943.67GiB

devid 1 size 2.73TiB used 946.06GiB path /dev/sdb

devid 2 size 2.70TiB used 946.06GiB path /dev/sda5

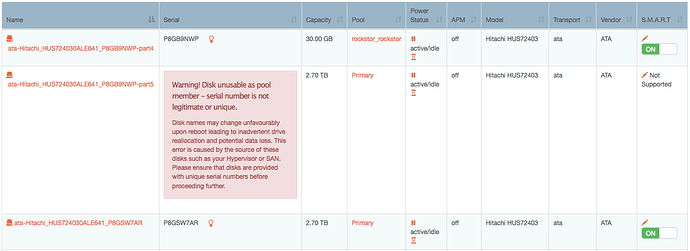

The first one is 30GB for the rockstor system. I then have a Raid1 setup with the second partition on sda and the entirety of sdb. sda5 is this 2.7TB “disk” that shows up in the screenshot above. I had to manually create this setup, it was done on the command line by simply adding sda5 to a pool created on sdb in the rockstor gui, then changing the type to raid1 and rebalancing.

Also I’m getting warnings on an iMac that the identity of the time machine disk has changed. That share is on a different pool with completely separate drives, however, so I’m not sure if its related.

Any thoughts? I don’t have enough experience with BTRFS to feel comfortable resolving this myself, but I was thinking of simply deleting sda5 from the pool, reformatting, and re-adding it. Has anyone seen this before? Is sda5 actually mounting or is all my data ending up on sdb?