@paulsmyth I have spun up a new Rockstor VM in ESXi and transferred the disks across. No data lost, all shares restored and SAMBA now working. But to be completely honest AFP shares are still not working (to my El Capitan mac); I still can’t delete the old Rock-On share; I haven’t tried using the Rock-On service (on the new instance) and I haven’t tried using NUT again yet.

So no data lost and all data accessible via SAMBA, which was my main worry. But this time I am going to play/test on another virtual machine before I start screwing up my primary storage server.

My setup

I have two HP microservers (a Gen8 and an N40L) both running ESXi 6.0, both with 16GB of memory, both full of SSDs/HDDs.

The Gen8 is my primary server and runs Rockstor for NAS storage, Untangle for network gateway/protection and hopefully a NUT server. This machine has a Samsung 250GB Evo as its boot disk (connected to the ODD SATA connection), which holds the VMs and ISO images, and also has 4x3TB WD Red drives for its storage array.

The N40L is my old machine and is intended to just run Rockstor and act as a replication target. It boots ESXi from an internal USB stick, has a 250GB HDD for OSs and ISOs and then three other disks of various sizes for the storage array. This machine also has an Icy Dock 4 disk cage, but I haven’t plumbed it in yet.

The move

The move across to a new Rockstor VM was simple. I’m not an ESXi expert, so I was worried about removing disks from the “broken” instance until I had a new instance working.

On the Gen8 I had previously mapped the data drives to ESXi using Raw Device Mapping. This was partly to increase the access speed (although there is debate about the performance benefits), but mostly to avoid virtualising the storage pool. There’s a lot written about why virtualising ZFS is a very bad idea and I assumed the same would be true for virtualising BTRFS. Raw device mapping has the added benefit of passing the SMART data through to Rockstor, so you can get alerts if one of your disks is failing.

Anyway, with the data disks still attached to the broken instance I spun up a new 3.8-11 instance and attached the disks to it as well. In the ESXi General settings I added disk.EnableUUID=TRUE to the VMX file to allow unique disk names. Then I booted the VM and installed Rockstor as normal.
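For anyone who would rather script the VMX change than click through the ESXi settings, here is a minimal sketch in Python. It assumes the usual `key = "value"` layout of a `.vmx` file. The function name `ensure_enable_uuid` is made up for illustration and is not part of any VMware tooling.

```python
import re

# Matches an existing disk.EnableUUID line (key match is case-insensitive).
# [ \t]* is used instead of \s* so that preceding blank lines are not swallowed.
_ENABLE_UUID = re.compile(r"^[ \t]*disk\.EnableUUID[ \t]*=.*$",
                          re.IGNORECASE | re.MULTILINE)


def ensure_enable_uuid(vmx_text: str) -> str:
    """Return the VMX text with disk.EnableUUID set to "TRUE".

    Any existing disk.EnableUUID line is rewritten; otherwise the
    setting is appended as a new line at the end.
    """
    setting = 'disk.EnableUUID = "TRUE"'
    if _ENABLE_UUID.search(vmx_text):
        return _ENABLE_UUID.sub(setting, vmx_text)
    # Add a newline first if the file does not already end with one.
    separator = "" if (not vmx_text or vmx_text.endswith("\n")) else "\n"
    return vmx_text + separator + setting + "\n"
```

The function only works on text you pass in, so you would read the VMX file, pass its contents through, and write the result back yourself, ideally with the VM powered off and a backup copy of the file kept.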

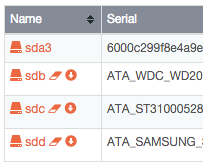

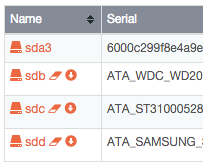

When I logged in to Rockstor for the first time and went to the Disks section there were a couple of icons next to my data drives. The first icon was to wipe all data and start fresh, the second was to import pools, shares and snapshots. These icons are scarily close together:

I clicked on import and my BTRFS pool and shares were imported.

Initially the data usage of the shares was still 0KB, which was worrying. I then exported the shares as SAMBA and checked to see if the files were actually present - and they were: phew! The data usage info was populated after a while, so I guess the system had some housekeeping to do before it was fully sorted.

Re deleting old Rock-On share

When I was first playing around with Rock-Ons I created a share (called “Rock-Ons”), used it for a bit, but then decided to use lowercase share names for system stuff and Title Case share names for shares that I would export. So I created a new share (called “rockons”) and reconfigured the Rock-On service to use the new share. When trying to delete the original share I get the following error…

The error:

Failed to delete the Share(Rock-Ons). Error from the OS: Error running a command. cmd = [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. rc = 1. stdout = [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’]. stderr = [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

From the log:

[15/Feb/2016 12:21:48] ERROR [system.osi:87] non-zero code(1) returned by command: [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. output: [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’] error: [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

[15/Feb/2016 12:21:48] ERROR [storageadmin.views.share:285] Error running a command. cmd = [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. rc = 1. stdout = [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’]. stderr = [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

Traceback (most recent call last):

File “/opt/rockstor/src/rockstor/storageadmin/views/share.py”, line 283, in delete

remove_share(share.pool, share.subvol_name, share.pqgroup)

File “/opt/rockstor/src/rockstor/fs/btrfs.py”, line 396, in remove_share

run_command(delete_cmd, log=True)

File “/opt/rockstor/src/rockstor/system/osi.py”, line 89, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. rc = 1. stdout = [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’]. stderr = [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

[15/Feb/2016 12:21:48] ERROR [storageadmin.util:38] request path: /api/shares/Rock-Ons method: DELETE data: <QueryDict: {}>

[15/Feb/2016 12:21:48] ERROR [storageadmin.util:39] exception: Failed to delete the Share(Rock-Ons). Error from the OS: Error running a command. cmd = [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. rc = 1. stdout = [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’]. stderr = [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

Traceback (most recent call last):

File “/opt/rockstor/src/rockstor/storageadmin/views/share.py”, line 283, in delete

remove_share(share.pool, share.subvol_name, share.pqgroup)

File “/opt/rockstor/src/rockstor/fs/btrfs.py”, line 396, in remove_share

run_command(delete_cmd, log=True)

File “/opt/rockstor/src/rockstor/system/osi.py”, line 89, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = [‘/sbin/btrfs’, ‘subvolume’, ‘delete’, u’/mnt2/btrPOOL/Rock-Ons’]. rc = 1. stdout = [“Delete subvolume (no-commit): ‘/mnt2/btrPOOL/Rock-Ons’”, ‘’]. stderr = [“ERROR: cannot delete ‘/mnt2/btrPOOL/Rock-Ons’ - Directory not empty”, ‘’]

To debug, I exported the share to see what was in it, and there are files and folders present: graph, containers, btrfs etc. I can delete some, but the btrfs and volumes folders can’t be deleted (from my Mac’s file manager), so I think I will need to SSH on to the box and delete the files manually.

I haven’t given up on fixing this, but I haven’t had time to do the googling and test a solution yet.
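One untested guess: the undeletable btrfs folder may contain nested subvolumes left behind by Docker, which would explain the “Directory not empty” error, since a subvolume can’t be removed while child subvolumes still exist inside it. If that is the case, the sketch below takes the text output of `btrfs subvolume list` (run over SSH against the pool mount) and works out which delete commands would be needed, deepest first. It only builds the commands and doesn’t run anything. The function name and parameters are hypothetical, and it assumes the listed paths are relative to the pool mount point.

```python
import re
from typing import List

# One line of `btrfs subvolume list` output, e.g.
# "ID 260 gen 42 top level 259 path some/sub/volume"
_LIST_LINE = re.compile(
    r"^ID\s+\d+\s+gen\s+\d+\s+top level\s+\d+\s+path\s+(.+)$"
)


def nested_delete_commands(list_output: str, mount_root: str,
                           share: str) -> List[List[str]]:
    """Build `btrfs subvolume delete` commands for subvolumes nested in a share.

    list_output: text printed by `btrfs subvolume list <mount_root>`
    mount_root:  where the pool is mounted (assumed: paths are relative to it)
    share:       name of the share whose nested subvolumes should be found
    """
    prefix = share.rstrip("/") + "/"
    nested = []
    for line in list_output.splitlines():
        match = _LIST_LINE.match(line.strip())
        # Keep only subvolumes strictly below the share, not the share itself.
        if match and match.group(1).startswith(prefix):
            nested.append(match.group(1))
    # Deepest paths first, so children are deleted before their parents.
    nested.sort(key=lambda path: path.count("/"), reverse=True)
    root = mount_root.rstrip("/")
    return [["/sbin/btrfs", "subvolume", "delete", f"{root}/{path}"]
            for path in nested]
```

For my case the call would be something like `nested_delete_commands(output, "/mnt2/btrPOOL", "Rock-Ons")`. If it returns an empty list, the nested-subvolume guess is wrong and the leftovers are something else.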

Re the NUT server

I have an APC SMART UPS 1000VA UPS in my IT cupboard. It is attached to a 12-plug power tower from which all my IT kit is powered: two HP microservers, router, switch, wifi access point, telephone. When the power goes out in a storm my kit will stay on for about an hour. However, now that I’m running servers I want to make sure that they shut down gracefully before battery power finally runs out.

To do this I thought I would run a NUT server on one of my ESXi servers and attach the USB cable from the UPS to the server. I haven’t got this working yet (after several evenings of googling and toil), but I think it’s an issue getting the signal passed through to the server via ESXi rather than a Rockstor issue.
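To make the goal concrete, here is a rough sketch of the decision I am after once the UPS is visible to NUT. It parses the `key: value` text that NUT’s `upsc` client prints and decides whether it is time to shut down. NUT’s own `upsmon` normally handles this, so this is only an illustration. The function names and the 30% threshold are my own assumptions, and it relies on the standard `ups.status` flags (`OL` on line, `OB` on battery, `LB` low battery).

```python
from typing import Dict


def parse_upsc(output: str) -> Dict[str, str]:
    """Turn `upsc` style "key: value" lines into a dictionary."""
    values = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip lines without a colon
            values[key.strip()] = value.strip()
    return values


def should_shut_down(values: Dict[str, str], min_charge: int = 30) -> bool:
    """Return True if the UPS is on battery and low enough to shut down.

    min_charge is an arbitrary example threshold in percent.
    """
    flags = values.get("ups.status", "").split()
    if "OB" not in flags:
        return False  # not on battery (or status unknown), nothing to do
    if "LB" in flags:
        return True  # the UPS itself reports low battery
    try:
        charge = int(float(values.get("battery.charge", "")))
    except ValueError:
        return False  # charge not reported; leave the decision to the LB flag
    return charge <= min_charge
```

None of this helps until ESXi actually passes the USB signal through, which is the part I am stuck on.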

@paulsmyth: I’m not sure if this has answered your question, but please let me know if you need further info.