If I have a fully populated chassis with eight 512GB disks in a single RAID10 pool, can I replace some of the disks with larger ones? If so, what would be involved?

Hi @csmall,

Yes, you can, as long as you have sufficient free space to hold the data distributed across the remaining disks.
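To get a feel for what “sufficient space” means here, this is a minimal shell sketch. It assumes the pool is mounted at /mnt2/my_rockstor_poolname (the usual Rockstor location); the `raid10_usable_gb`, `run` and `check_pool_space` names and the `DRY_RUN` switch are hypothetical, made up for illustration. The arithmetic is only a rule of thumb: btrfs RAID10 keeps two copies of everything, so usable space is roughly half the raw total.

```shell
# Hypothetical helper: rough usable space of a btrfs RAID10 pool.
# RAID10 stores two copies of everything, so usable space is about half
# the raw total (metadata overhead and chunk rounding are ignored).
raid10_usable_gb() {
  echo $(( $1 * $2 / 2 ))   # $1 = number of disks, $2 = size of each in GB
}

# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Show how full the pool really is, overall and per member disk.
check_pool_space() {
  run btrfs filesystem usage "$1"   # $1 = pool mount point
  run btrfs device usage "$1"
}
```

`raid10_usable_gb 8 512` prints 2048 and `raid10_usable_gb 7 512` prints 1792, so with one disk removed the logical data used (the `Data,RAID10` line of `btrfs filesystem usage`) would need to fit comfortably under roughly 1792GB. Setting `DRY_RUN=1` first shows the commands without touching the pool.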

Alternatively, you can drop the RAID protection and switch to RAID0 to free up more space, but the order of operations will then be different.
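As a rough sketch of the RAID0 route: a balance with a convert filter rewrites the data chunks into the new profile. With data no longer duplicated, the same disks hold roughly twice as much, but losing any one disk then loses data. This assumes you only want to convert data; `run`, `DRY_RUN` and `convert_data_to_raid0` are hypothetical names for illustration.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Rebalance the data chunks into the RAID0 profile (no redundancy for data).
# Only -dconvert is given, so metadata keeps its current profile.
convert_data_to_raid0() {
  run btrfs balance start -dconvert=raid0 "$1"   # $1 = pool mount point
}
```

Adding `-mconvert=...` would change the metadata profile as well; leaving it out keeps metadata redundant.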

I don’t know whether this is supported in the Rockstor UI (or how it’s handled).

The easiest way to handle this on the command line is to:

- Identify the disk you’re going to replace

- Power down and physically remove the disk

- Add the new disk

- Identify the new disk

- Power up and perform a btrfs replace and a filesystem resize.

How you do some of these tasks depends a bit on your setup and which tools you have installed.
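For the “identify the disk” steps, one possible set of commands is sketched below, assuming a standard Linux install with util-linux (`lsblk`) and the udev `/dev/disk/by-id/` links; the function and helper names are hypothetical. `btrfs filesystem show` also reports each member's devid, which some btrfs commands accept in place of a device path.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Gather what is needed to match a pool member to a physical drive.
identify_disks() {
  run btrfs filesystem show "$1"       # devid and /dev path of each pool member
  run lsblk -o NAME,SIZE,SERIAL,MODEL  # size, serial and model per block device
  run ls -l /dev/disk/by-id/           # stable by-id names, which include the serial
}
```

Matching the serial number against the label on the drive is usually the safest way to be sure you pull the right one.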

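Ultimately though, the last step would be the following. Note that ‘btrfs fi resize’ takes the btrfs devid (as listed by ‘btrfs filesystem show’) rather than a /dev path; after a replace, the new disk takes over the devid of the disk it replaced:

btrfs replace start /dev/old_ass_disk /dev/new_shiny_disk /mnt2/my_rockstor_poolname

btrfs replace status /mnt2/my_rockstor_poolname

btrfs fi resize new_shiny_disk_devid:max /mnt2/my_rockstor_poolname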

With the Rockstor UI, your best bet would probably be to remove the old disk, and then add the new disk.

Note that these procedures take time and should be completed before you change the power state (turn off) or restart the machine!
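A quick pre-shutdown check could look like this sketch; `check_idle`, `run` and `DRY_RUN` are hypothetical names. `-1` makes `btrfs replace status` print once instead of following progress until the replace ends.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Print the replace status once (-1) instead of following it, then the balance
# status, so you can confirm nothing is still running before powering off.
check_idle() {
  run btrfs replace status -1 "$1"   # $1 = pool mount point
  run btrfs balance status "$1"
}
```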

@csmall Hello again. I’d second what @Haioken stated for a Rockstor Web-UI method.

You could also, if ports etc. allow, add the new disk first and then remove the old disk, i.e. reverse the above order.
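On the command line, the add-first order corresponds roughly to the sketch below. I'm not claiming this is exactly what the Web-UI runs internally; the function name, the argument order and the `run`/`DRY_RUN` helper are hypothetical. `btrfs device add` refuses a disk that already holds a filesystem unless given `-f`.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Add the new disk, then delete the old one: $1 = new disk, $2 = old disk,
# $3 = pool mount point. The delete moves the old disk's data onto the others.
add_then_remove() {
  run btrfs device add "$1" "$3"
  run btrfs device delete "$2" "$3"
}
```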

This Web-UI method is accessed via the Pool’s Detail page and its “Resize Pool” button, which opens a dialog-based wizard that should step you through the available options. See the documentation entry in the Pools section entitled Pool resizing.

Note that in the above you first logically remove the disk from the pool before physically removing / detaching it from the system, if that is the plan.

I would also suggest that a less risky move, if using a btrfs replace command via the command line, is to leave the ‘disk to be replaced’ connected. I think this should also be quicker. But of course this may not be an option, i.e. if the disk is dead or there just aren’t sufficient ports; in the latter case it may still be advisable to temporarily add a port rather than detach the disk from the system unnecessarily. If the ‘disk to be replaced’ is still working but its health is suspect, there is also the ‘-r’ switch, with which the ‘disk to be replaced’ is only read from when no other good copy of the data exists.
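A sketch of the in-place replace, with the old disk left attached. The `suspect` fourth argument, the function name and the `run`/`DRY_RUN` helper are hypothetical; the `-r` flag and the status subcommand are standard `btrfs replace` options. `btrfs replace start` runs in the background by default, and `btrfs replace status` without `-1` keeps printing progress until the replace ends.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Replace with the old disk still attached: $1 = old disk, $2 = new disk,
# $3 = pool mount point, $4 = "suspect" to add -r (read the old disk only
# when no other good copy of the data exists).
replace_in_place() {
  if [ "${4:-}" = suspect ]; then
    run btrfs replace start -r "$1" "$2" "$3"
  else
    run btrfs replace start "$1" "$2" "$3"
  fi
  run btrfs replace status "$3"   # follows progress until the replace ends
}
```

If the new disk is larger, a resize to max is still needed afterwards before the extra capacity can be used.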

I think the general opinion is that ‘btrfs replace’ is the preferred, more efficient, method compared to btrfs dev add followed by btrfs dev delete, or the other way around. But currently there is no ‘btrfs replace’ counterpart within the Rockstor Web-UI. We do, however, have this as an open issue:

Overall I think it is always best not to degrade a pool, i.e. by physically removing a disk, prior to executing either a replace or a delete action. This, by definition, reduces redundancy and puts data at unnecessary risk; it also requires that the pool be mounted degraded (by btrfs design), which is a further complication. So always try to perform a logical removal before a physical removal. I.e. with 8 disks and sufficient free space (as @Haioken mentioned), you should be able to first remove a disk via the Web-UI, and once that procedure is complete (it can take ages) you should have an unmanaged disk that can safely be physically removed. After initiating any device change, you can look at the pool’s Balance tab to see a progress report.
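For anyone doing the logical removal from the command line instead, a minimal sketch follows, assuming the pool is mounted and has enough free space; the function name and the `run`/`DRY_RUN` helper are hypothetical. `btrfs device delete` only returns once the disk's data has been moved onto the remaining members, so it can take a long time.

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Logical removal first: $1 = disk to retire, $2 = pool mount point.
logical_remove() {
  run btrfs device delete "$1" "$2"   # returns once its data has been moved off
  run btrfs filesystem show "$2"      # the retired disk should no longer be listed
}
```

Only once the disk has dropped out of the `btrfs filesystem show` listing is it safe to pull it and fit the new one.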

And that issue might be worth a look, info-wise.

@Haioken, please correct me (double check) if I’ve gotten something wrong in the above; it’s rather off the top of my head.

Linking to a relevant btrfs wiki for context:

https://btrfs.wiki.kernel.org/index.php/Using_Btrfs_with_Multiple_Devices

Hope that helps.

Hi @phillxnet,

The only issue I can see with your statements is the initial statement:

This implies to me that he cannot add a new disk while the originals are connected, hence my recommending the offline replace method via the CLI.

FYI: it’d be nice to see a way of managing this via CLI with a shutdown and boot in between (flag set in DB?).

Process (as I see it) would be:

- Select the disk to be replaced and hit a ‘replace disk’ button (a confirmation dialog should be here)

- Advise the user to power down the system, remove and replace the disk

- Power down, remove and replace disk

- Power up; the initial login should ask the user to select (and confirm) the new disk to be inserted into the pool

@Haioken I’m afraid I still have a little issue with your recommendation.

Agreed.

It is best, however, not to degrade a pool at any time if it isn’t necessary, and physically removing a disk necessarily degrades a pool. But btrfs can replace a disk in situ, and the old disk then becomes blank thereafter. Alternatively, if there are insufficient ‘slots’, as would seem to be the case, one can first remove a disk logically (btrfs dev delete) before removing it physically, thereby freeing up a disk slot if that is required.

And this step is where the pool will be unnecessarily degraded.

My understanding is that this is not how it works. btrfs replace is an online process, although it can also take a missing source (to be replaced) disk, identified by its devid, assuming the pool is now mounted degraded. But in this case the disk is not missing and shouldn’t be made missing (by disconnection), so it’s probably best to just use the delete process (let its balance finish) and then the add disk process. Also, the ‘missing’ keyword (as used by btrfs dev delete) relates to the first missing device, so that’s a tricky / vague specification if there is more than one missing disk.
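For completeness, this is roughly what the degraded path looks like when a disk really is dead or already gone. It is not a recommended way to swap a healthy disk. This is a sketch under assumptions: the function name, argument order and `run`/`DRY_RUN` helper are hypothetical, and the devid of the missing disk has to be worked out from `btrfs filesystem show` beforehand (it lists each member's devid).

```shell
# Hypothetical dry-run helper: with DRY_RUN=1 the command is printed, not run.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Only for a disk that is already dead or disconnected:
# $1 = any surviving member, $2 = mount point,
# $3 = devid of the missing disk, $4 = new disk.
replace_missing() {
  run mount -o degraded "$1" "$2"          # btrfs refuses a normal mount with a member missing
  run btrfs replace start "$3" "$4" "$2"   # source given by devid, since no path exists
  run btrfs replace status "$2"
}
```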

Thanks for the suggestions re user interaction though. I see the replace UI being located at the pool level as it’s a pool member thing, i.e. we are not necessarily removing the disk, we are just changing its member status. So on the pool details page we would have, as you said for the disks page, a replace flag next to the disk name. It then offers a disk (which must be currently unused) to replace that disk with. Initially or later we can have a tick box that indicates whether the disk to be replaced should be considered read only (with a tool tip suggesting this if the disk is suspected to be unhealthy). Then on confirmation the replace proceeds, and there is a feedback component in the pool details page (maybe the first one) which states in red that a disk replace is in progress and to leave it be (along with a progress report).

I have to play with this procedure more myself before embarking on the code for the front and/or back end, but I do think we should not encourage degrading pools. Disk management, particularly around missing or poorly disks, is a current weakness of btrfs, so it’s best not to push things there for a bit, at least until btrfs is able to ‘write off’ poorly disks for itself. Plus we have the inconvenience of the degraded mount option requirement. There are a number of additions going in to help in this regard, so we just have to be patient and manage with what we have so far.

I’m not a fan of processes that span a reboot and mostly they are not required in btrfs, plus our current code is not that orientated towards this type of mechanism.

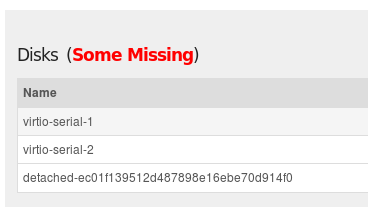

What do you think of my proposed location / user story for the disk replace mechanism? It fits right next to the Resize Pool button (our current entry point for pool changes), and we already have (in stable subscription updates) an indicator above that table that alerts to missing devices, i.e. see:

https://github.com/rockstor/rockstor-core/issues/1897

so icons next to each of the pool member disks in that table seem like a nice fit to me. And maybe some flashing / gaudiness thereafter in that table to indicate the involved (disk) member, if we get this info.

All good stuff, and I’m looking forward to doing, or if I am beaten to it, getting this feature.