Hi,

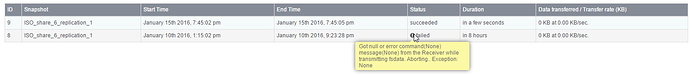

When a replication failure occurs, the next replication does not recover. Eg:

The replication on Jan 10th failed after a connection loss. 2.74GB was transferred of the 7.5GB in the share. The next replication attempt on Jan 15th took only a few seconds, whereas I’d expect either a full re-attempt, or else a replication of the missing ~5GB. Log for the second rep on sender:

[15/Jan/2016 19:45:02] DEBUG [smart_manager.replication.listener_broker:254] new-send request received for 6

[15/Jan/2016 19:45:02] DEBUG [smart_manager.replication.listener_broker:143] previous backup failed for Sender(c8513240-674b808e-b510-4896-8998-5036f72689c0_6). Starting a new one. Attempt 0/10.

[15/Jan/2016 19:45:02] DEBUG [smart_manager.replication.listener_broker:148] No record of last successful ReplicaTrail for Sender(c8513240-674b808e-b510-4896-8998-5036f72689c0_6). Will start a new Full Sender.

[15/Jan/2016 19:45:02] DEBUG [smart_manager.replication.sender:102] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6 Initial greeting: {‘snap’: u’ISO_share_6_replication_1’, ‘incremental’: False, ‘share’: u’ISO_share’, ‘uuid’: u’c8513240-674b808e-b510-4896-8998-5036f72689c0’, ‘pool’: u’<POOL_NAME>‘}

[15/Jan/2016 19:45:02] ERROR [storageadmin.util:38] request path: /api/shares/ISO_share/snapshots/ISO_share_6_replication_1 method: POST data: <QueryDict: {u’snap_type’: [u’replication’]}>

[15/Jan/2016 19:45:02] ERROR [storageadmin.util:39] exception: Snapshot(ISO_share_6_replication_1) already exists for the Share(ISO_share).

Traceback (most recent call last):

File “/opt/rockstor/eggs/gunicorn-0.16.1-py2.7.egg/gunicorn/workers/sync.py”, line 34, in run

client, addr = self.socket.accept()

File “/usr/lib64/python2.7/socket.py”, line 202, in accept

sock, addr = self._sock.accept()

error: [Errno 11] Resource temporarily unavailable

[15/Jan/2016 19:45:02] DEBUG [smart_manager.replication.util:120] Snapshot(ISO_share_6_replication_1) already exists for the Share(ISO_share).

[15/Jan/2016 19:45:05] DEBUG [smart_manager.replication.sender:232] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6. snap-exists received. Not sending fsdata

[15/Jan/2016 19:45:32] DEBUG [smart_manager.replication.listener_broker:60] Sender(c8513240-674b808e-b510-4896-8998-5036f72689c0_6) exited. exitcode: 0

Pertinent text from above is “share… already exists” and “snap-exists received. Not sending fsdata”. It seems to be assuming that if a share exists, even if it is incomplete, then a previous replication has succeeded. Log for second rep on receiver:

[15/Jan/2016 11:45:03] DEBUG [smart_manager.replication.listener_broker:210] initial greeting from c8513240-674b808e-b510-4896-8998-5036f72689c0-6

[15/Jan/2016 11:45:03] DEBUG [smart_manager.replication.listener_broker:236] New Receiver(c8513240-674b808e-b510-4896-8998-5036f72689c0-6) started.

[15/Jan/2016 11:45:03] DEBUG [smart_manager.replication.receiver:144] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6. Starting a new Receiver for meta: {u’snap’: u’ISO_share_6_replication_1’, u’incremental’: False, u’share’: u’ISO_share’, u’uuid’: u’c8513240-674b808e-b510-4896-8998-5036f72689c0’, u’pool’: u’<POOL_NAME>‘}

[15/Jan/2016 11:45:03] ERROR [storageadmin.util:38] request path: /api/shares method: POST data: {u’sname’: u’c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share’, u’replica’: True, u’pool’: u’<POOL_NAME>‘}

[15/Jan/2016 11:45:03] ERROR [storageadmin.util:39] exception: Share(c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share) already exists. Choose a different name

Traceback (most recent call last):

File “/opt/rockstor/eggs/gunicorn-0.16.1-py2.7.egg/gunicorn/workers/sync.py”, line 34, in run

client, addr = self.socket.accept()

File “/usr/lib64/python2.7/socket.py”, line 202, in accept

sock, addr = self._sock.accept()

error: [Errno 11] Resource temporarily unavailable

[15/Jan/2016 11:45:03] DEBUG [smart_manager.replication.util:145] Share(c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share) already exists. Choose a different name

[15/Jan/2016 11:45:03] ERROR [storageadmin.util:38] request path: /api/sm/replicas/rshare method: POST data: <QueryDict: {u’appliance’: [u’SENDER_NAS’], u’share’: [u’c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share’], u’src_share’: [u’ISO_share’]}>

[15/Jan/2016 11:45:03] ERROR [storageadmin.util:39] exception: Replicashare(c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share) already exists.

Traceback (most recent call last):

File “/opt/rockstor/eggs/gunicorn-0.16.1-py2.7.egg/gunicorn/workers/sync.py”, line 34, in run

client, addr = self.socket.accept()

File “/usr/lib64/python2.7/socket.py”, line 202, in accept

sock, addr = self._sock.accept()

error: [Errno 11] Resource temporarily unavailable

[15/Jan/2016 11:45:05] DEBUG [smart_manager.replication.receiver:223] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6. Snapshot to be sent(/mnt2/<POOL_NAME>/.snapshots/c8513240-674b808e-b510-4896-8998-5036f72689c0_ISO_share/ISO_share_6_replication_1) already exists. Not starting a new receive process

[15/Jan/2016 11:45:29] DEBUG [smart_manager.replication.listener_broker:79] Active Receiver: c8513240-674b808e-b510-4896-8998-5036f72689c0-6. Total messages processed: 1

[15/Jan/2016 11:46:05] DEBUG [smart_manager.replication.receiver:131] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6 command: snap-exists rcommand: None

[15/Jan/2016 11:46:05] DEBUG [smart_manager.replication.receiver:80] Id: c8513240-674b808e-b510-4896-8998-5036f72689c0-6. meta: {u’snap’: u’ISO_share_6_replication_1’, u’incremental’: False, u’share’: u’ISO_share’, u’uuid’: u’c8513240-674b808e-b510-4896-8998-5036f72689c0’, u’pool’: u’<POOL_NAME>'} Receive successful

[15/Jan/2016 11:46:29] DEBUG [smart_manager.replication.listener_broker:75] Receiver(c8513240-674b808e-b510-4896-8998-5036f72689c0-6) exited. exitcode: 0. Total messages processed: 1. Removing from the list.

[15/Jan/2016 11:57:29] DEBUG [smart_manager.replication.listener_broker:291] Replica trails are truncated successfully.

Again “share… already exists” and “exitcode: 0. Total messages processed: 1”. I’d expect many more than one message would have been transferred. Is this a bug?