I have 3-4 TB successfully replicated (4 shares). After the initial replication job I changed all 4 shares to 20-minute replication jobs, and now they all fail continually.

Found this:

storage:~ # tail /opt/rockstor/var/log/supervisord_replication_stderr.log

Traceback (most recent call last):

File "/usr/lib64/python3.11/multiprocessing/process.py", line 314, in _bootstrap

self.run()

File "/opt/rockstor/src/rockstor/smart_manager/replication/listener_broker.py", line 219, in run

frontend.bind(f"tcp://{self.listener_interface}:{self.listener_port}")

File "/opt/rockstor/.venv/lib64/python3.11/site-packages/zmq/sugar/socket.py", line 311, in bind

super().bind(addr)

File "_zmq.py", line 917, in zmq.backend.cython._zmq.Socket.bind

File "_zmq.py", line 179, in zmq.backend.cython._zmq._check_rc

zmq.error.ZMQError: Address already in use (addr='tcp://192.168.10.113:10002')

storage:~ #

Address already in use. Why?
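In general, this error means some other socket, often an earlier instance of the same listener that never exited, still holds the address and port. The sketch below reproduces the condition with plain Python sockets. It is not Rockstor or pyzmq code, and `can_bind` is a hypothetical helper name used only for illustration.

```python
import errno
import socket


def can_bind(host: str, port: int) -> bool:
    """Return True if a TCP socket can currently be bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                # Another socket already owns this address/port pair.
                return False
            raise
    return True


if __name__ == "__main__":
    # Hold a port the way an already-running listener would.
    holder = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    holder.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    holder.listen()
    port = holder.getsockname()[1]

    print("while held:", can_bind("127.0.0.1", port))
    holder.close()
    print("after close:", can_bind("127.0.0.1", port))
```

On the sender, calling `can_bind("192.168.10.113", 10002)` while the replication service is stopped would show whether something else is still holding the port from the traceback.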

src Rockstor appliance - 192.168.10.113

1st Rockstor appliance - 192.168.10.14

5.0.15-0 (paid support)

/opt/rockstor/var/log/rockstor.log

storage:~ # tail /opt/rockstor/var/log/rockstor.log

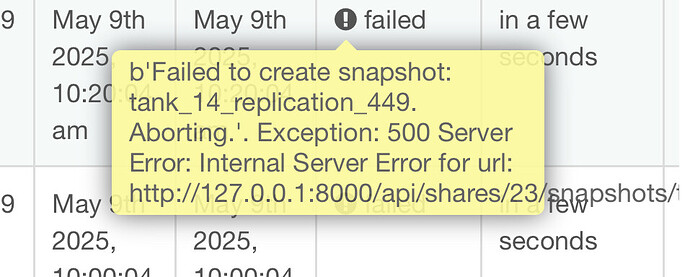

[09/May/2025 11:00:03] ERROR [storageadmin.util:44] Exception: Snapshot (media_15_replication_446) already exists for the share (media).

NoneType: None

[09/May/2025 11:00:03] ERROR [smart_manager.replication.sender:79] Id: ab85bd20-375a-477b-878e-a8d99c26d688-15. b'Failed to create snapshot: media_15_replication_446. Aborting.'. Exception: 500 Server Error: Internal Server Error for url: http://127.0.0.1:8000/api/shares/22/snapshots/media_15_replication_446

[09/May/2025 11:05:03] ERROR [storageadmin.util:44] Exception: Snapshot (media_15_replication_446) already exists for the share (media).

NoneType: None

[09/May/2025 11:05:03] ERROR [smart_manager.replication.sender:79] Id: ab85bd20-375a-477b-878e-a8d99c26d688-15. b'Failed to create snapshot: media_15_replication_446. Aborting.'. Exception: 500 Server Error: Internal Server Error for url: http://127.0.0.1:8000/api/shares/22/snapshots/media_15_replication_446

[09/May/2025 11:10:03] ERROR [storageadmin.util:44] Exception: Snapshot (media_15_replication_446) already exists for the share (media).

NoneType: None

[09/May/2025 11:10:03] ERROR [smart_manager.replication.sender:79] Id: ab85bd20-375a-477b-878e-a8d99c26d688-15. b'Failed to create snapshot: media_15_replication_446. Aborting.'. Exception: 500 Server Error: Internal Server Error for url: http://127.0.0.1:8000/api/shares/22/snapshots/media_15_replication_446

[09/May/2025 11:35:58] ERROR [system.osi:287] non-zero code(7) returned by command: ['/usr/bin/zypper', '--non-interactive', '-q', 'list-updates']. output: [''] error: ['System management is locked by the application with pid 24186 (/usr/bin/zypper).', 'Close this application before trying again.', '']

Deleted all replication tasks and snapshots and started again. Will see what happens in a day after it all replicates again.

@ilium007, my apologies, it looks like I gave you incorrect workaround advice. I believe this worked for me while testing another issue, but I was going somewhat off memory.

Looking a bit closer at the underlying code, I suspect that when a change “request” is submitted, it only checks whether the replication snapshot already exists. It does not account for whether the replication task itself is an existing or an updated one, nor does it ensure that the snapshot naming stays aligned with the task (I’m not a Python developer, so I might be wrong).

@Flox, @phillxnet do you think that could be the root cause?

After deleting all existing snapshots and restarting the replication, it looks like it’s working. I’ve got 500 GB to go on the initial snapshot and no errors so far.

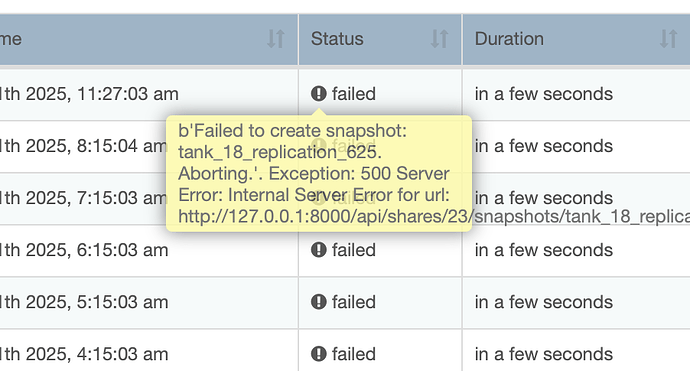

Back to failing replication. 3 jobs work, 1 job fails.

From the sender (three jobs succeed, one fails):

[11/May/2025 10:00:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-19. Sending incremental replica between /mnt2/pool0/.snapshots/media/media_19_replication_663 -- /mnt2/pool0/.snapshots/media/media_19_replication_667

[11/May/2025 10:05:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-16. Sending incremental replica between /mnt2/pool0/.snapshots/scratch/scratch_16_replication_664 -- /mnt2/pool0/.snapshots/scratch/scratch_16_replication_668

[11/May/2025 10:10:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-17. Sending incremental replica between /mnt2/pool0/.snapshots/support/support_17_replication_665 -- /mnt2/pool0/.snapshots/support/support_17_replication_669

[11/May/2025 11:00:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-19. Sending incremental replica between /mnt2/pool0/.snapshots/media/media_19_replication_667 -- /mnt2/pool0/.snapshots/media/media_19_replication_670

[11/May/2025 11:05:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-16. Sending incremental replica between /mnt2/pool0/.snapshots/scratch/scratch_16_replication_668 -- /mnt2/pool0/.snapshots/scratch/scratch_16_replication_671

[11/May/2025 11:10:05] INFO [smart_manager.replication.sender:335] Id: ab85bd20-375a-477b-878e-a8d99c26d688-17. Sending incremental replica between /mnt2/pool0/.snapshots/support/support_17_replication_669 -- /mnt2/pool0/.snapshots/support/support_17_replication_672

[11/May/2025 11:27:03] ERROR [storageadmin.util:44] Exception: Snapshot (tank_18_replication_625) already exists for the share (tank).

NoneType: None

[11/May/2025 11:27:03] ERROR [smart_manager.replication.sender:79] Id: ab85bd20-375a-477b-878e-a8d99c26d688-18. b'Failed to create snapshot: tank_18_replication_625. Aborting.'. Exception: 500 Server Error: Internal Server Error for url: http://127.0.0.1:8000/api/shares/23/snapshots/tank_18_replication_625
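To see which snapshot a task is stuck on, the "already exists" errors can be counted per snapshot name. The sketch below is a standalone log filter, not part of Rockstor. The regular expressions are modelled only on the log lines quoted in this thread. Reading the name as `<share>_<task id>_replication_<number>` is an assumption: the middle number matches the suffix of the task `Id` in these logs, while the meaning of the trailing number is a guess.

```python
import re
from collections import Counter

# Matches the storageadmin.util error lines quoted in this thread.
ALREADY_EXISTS = re.compile(
    r"Exception: Snapshot \((?P<snap>[^)]+)\) already exists "
    r"for the share \((?P<share>[^)]+)\)"
)

# Assumed layout: <share>_<task id>_replication_<number>.
SNAP_NAME = re.compile(r"^(?P<share>.+)_(?P<task>\d+)_replication_(?P<seq>\d+)$")


def stuck_snapshots(lines):
    """Count 'already exists' errors per snapshot name."""
    counts = Counter()
    for line in lines:
        match = ALREADY_EXISTS.search(line)
        if match:
            counts[match.group("snap")] += 1
    return counts


def split_snapshot_name(name):
    """Split a snapshot name into (share, task, seq), or None if it does not fit."""
    match = SNAP_NAME.match(name)
    if match is None:
        return None
    return match.group("share"), int(match.group("task")), int(match.group("seq"))


if __name__ == "__main__":
    sample = [
        "[09/May/2025 11:00:03] ERROR [storageadmin.util:44] Exception: "
        "Snapshot (media_15_replication_446) already exists for the share (media).",
        "[09/May/2025 11:05:03] ERROR [storageadmin.util:44] Exception: "
        "Snapshot (media_15_replication_446) already exists for the share (media).",
        "[11/May/2025 11:27:03] ERROR [storageadmin.util:44] Exception: "
        "Snapshot (tank_18_replication_625) already exists for the share (tank).",
    ]
    for snap, hits in stuck_snapshots(sample).items():
        print(snap, hits, split_snapshot_name(snap))
```

A snapshot name that repeats across consecutive scheduled runs, as `media_15_replication_446` does above, suggests the task keeps retrying the same snapshot instead of moving on to a new one.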

On receiver:

datto01:~ # tail -f /opt/rockstor/var/log/rockstor.log

[11/May/2025 01:27:16] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 8

[11/May/2025 01:27:22] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 7

[11/May/2025 01:27:28] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 6

[11/May/2025 01:27:34] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 5

[11/May/2025 01:27:40] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 4

[11/May/2025 01:27:46] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 3

[11/May/2025 01:27:52] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 2

[11/May/2025 01:27:58] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 1

[11/May/2025 01:28:04] ERROR [smart_manager.replication.receiver:415] Id: b'ab85bd20-375a-477b-878e-a8d99c26d688-18'. No response received from the broker. remaining tries: 0

I have had to delete the ‘tank’ snapshots on src and dst yet again and resend the 100 GB.

At this point I am calling it quits on Rockstor and moving back to Synology DSM.

@ilium007 I am sorry to read that this has failed again. If you’re still around (considering that you’re moving back to Synology DSM), did you enable quotas on the pool you were trying to replicate (on the sender and/or the receiver)? There was an issue reported in that area where the fourth send ran into problems (though further attempts by @phillxnet to reproduce it were not successful).

It does seem that replication using the underlying native btrfs send/receive will require more work to stabilize.

Quotas kept disabling themselves.