They're not mounted degraded.

I am able to use the pool as usual and write to it.

The Rockstor boot pool and my main RAID1 pool are both mounted normally, and both show the same behaviour.

Here are the mount options for my RAID1 pool: rw,relatime,space_cache,subvolid=5,subvol=/

I have never had to force a mount or anything like that.

“btrfs fi sh” gives this:

Label: ‘ROOT’ uuid: 4ac51b0f-afeb-4946-aad1-975a2a26c941

Total devices 1 FS bytes used 10.42GiB

devid 1 size 72.46GiB used 20.06GiB path /dev/sdg4

Label: ‘RSPool’ uuid: 12bf3137-8df1-4d6b-bb42-f412e69e94a8

Total devices 7 FS bytes used 5.62TiB

devid 9 size 2.73TiB used 2.31TiB path /dev/sde

devid 10 size 1.82TiB used 1.40TiB path /dev/sdc

devid 11 size 1.82TiB used 1.40TiB path /dev/sdd

devid 12 size 1.82TiB used 1.40TiB path /dev/sdf

devid 13 size 2.73TiB used 2.31TiB path /dev/sdb

devid 15 size 2.73TiB used 2.31TiB path /dev/sdh

devid 16 size 3.64TiB used 1.23TiB path /dev/sda

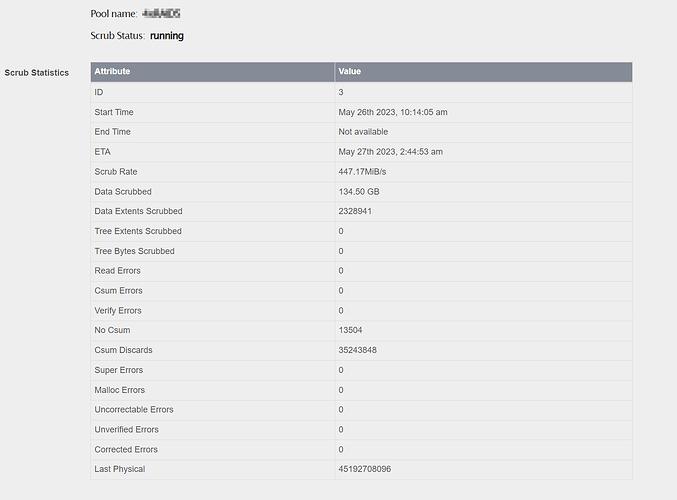

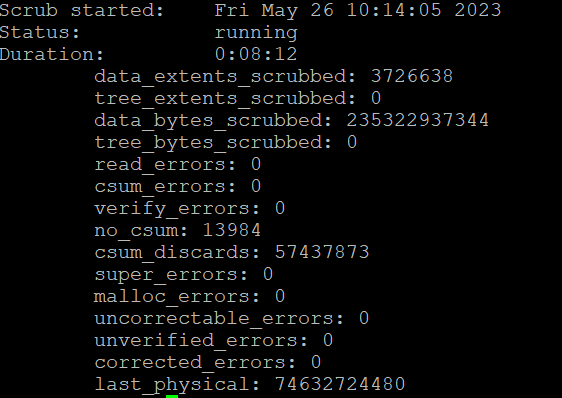

And even though the GUI shows the status as unknown, the scrub runs fine in the background.

“btrfs scrub status” gives this for my root drive, for example:

Scrub started: Thu May 25 21:05:05 2023

Status: finished

Duration: 0:01:27

Total to scrub: 10.61GiB

Rate: 124.85MiB/s

Error summary: no errors found

The last scrub for my main pool (a scheduled one):

Scrub started: Sat Apr 1 22:00:03 2023

Status: finished

Duration: 7:25:34

Total to scrub: 11.24TiB

Rate: 440.87MiB/s

Error summary: no errors found

I don't think there is anything wrong with my pools. The GUI just doesn't catch that the scrub has started, and therefore can't report the status or the end result.
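
For anyone who wants to check the scrub result without relying on the GUI, here is a minimal Python sketch that parses pasted "btrfs scrub status" text like the output above and reports whether the scrub finished cleanly. The function names `parse_scrub_status` and `scrub_ok` are made up for illustration, and the sketch assumes the plain "Key: value" layout shown in this post; other btrfs-progs versions may print additional or differently worded lines.

```python
# Sample text copied from the scheduled scrub of the main pool in this post.
SAMPLE = """Scrub started: Sat Apr 1 22:00:03 2023
Status: finished
Duration: 7:25:34
Total to scrub: 11.24TiB
Rate: 440.87MiB/s
Error summary: no errors found"""


def parse_scrub_status(text):
    """Turn 'Key: value' lines into a dict (hypothetical helper).

    Splits each line on the first colon only, so values that contain
    colons themselves (timestamps, durations) stay intact.
    """
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def scrub_ok(fields):
    """True only if the scrub finished and reported no errors.

    Assumes the exact wording 'finished' / 'no errors found' seen above.
    """
    return (
        fields.get("Status") == "finished"
        and fields.get("Error summary") == "no errors found"
    )


if __name__ == "__main__":
    print(scrub_ok(parse_scrub_status(SAMPLE)))
```

The text could equally be fed in from the command itself instead of being pasted; the sketch keeps that part out because the mount point differs per system.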