@kupan787 Hello again.

I’m not that familiar with bonding/teaming, but I believe the team driver is the newer of the two kernel implementations, so it might be worth trying. It also supports LACP (802.3ad) link aggregation with the switch hardware.

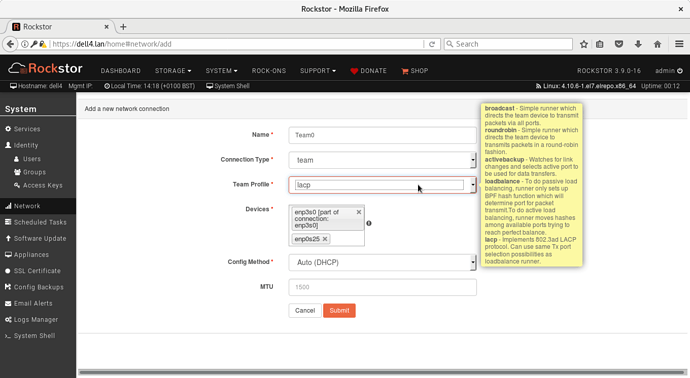

Rockstor Team0 config:

Only 2 NICs, but still:

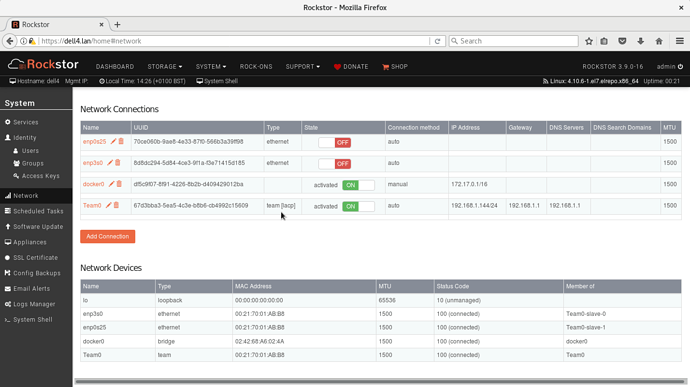

And the resulting overview. I had to manually select System - Network again, as the above page seems to time out after submitting (a bug I’ll report soon):

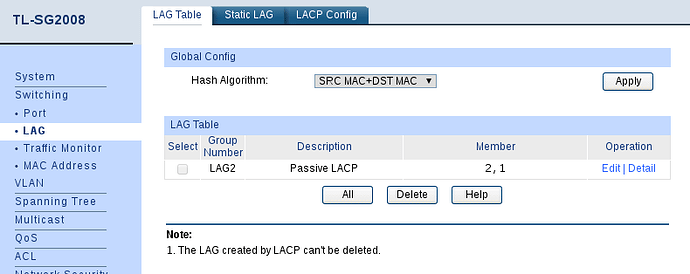

I set the switch (in this case a TP-Link SG2008) to passive mode, assuming the newer tech in the Rockstor kernel would be better at doing the active part. I also enabled STP (Spanning Tree Protocol), as advised by the switch’s help text.
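For anyone wanting to try this by hand, a teamd LACP setup mostly comes down to the runner section of its JSON config. The Python sketch below builds such a config using option names from the teamd.conf(5) man page (`runner.name`, `runner.active`, `runner.fast_rate`, `runner.tx_hash`, `link_watch.name`). The chosen values and the helper names `lacp_team_config` and `aggregate_can_form` are illustrative assumptions, and this may not match exactly what Rockstor’s Team0 form writes. It also encodes why a passive switch is fine here: LACP only comes up if at least one end is active.

```python
import json


def lacp_team_config(active=True, fast_rate=False):
    """Build a teamd config dict using the LACP runner (illustrative values)."""
    return {
        "runner": {
            "name": "lacp",
            # True: send LACPDUs periodically; False: only reply to the partner
            "active": active,
            # True asks the partner for LACPDUs every 1 s instead of every 30 s
            "fast_rate": fast_rate,
            # Header fields hashed to choose the transmit port (teamd default)
            "tx_hash": ["eth", "ipv4", "ipv6"],
        },
        # Use ethtool link state to decide whether a port is usable
        "link_watch": {"name": "ethtool"},
    }


def aggregate_can_form(local_active, partner_active):
    """Passive + passive never exchanges LACPDUs, so one side must be active."""
    return local_active or partner_active


if __name__ == "__main__":
    config = lacp_team_config(active=True)
    print(json.dumps(config, indent=2))
    # Rockstor active, switch passive, as in the setup described above
    print(aggregate_can_form(local_active=True, partner_active=False))
```

The printed JSON is the kind of document that could be passed to `teamd -f` or to NetworkManager’s `team.config` property.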

The above entry didn’t show up until I’d configured Rockstor as shown, since I had enabled the LACP ports on the switch first, via its “LACP Config” tab.

Then on the Rockstor machine (dell4.lan) I attempted to duplicate your test method and ran:

```
iperf -s
```

and on each of a desktop (1.09-1.10 GBytes transferred per 10-second run when tested alone) and a laptop (985-1005 MBytes per run when tested alone) I executed the following command at about the same time:

```
iperf -i 1 -c dell4.lan
```

and got roughly the usual results on each (1.09 GBytes and 964 MBytes transferred), with the corresponding output from the iperf server instance running on Rockstor:

```
[ 4] local 192.168.1.144 port 5001 connected with 192.168.1.110 port 51114
[ 5] local 192.168.1.144 port 5001 connected with 192.168.1.138 port 38485
[ 4] 0.0-10.0 sec 1.09 GBytes 939 Mbits/sec
[ 5] 0.0-10.0 sec 964 MBytes 808 Mbits/sec
```

For ease of cut and paste I also had some terminal sessions running over the LAN at the same time, but that looks to be 2 simultaneous 1 Gbit connections: 939 + 808 = 1747 Mbits/sec combined, well over what a single 1 Gbit link can carry (or near enough, given the slightly slapdash test and the fairly large variance I get from that laptop).
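A rough way to double-check that reading: the Python sketch below parses the two server-side summary lines quoted above, recomputes each rate from the bytes transferred, and compares the combined rate with one gigabit link. It assumes iperf2’s usual units (binary "MBytes"/"GBytes", decimal "Mbits/sec") and that the rate is printed in Mbits/sec; the 1000 Mbit/s link figure and the `parse` helper are illustrative assumptions, not anything iperf or Rockstor provides.

```python
import re

# Server-side iperf summary lines, copied from the output above.
SUMMARY_LINES = [
    "[ 4] 0.0-10.0 sec 1.09 GBytes 939 Mbits/sec",
    "[ 5] 0.0-10.0 sec 964 MBytes 808 Mbits/sec",
]

# "<start>-<end> sec <amount> <K|M|G>Bytes <rate> Mbits/sec"
PATTERN = re.compile(
    r"(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"(?P<amount>[\d.]+)\s+(?P<unit>[KMG])Bytes\s+"
    r"(?P<rate>[\d.]+)\s+Mbits/sec"
)

# Assumption: iperf2 sizes are binary (1 MByte = 2**20 bytes), rates decimal.
UNIT_BYTES = {"K": 2**10, "M": 2**20, "G": 2**30}

# Assumed nominal capacity of one gigabit NIC, in Mbit/s.
LINK_MBITS = 1000


def parse(line):
    """Return (reported_mbits, recomputed_mbits) for one summary line."""
    m = PATTERN.search(line)
    if m is None:
        raise ValueError(f"not an iperf summary line: {line!r}")
    seconds = float(m["end"]) - float(m["start"])
    total_bits = float(m["amount"]) * UNIT_BYTES[m["unit"]] * 8
    return float(m["rate"]), total_bits / seconds / 1e6


def main():
    total = 0.0
    for line in SUMMARY_LINES:
        reported, recomputed = parse(line)
        total += reported
        print(f"reported {reported:.0f} Mbit/s, recomputed {recomputed:.0f} Mbit/s")
    verdict = "more than" if total > LINK_MBITS else "within"
    print(f"combined {total:.0f} Mbit/s: {verdict} one {LINK_MBITS} Mbit/s link")


if __name__ == "__main__":
    main()
```

A combined rate well under one link’s capacity would suggest both flows were hashed onto the same physical port, though other bottlenecks can produce that too.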

I’d try using the team driver’s version of LACP, as it seems to be working here.

Hope that helps.