@whitey That’s great, thanks.

[quote="whitey, post:30, topic:797"]

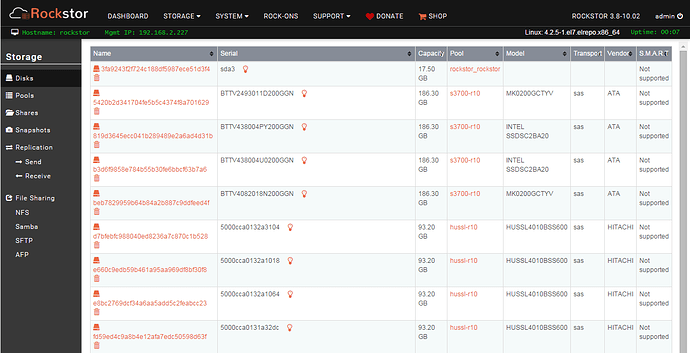

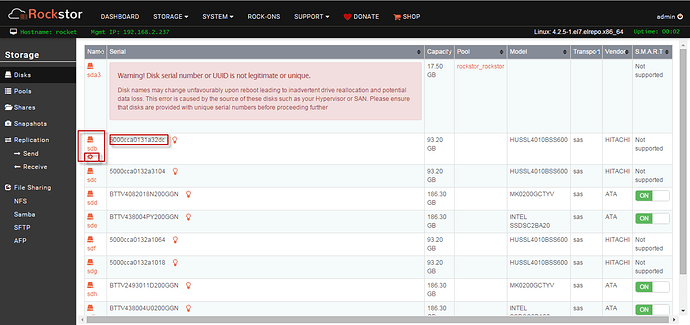

Changing from generic /dev/sdb/c/d/e/f to UUID-looking entries for names, is that intended?

[/quote]

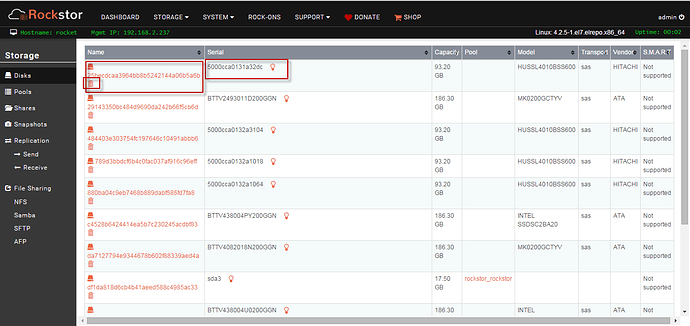

Yes, that is intended. The UUID-looking entries are Rockstor’s new (currently testing channel only) way to name devices that are classed as missing. As device names are essentially arbitrary from one boot to the next, it made sense to drop them for devices that are thought to be offline / missing. We may later ‘hide’ the UUID-derived missing device name behind a simple “missing / detached / offline” entry. But for now it is a different made-up, UUID-derived string on each scan, serving as a unique but arbitrary placeholder that can’t be confused with real device names, which are reserved for attached devices reported by scan_disk.
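To illustrate the idea of a throwaway, UUID-derived placeholder that changes on every scan, here is a rough shell sketch. The `missing_placeholder` function, the `detached-` prefix and the 32-hex-character format are all hypothetical, chosen only for illustration; this is not Rockstor’s actual naming code.

```shell
#!/bin/sh
# Hypothetical sketch: make a throwaway, UUID-derived name for a device that is
# classed as missing. The "detached-" prefix and hex format are assumptions,
# not Rockstor's real implementation.
missing_placeholder() {
    # On Linux, every read of this file returns a fresh random UUID.
    uuid=$(cat /proc/sys/kernel/random/uuid)
    # Drop the dashes so the result can't be mistaken for a /dev/sdX style name.
    printf 'detached-%s\n' "$(printf '%s' "$uuid" | tr -d '-')"
}
```

Calling `missing_placeholder` twice gives two different names, which is the point: the name is unique per scan but carries no meaning of its own.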

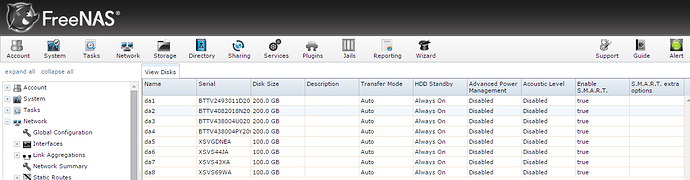

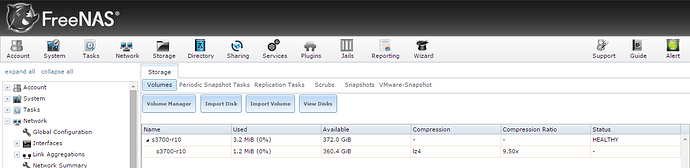

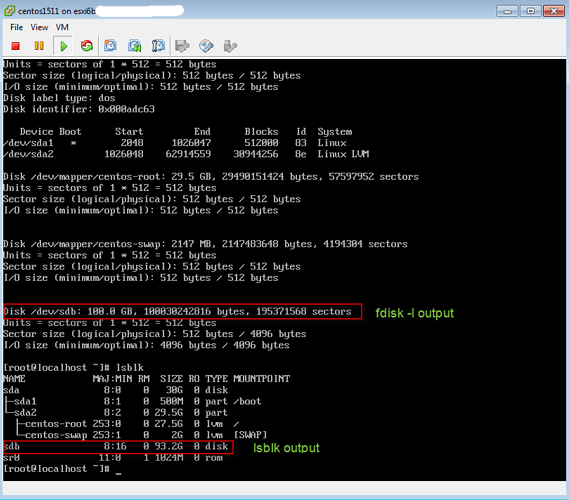

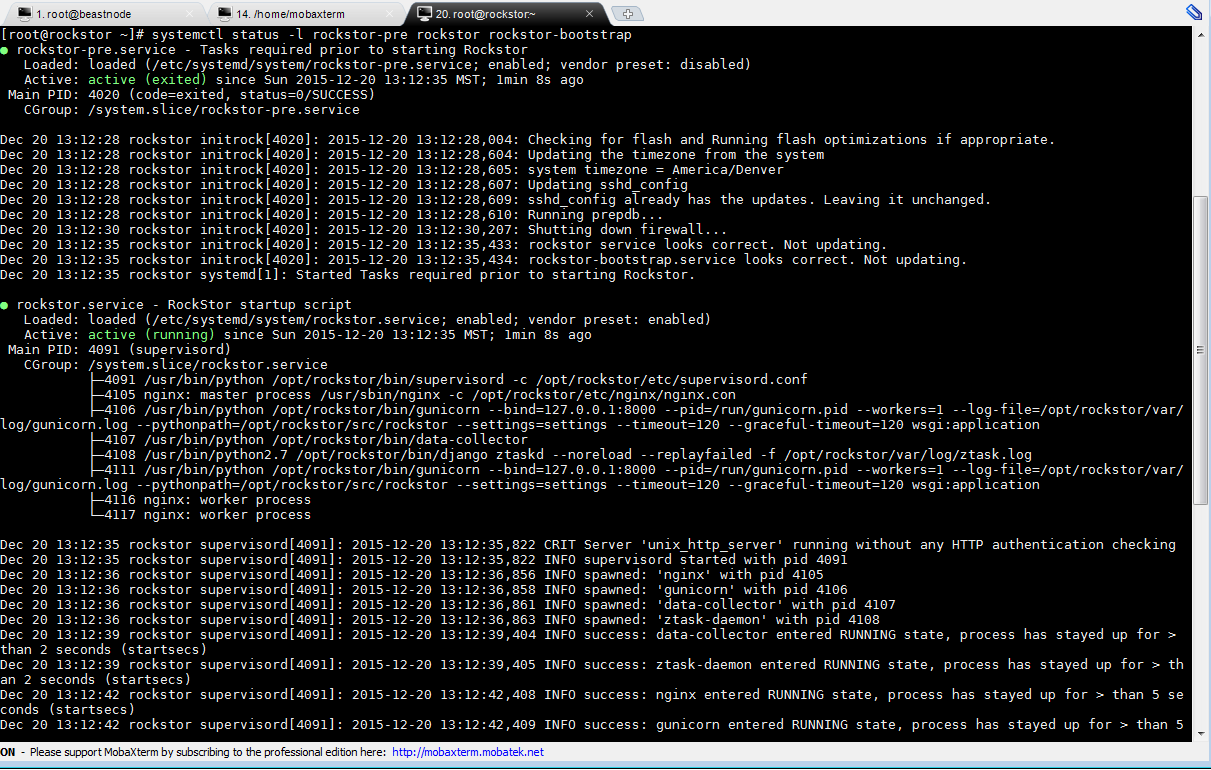

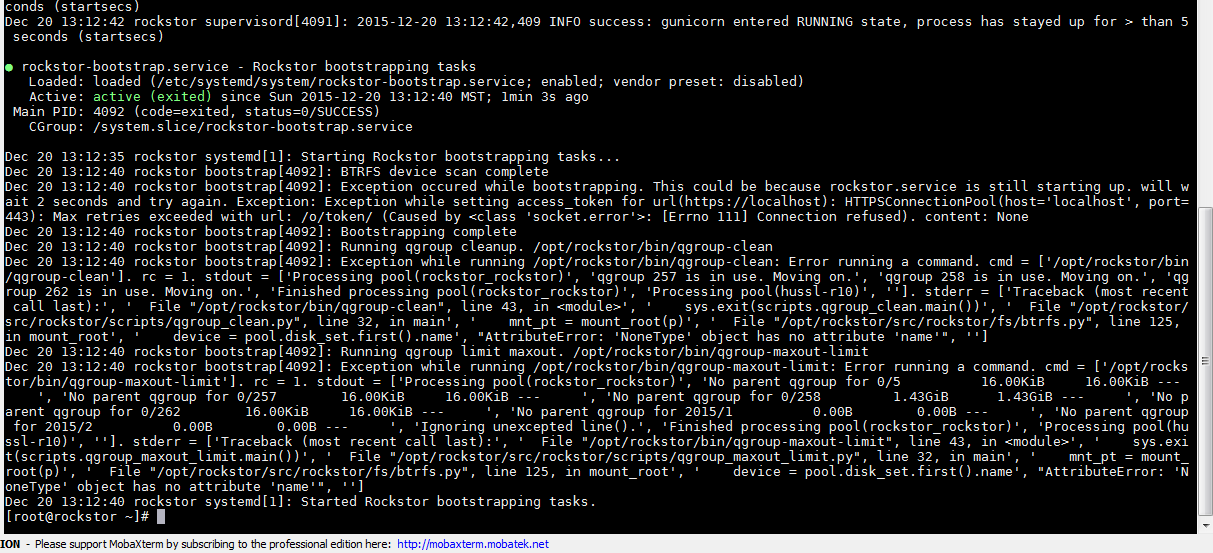

3.8-10.02 offers only “remove” because it can no longer see the drives: the lsblk output in your earlier post had no data drive entries. Your most recent post of the same lsblk command, by contrast, lists a bunch of them, and sure enough they show as connected in the Disks screen.
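A quick way to check whether the installed lsblk can see the data drives at all is to count its whole-disk entries. The sketch below assumes output from `lsblk -d -n -o NAME,TYPE` (no dependent devices, no header, just name and type columns); the `count_disks` function name is made up for this example. It reads stdin, so it works on live output or a pasted copy.

```shell
#!/bin/sh
# Sketch: count whole-disk entries in lsblk output.
# Expects "NAME TYPE" columns, e.g. from: lsblk -d -n -o NAME,TYPE
count_disks() {
    # Count rows whose TYPE column is "disk"; print 0 if there are none.
    awk '$2 == "disk" { n++ } END { print n + 0 }'
}
```

Usage: `lsblk -d -n -o NAME,TYPE | count_disks`. If this reports only the system disk, the data drives are invisible to lsblk, which would be consistent with Rockstor offering only “remove”.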

Nice formatting there, thanks.

So the options are:-

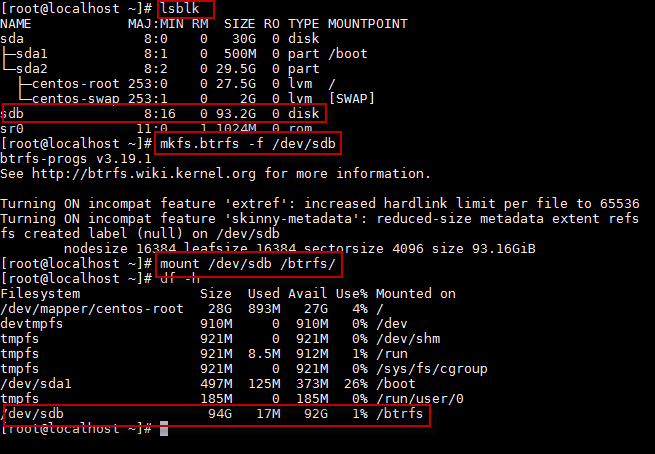

1:- Reconfigure the hypervisor (VMware) gubbins so that the drives show up with the updated lsblk command, as in your most recent post; Rockstor can then see them.

or

2:- Downgrade the installed lsblk to the prior version, which could see the drives as currently configured.

I’m afraid that is as far as I’ve got with this one. Option 2 seems like a real hack, so I would go with option 1, but I can’t help you there as I know next to nothing about VMware.

The changes between the lsblk version that worked and the one installed by the CentOS 7.2 (1511) update are available in this comparison of the two package versions. Note that the end of the comparison includes the spec file changes, where we can see the changelog. Ironically enough, two of the fixes are:-

“lsblk does not list dm-multipath and partitioned devices”

“blkid outputs nothing for some partitions (e.g. sun label)”
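The same changelog can also be read locally from the installed package with `rpm -q --changelog util-linux`. As a rough sketch, the filter below (the `lsblk_changes` name is made up for this example) keeps only the entries that mention lsblk or blkid, which makes fixes like the two above easier to spot.

```shell
#!/bin/sh
# Sketch: keep only changelog lines that mention lsblk or blkid.
# Reads changelog text on stdin, e.g. from: rpm -q --changelog util-linux
lsblk_changes() {
    grep -i -E 'lsblk|blkid'
}
```

Usage: `rpm -q --changelog util-linux | lsblk_changes`.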

No, I too haven’t come across such touchy drives; strange. Maybe they are just plain faulty, hence the multitude of systems that have had trouble detecting them.

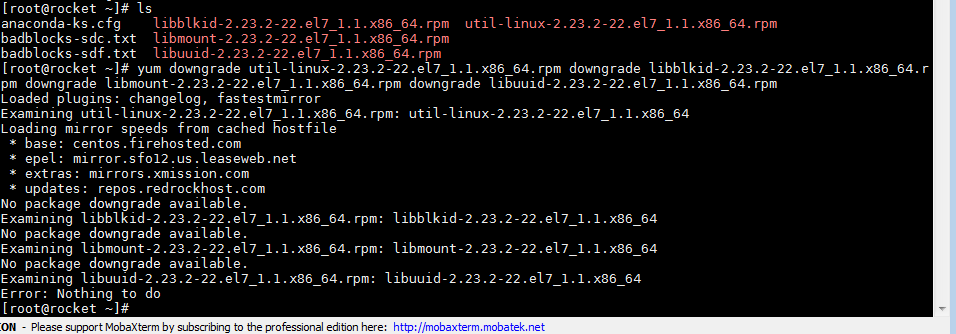

So here goes with the hacky workaround. If the new lsblk can’t see your drives and you can’t use another configuration to present them, then I’m not sure what else there is.

In case you want to try the lsblk downgrade (in 3.8-10.02), which I don’t recommend, here are the downloads for util-linux (the package that provides lsblk), its dependencies, and the command to install them:-

```shell
# util-linux downgrade to CentOS 7.1.1503 version:-

# https://www.rpmfind.net/linux/RPM/centos/updates/7.1.1503/x86_64/Packages/util-linux-2.23.2-22.el7_1.1.x86_64.html
curl -O ftp://195.220.108.108/linux/centos/7.1.1503/updates/x86_64/Packages/util-linux-2.23.2-22.el7_1.1.x86_64.rpm

# https://www.rpmfind.net/linux/RPM/centos/updates/7.1.1503/x86_64/Packages/libblkid-2.23.2-22.el7_1.1.x86_64.html
curl -O ftp://195.220.108.108/linux/centos/7.1.1503/updates/x86_64/Packages/libblkid-2.23.2-22.el7_1.1.x86_64.rpm

# https://www.rpmfind.net/linux/RPM/centos/updates/7.1.1503/x86_64/Packages/libmount-2.23.2-22.el7_1.1.x86_64.html
curl -O ftp://195.220.108.108/linux/centos/7.1.1503/updates/x86_64/Packages/libmount-2.23.2-22.el7_1.1.x86_64.rpm

# https://www.rpmfind.net/linux/RPM/centos/updates/7.1.1503/x86_64/Packages/libuuid-2.23.2-22.el7_1.1.x86_64.html
curl -O ftp://195.220.108.108/linux/centos/7.1.1503/updates/x86_64/Packages/libuuid-2.23.2-22.el7_1.1.x86_64.rpm
```
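Since the four downloads differ only in the package name, they can be scripted. This is just a sketch: the mirror path and version string are copied from the curl commands above, while the `pkg_url` and `fetch_all` helper names are made up for this example. Whether that mirror still serves these files is not guaranteed.

```shell
#!/bin/sh
# Sketch: fetch the four CentOS 7.1.1503 packages from the mirror used above.
BASE='ftp://195.220.108.108/linux/centos/7.1.1503/updates/x86_64/Packages'
VER='2.23.2-22.el7_1.1.x86_64'

# Build the full .rpm URL for one package name.
pkg_url() {
    printf '%s/%s-%s.rpm\n' "$BASE" "$1" "$VER"
}

# Download all four packages into the current directory; stop on the first failure.
fetch_all() {
    for p in util-linux libblkid libmount libuuid; do
        curl -O "$(pkg_url "$p")" || return 1
    done
}
```

Usage: `cd /root && fetch_all`.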

Once you have all of these (say, in /root), the following command lets you take your chances and change the legs you are standing on:-

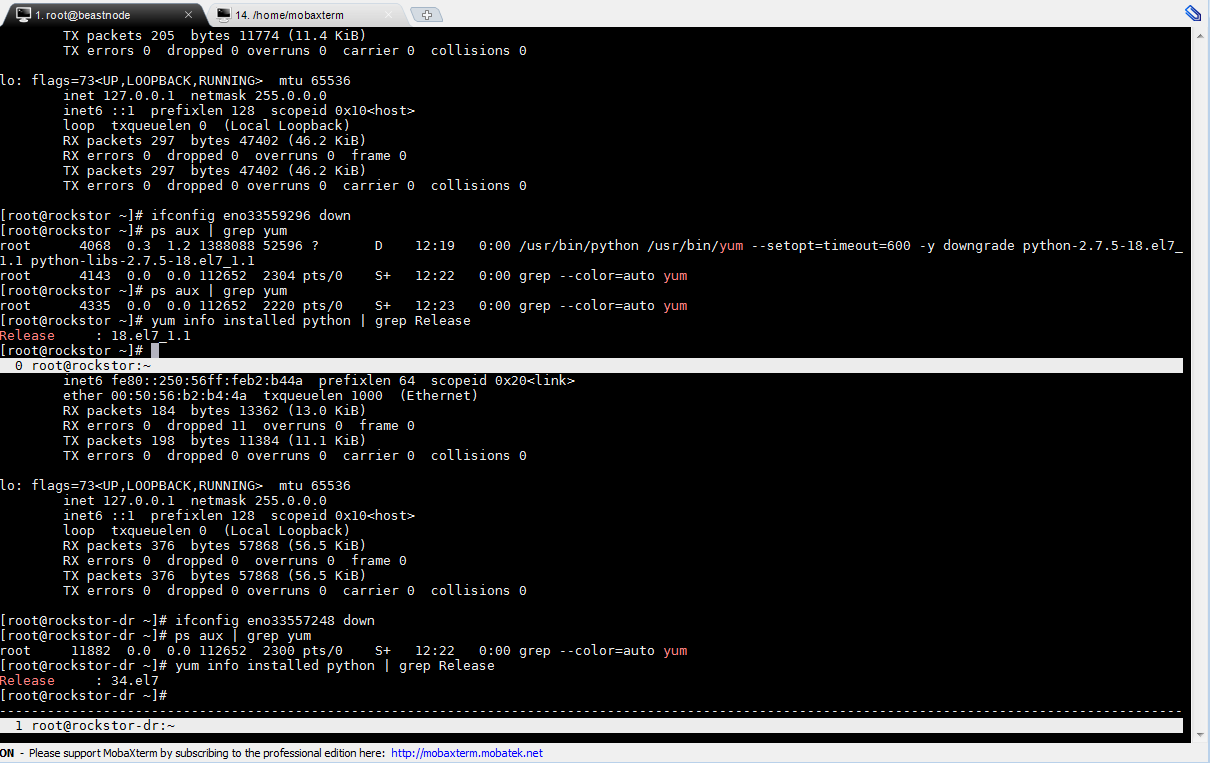

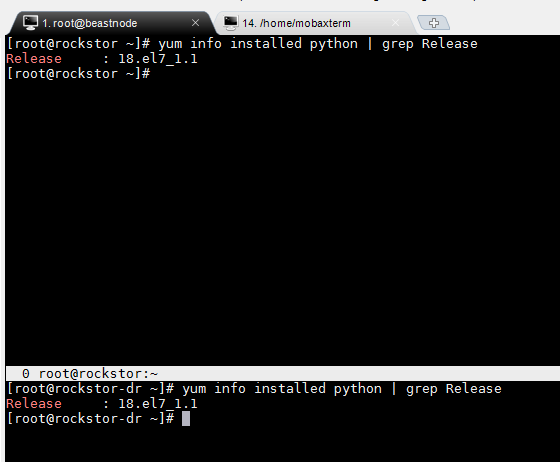

```shell
yum downgrade util-linux-2.23.2-22.el7_1.1.x86_64.rpm libblkid-2.23.2-22.el7_1.1.x86_64.rpm libmount-2.23.2-22.el7_1.1.x86_64.rpm libuuid-2.23.2-22.el7_1.1.x86_64.rpm
```

Note that my rather thin understanding of yum/rpm is that these packages will be upgraded back to their current (for you, non-working) versions with the next update. So you will have to exclude them from being updated, i.e. via an exclude=packagename entry in the relevant repo listing. See man 5 yum.conf.

N.B. I haven’t tried this, but from that man page it looks like you will need:-

exclude=util-linux libblkid libmount libuuid

in the `[main]` section of /etc/yum.conf.
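If you would rather script that edit, here is an untested sketch. It assumes GNU sed (for `-i` and the one-line `a` command) and a yum.conf with a `[main]` section; the `add_yum_exclude` name is made up for this example. It backs up the file first and refuses to touch a file that already has an `exclude=` line, since that one should be extended by hand instead.

```shell
#!/bin/sh
# Untested sketch: insert the exclude line right after [main] in a yum.conf.
add_yum_exclude() {
    conf=${1:-/etc/yum.conf}
    line='exclude=util-linux libblkid libmount libuuid'
    # An existing exclude= line should be edited by hand, not duplicated.
    if grep -q '^exclude=' "$conf"; then
        echo "$conf already has an exclude= line; edit it by hand" >&2
        return 1
    fi
    cp "$conf" "$conf.bak"   # keep a copy of the original
    # GNU sed: append the line after the [main] header.
    sed -i "/^\[main\]/a $line" "$conf"
}
```

Usage: `add_yum_exclude` (defaults to /etc/yum.conf) or `add_yum_exclude /path/to/yum.conf`.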

After the downgrade you will have to reboot to begin using the downgraded versions.
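After the reboot, it is worth confirming that all four packages really are at the downgraded version before judging the result. A sketch, assuming the `rpm -q --qf` query shown in the comments; the `check_downgraded` function name is made up for this example.

```shell
#!/bin/sh
# Sketch: report any package not at the downgraded 2.23.2-22.el7_1.1 version.
# Reads "NAME VERSION-RELEASE" lines on stdin, e.g. from:
#   rpm -q --qf '%{NAME} %{VERSION}-%{RELEASE}\n' util-linux libblkid libmount libuuid
check_downgraded() {
    awk -v want='2.23.2-22.el7_1.1' '
        $2 != want { print $1 " is at " $2 ", expected " want; bad = 1 }
        END { exit bad }'
}
```

If it prints nothing and exits 0, run `lsblk` again and see whether the data drives are back.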

Again, please don’t do this on a production server; but if there is nothing at risk, this may very well prove the issue.

If anyone has a nicer way to do this, that would be great; sorry for being such a hack.

My bad/misunderstanding.