Further information:

```python
>>> PoolScrub.objects.all().values()
<QuerySet [{'id': 1, 'pool_id': 1, 'status': 'unknown', 'pid': 15628, 'start_time': datetime.datetime(2024, 7, 7, 21, 16, 56, 266612, tzinfo=datetime.timezone.utc), 'end_time': None, 'time_left': 0, 'eta': None, 'rate': '', 'kb_scrubbed': None, 'data_extents_scrubbed': 0, 'tree_extents_scrubbed': 0, 'tree_bytes_scrubbed': 0, 'read_errors': 0, 'csum_errors': 0, 'verify_errors': 0, 'no_csum': 0, 'csum_discards': 0, 'super_errors': 0, 'malloc_errors': 0, 'uncorrectable_errors': 0, 'unverified_errors': 0, 'corrected_errors': 0, 'last_physical': 0}]>
>>> returned = scrub_status_raw(mnt_pt="/mnt2/main_pool")
>>> returned
{'status': 'finished', 'duration': 0, 'data_extents_scrubbed': 0, 'tree_extents_scrubbed': 12, 'kb_scrubbed': 0.0, 'tree_bytes_scrubbed': 196608, 'read_errors': 0, 'csum_errors': 0, 'verify_errors': 0, 'no_csum': 0, 'csum_discards': 0, 'super_errors': 0, 'malloc_errors': 0, 'uncorrectable_errors': 0, 'unverified_errors': 0, 'corrected_errors': 0, 'last_physical': 22020096}
```

and:

```
rockdev:~ # btrfs scrub status -R /mnt2/main_pool
UUID: 900e4fca-a18a-4dca-8350-4bb90e88c466
Scrub started: Sun Jul 7 17:16:56 2024
Status: finished
Duration: 0:00:00
data_extents_scrubbed: 0
tree_extents_scrubbed: 12
data_bytes_scrubbed: 0
tree_bytes_scrubbed: 196608
read_errors: 0
csum_errors: 0
verify_errors: 0
no_csum: 0
csum_discards: 0
super_errors: 0
malloc_errors: 0
uncorrectable_errors: 0
unverified_errors: 0
corrected_errors: 0
last_physical: 22020096
```
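For reference, the raw `-R` output is a simple list of `key: value` lines, so a "finished" status is easy to pick out of it. Below is a minimal sketch of that parsing, assuming only the line format shown above; `parse_scrub_status` is a hypothetical helper for illustration, not Rockstor's actual `scrub_status_raw()` implementation, and the sample is a trimmed copy of the output above.

```python
# Trimmed copy of the `btrfs scrub status -R /mnt2/main_pool` output above.
SAMPLE = """\
UUID: 900e4fca-a18a-4dca-8350-4bb90e88c466
Scrub started: Sun Jul 7 17:16:56 2024
Status: finished
Duration: 0:00:00
data_extents_scrubbed: 0
tree_extents_scrubbed: 12
data_bytes_scrubbed: 0
tree_bytes_scrubbed: 196608
read_errors: 0
last_physical: 22020096
"""


def parse_scrub_status(text):
    """Hypothetical helper: turn 'key: value' lines into a dict.

    Splits only on the first colon, so values such as '0:00:00' or the
    'Scrub started' timestamp are kept intact.
    """
    result = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # ignore lines without a 'key: value' shape
        key = key.strip().lower().replace(" ", "_")
        value = value.strip()
        # Purely numeric counters become ints; everything else stays a string.
        result[key] = int(value) if value.isdigit() else value
    return result


parsed = parse_scrub_status(SAMPLE)
print(parsed["status"], parsed["tree_extents_scrubbed"])
```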

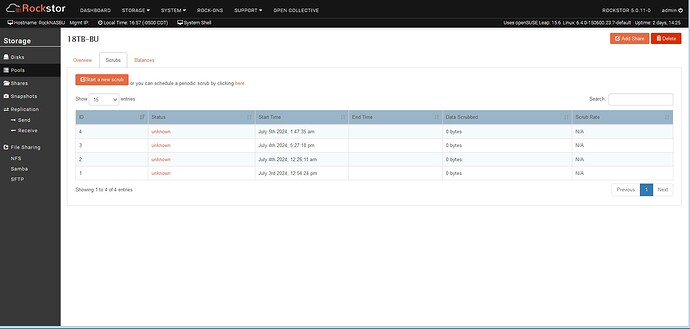

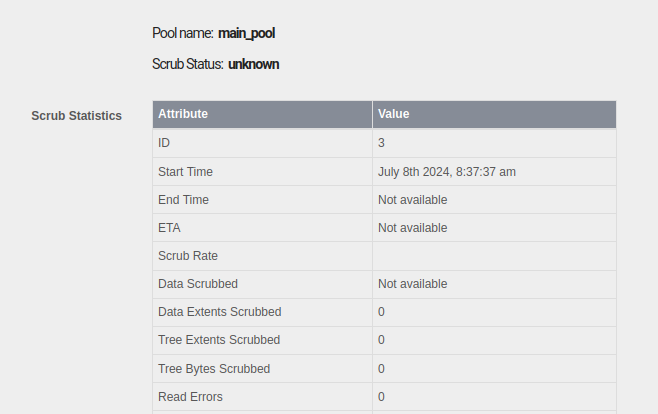

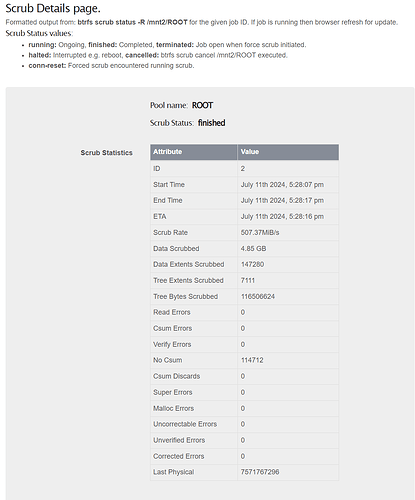

`scrub_status_raw()` does seem to parse the scrub “details” correctly (it returns `'status': 'finished'`), but the stored `PoolScrub` status remains “unknown”.
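To make the mismatch explicit, the stored record and the parsed result can be compared field by field. This is a small sketch using a subset of the values copied from the shell session above; `field_differences` is a hypothetical helper, not part of Rockstor. In those values the stored counters (e.g. `tree_extents_scrubbed`, `last_physical`) also still hold their initial values, not only `status`.

```python
# Subset of the PoolScrub record values shown above.
stored = {
    "status": "unknown",
    "end_time": None,
    "kb_scrubbed": None,
    "data_extents_scrubbed": 0,
    "tree_extents_scrubbed": 0,
    "tree_bytes_scrubbed": 0,
    "last_physical": 0,
}

# Subset of the scrub_status_raw() result shown above.
parsed = {
    "status": "finished",
    "duration": 0,
    "data_extents_scrubbed": 0,
    "tree_extents_scrubbed": 12,
    "kb_scrubbed": 0.0,
    "tree_bytes_scrubbed": 196608,
    "last_physical": 22020096,
}


def field_differences(stored, parsed):
    """Hypothetical helper: {field: (stored, parsed)} for shared keys whose values differ."""
    return {
        key: (stored[key], parsed[key])
        for key in stored.keys() & parsed.keys()
        if stored[key] != parsed[key]
    }


for field, (old, new) in sorted(field_differences(stored, parsed).items()):
    print(f"{field}: stored={old!r} parsed={new!r}")
```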
