OK, this is scary weird, but I want to try explaining what is happening, because there may be valid reasons for it; I just don't have the skill or the experience to find them myself. So here we go:

My main system is a Windows 11 machine running a certain application which, at startup, fetches various XML files that build the content of the application's sub-menus (there are 20-ish directories, each containing an XML file that stores just some basic metadata about the files inside that directory).

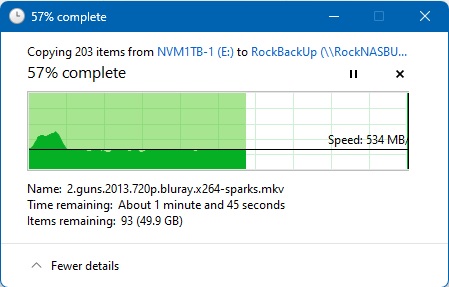

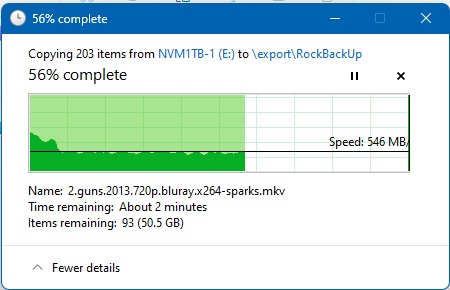

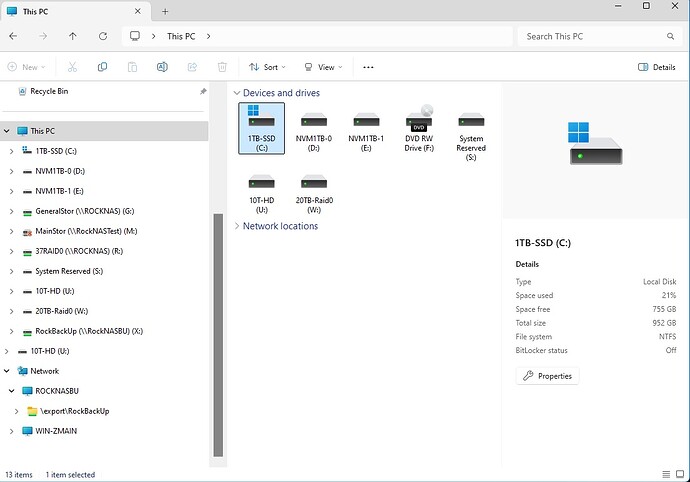

These directories, and consequently every XML file, are stored in my shared Rockstor pool. Rockstor runs on a 3rd-gen i3 CPU with 16 GB of DDR3 RAM, a cheap 128 GB SSD where Rockstor is installed, another cheap 128 GB SSD configured as a cache disk, and a couple of 3 TB Western Digital Red (NASware) 5400 rpm drives configured in RAID1.

Rockstor exposes the shared data over both NFS and SMB, and my main Windows 11 system, where the application runs, mounts the Rockstor share via NFS. In this setup the application takes roughly 100 seconds to launch. That was not really acceptable to me, especially because I remember it used to load much faster, and I couldn't understand what was wrong. I tried to think back over the past weeks to see whether I had changed something somewhere, but I couldn't recall anything, so I started to suspect that my Rockstor system had somehow degraded (maybe failing disks or something like that). I benchmarked everything, but I couldn't find any problem with Rockstor's performance.

And here we get to the point: among all the experiments I did, I tried mounting the same share on the same system ALSO via SMB, so that I now have “Z:\blablabla” and “Y:\blablabla”: two identical mapped network drives that differ only in their access protocol (Z: is mounted via NFS, while Y: is mounted via SMB). I relaunched the application, still configured to read those XML files from Z: (NFS), and it started in 20 seconds (the same time it used to take!). I then changed the application settings to use Y: (SMB) as the directory to fetch the XML files from, and again it started in 20 seconds.
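If anyone wants to reproduce the comparison outside the application, a rough sketch like the following could time a plain read of every XML file under each mount. This is a hypothetical helper, not part of the application, and it assumes Python 3 is installed on the Windows machine; the paths are just the placeholder drive letters from above. Note that the directory walk itself also exercises metadata lookups on the share, and a second run may be served from the client's cache, so the first run after mounting is probably the more telling one.

```python
import sys
import time
from pathlib import Path


def time_xml_reads(root):
    """Walk root recursively, read every *.xml file once.

    Returns (file_count, total_bytes, elapsed_seconds). The timing includes
    the directory walk, not just the file reads.
    """
    start = time.perf_counter()
    count = 0
    total = 0
    for path in Path(root).rglob("*.xml"):
        total += len(path.read_bytes())
        count += 1
    elapsed = time.perf_counter() - start
    return count, total, elapsed


if __name__ == "__main__":
    # Hypothetical usage: python time_xml.py "Z:\blablabla" "Y:\blablabla"
    if len(sys.argv) < 2:
        print("usage: time_xml.py DIR [DIR ...]")
    for root in sys.argv[1:]:
        count, total, secs = time_xml_reads(root)
        print(f"{root}: {count} files, {total} bytes in {secs:.2f} s")
```

Running it once with only the NFS drive mapped, and again with both drives mapped, would show whether the difference is in file access itself or somewhere in the application.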

Now the big question is: why in the world would mounting the same share twice, once via NFS and once via SMB, improve my Rockstor disk access times so much?

Sorry for writing so much, but I thought this could be interesting for someone to investigate. I am completely at your disposal if you want me to try, test, and experiment with any procedures, for academic purposes xD.

Thanks for your patience and your interest!