
Brief description of the problem

(Please note - if there is no means to recover the array, I can reinstall Rockstor and start over; I’m not in production yet. However, if this isn’t recoverable, then I’m hesitant to proceed with btrfs.)

A drive in a RAID1 array is failing (or rather, the file system has an error). From /var/log/messages:

Apr 14 16:18:50 rockstor kernel: BTRFS info (device sda): use lzo compression

Apr 14 16:18:50 rockstor kernel: BTRFS info (device sda): disk space caching is enabled

Apr 14 16:18:50 rockstor kernel: BTRFS info (device sda): has skinny extents

Apr 14 16:18:50 rockstor kernel: BTRFS error (device sda): failed to read chunk tree: -5

Apr 14 16:18:50 rockstor kernel: BTRFS error (device sda): open_ctree failed

Apr 14 16:19:51 rockstor kernel: BTRFS info (device sda): use lzo compression

Apr 14 16:19:51 rockstor kernel: BTRFS info (device sda): disk space caching is enabled

Apr 14 16:19:51 rockstor kernel: BTRFS info (device sda): has skinny extents

Apr 14 16:19:51 rockstor kernel: BTRFS error (device sda): failed to read chunk tree: -5

Apr 14 16:19:51 rockstor kernel: BTRFS error (device sda): open_ctree failed

etc. ad nauseam.
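Since the same messages repeat on every mount attempt, here is a minimal sketch (my own helper, not part of Rockstor) that collapses the repeats into counts and names the trailing error code. It assumes the line format shown above; `summarize_btrfs_errors` and `errno_name` are hypothetical names, and `sample` is just a few of the lines quoted above.

```python
import errno
import re
from collections import Counter

# Matches kernel lines such as:
#   Apr 14 16:18:50 rockstor kernel: BTRFS error (device sda): open_ctree failed
BTRFS_LINE = re.compile(
    r"kernel: BTRFS (?P<level>\w+) \(device (?P<dev>\w+)\): (?P<msg>.*)$"
)


def summarize_btrfs_errors(lines):
    """Count distinct BTRFS 'error' messages per device (hypothetical helper)."""
    counts = Counter()
    for line in lines:
        m = BTRFS_LINE.search(line.strip())
        if m and m.group("level") == "error":
            counts[(m.group("dev"), m.group("msg"))] += 1
    return counts


def errno_name(message):
    """Return the symbolic errno for a trailing ': -N' code, or None."""
    m = re.search(r": -(\d+)$", message)
    if m is None:
        return None
    return errno.errorcode.get(int(m.group(1)))


# A few of the lines from /var/log/messages quoted above.
sample = [
    "Apr 14 16:18:50 rockstor kernel: BTRFS info (device sda): has skinny extents",
    "Apr 14 16:18:50 rockstor kernel: BTRFS error (device sda): failed to read chunk tree: -5",
    "Apr 14 16:18:50 rockstor kernel: BTRFS error (device sda): open_ctree failed",
    "Apr 14 16:19:51 rockstor kernel: BTRFS error (device sda): failed to read chunk tree: -5",
    "Apr 14 16:19:51 rockstor kernel: BTRFS error (device sda): open_ctree failed",
]

for (dev, msg), n in summarize_btrfs_errors(sample).items():
    print(dev, repr(msg), "x%d" % n, errno_name(msg) or "")
```

Read this way, the `-5` in "failed to read chunk tree: -5" is errno 5 (`EIO`, an I/O error), and `open_ctree failed` is the mount attempt giving up as a result.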

The pool is unmounted. I can click the pool in the UI, but ‘Resize’ is not offered as an option (I had hoped to use it to remove the failing drive from the array).

Detailed step by step instructions to reproduce the problem

This morning, I rebooted to try installing Rockstor 4. I noted that /dev/sda was not listed as a potential install target (which is fine, since I did not intend to install to it anyway). On seeing an error, I booted back into Rockstor 3, and this is the current state.

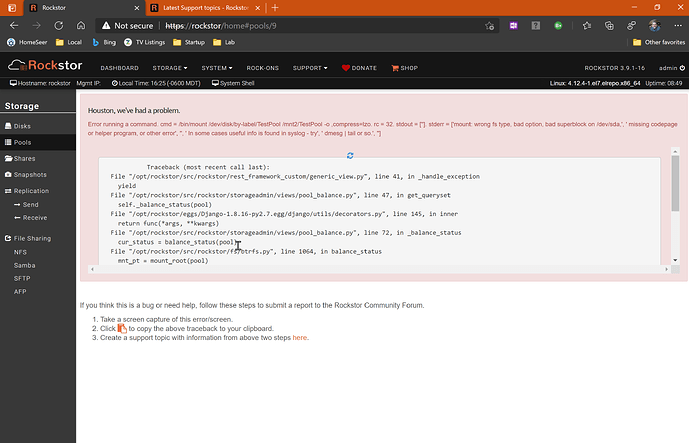

Web-UI screenshot


Error Traceback provided on the Web-UI

Traceback (most recent call last):

File "/opt/rockstor/src/rockstor/rest_framework_custom/generic_view.py", line 41, in _handle_exception

yield

File "/opt/rockstor/src/rockstor/storageadmin/views/pool_balance.py", line 47, in get_queryset

self._balance_status(pool)

File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner

return func(*args, **kwargs)

File "/opt/rockstor/src/rockstor/storageadmin/views/pool_balance.py", line 72, in _balance_status

cur_status = balance_status(pool)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 1064, in balance_status

mnt_pt = mount_root(pool)

File "/opt/rockstor/src/rockstor/fs/btrfs.py", line 253, in mount_root

run_command(mnt_cmd)

File "/opt/rockstor/src/rockstor/system/osi.py", line 121, in run_command

raise CommandException(cmd, out, err, rc)

CommandException: Error running a command. cmd = /bin/mount /dev/disk/by-label/TestPool /mnt2/TestPool -o ,compress=lzo. rc = 32. stdout = ['']. stderr = ['mount: wrong fs type, bad option, bad superblock on /dev/sda,', ' missing codepage or helper program, or other error', '', ' In some cases useful info is found in syslog - try', ' dmesg | tail or so.', '']
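For reference, a minimal sketch of how the `rc = 32` in the exception above can be decoded. The bit meanings are taken from the exit-status table in the mount(8) man page; `decode_mount_rc` is a hypothetical helper of mine, not something in Rockstor.

```python
# Exit-status bits as documented in the mount(8) man page.
# The values can be ORed together, so the code is treated as a bitmask.
MOUNT_EXIT_BITS = {
    1: "incorrect invocation or permissions",
    2: "system error (out of memory, cannot fork, no more loop devices)",
    4: "internal mount bug",
    8: "user interrupt",
    16: "problems writing or locking /etc/mtab",
    32: "mount failure",
    64: "some mount succeeded",
}


def decode_mount_rc(rc):
    """Translate a mount(8) exit status into its documented meanings."""
    if rc == 0:
        return ["success"]
    return [text for bit, text in sorted(MOUNT_EXIT_BITS.items()) if rc & bit]


print(decode_mount_rc(32))
```

So `rc = 32` only says that the mount itself failed; the actual cause is in the kernel log lines quoted earlier, not in the Web-UI traceback.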