
Brief description of the problem

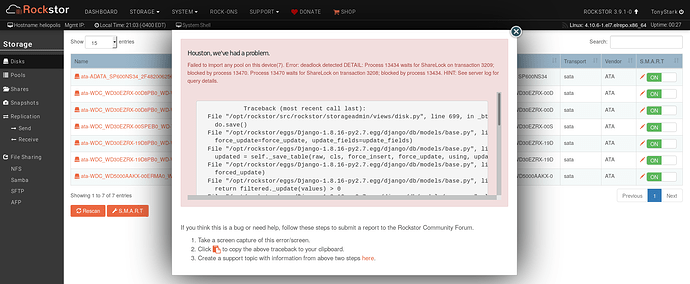

Failed to import array on a fresh install

Detailed step by step instructions to reproduce the problem

Install Rockstor on a drive and reboot twice. Then, while the machine is powered down, install/connect your old btrfs array. After booting again, open the web UI over HTTP and use Import; the error below then appears.

Web-UI screenshot

Error Traceback provided on the Web-UI

    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/storageadmin/views/disk.py", line 699, in _btrfs_disk_import
        do.save()
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/base.py", line 734, in save
        force_update=force_update, update_fields=update_fields)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/base.py", line 762, in save_base
        updated = self._save_table(raw, cls, force_insert, force_update, using, update_fields)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/base.py", line 827, in _save_table
        forced_update)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/base.py", line 877, in _do_update
        return filtered._update(values) > 0
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/query.py", line 580, in _update
        return query.get_compiler(self.db).execute_sql(CURSOR)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/sql/compiler.py", line 1062, in execute_sql
        cursor = super(SQLUpdateCompiler, self).execute_sql(result_type)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/models/sql/compiler.py", line 840, in execute_sql
        cursor.execute(sql, params)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/backends/utils.py", line 64, in execute
        return self.cursor.execute(sql, params)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/utils.py", line 98, in __exit__
        six.reraise(dj_exc_type, dj_exc_value, traceback)
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/db/backends/utils.py", line 64, in execute
        return self.cursor.execute(sql, params)
    OperationalError: deadlock detected
    DETAIL: Process 13434 waits for ShareLock on transaction 3209; blocked by process 13470.
    Process 13470 waits for ShareLock on transaction 3208; blocked by process 13434.
    HINT: See server log for query details.
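
In case it helps whoever picks this up: the two DETAIL lines of the error above describe a wait cycle, where each database process is blocked by the other. Below is a minimal sketch that only parses those two lines and confirms the cycle. It is not Rockstor code; the names `parse_waits` and `find_cycle` are made up for illustration, and it says nothing about which queries were involved (that would need the server log mentioned in the HINT).

```python
import re

# The two DETAIL lines from the error above, copied verbatim.
DETAIL = """\
Process 13434 waits for ShareLock on transaction 3209; blocked by process 13470.
Process 13470 waits for ShareLock on transaction 3208; blocked by process 13434.
"""

# Matches one "Process A waits for ... blocked by process B." line.
LINE_RE = re.compile(
    r"Process (\d+) waits for (\w+) on transaction (\d+); blocked by process (\d+)\."
)


def parse_waits(detail):
    """Return {waiting_pid: blocking_pid} for every line that matches."""
    waits = {}
    for match in LINE_RE.finditer(detail):
        waiter, _lock, _txn, blocker = match.groups()
        waits[int(waiter)] = int(blocker)
    return waits


def find_cycle(waits):
    """Follow the waits-for edges; return the pids on a cycle, or []."""
    for start in waits:
        path = [start]
        current = waits.get(start)
        while current is not None and current not in path:
            path.append(current)
            current = waits.get(current)
        if current is not None:
            # We came back to a pid already on the path: that is the cycle.
            return path[path.index(current):]
    return []


waits = parse_waits(DETAIL)
cycle = find_cycle(waits)
print(waits)
print(cycle)
```

Here the cycle contains both processes, 13434 and 13470, which matches the "deadlock detected" message: neither can proceed until the other finishes.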