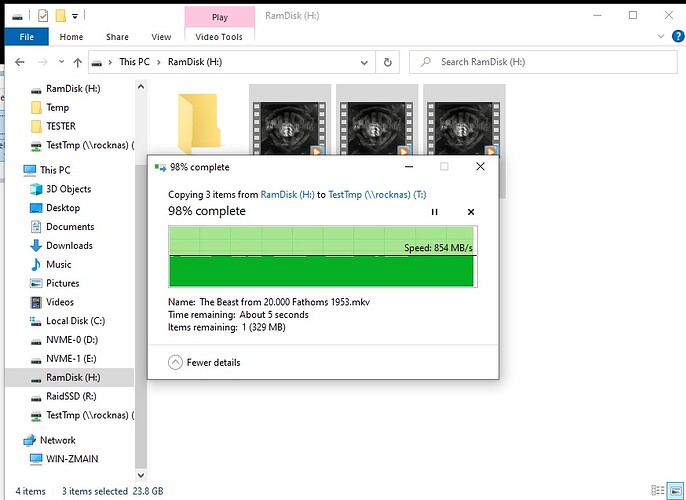

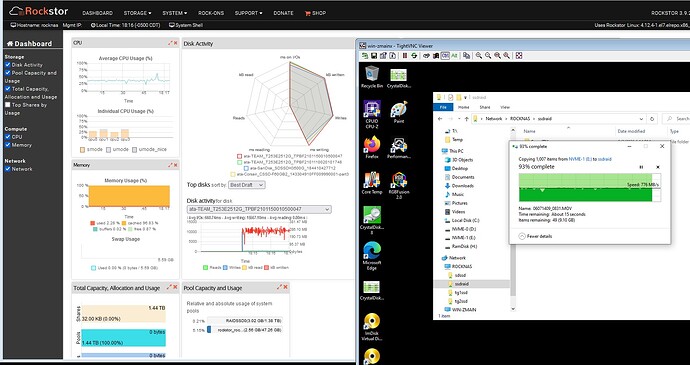

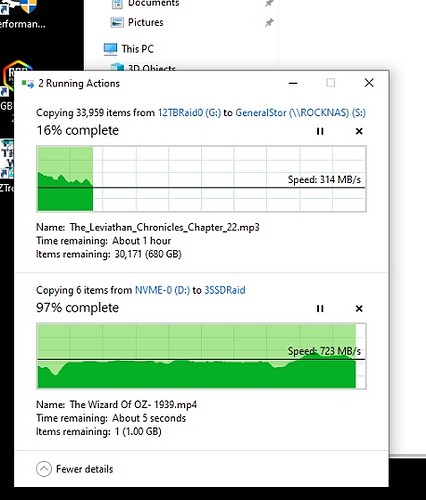

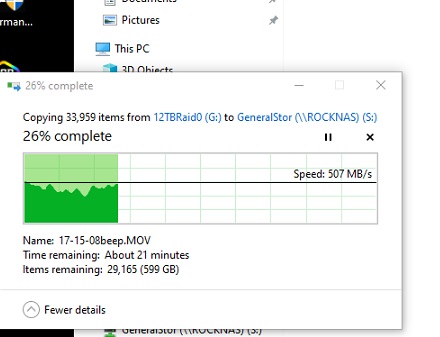

For weeks I have been chasing transfer speed fluctuations on my 10G fiber network, and I discovered a few things.

To narrow things down, I disconnected all drives in my NAS and set up only two fast SSDs in RAID-0.

-

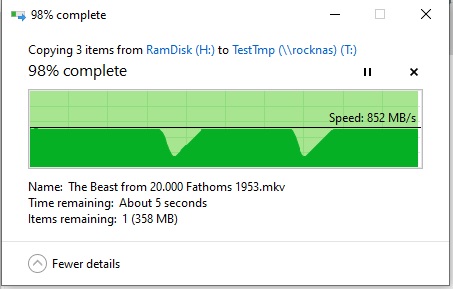

The 4.5GHz 3570K and i7-5930K setups were fastest (1+ GB/s), with the 5930K being the most consistent. The R9-5950X setup only runs the network at up to 850 MB/s for some reason.

-

When copying 240GB of files from an NVMe drive or RAM disk, the RockNas setup would consistently slow to near zero, as described in another post. (Tested on the 5950X; same results as the 5930K.)

-

Installing Windows 10 on the RockNas setup hurt things rather than helped! Go figure!

-

After installing LinuxMint 20.4 and setting up a shared 1TB SSD RAID-0 Ext4 volume, I got much clearer results. These tests were repeated dozens of times in both directions between the NAS and the 5930K/5950X setups, using Rockstor (in previous testing, in the other thread) and the same hardware with LM 20.4.

In all this recent testing, 3 copies of an 8GB file were used.

I reached the following results:

Whether a transfer of files (<33G total) to the NAS hardware, running either Rockstor or LinuxMint 20.4, comes out CLEAN depends on whether or not there are existing files on the destination drives.

EMPTY Destination Drive:

Overwriting Existing Files:

When overwriting files on the RAID-0 Ext4 setup, there was a slight lag at the start of the transfer, then two more dips in speed. In very LARGE transfers, these dips start happening once the 32G of local RAM fills up.
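For anyone who wants to put numbers on dips like these instead of eyeballing the transfer graph, here is a minimal sketch in Python. It assumes you already have cumulative (seconds, bytes copied) samples from whatever tool you use to log the copy. The function names, the 50% threshold, and the sample values are all made up for illustration and are not measurements from my tests.

```python
from typing import List, Tuple


def interval_rates(samples: List[Tuple[float, int]]) -> List[float]:
    """Turn cumulative (seconds, bytes_copied) samples into MB/s per interval."""
    rates = []
    for (t0, b0), (t1, b1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        rates.append((b1 - b0) / dt / 1e6)
    return rates


def find_dips(rates: List[float], fraction: float = 0.5) -> List[int]:
    """Return indices of intervals slower than `fraction` of the median rate."""
    if not rates:
        return []
    ordered = sorted(rates)
    median = ordered[len(ordered) // 2]
    return [i for i, r in enumerate(rates) if r < fraction * median]


# Hypothetical one-second samples (NOT real measurements): the copy runs at
# 1000 MB/s, stalls to 100 MB/s for one second, then recovers.
samples = [
    (0, 0),
    (1, 1_000_000_000),
    (2, 2_000_000_000),
    (3, 2_100_000_000),
    (4, 3_100_000_000),
]
rates = interval_rates(samples)
print(rates)             # per-interval MB/s
print(find_dips(rates))  # indices of the slow intervals
```

Comparing against the median rather than the peak keeps one lucky burst from making every other interval look like a dip.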

The results show there is nothing wrong with Rockstor using btrfs, or with the same hardware running Linux with Ext4 or Windows 10 (W10 being the worst). The fact is, there is significant overhead when overwriting files on SSDs during long sustained transfers, no matter the O/S in question.

It may be worse or better with other SSDs, but I saw this same behavior using two smaller SSDs of another brand.

Furthermore, the slower 850 MB/s speed on the AMD R9-5950X was “conditionally” traced to the bus interface of the Intel 10G NICs I am using. The Intel cards use PCIe-2.0 x8, and the R9-5950X motherboards can only run them at PCIe-2.0 x4. The solution to this problem will probably be an upgrade to a PCIe-3.0 x4 card so far as the AMD R9-5950X is concerned. The other 3570K/5930K setups support x8 and run at the full 1000 MB/s.
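To put rough numbers on the slot widths mentioned above, here is a small Python sketch of theoretical link bandwidth. The per-lane rates and encodings are the published PCIe 2.0 and 3.0 figures; the helper names are my own. These are raw ceilings before packet overhead, so real usable throughput is lower.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_link_mb_per_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in MB/s, before packet overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s * efficiency = usable Gbit/s per lane; /8 gives GB/s; *1000 gives MB/s.
    return gt_per_s * efficiency / 8 * 1000 * lanes


def ethernet_mb_per_s(gbps: float) -> float:
    """Raw Ethernet line rate in MB/s, before framing and protocol overhead."""
    return gbps * 1000 / 8


for gen, lanes in [("2.0", 4), ("2.0", 8), ("3.0", 4)]:
    print(f"PCIe {gen} x{lanes}: {pcie_link_mb_per_s(gen, lanes):.0f} MB/s")
print(f"10GbE line rate: {ethernet_mb_per_s(10):.0f} MB/s")
```

On paper even PCIe-2.0 x4 sits above the 10GbE line rate, which is part of why I only call the trace “conditional”: the narrower link is a suspect, not a proven cause, until a PCIe-3.0 x4 card is actually tested.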

Final thoughts: When the hardware can’t keep up with the network speed, it isn’t necessarily a hardware fault, or the operating system’s fault, or the “other guy’s” fault. It can be a combination of SSD TRIM functions, HD access/RW speed, cache usage and methods, the overall storage paradigm, all of the above and more.

My total setup would run without any glitches at 1Gbps and probably fine up to 5Gbps. It is only when I push it hard to 10Gbps that a little “Gotchya!” comes into play. The “Gotchya!” is complex in a large 240GB transfer of many different file types and sizes, which is why the intermittent drops from full speed seem unpredictable. BUT, I have proven at least two significant and repeatable setups where Mr. Murphy is paying close attention! That is enough for me to support the hypothesis.

Still can’t wait for Rockstor 4 to be released as an ISO file!

PS: Notable slowdowns also occur when directories and sub-directories are being created and when numerous small and tiny files are transferred, but at least we now know the SSDs themselves have something to do with it as well. It’s complex, but now understandable to me.