It's early, so I apologize in advance for any typos or incoherent thoughts. I will try to come back later today to revisit this and clean it up if needed.

This install is based on the Rockstor ISO v3.8-9, using a Mellanox MHGA28-XTC.

My assumption that a driver was present in this build was correct. It doesn't seem to be the Mellanox or OpenFabrics (OFED) driver package, though, since the tools they list for testing are not found. This led to some confusion on my part: the tests they suggested relied on those tools, so I thought the driver was not installed.

Below are the basics of what I have found so far. As I educate myself further on CentOS (and Linux in general), I will update this with changes. This is on an older system with a ConnectX card, but I don't see why it wouldn't apply to newer generations.

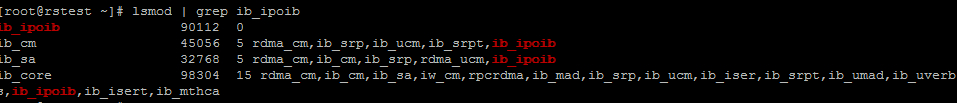

Verify that IPoIB is loaded:

```shell
lsmod | grep ib_ipoib
```
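The `lsmod` check above can also be scripted. This is a minimal sketch, assuming a POSIX shell: the hypothetical `ipoib_loaded` function reads `/proc/modules` (the file `lsmod` itself formats) and succeeds only if `ib_ipoib` is listed. The optional argument exists only so it can be pointed at a sample file.

```shell
# Hypothetical helper: succeed if the ib_ipoib module is loaded.
# /proc/modules lists one loaded module per line, with the module name first.
ipoib_loaded() {
    modules_file="${1:-/proc/modules}"
    grep -q '^ib_ipoib ' "$modules_file"
}
```

If `ipoib_loaded` returns non-zero, `modprobe ib_ipoib` (as root) should load the module, assuming it ships with the kernel. A driver compiled directly into the kernel would not appear in `/proc/modules`, so a failure here is not conclusive on its own.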

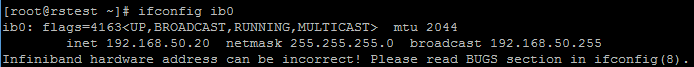

Check that the card was detected:

```shell
ifconfig ib0
```
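`ifconfig ib0` shows that the kernel created the IPoIB interface; the InfiniBand port state in sysfs additionally shows whether the link itself came up. A minimal sketch, assuming the standard `/sys/class/infiniband/<device>/ports/<port>/state` layout; the `ib_port_states` name and its optional root argument (used only to test against a fake directory tree) are hypothetical.

```shell
# Hypothetical helper: print the state of every InfiniBand port the kernel knows about.
# Each state file holds a line such as "4: ACTIVE".
ib_port_states() {
    root="${1:-}"
    found=1
    for state_file in "$root"/sys/class/infiniband/*/ports/*/state; do
        [ -r "$state_file" ] || continue   # the glob matched nothing
        port_dir=$(dirname "$state_file")
        port=$(basename "$port_dir")
        device=$(basename "$(dirname "$(dirname "$port_dir")")")
        printf '%s port %s: %s\n' "$device" "$port" "$(cat "$state_file")"
        found=0
    done
    return "$found"
}
```

`ACTIVE` means the port is up and has been configured by a subnet manager. A port stuck at `INIT` usually has a physical link but no subnet manager running anywhere on the fabric; with two hosts cabled directly together, one of them has to run one (for example opensm).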

Assign the port an IP address to test the connection:

```shell
ifconfig ib0 192.168.50.20
```
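On CentOS 7, `ifconfig` comes from the older net-tools package; the same temporary assignment can be made with iproute2's `ip` command. A minimal sketch, assuming the 255.255.255.0 (/24) netmask used in the boot configuration later in this post; the `ib_assign_ip` name and the `DRY_RUN` switch are hypothetical. Like `ifconfig`, this does not survive a reboot.

```shell
# Hypothetical helper: bring an IPoIB interface up and give it an address with iproute2.
# Set DRY_RUN=1 to print the commands instead of running them (running them needs root).
ib_assign_ip() {
    iface="$1"
    cidr="$2"    # address/prefix, e.g. 192.168.50.20/24
    run() {
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$*"
        else
            "$@"
        fi
    }
    run ip link set dev "$iface" up
    run ip addr add "$cidr" dev "$iface"
}
```

Usage, as root: `ib_assign_ip ib0 192.168.50.20/24`.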

Verify it was assigned properly:

```shell
ifconfig ib0
```

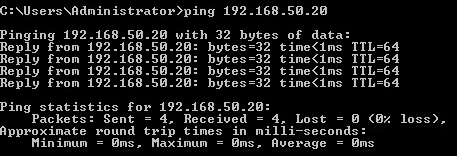

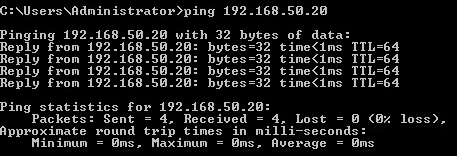

Verify the connection from another PC on the network via ping.
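For repeated testing, the ping check can be wrapped in a small function. A minimal sketch, assuming the other PC runs Linux with the iputils `ping` (where `-c` is the packet count and `-W` the reply timeout in seconds); Windows `ping` uses different flags. The `check_reachable` name is hypothetical.

```shell
# Hypothetical helper, run on the other PC: send three pings to the NAS's
# IPoIB address and report whether any reply came back.
check_reachable() {
    host="$1"
    if ping -c 3 -W 2 "$host" >/dev/null 2>&1; then
        echo "$host is reachable"
    else
        echo "$host did not answer" >&2
        return 1
    fi
}
```

Usage: `check_reachable 192.168.50.20`.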

Configure ib0 to come up on boot (I'm still not sure this is 100% right, and I'm sure it needs tweaking to get the performance up). Change the IPs/domain to reflect your network:

```shell
vi /etc/sysconfig/network-scripts/ifcfg-ib0
```

```
NAME="ib0"
TYPE="InfiniBand"
BOOTPROTO="static"
ONBOOT="yes"
IPADDR0="192.168.50.20"
NETMASK="255.255.255.0"
GATEWAY0="192.168.50.2"
DNS1="192.168.50.2"
DOMAIN=infiniband.local
```

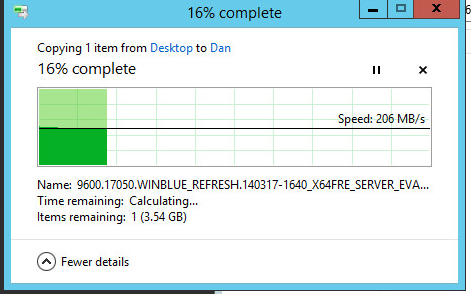

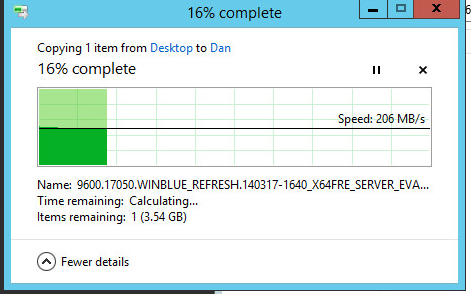
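When an ifcfg file is copied from a web page, typographic quotes (“ ”) can sneak in. They are not shell quotes, so a value like `“yes”` is not the same as `yes`. A minimal sketch of a check, assuming a POSIX shell and `grep`; the `check_ifcfg` name is hypothetical, and it only looks for curly quotes and an `ONBOOT=yes` line, not for every valid setting.

```shell
# Hypothetical helper: sanity-check an ifcfg file before rebooting.
check_ifcfg() {
    file="$1"
    status=0
    # Curly quotes are not shell quotes, so they would end up inside the values.
    if grep -qF -e '“' -e '”' -e '‘' -e '’' "$file"; then
        echo "$file: typographic quotes found, replace them with plain \" quotes" >&2
        status=1
    fi
    # ONBOOT must be yes (quoted or not) for the interface to come up at boot.
    if ! grep -Eq '^ONBOOT="?yes"?$' "$file"; then
        echo "$file: ONBOOT is not set to yes" >&2
        status=1
    fi
    return "$status"
}
```

Usage: `check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ib0`. If NetworkManager manages the interface, `nmcli connection reload` followed by `nmcli connection up ib0` should apply the file without a reboot (assuming the connection takes its name from `NAME`), though a reboot remains the real test.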

Reboot your NAS and retest with ping to make sure it is still accessible. If it is, great: turn on SMB and give it a try.
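After the reboot, it can also help to confirm on the NAS itself that ib0 came back with the configured address before testing from the other PC. A minimal sketch, assuming iproute2's one-line output format (`ip -o -4 addr show dev ib0`, where the fourth field is address/prefix); `verify_ib_address` is a hypothetical name.

```shell
# Hypothetical helper: check that an interface carries the expected IPv4 address/prefix.
# `ip -o` prints one line per address, e.g.
#   4: ib0    inet 192.168.50.20/24 brd 192.168.50.255 scope global ib0 ...
verify_ib_address() {
    iface="$1"
    want="$2"    # e.g. 192.168.50.20/24
    addrs=$(ip -o -4 addr show dev "$iface" | awk '$3 == "inet" { print $4 }')
    if printf '%s\n' "$addrs" | grep -qxF "$want"; then
        echo "$iface has $want"
    else
        echo "$iface does not have $want (found: ${addrs:-none})" >&2
        return 1
    fi
}
```

Usage: `verify_ib_address ib0 192.168.50.20/24`.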

Most of this information was pulled from a number of sources, and I didn't save any of the links except the one below. Once I found it, I was able to figure out what was really going on with the drivers.

https://docs.oracle.com/cd/E19436-01/820-3522-10/ch4-linux.html

Once I have finished running burn-in testing on my NAS hardware, I will reload it with a fresh copy and retest. It has a newer card and an SSD, so I will also have a better idea of speeds and can tweak the settings.
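One setting commonly tuned for IPoIB throughput is the transport mode. The default datagram mode caps the interface MTU just below the InfiniBand MTU (2044 bytes with the common 2048-byte IB MTU), while connected mode allows an MTU of up to 65520. The kernel exposes the current mode in `/sys/class/net/ib0/mode`. A minimal sketch for reading it alongside the MTU, assuming that sysfs layout; the `ipoib_mode_report` name and its optional root argument (for testing) are hypothetical, and whether connected mode helps on a given card and workload is something to measure rather than assume.

```shell
# Hypothetical helper: report the IPoIB transport mode and MTU of an interface.
ipoib_mode_report() {
    iface="${1:-ib0}"
    root="${2:-}"
    net_dir="$root/sys/class/net/$iface"
    if [ ! -r "$net_dir/mode" ]; then
        echo "$iface: no IPoIB mode attribute found" >&2
        return 1
    fi
    mode=$(cat "$net_dir/mode")
    mtu=$(cat "$net_dir/mtu")
    echo "$iface: mode=$mode mtu=$mtu"
}
```

To make connected mode persistent, the RHEL/CentOS 7 networking documentation adds `CONNECTED_MODE=yes` and `MTU=65520` to the interface's ifcfg file.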

I'm still new to Linux and InfiniBand, but if anyone has questions, I will do my best to answer them or point you in the right direction.

Hope this helps