First of all, I would like to announce that I have written a (somewhat extensive) post about using VMs with Cockpit on top of Rockstor.

Feel free to copy & modify this guide in case you would like to add a section about VMs to the Rockstor documentation.

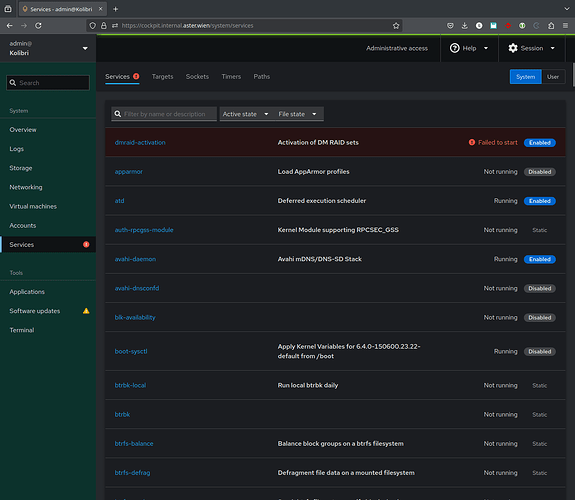

I agree that it is inconsequential, and I only noticed it because Cockpit warned me about a failing service with a red exclamation mark:

As it is the only failing service, I will probably simply disable it to get rid of the warning.

Thank you for pointing that out. I was not aware of this feature, but it sounds great for my purpose.

However, since I have to write the mount unit myself regardless of external scripts, I will probably switch to a different mount location (likely a subfolder of /mnt) so that my script does not get entangled with the Rockstor scripts.
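
For anyone following along, here is a rough Python sketch of what generating such a mount unit could look like. It is only an illustration, not what I actually run: the device path, the mount point `/mnt/vm-images`, the filesystem type, and both helper function names are hypothetical placeholders. The one fixed rule it relies on is that systemd expects the file name of a mount unit to match the escaped mount path (what `systemd-escape --path` would produce); the helper approximates that escaping for an already normalized absolute path.

```python
import string

# Characters systemd leaves unescaped in unit names.
_ALLOWED = set(string.ascii_letters + string.digits + ":_.")


def mount_unit_name(mount_point: str) -> str:
    """Approximate `systemd-escape --path --suffix=mount` for an absolute path."""
    parts = [p for p in mount_point.split("/") if p]
    if not parts:
        return "-.mount"  # the root file system is special-cased by systemd

    def escape(index: int, part: str) -> str:
        out = []
        for pos, ch in enumerate(part):
            leading_dot = index == 0 and pos == 0 and ch == "."
            if ch in _ALLOWED and not leading_dot:
                out.append(ch)
            else:
                # Everything else (including "-") becomes a \xXX escape per byte.
                out.extend(f"\\x{b:02x}" for b in ch.encode("utf-8"))
        return "".join(out)

    return "-".join(escape(i, p) for i, p in enumerate(parts)) + ".mount"


def render_mount_unit(what: str, where: str, fstype: str) -> str:
    """Return the text of a minimal mount unit (all values supplied by the caller)."""
    return (
        "[Unit]\n"
        f"Description=Mount {where}\n"
        "\n"
        "[Mount]\n"
        f"What={what}\n"
        f"Where={where}\n"
        f"Type={fstype}\n"
        "\n"
        "[Install]\n"
        "WantedBy=multi-user.target\n"
    )


if __name__ == "__main__":
    where = "/mnt/vm-images"               # hypothetical mount point under /mnt
    what = "/dev/disk/by-label/vm-images"  # hypothetical device
    print("# /etc/systemd/system/" + mount_unit_name(where))
    print(render_mount_unit(what, where, "btrfs"))
```

The resulting file would go into `/etc/systemd/system/` under the printed name and could then be enabled with `systemctl enable --now` followed by that unit name. Note that a dash in the mount path ends up as `\x2d` in the unit name, which is easy to get wrong when writing the file by hand.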