Brief description of the problem

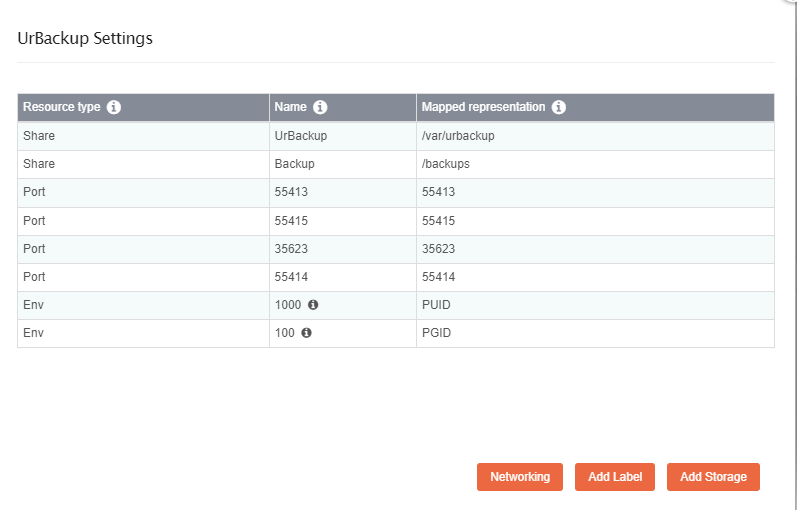

I am in the process of making a RockOn package for UrBackup. Everything seems fine on the Docker side; my problem seems to be on the data side of things.

Detailed step by step instructions to reproduce the problem

Here is the UrBackup.json

```json
{
  "UrBackup": {
    "containers": {
      "UrBackup": {
        "image": "uroni/urbackup-server",
        "launch_order": 1,
        "opts": [
          ["--cap-add", "SYS_ADMIN"],
          ["--net", "host"]
        ],
        "volumes": {
          "/var/urbackup": {
            "description": "Database Location",
            "label": "Config/Database Storage"
          },
          "/backups": {
            "description": "Backup Location",
            "label": "Backup Storage"
          }
        },
        "environment": {
          "PUID": {
            "description": "Enter a valid UID to run",
            "label": "UID",
            "index": 1,
            "default": 1000
          },
          "PGID": {
            "description": "Enter a valid GID to use along with the same UID. ",
            "label": "GID",
            "index": 2,
            "default": 100
          }
        },
        "ports": {
          "55414": {
            "description": "WebuiPort",
            "host_default": 55414,
            "label": "WebUI port",
            "protocol": "tcp",
            "ui": true
          },
          "55413": {
            "description": "FastCGI Web",
            "host_default": 55413,
            "label": "HTTPS port",
            "protocol": "tcp",
            "ui": false
          },
          "55415": {
            "description": "Internet Client",
            "host_default": 55415,
            "label": "Internet client",
            "protocol": "tcp",
            "ui": false
          },
          "35623": {
            "description": "UDP Discovery Broadcast",
            "host_default": 35623,
            "label": "UDP Discovery",
            "protocol": "udp",
            "ui": false
          }
        }
      }
    },
    "description": "UrBackup. <p>Based on UrBackup image: <a href='https://hub.docker.com/r/uroni/urbackup-server' target='_blank'>https://hub.docker.com/r/uroni/urbackup-server</a>.",
    "volume_add_support": true,
    "ui": {
      "slug": ""
    },
    "website": "https://www.urbackup.org/#UrBackup",
    "version": "Latest"
  }
}
```
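To double-check which container paths Rockstor will ask to map to shares, a definition like this one can be sanity-checked with a short script. This is a minimal sketch in Python, not part of Rockstor: the `summarize` helper and the `PORT_KEYS` set are hypothetical, and `PORT_KEYS` only mirrors the keys used in the ports section of this post, not any documented Rockstor schema. The script only reads the JSON; it does not talk to Docker or Rockstor.

```python
import json

# Trimmed copy of the UrBackup RockOn definition above
# (only the fields this check looks at are kept).
ROCKON_JSON = """
{
  "UrBackup": {
    "containers": {
      "UrBackup": {
        "image": "uroni/urbackup-server",
        "volumes": {
          "/var/urbackup": {"description": "Database Location", "label": "Config/Database Storage"},
          "/backups": {"description": "Backup Location", "label": "Backup Storage"}
        },
        "ports": {
          "55414": {"description": "WebuiPort", "host_default": 55414, "label": "WebUI port", "protocol": "tcp", "ui": true},
          "55413": {"description": "FastCGI Web", "host_default": 55413, "label": "HTTPS port", "protocol": "tcp", "ui": false},
          "55415": {"description": "Internet Client", "host_default": 55415, "label": "Internet client", "protocol": "tcp", "ui": false},
          "35623": {"description": "UDP Discovery Broadcast", "host_default": 35623, "label": "UDP Discovery", "protocol": "udp", "ui": false}
        }
      }
    }
  }
}
"""

# Hypothetical: the keys every port entry in this post happens to use.
PORT_KEYS = {"description", "host_default", "label", "protocol", "ui"}


def summarize(rockon: dict) -> list[str]:
    """Return one line per volume and port; raise if a port entry lacks a key."""
    lines = []
    for name, app in rockon.items():
        for cname, container in app["containers"].items():
            # Each volume key is a path inside the container that gets mapped to a share.
            for path, vol in container.get("volumes", {}).items():
                lines.append(f"{name}/{cname} volume {path}: {vol['label']}")
            for port, spec in container.get("ports", {}).items():
                missing = PORT_KEYS - spec.keys()
                if missing:
                    raise ValueError(f"port {port} is missing {sorted(missing)}")
                lines.append(
                    f"{name}/{cname} port {port}/{spec['protocol']} -> host {spec['host_default']}"
                )
    return lines


if __name__ == "__main__":
    for line in summarize(json.loads(ROCKON_JSON)):
        print(line)
```

With the trimmed definition embedded, it prints two volume lines (`/var/urbackup`, then `/backups`) followed by one line per port.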

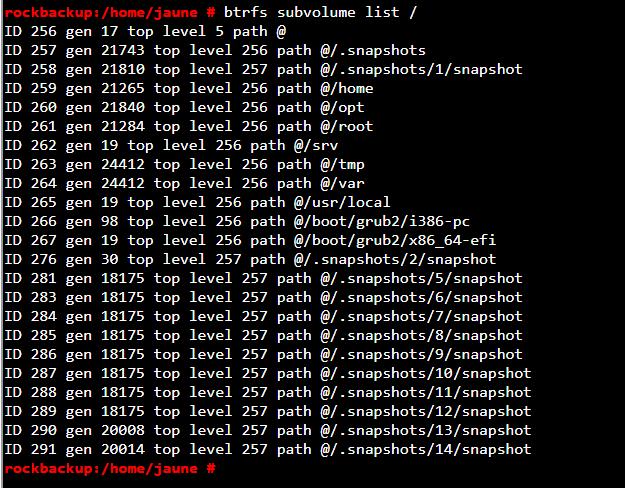

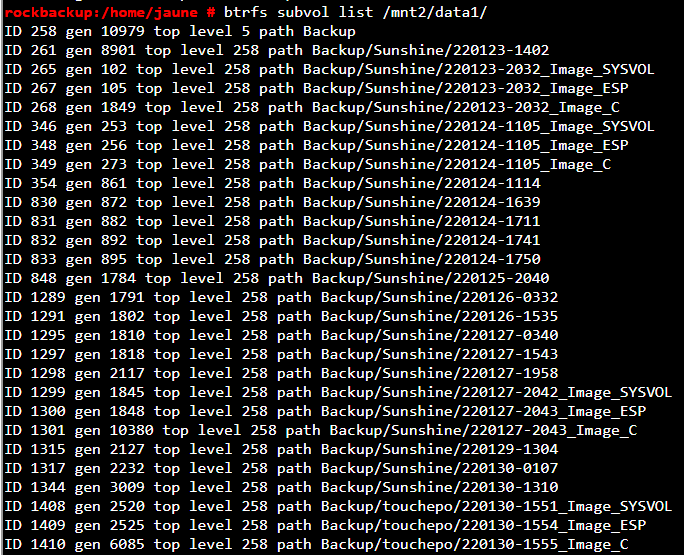

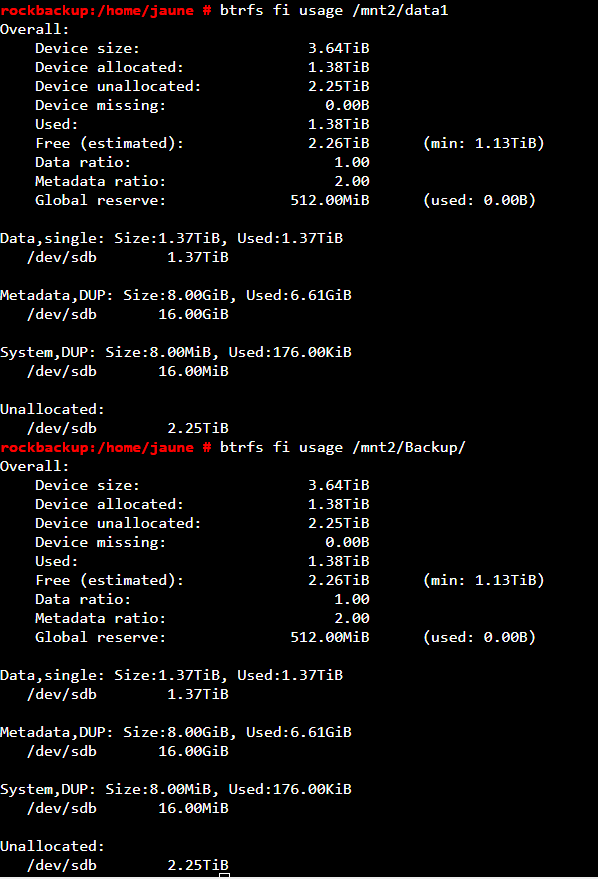

Here is a part of the documentation that may help solve the problem:

UrBackup - Server administration manual

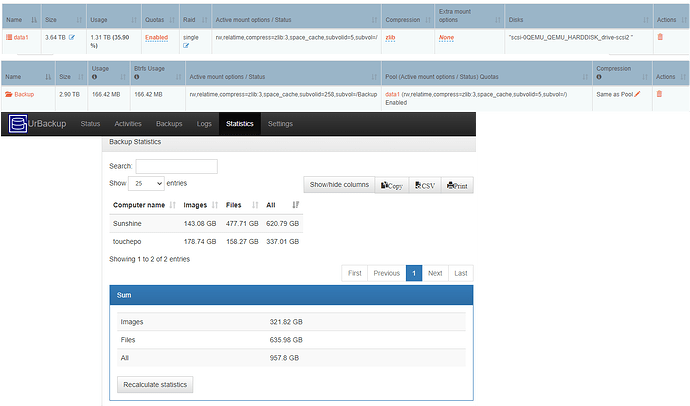

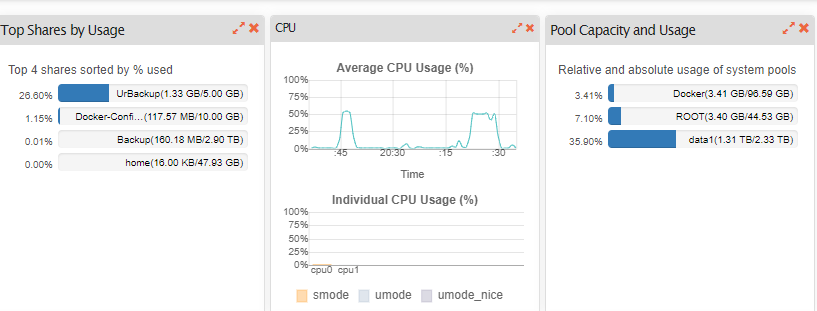

Web-UI screenshot

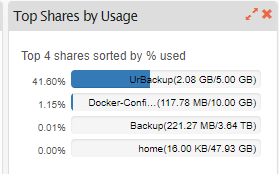

While I don't mind the 1.1 TB reported in Rockstor versus the 1 TB reported in UrBackup (many factors could explain that), the real problem is that when I try to send/receive (replication), only the 160 MB inside the BACKUP folder is sent across. That is not what we want for a backup.

I hope my question is clear, as English is not my first language.

If you need more information, feel free to ask!