Brief description of the problem

My / partition is full and I’m unable to access the web GUI.

Detailed step by step instructions to reproduce the problem

When I attempt to connect to the web GUI, I get an error.

I’m able to log in via SSH, and my / partition appears to be full: `df -h` shows 100% usage with nothing available.
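Before deleting anything, it may help to see how much of that usage is actually visible from / itself. The Python below is a minimal sketch that approximates `du -x` for the directories directly under a given path: it sums apparent file sizes and skips anything on a different device. On Btrfs each subvolume reports its own device number, so nested subvolumes and snapshots that are not mounted under / are left out. A large gap between these totals and what `df -h` reports would suggest the space is held elsewhere, for example by snapshots. The function names are illustrative only, and hard-linked files are counted once per link.

```python
import os
import sys


def _on_device(path: str, device: int) -> bool:
    """True if `path` (without following symlinks) lives on filesystem `device`."""
    try:
        return os.lstat(path).st_dev == device
    except OSError:
        return False  # vanished or unreadable entry


def tree_size(top: str, device: int) -> int:
    """Sum apparent file sizes under `top`, skipping other devices (like du -x)."""
    total = 0
    for dirpath, dirnames, filenames in os.walk(top, onerror=lambda err: None):
        # Prune subdirectories on other devices so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if _on_device(os.path.join(dirpath, d), device)]
        for name in filenames:
            try:
                st = os.lstat(os.path.join(dirpath, name))
            except OSError:
                continue  # file disappeared or is unreadable
            if st.st_dev == device:
                total += st.st_size
    return total


def largest_children(root: str = "/", top_n: int = 10) -> list[tuple[int, str]]:
    """Return the `top_n` largest directories directly under `root`, biggest first."""
    device = os.lstat(root).st_dev
    sizes = []
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False) and _on_device(entry.path, device):
                sizes.append((tree_size(entry.path, device), entry.path))
    sizes.sort(reverse=True)
    return sizes[:top_n]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Only walks a filesystem when a path is given, e.g. `sudo python3 largest_dirs.py /`
    for size, path in largest_children(sys.argv[1]):
        print(f"{size / 2**20:10.1f} MiB  {path}")
```

The script name `largest_dirs.py` is hypothetical; running it as root avoids permission errors hiding part of the tree.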

Web-UI screenshot

“Unable to connect” error message.

Error Traceback provided on the Web-UI

None; the web UI does not load, so there is no traceback.

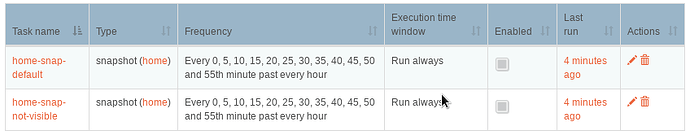

I’m looking for recommendations on how to free up space. I suspect most of the disk usage comes from BTRFS snapshots. Here’s the output of `btrfs subvolume list /`:

    ID 257 gen 3569726 top level 5 path home
    ID 258 gen 8011343 top level 5 path root
    ID 260 gen 370754 top level 258 path var/lib/machines
    ID 294 gen 260378 top level 5 path .snapshots/home/home_201701010400
    ID 295 gen 262585 top level 5 path .snapshots/root/root_201701020400
    ID 296 gen 269288 top level 5 path .snapshots/home/home_201701050442
    ID 297 gen 271491 top level 5 path .snapshots/root/root_201701060442
    ID 298 gen 347507 top level 5 path .snapshots/home/home_201702090342
    ID 299 gen 349949 top level 5 path .snapshots/root/root_201702100342
    ID 300 gen 364539 top level 5 path .snapshots/home/home_201702160342
    ID 301 gen 367350 top level 5 path .snapshots/root/root_201702170342
    ID 305 gen 396684 top level 5 path .snapshots/root/root-monthly_201703020300
    ID 318 gen 737736 top level 5 path .snapshots/root/root-monthly_201708020300
    ID 333 gen 852589 top level 5 path .snapshots/root/root-weekly_201709290342
    ID 335 gen 858759 top level 5 path .snapshots/root/root-monthly_201710020300
    ID 337 gen 894993 top level 5 path .snapshots/root/root-weekly_201710200342
    ID 339 gen 909135 top level 5 path .snapshots/root/root-weekly_201710270342
    ID 341 gen 919340 top level 5 path .snapshots/home/home-monthly_201711010300
    ID 342 gen 921417 top level 5 path .snapshots/root/root-monthly_201711020300
    ID 344 gen 923574 top level 5 path .snapshots/root/root-weekly_201711030342
    ID 347 gen 2341422 top level 5 path .snapshots/home/snapshot-home
    ID 355 gen 2450843 top level 5 path .snapshots/home/home-monthly_201912010300
    ID 360 gen 2506157 top level 5 path .snapshots/home/home-monthly_202001010300
    ID 366 gen 2563809 top level 5 path .snapshots/home/home-monthly_202002010300
    ID 370 gen 3381339 top level 5 path .snapshots/home/home-monthly_202003010300
    ID 371 gen 3388134 top level 5 path .snapshots/home/home-weekly_202003050342
    ID 372 gen 3400647 top level 5 path .snapshots/home/home-weekly_202003120342
    ID 373 gen 3413067 top level 5 path .snapshots/home/home-weekly_202003190342
    ID 374 gen 3421442 top level 5 path .snapshots/home/home-weekly_202003260342
    ID 375 gen 3569725 top level 5 path .snapshots/home/home-monthly_202004010300
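To pick the 2017 snapshots out of a listing like this without doing it by eye, the Python below is a small sketch that matches each `ID <n> gen <n> top level <n> path <path>` line and keeps the `.snapshots/` paths whose trailing `_YYYYMMDDhhmm` stamp starts with a given year. It assumes the naming pattern seen above, so `snapshot-home`, which has no stamp, is never selected. Only a few lines of the listing are embedded as sample input, and the function name is illustrative.

```python
import re

# Matches lines such as:
#   ID 294 gen 260378 top level 5 path .snapshots/home/home_201701010400
LINE_RE = re.compile(r"^ID (\d+) gen \d+ top level \d+ path (.+)$")
# Assumed naming scheme: snapshot names end in _YYYYMMDDhhmm.
STAMP_RE = re.compile(r"_(\d{4})(\d{8})$")

SAMPLE = """\
ID 258 gen 8011343 top level 5 path root
ID 295 gen 262585 top level 5 path .snapshots/root/root_201701020400
ID 318 gen 737736 top level 5 path .snapshots/root/root-monthly_201708020300
ID 347 gen 2341422 top level 5 path .snapshots/home/snapshot-home
ID 355 gen 2450843 top level 5 path .snapshots/home/home-monthly_201912010300
"""


def snapshots_from_year(listing: str, year: str) -> list[tuple[int, str]]:
    """Return (subvolume ID, path) for snapshots whose timestamp starts with `year`."""
    found = []
    for line in listing.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # blank or unrecognised line
        subvol_id, path = int(m.group(1)), m.group(2)
        stamp = STAMP_RE.search(path)
        if path.startswith(".snapshots/") and stamp and stamp.group(1) == year:
            found.append((subvol_id, path))
    return found


if __name__ == "__main__":
    for subvol_id, path in snapshots_from_year(SAMPLE, "2017"):
        print(subvol_id, path)
```

The same function could be fed the live output of `btrfs subvolume list /` (for example via `subprocess.run(..., capture_output=True, text=True).stdout`) instead of the embedded sample.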

And here’s the output of `btrfs qgroup show /`:

    qgroupid         rfer         excl
    --------         ----         ----
    0/5             0.00B        0.00B
    0/257        16.00KiB     16.00KiB
    0/258         3.69GiB      2.67GiB
    0/260        16.00KiB     16.00KiB
    0/294        16.00KiB     16.00KiB
    0/295         2.30GiB    253.43MiB
    0/296        16.00KiB     16.00KiB
    0/297         2.17GiB    125.86MiB
    0/298        16.00KiB     16.00KiB
    0/299         2.18GiB    206.22MiB
    0/300        16.00KiB     16.00KiB
    0/301         2.39GiB    135.72MiB
    0/305         2.44GiB    307.67MiB
    0/318         3.18GiB    765.55MiB
    0/333         3.19GiB    172.34MiB
    0/335         3.15GiB     96.93MiB
    0/337         3.21GiB    122.94MiB
    0/339         3.20GiB    121.91MiB
    0/341        16.00KiB     16.00KiB
    0/342         3.20GiB      2.89MiB
    0/344         3.21GiB     19.97MiB
    0/347        16.00KiB     16.00KiB
    0/355        16.00KiB     16.00KiB
    0/360        16.00KiB     16.00KiB
    0/366        16.00KiB     16.00KiB
    0/370        16.00KiB     16.00KiB
    0/371        16.00KiB     16.00KiB
    0/372        16.00KiB     16.00KiB
    0/373        16.00KiB     16.00KiB
    0/374        16.00KiB     16.00KiB
    0/375        16.00KiB     16.00KiB
    2015/1      240.00KiB    240.00KiB
    2015/2        7.90GiB      6.88GiB
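One way to estimate how much space removing the 2017 snapshots would give back is to add up the `excl` column for their qgroups. The Python below is a sketch that parses `btrfs qgroup show` output in the three-column form shown above and sums the exclusive sizes for a chosen set of subvolume IDs. The embedded sample holds the level-0 rows for the 2017 snapshots (IDs taken from the subvolume list above) plus two other rows copied from the table, so that the parser has something to skip. Treat the result as a lower bound: `excl` only counts data referenced by that single subvolume, so extents shared by two or more of the deleted snapshots, and by nothing else, appear in no individual `excl` figure even though deleting all of those snapshots would free them.

```python
import re

# Binary unit multipliers as printed by `btrfs qgroup show`.
UNITS = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40}
SIZE_RE = re.compile(r"^([0-9]+(?:\.[0-9]+)?)(B|KiB|MiB|GiB|TiB)$")

QGROUPS = """\
qgroupid rfer excl
-------- ---- ----
0/258 3.69GiB 2.67GiB
0/294 16.00KiB 16.00KiB
0/295 2.30GiB 253.43MiB
0/296 16.00KiB 16.00KiB
0/297 2.17GiB 125.86MiB
0/298 16.00KiB 16.00KiB
0/299 2.18GiB 206.22MiB
0/300 16.00KiB 16.00KiB
0/301 2.39GiB 135.72MiB
0/305 2.44GiB 307.67MiB
0/318 3.18GiB 765.55MiB
0/333 3.19GiB 172.34MiB
0/335 3.15GiB 96.93MiB
0/337 3.21GiB 122.94MiB
0/339 3.20GiB 121.91MiB
0/341 16.00KiB 16.00KiB
0/342 3.20GiB 2.89MiB
0/344 3.21GiB 19.97MiB
2015/2 7.90GiB 6.88GiB
"""
IDS_2017 = [294, 295, 296, 297, 298, 299, 300, 301, 305, 318, 333, 335, 337, 339, 341, 342, 344]


def parse_size(text: str) -> float:
    """Convert a size such as '253.43MiB' to bytes (approximate: the tool rounds)."""
    m = SIZE_RE.match(text)
    if not m:
        raise ValueError(f"unrecognised size: {text!r}")
    return float(m.group(1)) * UNITS[m.group(2)]


def exclusive_bytes(qgroup_output: str) -> dict[int, float]:
    """Map subvolume ID -> exclusive bytes for level-0 qgroups (rows like 0/295)."""
    result = {}
    for line in qgroup_output.splitlines():
        fields = line.split()
        if len(fields) != 3 or not fields[0].startswith("0/"):
            continue  # header, separator, blank line, or higher-level qgroup
        result[int(fields[0][2:])] = parse_size(fields[2])
    return result


def reclaim_lower_bound(qgroup_output: str, ids: list[int]) -> float:
    """Sum `excl` over `ids`; data shared only among the deleted ones is not counted."""
    excl = exclusive_bytes(qgroup_output)
    return sum(excl[i] for i in ids)


if __name__ == "__main__":
    total = reclaim_lower_bound(QGROUPS, IDS_2017)
    print(f"at least {total / 2**20:.1f} MiB")
```

For the rows above this prints `at least 2331.5 MiB`, i.e. roughly 2.3 GiB, before counting any data that only the 2017 snapshots share with each other.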

I figure I can safely get rid of any of the snapshots with “2017” in the name, but I’m not great with command-line btrfs. I know the command should look something like `btrfs subvolume delete .snapshots/root/root_201701020400`, but I’m not sure where those subvolumes are actually located. IIRC I have to unmount the current snapshot and switch to a higher level to access all of them, but I don’t remember how to do that.

I’m also not sure I’m barking up the right tree. Should I be focusing on deleting snapshots at all, or is there somewhere else I should look to free up space?
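The paths printed by `btrfs subvolume list` are relative to the top-level subvolume (ID 5), which is not reachable through / when / is the `root` subvolume. One common approach that does not require unmounting / is to mount the top-level subvolume at a second mount point with `-o subvolid=5` and delete the snapshots through that mount. The Python sketch below only builds and prints that command sequence (a dry run) so it can be reviewed before anything is executed. The device name `/dev/sdX3` and the mount point `/mnt/btrfs-top` are hypothetical placeholders, not values from this system (`findmnt /` shows the real device). `--commit-after` commits the transaction once the deletion is queued, and `btrfs subvolume sync` waits for the background cleanup, since space is released asynchronously after a subvolume is deleted.

```python
import posixpath
import shlex

# Hypothetical placeholders: substitute the pool's real device and a free mount point.
DEVICE = "/dev/sdX3"
TOP_MOUNT = "/mnt/btrfs-top"


def deletion_commands(device: str, top_mount: str, snapshot_paths: list[str]) -> list[list[str]]:
    """Build (but do not run) commands that delete snapshots via the top-level subvolume."""
    # Paths from `btrfs subvolume list` are relative to subvolid=5, so join them onto its mount.
    targets = [posixpath.join(top_mount, p) for p in snapshot_paths]
    return [
        ["mkdir", "-p", top_mount],
        ["mount", "-o", "subvolid=5", device, top_mount],
        ["btrfs", "subvolume", "delete", "--commit-after", *targets],
        ["btrfs", "subvolume", "sync", top_mount],  # wait until the space is actually freed
        ["umount", top_mount],
    ]


if __name__ == "__main__":
    paths = [".snapshots/root/root_201701020400", ".snapshots/home/home_201701010400"]
    for cmd in deletion_commands(DEVICE, TOP_MOUNT, paths):
        print(shlex.join(cmd))  # dry run: print only, review before running as root
```

Printing instead of executing is a deliberate choice here: deleting subvolumes cannot be undone, so it seems safer to check the exact paths first and then run the commands by hand.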

Any help is appreciated. Thanks.