[ 60.932954] BTRFS error (device sdb): level verify failed on logical 2577060052992 mirror 2 wanted 1 found 0

[ 60.938717] nvme0n1: I/O Cmd(0x1) @ LBA 69312480, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.939194] I/O error, dev nvme0n1, sector 69312480 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.941326] BTRFS error (device sdb): parent transid verify failed on logical 2577060069376 mirror 2 wanted 741470 found 666211

[ 60.945706] nvme0n1: I/O Cmd(0x1) @ LBA 69312512, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.946284] I/O error, dev nvme0n1, sector 69312512 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.947420] BTRFS error (device sdb): level verify failed on logical 2577060085760 mirror 2 wanted 0 found 1

[ 60.948463] BTRFS error (device sdb): level verify failed on logical 2577060085760 mirror 2 wanted 0 found 1

[ 60.952188] nvme0n1: I/O Cmd(0x1) @ LBA 69312544, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.953023] I/O error, dev nvme0n1, sector 69312544 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.955662] BTRFS error (device sdb): level verify failed on logical 2575970598912 mirror 2 wanted 1 found 0

[ 60.960589] nvme0n1: I/O Cmd(0x1) @ LBA 14755840, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.961039] I/O error, dev nvme0n1, sector 14755840 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.961725] BTRFS error (device sdb): parent transid verify failed on logical 2575974203392 mirror 2 wanted 741485 found 734077

[ 60.967384] nvme0n1: I/O Cmd(0x1) @ LBA 14762880, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.968220] I/O error, dev nvme0n1, sector 14762880 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.970019] BTRFS error (device sdb): parent transid verify failed on logical 2575975841792 mirror 2 wanted 741485 found 734077

[ 60.974740] nvme0n1: I/O Cmd(0x1) @ LBA 14766080, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.975683] I/O error, dev nvme0n1, sector 14766080 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.977375] BTRFS error (device sdb): level verify failed on logical 2575970631680 mirror 2 wanted 2 found 1

[ 60.980948] nvme0n1: I/O Cmd(0x1) @ LBA 14755904, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.981594] I/O error, dev nvme0n1, sector 14755904 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.982882] BTRFS error (device sdb): level verify failed on logical 2575971860480 mirror 2 wanted 2 found 0

[ 60.988136] nvme0n1: I/O Cmd(0x1) @ LBA 14758304, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.988714] I/O error, dev nvme0n1, sector 14758304 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.989845] BTRFS error (device sdb): level verify failed on logical 2575974924288 mirror 2 wanted 1 found 0

[ 60.994828] nvme0n1: I/O Cmd(0x1) @ LBA 14764288, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 60.995791] I/O error, dev nvme0n1, sector 14764288 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 60.997135] BTRFS error (device sdb): parent transid verify failed on logical 2575970615296 mirror 2 wanted 741485 found 734077

[ 61.002389] nvme0n1: I/O Cmd(0x1) @ LBA 14755872, 8 blocks, I/O Error (sct 0x0 / sc 0x20)

[ 61.003098] I/O error, dev nvme0n1, sector 14755872 op 0x1:(WRITE) flags 0x800 phys_seg 1 prio class 2

[ 61.006395] BTRFS error (device sdb): level verify failed on logical 2575975301120 mirror 2 wanted 1 found 0

[ 61.011771] BTRFS error (device sdb): parent transid verify failed on logical 2575975055360 mirror 2 wanted 741485 found 734077

[ 61.019825] BTRFS info (device sdb): bdev /dev/nvme0n1 errs: wr 17522227, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.022011] BTRFS error (device sdb): level verify failed on logical 2575970648064 mirror 2 wanted 1 found 0

[ 61.026830] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522228, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.027943] BTRFS error (device sdb): parent transid verify failed on logical 2575970664448 mirror 2 wanted 741485 found 734477

[ 61.033253] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522229, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.056055] BTRFS error (device sdb): parent transid verify failed on logical 2576700145664 mirror 2 wanted 741447 found 740020

[ 61.062611] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522230, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.097330] BTRFS error (device sdb): parent transid verify failed on logical 2795355160576 mirror 2 wanted 741420 found 740013

[ 61.106694] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522231, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.110306] BTRFS error (device sdb): level verify failed on logical 2795439538176 mirror 2 wanted 0 found 1

[ 61.116278] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522232, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.126068] BTRFS error (device sdb): level verify failed on logical 2575974580224 mirror 2 wanted 1 found 0

[ 61.130550] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522233, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.135236] BTRFS error (device sdb): parent transid verify failed on logical 2575975972864 mirror 2 wanted 741485 found 734077

[ 61.142195] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522234, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.143761] BTRFS error (device sdb): level verify failed on logical 2575974645760 mirror 2 wanted 1 found 0

[ 61.148594] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522235, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.152913] BTRFS error (device sdb): level verify failed on logical 2575971319808 mirror 2 wanted 1 found 0

[ 61.159202] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522236, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.175979] BTRFS error (device sdb): level verify failed on logical 2575974268928 mirror 2 wanted 0 found 1

[ 61.181471] BTRFS error (device sdb): bdev /dev/nvme0n1 errs: wr 17522237, rd 0, flush 94019, corrupt 599812, gen 0

[ 61.183044] BTRFS error (device sdb): parent transid verify failed on logical 2575971336192 mirror 2 wanted 741485 found 734077

[ 61.188956] BTRFS info (device sdb): enabling ssd optimizations

[ 61.189768] BTRFS info (device sdb): auto enabling async discard

[ 61.213072] BTRFS info (device sdb): balance: force reducing metadata redundancy

[ 61.214432] BTRFS error (device sdb): level verify failed on logical 2575970680832 mirror 2 wanted 0 found 2

[ 61.344692] BTRFS error (device sdb): level verify failed on logical 2793979691008 mirror 1 wanted 1 found 0

[ 61.369126] BTRFS error (device sdb): level verify failed on logical 2794311647232 mirror 1 wanted 1 found 0

[ 61.373653] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.374584] BTRFS warning (device sdb): failed to load free space cache for block group 2627543760896, rebuilding it now

[ 61.380567] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.381459] BTRFS warning (device sdb): failed to load free space cache for block group 2639354920960, rebuilding it now

[ 61.384348] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.385011] BTRFS warning (device sdb): failed to load free space cache for block group 2644723630080, rebuilding it now

[ 61.400029] BTRFS error (device sdb): level verify failed on logical 2575971876864 mirror 2 wanted 1 found 2

[ 61.405021] BTRFS error (device sdb): level verify failed on logical 2794279796736 mirror 1 wanted 0 found 1

[ 61.409508] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.409610] BTRFS error (device sdb): level verify failed on logical 2575971893248 mirror 2 wanted 0 found 1

[ 61.410719] BTRFS warning (device sdb): failed to load free space cache for block group 2679083368448, rebuilding it now

[ 61.422763] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.423413] BTRFS warning (device sdb): failed to load free space cache for block group 2687673303040, rebuilding it now

[ 61.428888] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.429531] BTRFS warning (device sdb): failed to load free space cache for block group 2693042012160, rebuilding it now

[ 61.431160] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.431800] BTRFS warning (device sdb): failed to load free space cache for block group 2694115753984, rebuilding it now

[ 61.437254] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.437536] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.437881] BTRFS warning (device sdb): failed to load free space cache for block group 2702705688576, rebuilding it now

[ 61.438484] BTRFS warning (device sdb): failed to load free space cache for block group 2703779430400, rebuilding it now

[ 61.440073] BTRFS error (device sdb): csum mismatch on free space cache

[ 61.441523] BTRFS warning (device sdb): failed to load free space cache for block group 2705926914048, rebuilding it now

[ 61.455224] BTRFS warning (device sdb): failed to load free space cache for block group 2713443106816, rebuilding it now

[ 61.456022] BTRFS warning (device sdb): failed to load free space cache for block group 2715590590464, rebuilding it now

[ 61.456874] BTRFS warning (device sdb): failed to load free space cache for block group 2716664332288, rebuilding it now

[ 61.459568] BTRFS warning (device sdb): failed to load free space cache for block group 2718811815936, rebuilding it now

[ 61.461537] BTRFS warning (device sdb): failed to load free space cache for block group 2720959299584, rebuilding it now

[ 61.503789] BTRFS warning (device sdb): failed to load free space cache for block group 2729549234176, rebuilding it now

[ 61.506121] BTRFS warning (device sdb): failed to load free space cache for block group 2726328008704, rebuilding it now

[ 61.506258] BTRFS warning (device sdb): failed to load free space cache for block group 2731696717824, rebuilding it now

[ 61.506392] BTRFS warning (device sdb): failed to load free space cache for block group 2730622976000, rebuilding it now

[ 61.506509] BTRFS warning (device sdb): failed to load free space cache for block group 2732770459648, rebuilding it now

[ 61.506826] BTRFS warning (device sdb): failed to load free space cache for block group 2733844201472, rebuilding it now

[ 61.512590] BTRFS warning (device sdb): failed to load free space cache for block group 2738139168768, rebuilding it now

[ 61.512712] BTRFS warning (device sdb): failed to load free space cache for block group 2740286652416, rebuilding it now

[ 61.521158] BTRFS warning (device sdb): failed to load free space cache for block group 2746729103360, rebuilding it now

[ 61.521381] BTRFS warning (device sdb): failed to load free space cache for block group 2745655361536, rebuilding it now

[ 61.539633] BTRFS error (device sdb): level verify failed on logical 2794279796736 mirror 1 wanted 0 found 1

[ 61.540347] BTRFS warning (device sdb): failed to load free space cache for block group 2764982714368, rebuilding it now

[ 61.548519] BTRFS warning (device sdb): failed to load free space cache for block group 2744581619712, rebuilding it now

[ 61.557094] BTRFS warning (device sdb): lost page write due to IO error on /dev/nvme0n1 (-5)

[ 61.557895] BTRFS warning (device sdb): lost page write due to IO error on /dev/nvme0n1 (-5)

[ 61.558671] BTRFS warning (device sdb): lost page write due to IO error on /dev/nvme0n1 (-5)

[ 61.559460] BTRFS error (device sdb): error writing primary super block to device 1

[ 61.560273] BTRFS info (device sdb): balance: resume -f -dconvert=single,soft -mconvert=single,soft -sconvert=single,soft

[ 61.573849] BTRFS error (device sdb): level verify failed on logical 2794279862272 mirror 1 wanted 0 found 2

[ 61.573986] BTRFS error (device sdb): level verify failed on logical 2794279878656 mirror 1 wanted 0 found 1

[ 61.592495] BTRFS error (device sdb): level verify failed on logical 2794279862272 mirror 1 wanted 0 found 2

[ 61.639322] BTRFS error (device sdb): level verify failed on logical 2794279878656 mirror 1 wanted 0 found 1

[ 61.757694] BTRFS error (device sdb): level verify failed on logical 2794280058880 mirror 1 wanted 0 found 1

[ 61.769163] BTRFS error (device sdb): level verify failed on logical 2794280058880 mirror 1 wanted 0 found 1

[ 62.332016] BTRFS info (device sdb): relocating block group 2868162592768 flags data|raid1

[ 62.351733] BTRFS error (device sdb): level verify failed on logical 2575974662144 mirror 2 wanted 0 found 2

[ 62.386078] BTRFS warning (device sdb): chunk 2870310076416 missing 1 devices, max tolerance is 0 for writable mount

[ 62.387115] BTRFS: error (device sdb) in write_all_supers:4345: errno=-5 IO failure (errors while submitting device barriers.)

[ 62.389133] BTRFS info (device sdb: state E): forced readonly

[ 62.390138] BTRFS warning (device sdb: state E): Skipping commit of aborted transaction.

[ 62.391138] BTRFS error (device sdb: state EA): Transaction aborted (error -5)

[ 62.392128] BTRFS: error (device sdb: state EA) in cleanup_transaction:2051: errno=-5 IO failure

[ 62.393103] BTRFS info (device sdb: state EA): balance: ended with status: -5

[ 64.342597] BTRFS error (device sdb: state EA): level verify failed on logical 2576721969152 mirror 2 wanted 1 found 0

[ 64.644753] BTRFS error (device sdb: state EA): level verify failed on logical 2795757862912 mirror 2 wanted 0 found 1

[ 64.894653] BTRFS error (device sdb: state EA): level verify failed on logical 2795654381568 mirror 2 wanted 1 found 0

[ 65.076259] BTRFS error (device sdb: state EA): level verify failed on logical 2794301816832 mirror 1 wanted 1 found 0

[ 65.345773] BTRFS error (device sdb: state EA): level verify failed on logical 2794349576192 mirror 1 wanted 2 found 0

[ 65.352113] BTRFS error (device sdb: state EA): level verify failed on logical 2794349641728 mirror 1 wanted 1 found 0

[ 65.357520] BTRFS error (device sdb: state EA): level verify failed on logical 2794312925184 mirror 1 wanted 1 found 0

[ 65.812663] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 65.929061] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 65.967831] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 66.003261] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 66.105235] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 66.247567] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 66.332820] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 66.367223] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.070105] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.231062] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.252895] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.274086] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.293131] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.314939] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.333293] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.352091] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.396376] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed

[ 77.416913] BTRFS error (device sdb: state EMA): Remounting read-write after error is not allowed
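To make a dump like this easier to read, the messages can be tallied by kind. The following is a minimal Python sketch, not a Rockstor or btrfs-progs tool; the function names `summarize` and `print_summary` and the file name `dmesg.txt` are hypothetical. It assumes the messages have exactly the shapes seen in this log (other kernel versions may word them differently), and its NVMe status table is deliberately partial: in the NVMe base specification, status code type 0x0 with status code 0x20 is the generic status "Namespace is Write Protected", and opcode 0x1 is the NVM Write command.

```python
import re
from collections import Counter

# Partial table of NVMe generic command status codes (status code type 0x0),
# taken from the NVMe base specification. Extend as needed.
NVME_GENERIC_SC = {
    0x00: "Successful Completion",
    0x04: "Data Transfer Error",
    0x06: "Internal Error",
    0x20: "Namespace is Write Protected",
}

# Assumption: formats match this log, e.g.
# "nvme0n1: I/O Cmd(0x1) @ LBA 69312480, 8 blocks, I/O Error (sct 0x0 / sc 0x20)"
NVME_RE = re.compile(
    r"(?P<dev>nvme\d+n\d+): I/O Cmd\((?P<op>0x[0-9a-f]+)\) @ LBA (?P<lba>\d+), "
    r"(?P<blocks>\d+) blocks, .*\(sct (?P<sct>0x[0-9a-f]+) / sc (?P<sc>0x[0-9a-f]+)\)"
)
# e.g. "bdev /dev/nvme0n1 errs: wr 17522228, rd 0, flush 94019, corrupt 599812, gen 0"
STATS_RE = re.compile(
    r"bdev (?P<dev>\S+) errs: wr (?P<wr>\d+), rd (?P<rd>\d+), "
    r"flush (?P<flush>\d+), corrupt (?P<corrupt>\d+), gen (?P<gen>\d+)"
)
# Substrings of the BTRFS messages that appear in this log.
BTRFS_KINDS = (
    "level verify failed",
    "parent transid verify failed",
    "csum mismatch on free space cache",
    "failed to load free space cache",
    "lost page write due to IO error",
    "Remounting read-write after error is not allowed",
)


def summarize(lines):
    """Count BTRFS message kinds and NVMe completion statuses, and keep the
    last per-device error-counter line seen."""
    kinds = Counter()
    nvme_status = Counter()
    last_stats = {}
    for line in lines:
        m = NVME_RE.search(line)
        if m:
            sct, sc = int(m["sct"], 16), int(m["sc"], 16)
            desc = NVME_GENERIC_SC.get(sc, "unlisted") if sct == 0 else "non-generic status type"
            nvme_status[(m["dev"], m["op"], f"sct {sct:#x} / sc {sc:#x}: {desc}")] += 1
            continue
        m = STATS_RE.search(line)
        if m:
            # btrfs device error counters are cumulative, so the latest line wins.
            last_stats[m["dev"]] = {k: int(v) for k, v in m.groupdict().items() if k != "dev"}
            continue
        for kind in BTRFS_KINDS:
            if kind in line:
                kinds[kind] += 1
                break
    return kinds, nvme_status, last_stats


def print_summary(lines):
    """Print the tallies produced by summarize()."""
    kinds, nvme_status, last_stats = summarize(lines)
    for kind, n in kinds.most_common():
        print(f"{n:6d}  {kind}")
    for (dev, op, status), n in nvme_status.items():
        print(f"{n:6d}  {dev} opcode {op}: {status}")
    for dev, counters in last_stats.items():
        print(f"last error counters for {dev}: {counters}")
```

For example, after saving the kernel log with `dmesg > dmesg.txt`, calling `print_summary(open("dmesg.txt"))` prints one count per message kind, the NVMe completion statuses grouped by device and opcode, and only the most recent `errs:` counters per device.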

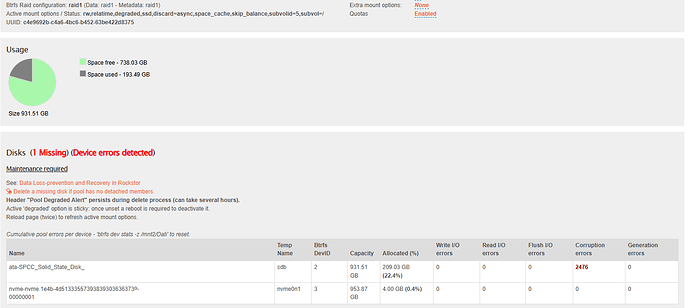

), since the new NVMe drive arrives tomorrow, I’m thinking of shutting down the system, swapping in the new drive, and hoping everything will be fine. But I’d like to ask how I can avoid problems and avoid losing the data on the Rockstor drives, which have been working perfectly for at least a year.
