@Haioken and @stray_tachyon, I’ve only glanced at the network config code, but my understanding is that it attempts to take its cues from the current system state. I.e. if one has a bond configuration and does a complete rebuild (with consequent whole db wipe), then when you first log in it will show the prior bond arrangement (the one still current on the underlying system): that is, the db tries to reflect system state (within its limits). All interactions with the system are via the nmcli program. So I’d initially suggest confirming nmcli’s understanding of the current network state, by way of more information, i.e.:

nmcli

This should show NetworkManager’s report of the current config, plus some pointers to getting more info.
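If it helps when posting back, here is a minimal sketch that gathers a bit more of NetworkManager’s view in one go. The function name `nm_report` is just made up for this post; the three nmcli subcommands are standard ones, though the exact output varies between nmcli versions.

```shell
# Hypothetical helper: collect NetworkManager's view of the system.
nm_report() {
    echo "== devices =="
    nmcli device status        # one line per interface: type, state, active connection
    echo "== connection profiles =="
    nmcli connection show      # all saved profiles, including bond and bond-port profiles
    echo "== per-device detail =="
    nmcli device show          # addresses, routes and DNS for each device
}
```

Running `nm_report > nm-report.txt` as root should give a file that can be pasted into a reply here.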

It might be worth attempting to ‘work around’ your current chicken-and-egg scenario by configuring your network via nmcli and then seeing what Rockstor indicates, possibly after a reboot.
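As a rough sketch of what that could look like for an LACP bond: the names `bond0`, `eth0` and `eth1` below are assumptions (take the real ones from `nmcli device status`), and the `run` / `make_bond` helpers are made up for this post. By default it only prints the commands, since changing the link you are logged in over can cut you off. The accepted property syntax also differs between nmcli versions, so check `man nmcli-examples` on your install before running anything for real.

```shell
# Assumed names: adjust to match your own system.
BOND=bond0
PORTS="eth0 eth1"

# Print each command instead of running it, unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

make_bond() {
    # Bond master in 802.3ad (LACP) mode; IPv4 is left at the default (DHCP).
    run nmcli connection add type bond con-name "$BOND" ifname "$BOND" \
        bond.options "mode=802.3ad,miimon=100"
    # One port profile per physical interface, attached to the bond.
    for port in $PORTS; do
        run nmcli connection add type ethernet slave-type bond \
            con-name "$BOND-port-$port" ifname "$port" master "$BOND"
    done
    run nmcli connection up "$BOND"
}

make_bond
```

Once the printed commands look right, `DRY_RUN=0 make_bond` would actually apply them; doing that from a local console rather than over the network is the safer choice.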

We do have a now outdated technical wiki entry on the network code, but since then it’s had a fair bit of change, such as the addition of bonding (a big change, that one):

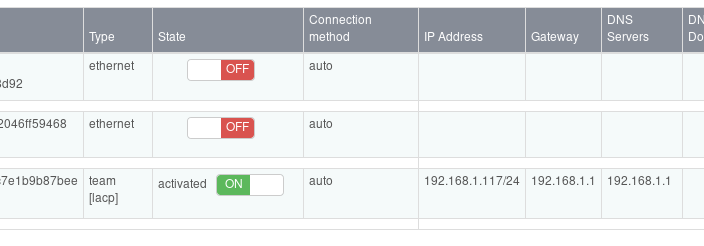

I’ve got a couple of test systems here that are both dual ethernet bonded (via LACP), one of which is also an ASRock C2550D4I.

I have previously changed their config from within Rockstor (to test the then-new bonding code) and, as far as I remember, the only buggy behaviour was a temporary disconnect requiring a browser page refresh to get the new config displayed. That is kind of inevitable when making live changes to the very link used to make them.

Let us know anything else you can about how this occurred (i.e. DHCP/static etc.) so that I or someone else on the forum may be able to reproduce your issue. We can then open a bug issue with the reproducer steps and get this one sorted. Changing the db directly is rather a last resort, but one can always re-install, and ‘needs must’. That said, your initial description seems pretty complete.

My initial guess is that we are failing to cope when the underlying network system is switched out like that. I’ve tested this multiple times but not with a bonding config.

Let us know what nmcli thinks of what you have, and whether it can get you out of this situation if the Rockstor Web-UI still can’t.

Sorry I can’t be of more help, but I just haven’t yet done much with the network code. I’m working through my existing queue, so I’ll not be able to get to this for a bit, I’m afraid, but it’s definitely worth posting what extra details you can so we can get this behaving better.

Thanks for your report and for helping to support Rockstor development.