Hi,

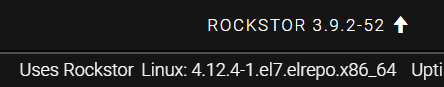

Not sure whether this is related to the update to 3.9.2.53 in the stable channel (i.e. with CentOS as the operating system), but that’s when it happened.

When I triggered the update, after 5 minutes or so (based on the pop-up with the countdown that comes up), the connection to the WebUI was lost and would not come back. At the same time, I was still connected to the server itself via SSH (PuTTY), so it was still “running”.

Querying the status, I found that, for some reason, a deleted device was causing the hdparm service to fail.

[root@rockstorw ~]# systemctl status

● rockstorw

State: degraded

Jobs: 0 queued

Failed: 1 units

Since: Thu 2020-01-09 17:56:15 PST; 1 months 2 days ago

CGroup: /

├─1 /usr/lib/systemd/systemd --system --deserialize 20

├─docker

│ ├─303a0397c2b44183b5a8072a7e206f5b9f09640f8d81ef5110a7110be4d193de

│ │ ├─ 4491 bash /usr/libexec/netdata/plugins.d/tc-qos-helper.sh 1

│ │ ├─ 9339 /usr/libexec/netdata/plugins.d/apps.plugin 1

│ │ ├─17778 /usr/sbin/netdata -D -u root -s /host -p 19999

│ │ └─22468 /usr/libexec/netdata/plugins.d/go.d.plugin 1

│ ├─28c77fcfcc59cf6b67609fd2f222e28b7799d583d70ec9ada5a5f85c9be48811

│ │ ├─ 6004 s6-svscan -t0 /var/run/s6/services

│ │ ├─ 6065 s6-supervise s6-fdholderd

│ │ ├─ 7451 s6-supervise plex

│ │ ├─11646 /usr/lib/plexmediaserver/Plex Media Server

│ │ ├─11667 Plex Plug-in [com.plexapp.system] /usr/lib/plexmediaserve

│ │ ├─11718 /usr/lib/plexmediaserver/Plex DLNA Server

│ │ ├─11721 /usr/lib/plexmediaserver/Plex Tuner Service /usr/lib/plex

│ │ └─17079 Plex EAE Service

│ ├─3154f5ae28cb47d6b5933d2964b0d4d830ba53eb8f4d0fbf9bca4677dac09776

Then, looking for the failed unit:

[root@rockstorw ~]# systemctl --failed

UNIT LOAD ACTIVE SUB DESCRIPTION

● rockstor-hdparm.service loaded failed failed Rockstor hdparm settings

LOAD = Reflects whether the unit definition was properly loaded.

ACTIVE = The high-level unit activation state, i.e. generalization of SUB.

SUB = The low-level unit activation state, values depend on unit type.

1 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use ‘systemctl list-unit-files’.

[root@rockstorw ~]# systemctl restart rockstor-hdparm

That failed again. I was hoping it was something like what was reported a long time ago here:

but alas, the restart didn’t work either. So, looking at this:

[root@rockstorw ~]# systemctl status rockstor-hdparm.service

● rockstor-hdparm.service - Rockstor hdparm settings

Loaded: loaded (/etc/systemd/system/rockstor-hdparm.service; enabled; vendor preset: disabled)

Active: failed (Result: exit-code) since Tue 2020-02-11 14:25:11 PST; 22s ago

Process: 22554 ExecStart=/usr/sbin/hdparm -q -B125 -q -S240 /dev/disk/by-id/ata-HGST_HDN724040ALE640_PK1334PEHZB3XS (code=exited, status=2)

Main PID: 22554 (code=exited, status=2)

journalctl -xe

shows this:

Feb 11 14:25:11 rockstorw systemd[1]: Starting Rockstor hdparm settings...

-- Subject: Unit rockstor-hdparm.service has begun start-up

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit rockstor-hdparm.service has begun starting up.

Feb 11 14:25:11 rockstorw hdparm[22554]: /dev/disk/by-id/ata-HGST_HDN724040ALE640_PK1334PEHZB3XS: No such file or directory

Feb 11 14:25:11 rockstorw systemd[1]: rockstor-hdparm.service: main process exited, code=exited, status=2/INVALIDARGUMENT

Feb 11 14:25:11 rockstorw systemd[1]: Failed to start Rockstor hdparm settings.

-- Subject: Unit rockstor-hdparm.service has failed

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit rockstor-hdparm.service has failed.

--

-- The result is failed.

Feb 11 14:25:11 rockstorw systemd[1]: Unit rockstor-hdparm.service entered failed state.

Feb 11 14:25:11 rockstorw systemd[1]: rockstor-hdparm.service failed.

Feb 11 14:25:11 rockstorw polkitd[4284]: Unregistered Authentication Agent for unix-process:22548:283853767 (system bus name :1.2061, object path /org/freedesktop/PolicyKit1/AuthenticationAgent, locale en_US.UTF-8) (disconnected from bus)

Feb 11 14:25:33 rockstorw systemd[1]: Configuration file /etc/systemd/system/rockstor-hdparm.service is marked world-inaccessible. This has no effect as configuration data is accessible via APIs without restrictions. Proceeding anyway.

As you can see above, it seems that hdparm is trying to access a device that I removed about a month ago; see here: Advice needed on All-Drives replacement - #8 by Hooverdan

I replaced all HGST drives with WD ones, and after each removal/detaching I also ensured that I deleted the entry in the disks line.
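For anyone wanting to check for the same kind of leftover entry, here is a minimal sketch of how one might list device paths that a unit file still references but that no longer exist. It assumes the unit file contains `ExecStart=` lines with `/dev/disk/by-id/...` paths, as the status output above suggests. The function name `find_stale_devices` is made up for illustration and is not part of Rockstor. It only reads the file and changes nothing.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rockstor): report /dev/disk/by-id paths
# that appear on ExecStart lines of a unit file but are absent on this system.
# Assumption: the unit file layout matches what "systemctl status" shows above.
find_stale_devices() {
    unit_file="$1"
    # Extract every /dev/disk/by-id/... token from the ExecStart lines.
    grep '^ExecStart=' "$unit_file" \
        | grep -o '/dev/disk/by-id/[^[:space:]]*' \
        | sort -u \
        | while read -r dev; do
            # -e follows symlinks, so a dangling by-id link also counts as missing.
            if [ ! -e "$dev" ]; then
                echo "missing: $dev"
            fi
        done
}

# Example call (path taken from the "Loaded:" line above):
# find_stale_devices /etc/systemd/system/rockstor-hdparm.service
```

If this prints the HGST path from the journal output, that would at least confirm the stale entry lives in the unit file itself rather than somewhere else.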

The only way I can get the UI back is issuing a reboot via the terminal, which I did. The system comes back up, and shows the update still as available:

If @phillxnet or @Flox confirm that it’s update related, I can update the title, but I suspect this could have happened at any point in time. It seems to me that there’s a zombie entry somewhere for the last removed device (I think the ID is from the last drive I removed to complete my disk replacement journey).

No idea how to fix this. Any suggestions? As of now, the box runs fine as far as I can tell: I can access the shares, open files, etc. So this does not seem to be an issue during the reboot, only as a post-upgrade piece …