After a rare power outage, Rockstor rebooted and seems to function fine as a NAS, and the RockOns are working as well. However, the WebUI is suddenly no longer reachable: in various browsers I get SSL errors regarding the protocol or, in Firefox's case, the RECORD_TOO_LONG message.

As before, I have been using the https:// version of the IP address.
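A RECORD_TOO_LONG-style error is commonly what a browser reports when the server answers in unencrypted HTTP on a port where TLS was expected. One way to check that from another machine is to send a plain HTTP request to port 443 and look at what comes back. The following is a minimal sketch, assuming Python 3 is available on the client; `classify_reply` and `probe_plain_http` are hypothetical helper names, not part of Rockstor.

```python
import socket


def classify_reply(data):
    """Classify the first bytes a server sent back.

    Returns a (kind, detail) tuple where kind is one of
    "tls", "plain-http", "empty" or "unknown".
    """
    if not data:
        return ("empty", "")
    # TLS records start with a content-type byte (0x14..0x17)
    # followed by the protocol major version byte 0x03.
    if len(data) >= 2 and 0x14 <= data[0] <= 0x17 and data[1] == 0x03:
        return ("tls", "")
    if data.startswith(b"HTTP/"):
        # Keep only the status line, e.g. "HTTP/1.1 400 Bad Request".
        status_line = data.split(b"\r\n", 1)[0].decode("latin-1")
        return ("plain-http", status_line)
    return ("unknown", "")


def probe_plain_http(host, port=443, timeout=5.0):
    """Send an unencrypted HTTP request to host:port and classify the reply."""
    request = b"GET / HTTP/1.0\r\nHost: " + host.encode("ascii") + b"\r\n\r\n"
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(request)
        return classify_reply(sock.recv(256))
```

Calling `probe_plain_http` with the NAS IP address returns the classification. A `plain-http` result with an ordinary status line (a 200 or a redirect) would suggest the port is serving unencrypted HTTP. An nginx listener that does have TLS enabled normally answers such a request with a 400 saying that a plain HTTP request was sent to an HTTPS port.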

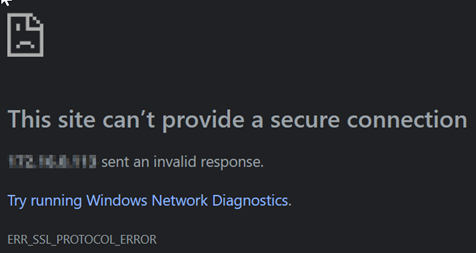

Chrome, New Edge:

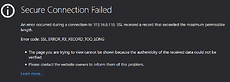

Firefox:

I investigated whether I had suddenly run into the elusive empty nginx.conf file issue, but my configuration file seems to be populated just fine.

Back in November 2020, I had already checked whether I was affected by a mismatch between an nginx update and its openssl11-libs dependency, as mentioned here:

But I got both versions in sync at the time, and they still are.

However, if I check the nginx status right after a reboot, it shows this:

systemctl status nginx

> ● nginx.service - The nginx HTTP and reverse proxy server

> Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor preset: disabled)

> Active: inactive (dead)

And the overall status of the Rockstor services:

    systemctl status -l rockstor-pre rockstor-bootstrap rockstor

    ● rockstor-pre.service - Tasks required prior to starting Rockstor
       Loaded: loaded (/etc/systemd/system/rockstor-pre.service; enabled; vendor preset: disabled)
       Active: active (exited) since Fri 2021-01-29 09:43:59 PST; 1h 2min ago
     Main PID: 3923 (code=exited, status=0/SUCCESS)
       CGroup: /system.slice/rockstor-pre.service

    Jan 29 09:43:58 rockstorw initrock[3923]: 2021-01-29 09:43:58,908: Done
    Jan 29 09:43:58 rockstorw initrock[3923]: 2021-01-29 09:43:58,908: Running prepdb...
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,287: Done
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,287: stopping firewalld...
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,332: firewalld stopped and disabled
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,463: Normalising on shellinaboxd service file
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,463: - shellinaboxd.service already exists
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,463: rockstor service looks correct. Not updating.
    Jan 29 09:43:59 rockstorw initrock[3923]: 2021-01-29 09:43:59,463: rockstor-bootstrap.service looks correct. Not updating.
    Jan 29 09:43:59 rockstorw systemd[1]: Started Tasks required prior to starting Rockstor.

    ● rockstor-bootstrap.service - Rockstor bootstrapping tasks
       Loaded: loaded (/etc/systemd/system/rockstor-bootstrap.service; enabled; vendor preset: disabled)
       Active: active (exited) since Fri 2021-01-29 09:44:33 PST; 1h 2min ago
      Process: 9196 ExecStart=/opt/rockstor/bin/bootstrap (code=exited, status=0/SUCCESS)
     Main PID: 9196 (code=exited, status=0/SUCCESS)
       CGroup: /system.slice/rockstor-bootstrap.service

    Jan 29 09:44:00 rockstorw bootstrap[9196]: If you have done those things and are still encountering
    Jan 29 09:44:00 rockstorw bootstrap[9196]: this message, please follow up at
    Jan 29 09:44:00 rockstorw bootstrap[9196]: https://bit.ly/setuptools-py2-warning.
    Jan 29 09:44:00 rockstorw bootstrap[9196]: ************************************************************
    Jan 29 09:44:00 rockstorw bootstrap[9196]: sys.version_info < (3,) and warnings.warn(pre + "*" * 60 + msg + "*" * 60)
    Jan 29 09:44:33 rockstorw bootstrap[9196]: BTRFS device scan complete
    Jan 29 09:44:33 rockstorw bootstrap[9196]: Bootstrapping complete
    Jan 29 09:44:33 rockstorw bootstrap[9196]: Running qgroup cleanup. /opt/rockstor/bin/qgroup-clean
    Jan 29 09:44:33 rockstorw bootstrap[9196]: Running qgroup limit maxout. /opt/rockstor/bin/qgroup-maxout-limit
    Jan 29 09:44:33 rockstorw systemd[1]: Started Rockstor bootstrapping tasks.

    ● rockstor.service - RockStor startup script
       Loaded: loaded (/etc/systemd/system/rockstor.service; enabled; vendor preset: enabled)
       Active: active (running) since Fri 2021-01-29 09:43:59 PST; 1h 2min ago
     Main PID: 9195 (supervisord)
       CGroup: /system.slice/rockstor.service
               ├─9195 /usr/bin/python2 /opt/rockstor/bin/supervisord -c /opt/rockstor/etc/supervisord.conf
               ├─9212 nginx: master process /usr/sbin/nginx -c /opt/rockstor/etc/nginx/nginx.conf
               ├─9213 /usr/bin/python2 /opt/rockstor/bin/gunicorn --bind=127.0.0.1:8000 --pid=/run/gunicorn.pid --workers=2 --log-file=/opt/rockstor/var/log/gunicorn.log --pythonpath=/opt/rockstor/src/rockstor --timeout=120 --graceful-timeout=120 wsgi:application
               ├─9214 /usr/bin/python2 /opt/rockstor/bin/data-collector
               ├─9215 /usr/bin/python2 /opt/rockstor/bin/django ztaskd --noreload --replayfailed -f /opt/rockstor/var/log/ztask.log
               ├─9216 nginx: worker process
               ├─9217 nginx: worker process
               ├─9238 /usr/bin/python2 /opt/rockstor/bin/gunicorn --bind=127.0.0.1:8000 --pid=/run/gunicorn.pid --workers=2 --log-file=/opt/rockstor/var/log/gunicorn.log --pythonpath=/opt/rockstor/src/rockstor --timeout=120 --graceful-timeout=120 wsgi:application
               └─9243 /usr/bin/python2 /opt/rockstor/bin/gunicorn --bind=127.0.0.1:8000 --pid=/run/gunicorn.pid --workers=2 --log-file=/opt/rockstor/var/log/gunicorn.log --pythonpath=/opt/rockstor/src/rockstor --timeout=120 --graceful-timeout=120 wsgi:application

    Jan 29 09:44:00 rockstorw supervisord[9195]: 2021-01-29 09:44:00,187 CRIT Server 'unix_http_server' running without any HTTP authentication checking
    Jan 29 09:44:00 rockstorw supervisord[9195]: 2021-01-29 09:44:00,187 INFO supervisord started with pid 9195
    Jan 29 09:44:01 rockstorw supervisord[9195]: 2021-01-29 09:44:01,189 INFO spawned: 'nginx' with pid 9212
    Jan 29 09:44:01 rockstorw supervisord[9195]: 2021-01-29 09:44:01,190 INFO spawned: 'gunicorn' with pid 9213
    Jan 29 09:44:01 rockstorw supervisord[9195]: 2021-01-29 09:44:01,191 INFO spawned: 'data-collector' with pid 9214
    Jan 29 09:44:01 rockstorw supervisord[9195]: 2021-01-29 09:44:01,192 INFO spawned: 'ztask-daemon' with pid 9215
    Jan 29 09:44:03 rockstorw supervisord[9195]: 2021-01-29 09:44:03,592 INFO success: data-collector entered RUNNING state, process has stayed up for > than 2 seconds (startsecs)
    Jan 29 09:44:03 rockstorw supervisord[9195]: 2021-01-29 09:44:03,592 INFO success: ztask-daemon entered RUNNING state, process has stayed up for > than 2 seconds (startsecs)
    Jan 29 09:44:06 rockstorw supervisord[9195]: 2021-01-29 09:44:06,596 INFO success: nginx entered RUNNING state, process has stayed up for > than 5 seconds (startsecs)
    Jan 29 09:44:06 rockstorw supervisord[9195]: 2021-01-29 09:44:06,597 INFO success: gunicorn entered RUNNING state, process has stayed up for > than 5 seconds (startsecs)

My nginx.conf file looks like this (/opt/rockstor/etc/nginx/nginx.conf) and appears normal to me:

    daemon off;
    worker_processes 2;

    events {
        worker_connections 1024;
        use epoll;
    }

    http {
        include /opt/rockstor/etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main
            '$remote_addr - $remote_user [$time_local] '
            '"$request" $status $bytes_sent '
            '"$http_referer" "$http_user_agent" '
            '"$gzip_ratio"';

        client_header_timeout 10m;
        client_body_timeout 10m;
        send_timeout 10m;
        connection_pool_size 256;
        client_header_buffer_size 1k;
        large_client_header_buffers 4 8k;
        request_pool_size 4k;
        gzip on;
        gzip_min_length 1100;
        gzip_buffers 4 8k;
        gzip_types text/plain;
        output_buffers 1 32k;
        postpone_output 1460;
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 75 20;
        ignore_invalid_headers on;
        index index.html;

        server {
            listen 443 default_server;
            server_name "~^(?<myhost>.+)$";
            #ssl on; deprecated
            ssl_protocols TLSv1.2 TLSv1.1 TLSv1;
            ssl_certificate /opt/rockstor/certs/rockstor.cert;
            ssl_certificate_key /opt/rockstor/certs/rockstor.key;

            location /site_media {
                root /media/; # Notice this is the /media folder that we create above
            }

            location ~* ^.+\.(zip|rar|bz2|doc|xls|exe|pdf|ppt|txt|tar|mid|midi|wav|bmp|rtf|mov) {
                access_log off;
                expires 30d;
            }

            location /static {
                root /opt/rockstor/;
            }

            location /logs {
                root /opt/rockstor/src/rockstor/;
            }

            location / {
                proxy_pass_header Server;
                proxy_set_header Host $http_host;
                proxy_set_header X-Forwarded-Proto https;
                proxy_redirect off;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Scheme $scheme;
                proxy_connect_timeout 75;
                proxy_read_timeout 120;
                proxy_pass http://127.0.0.1:8000/;
            }

            location /socket.io {
                proxy_pass http://127.0.0.1:8001;
                proxy_redirect off;
                proxy_http_version 1.1;
                proxy_set_header Upgrade $http_upgrade;
                proxy_set_header Connection "upgrade";
            }

            location /shell/ {
                valid_referers server_names;
                if ($invalid_referer) { return 404; }
                proxy_pass http://127.0.0.1:4200;
                proxy_redirect off;
                proxy_http_version 1.1;
                proxy_set_header Upgrade $http_upgrade;
                proxy_set_header Connection "upgrade";
            }
        }
    }
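Since the `ssl on;` line in this file is commented out as deprecated, one thing that may be worth ruling out is whether the `listen` directive for port 443 carries the `ssl` parameter, which is how current nginx versions enable TLS on a listening port. The following is a rough sketch, assuming Python 3 and a simple line-based scan rather than a real nginx parser; `find_listen_without_ssl` is a hypothetical helper.

```python
import re


def find_listen_without_ssl(conf_text):
    """Return (line number, directive) for port-443 listeners lacking 'ssl'.

    Line-based heuristic only: comments are stripped, and include
    files are not followed.
    """
    findings = []
    for lineno, raw in enumerate(conf_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        match = re.match(r"listen\s+(.*?);", line)
        if not match:
            continue
        params = match.group(1).split()
        address = params[0] if params else ""
        # The port is whatever follows the last ':' (or the whole token).
        port = address.rsplit(":", 1)[-1]
        if port == "443" and "ssl" not in params[1:]:
            findings.append((lineno, line))
    return findings
```

Passing the contents of a copy of /opt/rockstor/etc/nginx/nginx.conf to `find_listen_without_ssl` returns an empty list when every port-443 listener has the `ssl` parameter. Any entries it does return are only candidates to look at more closely, not a confirmed diagnosis.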

In the logs (/opt/rockstor/var/log/rockstor.log) I can't find anything suspicious either. The entries are all the “can’t list qgroups: quotas are not enabled” message.
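Because the log is dominated by one repeated message, filtering it out can make any other entries easier to spot. The following is a small sketch, assuming Python 3 and a plain-text log with one entry per line; `filter_noise` and `remaining_entries` are hypothetical helpers.

```python
def filter_noise(lines, noise=("quotas are not enabled",)):
    """Return the log lines that contain none of the known-noise substrings."""
    return [line for line in lines if not any(n in line for n in noise)]


def remaining_entries(path, noise=("quotas are not enabled",)):
    """Read a log file and return the lines left after removing the noise."""
    with open(path, errors="replace") as handle:
        return filter_noise(handle.read().splitlines(), noise)
```

For example, `remaining_entries("/opt/rockstor/var/log/rockstor.log")` would return only the lines that are not about the qgroup message. Continuation lines of multi-line tracebacks are kept, since they do not contain the filtered text.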

Any suggestions on what else I should look at? The good part is that I can still access the shares and their data, and the RockOns are operating normally…