10G network was up and just as I was about to speed test it, one of the Intel 10G cards died… ordered replacement…

I hate Murphy! LOL!

Yep, that Murphy is a law unto himself.

Hope the replacement turns up quickly. Looking forward to seeing what results you get.

Well, I learned a few things about the 10Gb network. I got two cards that test the same to replace the bad one.

The best transfer rates are around 850MB/sec on the 4.5GHz i5-3570K setup with 1600 memory. However, packets are sometimes lost and the transfer halts until a timeout, then starts again. Jumbo frames do nothing to help, because nothing else on the network really seems to support them. Hours of tweaking made no difference to peak speed. The best steady transfer rate is around 750MB/sec (from the NAS to the external setup).
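For context, these figures can be compared with the raw 10GbE line rate. A minimal sketch, assuming the copy tools report decimal megabytes (1 MB = 10^6 bytes) and ignoring Ethernet/IP/TCP/SMB overhead, so the practical ceiling sits somewhat below the computed 1250 MB/sec:

```python
# Compare measured copy speeds with the raw 10GbE line rate.
# Assumption: decimal units (1 MB = 10**6 bytes); protocol overhead is ignored,
# so real-world throughput always lands somewhat below the raw figure.


def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Raw link speed in MB/s: gigabits -> megabits (x1000) -> megabytes (/8)."""
    return gbit_per_s * 1000 / 8


def utilization(measured_mb_per_s: float, gbit_per_s: float = 10.0) -> float:
    """Fraction of the raw line rate achieved by a measured transfer."""
    return measured_mb_per_s / line_rate_mb_per_s(gbit_per_s)


if __name__ == "__main__":
    print(f"10GbE raw line rate: {line_rate_mb_per_s(10):.0f} MB/s")
    # Rates quoted in the posts in this thread.
    for label, rate in [("i5-3570K peak", 850), ("i5-3570K steady", 750), ("E3-1240V2", 550)]:
        print(f"{label}: {rate} MB/s = {utilization(rate):.0%} of line rate")
```

Under these assumptions the 850 MB/sec peak works out to about 68% of line rate and the E3-1240V2's 550 MB/sec to about 44%.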

I tried the same card on an E3-1240V2 setup and got worse results: max good transfer speeds of around 550 MB/sec.

Next is to try it on the i7-5930K setup, then eventually on the Ryzen 9 5950X setup…

Also, all tests were FROM the Rockstor setup TO an external 1TB RAID-0 SSD setup. Transfers from the different setups TO the Rockstor NAS seem to be slowed by the write speed of the target… I'm not sure what is happening with the Rockstor cache at this point; I get a lot of speed variance writing to the NAS.

And the A/C in my home broke and got fixed a couple of days later for $619. Sheesh.

The i7-5930K running at 4GHz is the best so far, and some things are making sense now.

I can in fact transfer to the NAS at the full 1.09 GB/sec from a RamDisk. However, when the transfer continues past about 24GB, things start slowing down… But all in all, it seems the major areas of slowdown are SSD and disk R/W speeds. This is not unexpected, just not to the extent I thought.
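The "fast at first, then slower" pattern is what a simple buffering model predicts: if data arrives faster than the disks can absorb it, the surplus piles up in RAM until the cache is full, after which throughput falls to disk speed. A sketch of that model; it is deliberately simplified (real kernels start flushing and throttling well before RAM is actually full), and the example numbers are hypothetical, not measurements from this thread:

```python
from typing import Optional, Tuple


def cache_fill_point(cache_mb: float, ingress_mb_s: float,
                     drain_mb_s: float) -> Optional[Tuple[float, float]]:
    """Return (seconds, MB transferred) until a RAM write cache fills up.

    Simplified model: data arrives at ingress_mb_s, is flushed to disk at
    drain_mb_s, and the difference accumulates in the cache. Returns None
    when the disks keep up (drain >= ingress), i.e. the cache never fills.
    """
    surplus = ingress_mb_s - drain_mb_s
    if surplus <= 0:
        return None
    seconds = cache_mb / surplus
    return seconds, ingress_mb_s * seconds


if __name__ == "__main__":
    # Hypothetical values: 20 GB usable cache, ~1090 MB/s over the wire,
    # disks sustaining 400 MB/s.
    result = cache_fill_point(20_000, 1090, 400)
    if result:
        secs, sent = result
        print(f"cache full after ~{secs:.0f} s, ~{sent / 1000:.1f} GB sent")
```

In this model the amount sent before the slowdown is cache × ingress / (ingress - drain), so faster disks push the slowdown later, and disks that match the network speed avoid it entirely.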

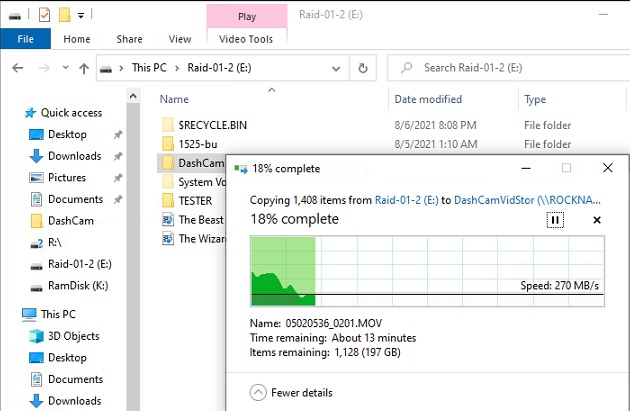

So, copying 11GB from the SSD RAID-0 to the RamDisk is about as expected:

Copying 11GB from the RamDisk to the NAS (SSD RAID-0) is okay so far:

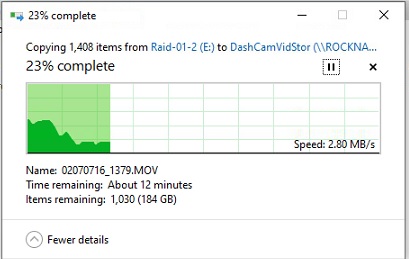

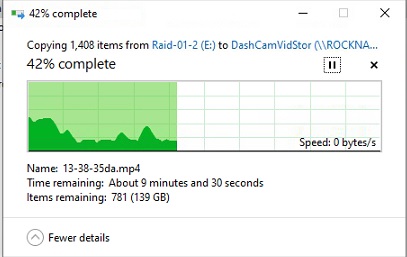

Then I tried a large 241GB directory from the RAID-0 to the NAS, and what you see is the fast start slowly dropping in speed, sometimes to 0!! Well, it turns out the two SSDs are mix-and-match ones I had lying around… one has a write speed near 400MB/sec and the other can only do about 190MB/sec sustained.

Bumping along from about 230 MB/sec to zero and back the whole way…

Bumping along on a large transfer on the 4TB RAID-1 setup… 143MB/sec to 0 and back…
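A rough rule of thumb for why mismatched drives hurt: RAID-0 splits every write across all members, so each stripe finishes only when the slowest drive does, while RAID-1 writes a full copy to every member. A simplified sketch that ignores SSD caching, controller limits, and btrfs chunk-allocation details:

```python
from typing import Sequence


def raid0_write_ceiling(member_mb_s: Sequence[float]) -> float:
    """Approximate sustained write ceiling for a RAID-0 stripe set.

    Each write is split evenly across all members, so the slowest member
    paces the whole array: n_members * slowest_member.
    """
    return len(member_mb_s) * min(member_mb_s)


def raid1_write_ceiling(member_mb_s: Sequence[float]) -> float:
    """Every member stores a full copy, so the slowest one sets the limit."""
    return min(member_mb_s)


if __name__ == "__main__":
    mismatched = [400, 190]  # sustained write speeds from the post above, MB/s
    print(f"RAID-0 of {mismatched}: ~{raid0_write_ceiling(mismatched)} MB/s")
    print(f"RAID-1 of {mismatched}: ~{raid1_write_ceiling(mismatched)} MB/s")
```

For the pair above that gives about 380 MB/sec for RAID-0, well short of the 800 MB/sec two matched 400 MB/sec drives could reach under the same rule.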

ASSUMING the new 1TB SSDs are faster and balanced, it looks like my NAS is on its way to being finalized.

Funny how the NAS just gets bogged down when the cache is full… it actually stops while it cleans up shop, then continues on.
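One plausible factor worth checking (not established in this thread): on a stock Linux kernel, buffered writes are held as "dirty" pages in RAM. Background flushing starts once `dirty_background_ratio`/`dirty_background_bytes` is exceeded, and once `dirty_ratio`/`dirty_bytes` is reached the writing process is throttled until data reaches disk, which can look like a stall. A sketch that reads those four sysctls from `/proc/sys/vm` and estimates the thresholds; treating all 32 GiB as "dirtyable" is an assumption (the kernel actually uses free plus reclaimable memory), so the results are upper bounds:

```python
from pathlib import Path
from typing import Optional

VM_DIR = Path("/proc/sys/vm")


def read_vm_setting(name: str, vm_dir: Path = VM_DIR) -> Optional[int]:
    """Read an integer sysctl such as dirty_ratio, or None if unavailable."""
    try:
        return int((vm_dir / name).read_text().strip())
    except (OSError, ValueError):
        return None


def dirty_limit_bytes(ratio_pct: int, absolute_bytes: int, dirtyable_bytes: int) -> int:
    """Effective limit: a non-zero *_bytes value takes precedence over *_ratio."""
    if absolute_bytes:
        return absolute_bytes
    return dirtyable_bytes * ratio_pct // 100


if __name__ == "__main__":
    # Assumption: treat all 32 GiB of NAS RAM as dirtyable. The kernel really
    # uses free + reclaimable memory, so this is an upper bound.
    dirtyable = 32 * 1024**3
    for kind in ("dirty_background", "dirty"):
        ratio = read_vm_setting(f"{kind}_ratio")
        nbytes = read_vm_setting(f"{kind}_bytes")
        if ratio is None and nbytes is None:
            print(f"{kind}: not available (not Linux?)")
            continue
        limit = dirty_limit_bytes(ratio or 0, nbytes or 0, dirtyable)
        print(f"{kind}: ratio={ratio}% bytes={nbytes} -> about {limit / 1024**3:.1f} GiB")
```

Lowering these limits makes flushes smaller and more frequent, which can smooth out stop-and-go writes; whether that helps on Rockstor would need testing.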

Open IMAGES in new TAB to get full size… hope I am not boring anyone…

NEXT: Since the network and NAS are working, install openSUSE Leap 15.3 on the 4.5GHz setup…

Unanswered questions about how main memory cache works in Rockstor have to be researched as well:

So much to do------------ LOL!

@Tex1954 Thanks for the extensive report. Nice.

Re:

Have you disabled quotas on the Rockstor pool you are using? Quotas can have a significant impact on a pool's performance and IO characteristics, so it's worth trying if you can live with the reduced ability to report share usage.
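For reference, btrfs quotas can be switched off from the command line with `btrfs quota disable <path>` (and back on with `btrfs quota enable <path>`) against the mounted pool. A small sketch that builds the command and only prints it unless told otherwise; the pool name is hypothetical, and the `/mnt2/` mount location is an assumption based on Rockstor's usual layout. If your Rockstor version offers a quota setting for the pool in the Web-UI, that is probably the safer route, since Rockstor may not expect quota changes made behind its back.

```python
import shlex
import subprocess
from typing import List


def quota_command(pool_mount: str, action: str = "disable") -> List[str]:
    """Build a `btrfs quota enable|disable <path>` command for a mounted pool."""
    if action not in ("enable", "disable"):
        raise ValueError("action must be 'enable' or 'disable'")
    return ["btrfs", "quota", action, pool_mount]


def run(cmd: List[str], dry_run: bool = True) -> None:
    """Show the command; only execute it (requires root) when dry_run is False."""
    print("$", shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical pool name; the /mnt2/ prefix is an assumed Rockstor mount location.
    run(quota_command("/mnt2/mypool"))
```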

Hope that helps.

Yes, it does. I understand the limitations and they pretty much make sense to me. If I were content with 1Gbps speed, all would be well in all cases. The fact that the system CAN do 10Gbps is proven, and the only real limitation is disk access times. I did know this would be the case going into this build, considering the 32GB DRAM limitation, so no surprises there.

I have Leap 15.3 up and running now and am playing with it a little while trying to figure out glitches on the NAS when swapping SSDs.

Okay, I set up the new X570 Ryzen 9 5950X system with one of the two 1TB NVMe drives installed and did some more speed testing.

The NVMe drive in the 3.88GHz Ryzen 9 5950X system can read and write at well over 3,000 MB/sec, far faster than the 10Gbps LAN, so it has no problem sustaining 10Gbps.

Rockstor has 2 FAST 512GB SSDs in RAID-0. Their write speed in this configuration is proven to be between 950 and 1020 MB/sec. READ speeds are faster, at about 1056 MB/sec.

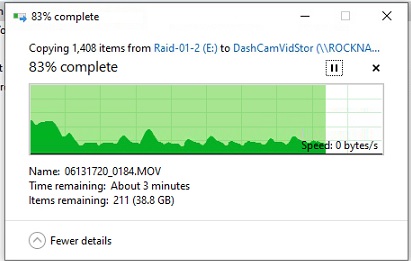

Max throughput in either direction between the 5950X and Rockstor is around 850MB/sec. However, it sometimes dips and dives to zero, and when it does I see the NAS CPU usage skyrocket briefly. This happens even when the NAS's 32GB of memory isn't fully utilized as cache, but more often when it is.

Clearly Rockstor has something that isn't optimized as far as large, long file transfers are concerned. The RAID-0 SSD setup should be able to handle full-speed transfers. This is all with quotas disabled.

HOWEVER, I am personally happy with the setup as is for now. These huge transfers are few and far between in real practice.

I think I want to wait for the openSUSE version to be released before I play with Rock-ons and other things, but for now this setup will do…

The only thing still on my mind is that if, say, the NAS CPU or motherboard dies, I can't move the disks to a different Windows system and keep going like I could before.

Still trudging along…

You can look at this transfer picture and see how Rockstor stalls many times during a 250GB transfer.

@Tex1954 Hello again.

Just wanted to chip in on this one:

But you could move them to a fresh Rockstor install. Or, for that matter, read the pools with any modern Linux distribution. A Rockstor install can import them far more easily, though, with far less knowledge required.

See: Import BTRFS Pool: Disks — Rockstor documentation

And you get the familiar representation. A modern Rockstor can import any btrfs volume (a Pool, in Rockstor speak) created by a prior Rockstor. And we use vanilla btrfs, which is why any modern Linux install can also mount our Pools. Note, however, that the reverse is not true. We use a specific subset/arrangement of btrfs subvolumes and will ignore subvols that are not in this arrangement. However, if a subvol (a Share in Rockstor) is created on another Linux distro and it is within the existing hierarchy used by Rockstor, then it will import identically to any other Rockstor 'native' share. It's just the location/arrangement of them that is important.
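On a non-Rockstor Linux box, a pool's subvolumes can be inspected once it is mounted (for example read-only with `mount -o ro /dev/sdX /mnt/pool`, where `/dev/sdX` stands for one of the pool's member disks and `/mnt/pool` is a hypothetical mount point). `btrfs subvolume list /mnt/pool` then prints one line per subvolume, in the form `ID 257 gen 42 top level 5 path myshare`. A sketch that parses that output so you can see what a foreign system finds; the sample text and share names are hypothetical, and which subvolumes Rockstor actually treats as Shares is defined by its own layout, not by this script:

```python
import re
from typing import Dict, List

# One line of `btrfs subvolume list <mountpoint>` output, e.g.
#   ID 257 gen 42 top level 5 path myshare
SUBVOL_LINE = re.compile(r"^ID (\d+) gen (\d+) top level (\d+) path (.+)$")


def parse_subvolume_list(output: str) -> List[Dict]:
    """Turn `btrfs subvolume list` output into a list of dicts."""
    subvols = []
    for line in output.splitlines():
        match = SUBVOL_LINE.match(line.strip())
        if match:
            subvols.append({
                "id": int(match[1]),
                "gen": int(match[2]),
                "top_level": int(match[3]),
                "path": match[4],
            })
    return subvols


if __name__ == "__main__":
    # Hypothetical sample; on a real system capture it with e.g.
    # subprocess.run(["btrfs", "subvolume", "list", "/mnt/pool"], capture_output=True, text=True)
    sample = "ID 257 gen 42 top level 5 path myshare\nID 258 gen 40 top level 5 path media"
    for sv in parse_subvolume_list(sample):
        print(sv["id"], sv["top_level"], sv["path"])
```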

We also have the config backup and restore capability:

https://rockstor.com/docs/interface/system/config_backup.html

So if you take care not to use the system pool (which currently has no redundancy capabilities and in this scenario is 'lost' anyway), then you can import a pool into a fresh install, restore your config (assuming you made a backup and downloaded it), and be pretty much where you were. If you are on a stable subscription version of Rockstor, you can also, with immediate effect, transfer your subscription to the new system's Appliance ID (it's taken from the motherboard) via Appman (https://appman.rockstor.com/).

Also, re your performance testing: I'm assuming this was done on a v3 (CentOS) variant. Is that the case? I'm guessing our v4 should perform significantly better. It would be interesting to know.

Hope that helps.

Well, that is good to know. I will try it (a fresh install, then an import) and see how that goes. SCARY stuff, since I don't have another FULL backup at the moment.

SMB is always slow on many (small) files, but fast on some big files. That’s not Rockstor specific.
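A simple latency-plus-bandwidth model shows why: every file carries a fixed cost (opens, metadata round trips, closes) on top of the time to stream its bytes, so with many small files the fixed cost dominates. A sketch of that model; the 5 ms per-file overhead and 1000 MB/sec streaming rate are made-up illustration values, and real SMB costs depend on protocol version, client, and server:

```python
def transfer_seconds(n_files: int, mb_per_file: float,
                     per_file_overhead_s: float, bandwidth_mb_s: float) -> float:
    """Latency + bandwidth model: fixed cost per file plus streaming time."""
    total_mb = n_files * mb_per_file
    return n_files * per_file_overhead_s + total_mb / bandwidth_mb_s


def effective_throughput(n_files: int, mb_per_file: float,
                         per_file_overhead_s: float, bandwidth_mb_s: float) -> float:
    """Average MB/s actually achieved once per-file overhead is included."""
    total_mb = n_files * mb_per_file
    return total_mb / transfer_seconds(n_files, mb_per_file,
                                       per_file_overhead_s, bandwidth_mb_s)


if __name__ == "__main__":
    overhead = 0.005  # hypothetical 5 ms of per-file round trips
    link = 1000.0     # hypothetical MB/s the network and disks can stream
    # The same 10,000 MB moved as one big file vs. 100,000 small ones.
    print(f"1 x 10,000 MB:    {effective_throughput(1, 10_000, overhead, link):.0f} MB/s")
    print(f"100,000 x 0.1 MB: {effective_throughput(100_000, 0.1, overhead, link):.0f} MB/s")
```

With those made-up numbers the single big file streams at close to 1000 MB/sec, while the small files average only about 20 MB/sec.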

Years ago, when I got my first QNAP NAS, the first thing I did was make a filesystem and a couple of files, then rip the drives out and try them in a Linux machine to confirm I could recover the files if the hardware died. Always a good idea to test that you can get your data back. Hehe
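That test can also be scripted: after copying files off the drives on another machine, compare checksums against the originals. A minimal sketch using SHA-256 from Python's standard library; the two directory paths are whatever you pass on the command line, and nothing here is QNAP- or Rockstor-specific:

```python
import hashlib
import sys
from pathlib import Path
from typing import Dict, List, Tuple


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in 1 MiB chunks so big files don't fill RAM."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def tree_digests(root: str) -> Dict[str, str]:
    """Map each file's path relative to root to its SHA-256 digest."""
    base = Path(root)
    return {p.relative_to(base).as_posix(): file_digest(p)
            for p in base.rglob("*") if p.is_file()}


def compare_trees(original: str, recovered: str) -> Tuple[List[str], List[str], List[str]]:
    """Return (missing, extra, changed) relative paths between two trees."""
    a, b = tree_digests(original), tree_digests(recovered)
    missing = sorted(a.keys() - b.keys())
    extra = sorted(b.keys() - a.keys())
    changed = sorted(k for k in a.keys() & b.keys() if a[k] != b[k])
    return missing, extra, changed


if __name__ == "__main__" and len(sys.argv) == 3:
    missing, extra, changed = compare_trees(sys.argv[1], sys.argv[2])
    print(f"missing: {len(missing)}, extra: {len(extra)}, changed: {len(changed)}")
    for name in missing + changed:
        print("  problem:", name)
```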

BOY OH BOY AM I GETTING THERE!!!

Per my other post, the upgrade to 4.09 solved so many problems! Everything works as expected now, and guess what? I even got “mc” to install (using sudo zypper in mc) and to run (sudo mc) in the Rockstor “system shell”, and now I'm a 100% happy camper!!! As soon as a very few more parts arrive, I will be able to button up the NAS and then post a build report with pictures.

(Open image in new Tab to see full size)

WOOHOOO! Now I can delete /bin /etc /libs or whatever I want!

LOL!

Not for the faint of heart, I dare say…
