@miversen33 Welcome to the Rockstor community forum.

Re:

What happens when you try to change the custom mount options to degraded,rw?

If a pool has a missing disk, as you explain yours does, it will refuse to mount without a degraded option. That is normal. But what may have happened here is a partial removal during:

Btrfs does input/output error if it can’t find a ‘good’ copy: or it may be reporting an actual drive input-output error. Either way it’s now poorly. And in which case you may have more limited options. If degraded,ro mount works; it gives you a change to refresh your backups if need be.

Also of relevance here is the age of your system. What version of Rockstor are your running, and what base OS is that version using: top right of Web-UI should tell you all these things.

That would be a degraded pool, and the parity btrfs-raids of 5&6 do allow for drive removal. But are known to have less robust repair capabilities. Hence me asking about the base OS and rockstor version your are running. Leap 15.6 carries, by default, newer kernel and user land for btrfs than our prior Leap bases. And can do parity raid rw by default. But if you are already using say TW or stable kernel backports then you already have the newer btrfs capabilities.

You best option, if you can get degraded,ro mount is to move the data off the flaky pool, and then recreate a pool with know good drives and restore. As per our prior warnings against the parity based btrfs-raid levels they are weaker in repair scenarios. But at least our upstream (openSUSE) have now removed their (and consequently our) default ro (default rw disabled) capability. So that bodes well for improved confidence in the parity raids. But if you end up re-doing, consider using one of the now available mixed raid so metadata is not using parity raid, that way you get the space advantage of the parity raid level for data, but have a more robust raid1c3 or raid1c4 for medadata. We have a slightly outdated doc entry at the end of the following for this:

https://rockstor.com/docs/howtos/stable_kernel_backport.html

We do now support within the Web-UI, in more recent versions, mixed raid levels.

Also, for some CLI hints as to repair options, see our:

https://rockstor.com/docs/data_loss.html

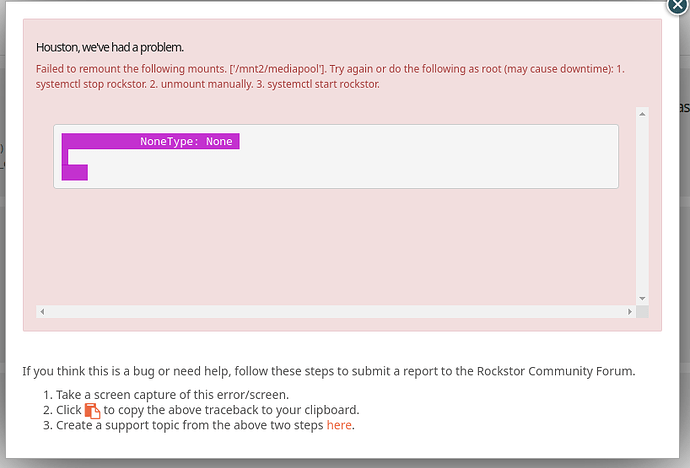

And it’s always worth doing a reboot before attempting to change custom mount options. That is what the message you received was attempting to avoid re stopping and starting services: a reboot does the same. Sometimes btrfs mount options can be sticky, and only free-up over an unmount rescan and mount.

Hope that helps, any my apologies for now having more time to detail your optinos. But those doc references should help, and first-things-first you must use what you have already (degraded,ro) if that is working, to get data off if need be. Otherwise things could be worse. The parity btrfs-raid levels were a little flaky and your pool is probably form that time. So a freshly recreated pool will inherit the newer improvements if you first have newer OS in place before creating the pool again: if that is what you end up doing.

![]()