So potentially the HA RockOn would actually spawn the HA and the JS container on install?

Yes, you can define multiple containers within the json file, and put a launch order on them.

You would probably have it launch the zwave-js-server first, so that the HA can find it once it launches. You would connect the two via an additional network (so it can do name resolution, etc.). You can take a look at these two (draft) PRs that are currently in process to get an idea of how that works:

and

@Hooverdan @Flox I’ve been picking away at trying to build the json definition to launch the Zwave JS server. I read the examples under the registry and I’m using the HA definition written with the device pass-through as a model. I’m also using this docker-compose.yml file as a guide, assuming there are probably some similarities in the data between the json and yaml methods of launching a docker container.

Here’s what I have so far.

    {
      "Zwave JS server for Home Assistant": {
        "description": "Zwave JS server required to host the Zwave adapter used by Home Assistant.This image will open a web socket on port 3000 for Home Assistant communications. Port 8091 is the UI for JS server configuration.",
        "ui": {
          "slug": ""
        },
        "website": "Z-Wave JS · GitHub",
        "version": "3.7",
        "containers": {
          "zwaveusb": {
            "image": "zwavejs/zwave-js-ui",
            "tag": "latest",
            "launch_order": 1,
            "opts": [
              [
                "--net",
                "host"
              ]
            ],
            "devices": {
              "ZwaveUSBStick": {
                "description": "Optional: /dev/ttyACM# or /dev/ttyUSB#. Can be found with command ls -l /dev/serial/by-id. Leave blank if not needed.",
                "label": "ZwaveUSBStick"
              },
              "ports": {
                "3000": {
                  "description": "This websocket is used by HA to connect. You may need to open the same port on your firewall (protocol: tcp).",
                  "host_default": 3000,
                  "label": "Websocket",
                  "protocol": "tcp",
                  "ui": false
                },
                "8091": {
                  "description": "This UI for JS server configuration. ",
                  "host_default": 8091,
                  "label": JS server UI
                  "protocol": "tcp",
                  "ui": true
                }
              },
              "volumes": {
                "/zwave-config": {
                  "description": "Choose a Share for configuration. E.g.: create a Share called zwave-config for this purpose alone.",
                  "label": "Zwave-Config Share"
                }
              },
              "environment": {
                "SESSION_SECRET": {
                  "description": "Enter a master secret for your security keys.",
                  "label": "Network Key",
                  "index": 1
                },
                "ZWAVEJS_EXTERNAL_CONFIG": {
                  "description": "Enter a valid Group ID (GID) to use along with the above UID. It(or the above UID) must have full permissions to all Shares mapped in the previous step.",
                  "label": "GID",
                  "index": 2
                }
              }
            }
          }
        }
      }

I’m not sure how to handle this environment statement from the yaml file:

- ZWAVEJS_EXTERNAL_CONFIG=/usr/src/app/store/.config-db

I’m also unclear on where to put the networks: zwave: statement in the json file. I really appreciate all your help so far. If you have time, a peer review and comments on what I have so far would be a huge help.

Thanks

Del

Away from a computer again, but I will hopefully take a look in a little bit.

Sorry, took a little longer.

Looking here at how the docker run command is executed, I don’t think you need any of the environment variables.

You also swapped a couple of the device and volume variables in your example. Something like this could possibly already work for you:

{

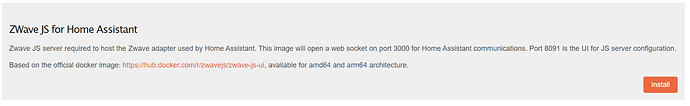

"ZWave JS for Home Assistant": {

"description": "Zwave JS server required to host the Zwave adapter used by Home Assistant. This image will open a web socket on port 3000 for Home Assistant communications. Port 8091 is the UI for JS server configuration.<p>Based on the official docker image: <a href='https://hub.docker.com/r/zwavejs/zwave-js-ui' target='_blank'>https://hub.docker.com/r/zwavejs/zwave-js-ui</a>, available for amd64 and arm64 architecture.</p>",

"ui": {

"slug": ""

},

"website": "https://github.com/zwave-js/zwave-js-ui/",

"version": "3.7",

"containers": {

"zwaveusb": {

"image": "zwavejs/zwave-js-ui",

"tag": "latest",

"launch_order": 1,

"opts": [

[

"–net",

"host"

]

],

"devices": {

"/dev/zwave": {

"description": "Optional: device path /dev/ttyACM # or /dev/ttyUSB. Can be found with command ls - l /dev/serial/by-id. Leave blank if not needed.",

"label": "ZwaveUSBStick"

}

},

"ports": {

"3000": {

"description": "This websocket is used by HA to connect. You may need to open the same port on your firewall (protocol: tcp).",

"host_default": 3000,

"label": "Websocket",

"protocol": "tcp",

"ui": false

},

"8091": {

"description": "This is the UI for the JS server configuration.",

"host_default": 8091,

"label": "JS server UI",

"protocol": "tcp",

"ui": true

}

},

"volumes": {

"/usr/src/app/store": {

"description": "Choose a Share for configuration. E.g.: create a Share called zwave-config for this purpose alone.",

"label": "Zwave-Config Share"

}

}

}

}

}

}

7-Dec-24: updated with corrected json definition

I believe ZWAVEJS_EXTERNAL_CONFIG will only come into play if you want to define your own name for the configuration database. I would try it without this first. The secret key should not be necessary either, but I could be wrong.

Once you also define the HA container in this json, you can put the network block anywhere in the json file (e.g. before the containers definitions).

If you decide to use the existing HA Rockon and add the zwave one, then you would manually create a new docker network (using the Rockstor WebUI) and then add both containers to the newly created one.

I would first just try to get the zwave server to run by itself (I assume its own UI will allow you to tell whether the stick is recognized and accessible), before creating the multi-container version of HA …

@Hooverdan thanks for the review and feedback. I think the secret key will only be an issue for folks with an existing HA installation. I’ve been playing around with the Windows version, and when I installed it I left the secret key blank and the server created one. Now if I want to move to a new server, I think I need to provide that key, or have it create a new one and then reintegrate all my devices. At least that’s my assumption based on my very rudimentary knowledge so far. It’s probably not a must-have for now, but probably a good feature for the RockOn long term. I’ll update when I find some time to experiment.

@Hooverdan I finally got a chance to play with this RockOn again. I took the json in your previous post and added it to my local metastore with the name Zwave-server.json, but when I updated the rockon list the Rockstor system got very unhappy and threw the following error. I’ve looked at it, but not sure where to start. The system froze for a while, but then went back to working with no intervention from me.

    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 111, in post
        self._create_update_meta(r, rockons[r])
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner
        return func(*args, **kwargs)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta
        self._update_device(co, c_d)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 397, in _update_device
        defaults = {'description': ccd_d['description'],
    KeyError: 'description'

I removed the new json and ran an update again and it looks like the same error.

    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 111, in post
        self._create_update_meta(r, rockons[r])
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner
        return func(*args, **kwargs)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta
        self._update_device(co, c_d)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 397, in _update_device
        defaults = {'description': ccd_d['description'],
    KeyError: 'description'

Finally, I removed my previous HA json from the metastore and ran one more update: same error. I was going to default to the universal solution and reboot, but thought I’d seek guidance first.

Any suggestions on what if anything I should change?

Thanks

Del

Do you have any custom json files left in the rockons-metastore directory? If so, remove them all and run the update to see whether that remedies the situation …

The metastore folder is empty, but same error.

UPDATE: @Hooverdan Found the problem. Loose nut behind the keyboard! LOL

Went back, ran ls -a, and found a .json file in the directory. Removed it and the update ran fine. Now I’ll go put the json files back and try again.
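
For anyone hitting the same thing: a plain ls hides dot-files, so a stray file named just .json is easy to miss. Below is a minimal Python sketch of the same check. It assumes nothing about Rockstor beyond the metastore being an ordinary directory; list_json_files is a hypothetical helper, and the demo runs against a temporary directory rather than the real /opt/rockstor/rockons-metastore.

```python
import os
import tempfile


def list_json_files(directory):
    """Return every *.json entry in directory, including hidden dot-files.

    os.listdir() does not skip names that start with a dot, unlike a plain ls.
    """
    return sorted(name for name in os.listdir(directory) if name.endswith(".json"))


# Demo in a throwaway directory (file names are illustrative only).
demo_dir = tempfile.mkdtemp()
for name in (".json", "Zwave-server.json", "notes.txt"):
    open(os.path.join(demo_dir, name), "w").close()

print(list_json_files(demo_dir))
```

On a real system the function would be pointed at the metastore path instead of demo_dir.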

@Hooverdan One more update. Rockons update with empty folder runs fine. Put the Zwave json back and got the same error

    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 111, in post
        self._create_update_meta(r, rockons[r])
      File "/opt/rockstor/eggs/Django-1.8.16-py2.7.egg/django/utils/decorators.py", line 145, in inner
        return func(*args, **kwargs)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 310, in _create_update_meta
        self._update_device(co, c_d)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon.py", line 397, in _update_device
        defaults = {'description': ccd_d['description'],
    KeyError: 'description'

Renamed it to get rid of the - in the name, just in case; still no luck.

Removed the Zwave json, and put the old HA json file back and the update ran without error.

Although I’ve noticed the update didn’t add the HA package to the list, but it’s in the folder.

    [root@jonesville rockons-metastore]# ls -a /opt/rockstor/rockons-metastore
    .  ..  Home-Assistant-with-USB.json

@D_Jones well, if one doesn’t count all of the brackets correctly, and it even passes the JSON linter …

There was one bracket missing around the device section and one too many at the end of the file, so I didn’t catch it.

I updated the above post containing the Rockon definition. This time I test-loaded it into a VM to see whether anything was failing or not, and it has now taken it without error.

I also put it up on github, if that’s easier for you, in this test branch:

Hope, that will get you going … and if it does work (with whatever changes you still have to make to it), see whether you want to present a Pull Request to the rockon-registry.
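
A note on the KeyError: 'description' tracebacks earlier in the thread: they come from _update_device reading ccd_d['description'], so a definition that is valid JSON but has something mis-nested under "devices" can pass a linter and still fail on update. Here is a minimal pre-check sketch in Python. It assumes only the layout used in the definitions posted above (Rock-on name, then "containers", then "devices"); find_devices_missing_description is a hypothetical helper, not part of Rockstor.

```python
import json


def find_devices_missing_description(rockon_json_text):
    """Return (rockon, container, device) triples whose device entry has no 'description'."""
    definition = json.loads(rockon_json_text)
    problems = []
    for rockon_name, rockon in definition.items():
        containers = rockon.get("containers", {}) if isinstance(rockon, dict) else {}
        for container_name, container in containers.items():
            devices = container.get("devices", {}) if isinstance(container, dict) else {}
            for device_name, device in devices.items():
                if not isinstance(device, dict) or "description" not in device:
                    problems.append((rockon_name, container_name, device_name))
    return problems


# Illustrative input: "ports" has slipped inside "devices" because of a misplaced bracket.
misnested = """{"Demo": {"containers": {"c": {"devices": {
  "/dev/zwave": {"description": "stick", "label": "Stick"},
  "ports": {"3000": {"label": "Websocket"}}
}}}}}"""

print(find_devices_missing_description(misnested))
```

An empty list means every device entry carries a description; anything else points at the block to re-check for bracket placement.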

@Hooverdan Thanks for the json. I put it in the metastore and the update ran without a problem. Then I tried to install it and got an error.

Failed to install in the previous attempt. Here’s how you can proceed.

Check logs in /opt/rockstor/var/log for clues.

Install again.

If the problem persists, post on the Forum or email support@rockstor.com

I pulled the end of the rockstor log and got the following

    [root@jonesville log]# tail rockstor.log
    [07/Dec/2024 17:38:04] ERROR [storageadmin.views.rockon_helpers:130] Error running a command. cmd = /usr/bin/docker pull zwavejs/zwave-js-ui:latest. rc = 1. stdout = ['']. stderr = ['Error response from daemon: missing signature key', '']
    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon_helpers.py", line 127, in install
        generic_install)(rockon)
      File "/opt/rockstor/src/rockstor/storageadmin/views/rockon_helpers.py", line 242, in generic_install
        run_command([DOCKER, 'pull', image_name_plus_tag], log=True)
      File "/opt/rockstor/src/rockstor/system/osi.py", line 176, in run_command
        raise CommandException(cmd, out, err, rc)
    CommandException: Error running a command. cmd = /usr/bin/docker pull zwavejs/zwave-js-ui:latest. rc = 1. stdout = ['']. stderr = ['Error response from daemon: missing signature key', '']

I see the missing signature key error, but not sure what signature key they’re looking for.

Any thoughts?

Thanks again,

Del

I just ran the pull command by itself using the command prompt:

/usr/bin/docker pull zwavejs/zwave-js-ui:latest

and it pulled the image without issue for my installation (5.0.15-0 on Leap 15.6)

What version of docker do you have running (docker --version), and what version of Rockstor for that matter (you might have posted it further up, but I can’t find it right now)?

Otherwise, it would indicate a missing docker image signature, which, if DCT (Docker Content Trust) is being enforced, would not be allowed to be pulled. This can be temporarily disabled (I have not done this myself, though).

It’s probably a Rockstor/docker version issue. I’m on Rockstor version: 3.9.2-57 and Docker version 17.09.0-ce, build afdb6d4. I tried to make the Leap to the SUSE version, but ran into pool import problems so fell back to the old install and haven’t had the time to try again. Not sure if I can update my docker version from the CLI without breaking other things in this Rockstor version, or if I should just table this project until I make the switch to the current version of Rockstor.

I’ve been thinking about building a 2nd Rockstor server on the openSUSE version, maybe on a Pi with a SATA HAT to try and be more power efficient, and once I have a full-time backup server, trying to migrate my existing one again.

Highly recommended to move to the OpenSUSE build, for sure.

But, if you don’t want to lose momentum, you could try to temporarily disable the DCT

export DOCKER_CONTENT_TRUST=0

and just run the pull command to see whether that allows you to get the image:

/usr/bin/docker pull zwavejs/zwave-js-ui:latest

If that works, you can try to install the Rockon again and see whether you run into more issues.

However, ensure that you re-enable the trust setting once your installation/testing is complete by running:

export DOCKER_CONTENT_TRUST=1

Thanks for the suggestion, but it doesn’t look like it worked.

[root@jonesville log]# export DOCKER_CONTENT_TRUST=0

[root@jonesville log]# /usr/bin/docker pull zwavejs/zwave-js-ui:latest

Error response from daemon: missing signature key

So I set the trust flag on again.

Was there any need to restart docker after changing the trust flag to 0?

To be honest, no idea. Could be that you need to restart the docker service, but not sure.

I think I may have reached the limit of my docker version.

I tried changing the trust flag again with a restart, and still received the error.

Tried this option as well, with no luck.

[root@jonesville log]# /usr/bin/docker pull --disable-content-trust zwavejs/zwave-js-ui:latest

Error response from daemon: missing signature key

Doing some research on the error, I found a reference to a v2 schema being introduced by Docker with version 18.06, with older versions no longer able to pull images. Since I’m on 17.09, I think this may be my issue. I think this project might have to go on hold until I have time to upgrade my RockStor install. Open to opinions on what happens to my RockStor CentOS install if I update Docker from the command line.
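
To check a host against the 18.06 figure mentioned above (taken from that research, not verified here), the output of docker --version can be parsed and compared. This is a minimal Python sketch that assumes the output looks like the "Docker version 17.09.0-ce, build afdb6d4" string quoted earlier; parse_docker_version and is_older_than are hypothetical helper names.

```python
import re


def parse_docker_version(version_output):
    """Extract (major, minor, patch) from a `docker --version` style string."""
    match = re.search(r"Docker version (\d+)\.(\d+)\.(\d+)", version_output)
    if match is None:
        raise ValueError("unrecognized docker --version output: %r" % version_output)
    return tuple(int(part) for part in match.groups())


def is_older_than(version_output, minimum=(18, 6, 0)):
    """True if the reported version sorts below `minimum` (assumed threshold, see above)."""
    return parse_docker_version(version_output) < minimum


print(is_older_than("Docker version 17.09.0-ce, build afdb6d4"))  # True
```

Tuples compare element by element, so 17.09.0 sorts below 18.06.0 without any string handling of the leading zeros.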

That’s unfortunate that it didn’t work.

Have you found instructions to get Docker to a recent release on CentOS 7? The risk to the existing docker images (i.e. Rockons) is probably fairly low, since no docker build activity is done with any of these. However, if something does get messed up, you are likely looking at a reinstall. Your data pools won’t be affected by it, but it still means that things like HA might not be operable until the system is fixed. So, I would recommend not doing it. If you do, be sure to take a settings backup via the Rockstor WebUI and store it on another machine, so that if you have to reinstall (or actually move to the next-gen Rockstor), you won’t have to set everything up from scratch. Note, though, that there was a recent fix for a failure to re-import NFS shares; I’m not sure whether that problem existed in the CentOS version (likely, though).

I would consider attempting to update Rockstor to a version built on openSUSE. Do you remember what pool import issues you had? Do you occasionally run a scrub on your current pool? And, before you restore everything (see the config backup comment I made), ensure you’re on the latest test channel to begin with, so all shares are restored appropriately.

@Hooverdan Thanks for all your help with this. I considered a Docker update, but I’m afraid of potential ripple effects. The only other RockOn I run is OpenVPN, but I can’t risk living without that. My past attempt to upgrade to openSUSE was diagnosed as an unwell pool in need of a scrub and balance, which I did, but I haven’t tried another migration since.

That attempt is cataloged here.

By now I think I’ll pull down a new install image and start over. Hopefully I can find time before the end of the year.