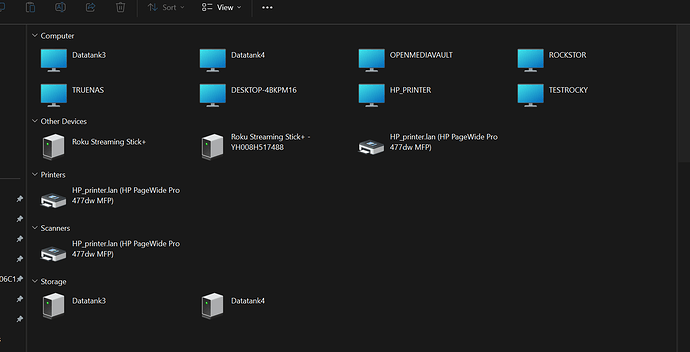

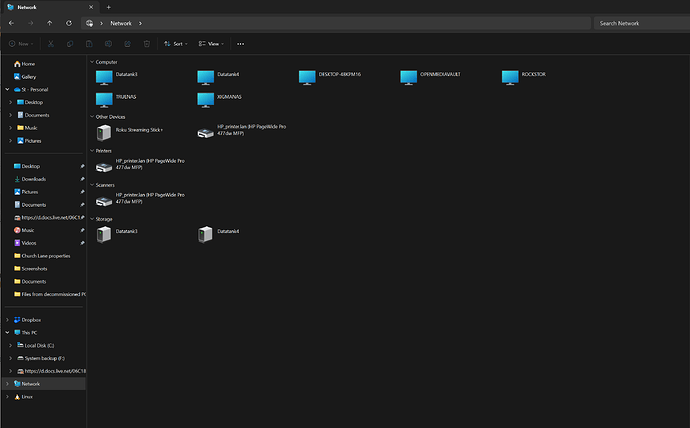

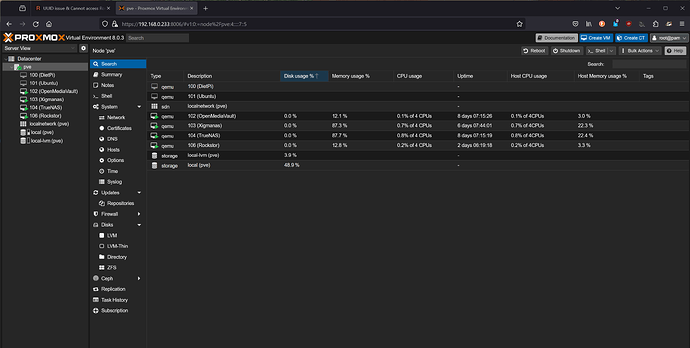

I have installed Proxmox VE and then installed Rockstor as a VM.

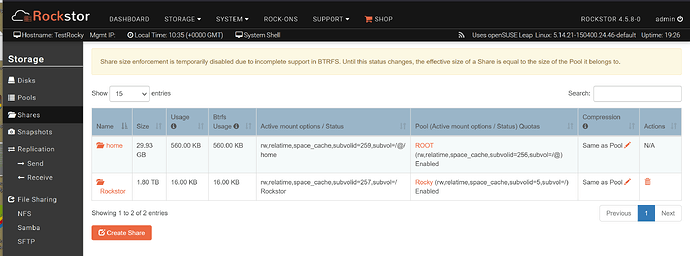

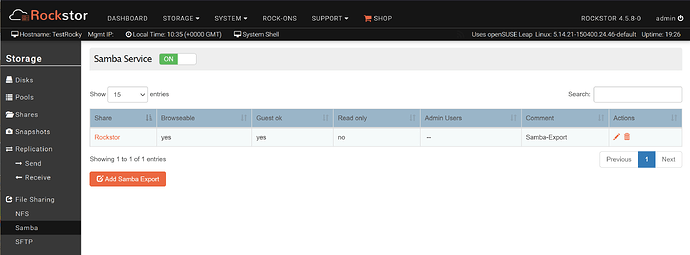

I have set up a share. I can see the network folder in Windows. When I click on it, I get a new folder with the name of my share. When I click on that folder, I am asked for credentials despite having enabled Guest access and Browsing.

I have checked my credentials in Win 11 and deleted them in case of any legacy issues.

I have tried changing the permissions to 777 in the Access control tab of the Share menu.

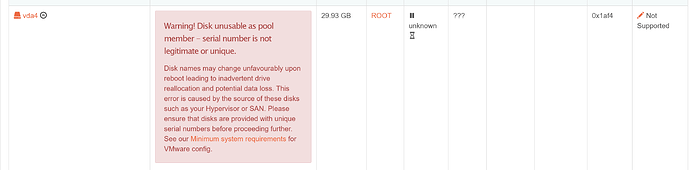

I also have another issue: Rockstor reports non-unique UUIDs (and reports VMware rather than Proxmox VE) on its swap, data and OS partitions. These are on an NVMe M.2 drive that also hosts 5 other VMs.

The share is on a Crucial 2 TB SSD and there are no UUID problems reported on this share. The entire SSD is passed through to Rockstor.
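
To cross-check the non-unique UUID report from inside the VM, something like the following could be used. It is only a rough sketch: it assumes the JSON produced by `lsblk -J -o NAME,UUID` has been loaded into a dict, and the device names and UUIDs in `sample` are made up for illustration, not taken from my system.

```python
from collections import defaultdict


def duplicate_uuids(lsblk):
    """Return {uuid: [device names]} for every UUID seen on more than one device.

    `lsblk` is the parsed JSON from `lsblk -J -o NAME,UUID` (assumed layout:
    a top-level "blockdevices" list whose entries may carry "children").
    """
    seen = defaultdict(list)

    def walk(devices):
        for dev in devices:
            uuid = dev.get("uuid")
            if uuid:  # whole disks usually report no UUID
                seen[uuid].append(dev["name"])
            walk(dev.get("children") or [])

    walk(lsblk.get("blockdevices", []))
    return {uuid: names for uuid, names in seen.items() if len(names) > 1}


# Hypothetical sample data, for illustration only.
sample = {
    "blockdevices": [
        {"name": "sda", "uuid": None, "children": [
            {"name": "sda1", "uuid": "0000-AAAA"},
            {"name": "sda2", "uuid": "1111-BBBB"},
        ]},
        {"name": "sdb", "uuid": None, "children": [
            {"name": "sdb1", "uuid": "1111-BBBB"},
        ]},
    ]
}

print(duplicate_uuids(sample))
```

An empty result would suggest the UUIDs are unique as seen by the guest, in which case the warning may be about something else, such as the device serial.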

Web-UI screenshot

Error Traceback provided on the Web-UI

    Traceback (most recent call last):
      File "/opt/rockstor/src/rockstor/rest_framework_custom/generic_view.py", line 41, in _handle_exception
        yield
      File "/opt/rockstor/src/rockstor/storageadmin/views/share_acl.py", line 60, in post
        chown(mnt_pt, options["owner"], options["group"], options["orecursive"])
      File "/opt/rockstor/src/rockstor/system/acl.py", line 34, in chown
        return run_command(cmd)
               ^^^^^^^^^^^^^^^^
      File "/opt/rockstor/src/rockstor/system/osi.py", line 263, in run_command
        raise CommandException(cmd, out, err, rc)
    system.exceptions.CommandException: Error running a command. cmd = /usr/bin/chown -R root:root /mnt2/Rocky. rc = 1. stdout = ['']. stderr = ["/usr/bin/chown: changing ownership of '/mnt2/Rocky/containers/de1746be5ebbcfb1fd54b3047708fd2f59d0ac7e4477cba569053247f04690bc/mounts/secrets/suse_745d038b37ff083570b5bddc84209bab0e7743e54fc0502fb2708caeba7e4636_credentials.d': Read-only file system", "/usr/bin/chown: changing ownership of '/mnt2/Rocky/containers/de1746be5ebbcfb1fd54b3047708fd2f59d0ac7e4477cba569053247f04690bc/mounts/secrets': Read-only file system", '']
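
To make the error above easier to read, here is a small sketch that pulls the failing paths and reasons out of the `stderr` list. The regular expression and the `failed_paths` helper are my own, not part of Rockstor; the pattern assumes chown's `changing ownership of '<path>': <reason>` message format as it appears in the traceback.

```python
import re

# Assumed message shape, taken from the stderr lines in the traceback.
CHOWN_ERR = re.compile(r"^\S+: changing ownership of '(?P<path>.+)': (?P<reason>.+)$")


def failed_paths(stderr_lines):
    """Return (path, reason) pairs for each chown failure; skip other lines."""
    failures = []
    for line in stderr_lines:
        match = CHOWN_ERR.match(line)
        if match:
            failures.append((match.group("path"), match.group("reason")))
    return failures


# The stderr list exactly as reported in the traceback.
stderr = [
    "/usr/bin/chown: changing ownership of '/mnt2/Rocky/containers/de1746be5ebbcfb1fd54b3047708fd2f59d0ac7e4477cba569053247f04690bc/mounts/secrets/suse_745d038b37ff083570b5bddc84209bab0e7743e54fc0502fb2708caeba7e4636_credentials.d': Read-only file system",
    "/usr/bin/chown: changing ownership of '/mnt2/Rocky/containers/de1746be5ebbcfb1fd54b3047708fd2f59d0ac7e4477cba569053247f04690bc/mounts/secrets': Read-only file system",
    "",
]

for path, reason in failed_paths(stderr):
    print(f"{reason}: {path}")
```

Both failures are under `/mnt2/Rocky/containers/.../mounts/secrets`, so the recursive chown appears to stop only on that read-only `secrets` path rather than on the share as a whole.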