This document represents the current and pending state of bcache in Rockstor. It is not an endorsement of its use or a statement of its support status; it is simply intended to share the progress and development made to date. It is intended as a developers' document, so any changes made should keep this text up to date with the current code state, including that of any pending PRs.

If you wish to comment on bcache support in general, as opposed to the specifics of what is actually in play currently, please consider contributing to the following forum thread: SSD Read / Write Caching. Comments and edits here are welcomed in the context of code or development contributions only, as this is intended as a current state-of-play document.

What is bcache

The in-kernel bcache system provides the facility to use a faster device, such as an SSD, to cache the reads and writes of one or more slower devices, such as an HDD. This is not currently a supported feature of Rockstor and, if implemented via the command line, can lead to some confusing and misleading reports from Rockstor’s user interface. However, some of these ‘confusions’ can be avoided by using the udev additions / configurations detailed in this post.

Thanks to @ghensley for his encouragement and assistance in this area.

The rules as presented are only trivially altered from those presented by @ghensley during my (@phillxnet) ‘behind the scenes’ schooling from @ghensley.

Required udev rules

First the following file must be added:

/etc/udev/rules.d/99-bcache-by-id.rules:

with the following contents:

# Create by-id symlinks for bcache-backed devices based on the bcache cset UUID.
# Also, set a device serial number so Rockstor accepts it as legit.
DEVPATH=="/devices/virtual/block/bcache*", \
    IMPORT{program}="bcache_gen_id $devpath"

DEVPATH=="/devices/virtual/block/bcache*", ENV{ID_BCACHE_BDEV_PARTN}=="", \
    SYMLINK+="disk/by-id/bcache-$env{ID_BCACHE_BDEV_MODEL}-$env{ID_BCACHE_BDEV_SERIAL}", \
    ENV{ID_SERIAL}="bcache-$env{ID_BCACHE_BDEV_FS_UUID}"

DEVPATH=="/devices/virtual/block/bcache*", ENV{ID_BCACHE_BDEV_PARTN}!="", \
    SYMLINK+="disk/by-id/bcache-$env{ID_BCACHE_BDEV_MODEL}-$env{ID_BCACHE_BDEV_SERIAL}-part$env{ID_BCACHE_BDEV_PARTN}", \
    ENV{ID_SERIAL}="bcache-$env{ID_BCACHE_BDEV_FS_UUID}-p$env{ID_BCACHE_BDEV_PARTN}"

This file in turn depends upon a ‘helper’ script:

/usr/lib/udev/bcache_gen_id:

containing:

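#!/bin/bash
# Helper for 99-bcache-by-id.rules: prints KEY=value pairs for udev to import.

# Fall back to the first argument when DEVPATH is not set in the environment.
[ -z "$DEVPATH" ] && DEVPATH="$1"

# Resolve the bcache sysfs directory, which lives under the backing device.
BCACHE_DEVPATH=$(readlink -f "/sys/$DEVPATH/bcache")
[ -z "$BCACHE_DEVPATH" ] && exit 1

# The 'cache' link points at the cache set; its directory name is the cset UUID.
echo "ID_BCACHE_CSET_UUID=$(basename "$(readlink -f "$BCACHE_DEVPATH/cache")")"

# Query udev for the properties of the backing device (parent of the bcache dir).
BDEV_PROPERTIES=$(udevadm info -q property "$(dirname "$BCACHE_DEVPATH")")

# Re-export the backing device properties of interest as ID_BCACHE_BDEV_* keys.
echo "$BDEV_PROPERTIES" | awk -F= -f <(cat <<-'EOF'
/^ID_MODEL=/ {
    print "ID_BCACHE_BDEV_MODEL="$2
}
/^ID_SERIAL_SHORT=/ {
    print "ID_BCACHE_BDEV_SERIAL="$2
}
/^PARTN=/ {
    print "ID_BCACHE_BDEV_PARTN="$2
}
/^ID_FS_UUID=/ {
    print "ID_BCACHE_BDEV_FS_UUID="$2
}
EOF
)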

To make this file executable we do:

chmod a+x /usr/lib/udev/bcache_gen_id
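The property mapping done by the helper can be tried without any bcache hardware. The following is a minimal sketch only: `map_bdev_properties` and `sample_properties` are hypothetical names that are not part of Rockstor or bcache-tools, and the sample values (including the UUID) are made up to resemble `udevadm info -q property` output for a whole-disk backing device.

```shell
#!/bin/bash
# Hypothetical helper: applies the same awk mapping as bcache_gen_id
# to udev property text read from stdin.
map_bdev_properties() {
    awk -F= '
        /^ID_MODEL=/        { print "ID_BCACHE_BDEV_MODEL="   $2 }
        /^ID_SERIAL_SHORT=/ { print "ID_BCACHE_BDEV_SERIAL="  $2 }
        /^PARTN=/           { print "ID_BCACHE_BDEV_PARTN="   $2 }
        /^ID_FS_UUID=/      { print "ID_BCACHE_BDEV_FS_UUID=" $2 }
    '
}

# Made-up sample properties for a whole-disk backing device (so no PARTN line).
sample_properties() {
    cat <<'EOF'
DEVNAME=/dev/sdb
ID_MODEL=QEMU_HARDDISK
ID_SERIAL=QEMU_HARDDISK_bcache-bdev-1
ID_SERIAL_SHORT=bcache-bdev-1
ID_FS_UUID=3efb3830-fee1-4a9e-a5c6-ea456bfc269e
EOF
}

# Print the keys that the udev rules would import for this sample device.
sample_properties | map_bdev_properties
```

As the sample has no PARTN line, no ID_BCACHE_BDEV_PARTN key is printed, which is the case the first (whole disk) SYMLINK rule matches.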

Why these rules

In the above, the serial attributed to a virtual bcache device is based on the fs UUID of its associated backing device, as this seemed to be a robust solution. The reasoning is that if an image of a bcache backing device were moved from one physical device to another then, as I understand it, the new physical device would take the place of its predecessor. So if we build the virtual device's serial from the UUID of the backing device, which is presumably derived from the attributes that make-bcache -B writes to its early sectors, the virtual bcache device (e.g. /dev/bcache0) will remain correctly associated. A re-naming of the backing device would obviously take place, but Rockstor can handle device re-names as long as the serial remains the same, which it should if we use the lsblk reported fstype and UUID of the backing device.

This way we also have more information available: the virtual device's by-id name already contains the backing device's serial, so we might as well get something more from the serial itself, i.e. the link to the software superblock of the backing device. Meanwhile the backing device is tracked by its own serial.
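To make the naming scheme concrete, here is a small illustrative sketch of the strings the two SYMLINK / ID_SERIAL rules produce. udev does this substitution itself; `bcache_names` is a hypothetical function written only for this illustration, and the UUID used is made up.

```shell
#!/bin/bash
# Hypothetical illustration of the rule substitutions, not used by Rockstor.
# Arguments: backing device model, short serial, fs UUID, optional partition number.
bcache_names() {
    local model=$1 serial=$2 fs_uuid=$3 partn=${4:-}
    if [ -z "$partn" ]; then
        # Whole-disk backing device: matches ENV{ID_BCACHE_BDEV_PARTN}==""
        echo "SYMLINK=disk/by-id/bcache-${model}-${serial}"
        echo "ID_SERIAL=bcache-${fs_uuid}"
    else
        # Partition backing device: matches ENV{ID_BCACHE_BDEV_PARTN}!=""
        echo "SYMLINK=disk/by-id/bcache-${model}-${serial}-part${partn}"
        echo "ID_SERIAL=bcache-${fs_uuid}-p${partn}"
    fi
}

# Example with made-up values for a whole-disk backing device.
bcache_names QEMU_HARDDISK bcache-bdev-1 3efb3830-fee1-4a9e-a5c6-ea456bfc269e
```

Note how the by-id name carries the backing device's hardware serial while ID_SERIAL carries its fs UUID, so the two identifiers track different things.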

Building bcache

Although bcache’s kernel component is included in Rockstor’s elrepo ml kernel (but not in our CentOS installer’s kernel, due to its age), some userland tools are also required. There are Fedora packages available, linked to from the maintained main page for bcache; the link is reproduced here for convenience.

http://pkgs.fedoraproject.org/cgit/rpms/bcache-tools.git/

It is intended that an unofficial retargeted rpm including the above udev modifications be made available for those wishing to trial this unsupported element of Rockstor’s development. If you fancy rolling this rpm before I (@phillxnet) get around to having a go then do please update this wiki with your efforts. For the time being only a ‘built from source’ approach has been trialed:

cd

wget https://github.com/g2p/bcache-tools/archive/master.zip

yum install unzip

unzip master.zip

cd bcache-tools-master/

From the README, the components that are to be built are:

- make-bcache

- bcache-super-show

- udev rules

“The first half of the rules do auto-assembly and add uuid symlinks to cache and backing devices. If util-linux’s libblkid is sufficiently recent (2.24) the rules will take advantage of the fact that bcache has already been detected. Otherwise they call a small probe-bcache program that imitates blkid.”

yum list installed | grep libblkid

libblkid.x86_64 2.23.2-26.el7_2.2 @anaconda/3
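The installed 2.23.2 is older than the 2.24 mentioned in the README, so on this system the rules would take the probe-bcache route. A rough sketch of making that comparison in a script follows; `version_at_least` is a hypothetical helper and it assumes GNU sort with its -V (version sort) option.

```shell
#!/bin/bash
# Hypothetical helper: true when version $1 is >= version $2.
# Assumes GNU sort, whose -V option orders version strings.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# 2.23.2 is the libblkid version shown in the yum output above.
if version_at_least "2.23.2" "2.24"; then
    echo "libblkid can detect bcache itself"
else
    echo "libblkid older than 2.24: rules fall back to probe-bcache"
fi
```

The installed version could instead be read with something like rpm -q --qf '%{VERSION}' libblkid and passed in as the first argument.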

Install the bcache build prerequisites:

yum install libblkid-devel

then

make

make install

Back up the existing initramfs:

cp /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).img.bak

If we are running the same kernel version as the one we will boot into:

dracut -f

Otherwise we need to specify the kernel version and arch.
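For the different-kernel case, dracut accepts the output image and the kernel version as positional arguments, and on CentOS the kernel version string (as listed under /lib/modules) already ends in the arch. The sketch below only prints the command it would suggest rather than running it; `dracut_cmd_for` is a hypothetical helper and the version shown is just an example, not necessarily the one installed.

```shell
#!/bin/bash
# Hypothetical helper: print (not run) the dracut command for a given kernel.
# $1 is a kernel version string as found under /lib/modules, arch included.
dracut_cmd_for() {
    local kver=$1
    printf 'dracut -f /boot/initramfs-%s.img %s\n' "$kver" "$kver"
}

# Example kernel version only; substitute the one you intend to boot into.
dracut_cmd_for "4.6.0-1.el7.elrepo.x86_64"
```

Running ls /lib/modules shows the kernel versions available to choose from.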

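Finally we run the following to take account of the initramfs changes at boot (without its -o option, grub2-mkconfig only prints the generated config to stdout rather than writing it):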

grub2-mkconfig

Once the kernel module is loaded (auto upon device being found or via modprobe bcache) there should be a:

/sys/fs/bcache/

directory.
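A quick scripted check for this directory might look like the following sketch. `bcache_available` is a hypothetical name, and the optional argument exists only so the check can be pointed at a different sysfs root when testing.

```shell
#!/bin/bash
# Hypothetical helper: succeeds when the bcache sysfs directory exists.
# $1 optionally overrides the sysfs root (defaults to /sys).
bcache_available() {
    local sysfs_root=${1:-/sys}
    [ -d "$sysfs_root/fs/bcache" ]
}

if bcache_available; then
    echo "bcache module is loaded"
else
    echo "bcache module not loaded; try: modprobe bcache"
fi
```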

Also note that changes / additions to the udev rules are often only put into effect after a:

udevadm trigger
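If edited rules do not seem to be picked up, a udevadm control --reload-rules before the trigger may also help. Afterwards the by-id symlinks created by the rules above can be listed to confirm they took effect. The following is a sketch under the assumption that the links live in /dev/disk/by-id with a bcache- prefix, as per the SYMLINK rules; `list_bcache_links` is a hypothetical helper.

```shell
#!/bin/bash
# Hypothetical helper: list bcache-* symlinks and their targets.
# $1 optionally overrides the by-id directory (defaults to /dev/disk/by-id).
list_bcache_links() {
    local by_id_dir=${1:-/dev/disk/by-id}
    local link
    for link in "$by_id_dir"/bcache-*; do
        # Skip the unexpanded glob pattern when nothing matches.
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "${link##*/}" "$(readlink "$link")"
    done
}

# Prints nothing when no bcache by-id links exist yet.
list_bcache_links
```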

Relevant proposed changes in Rockstor

The udev additions previously covered along with proposed changes to the disk management sub-system in pr:

should mean that Rockstor is able to recognise all bcache real and virtual devices. Only very limited testing has been done in this area, but in an arrangement of a single caching device serving 2 bcache backing devices, all associated devices were correctly presented within the user interface.

N.B. all devices were whole disk and no accommodation or testing was made for partitions in the above pr re bcache.

In the above pr the following command was used to setup a single device to cache 2 other devices in a KVM setup. The VM device serials were chosen to aid in identifying the proposed type of the devices.

make-bcache -C /dev/disk/by-id/ata-QEMU_HARDDISK_bcache-cdev -B /dev/disk/by-id/ata-QEMU_HARDDISK_bcache-bdev-1 /dev/disk/by-id/ata-QEMU_HARDDISK_bcache-bdev-2

And resulted in the following disk / device identifications:

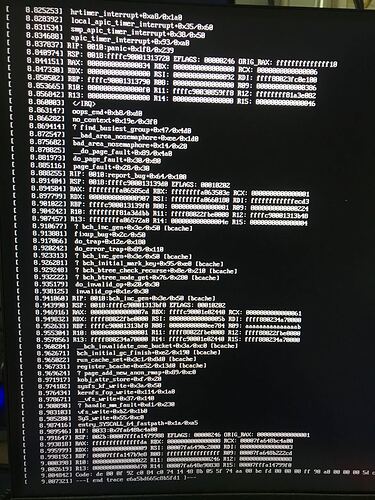

In the above image the padlocks indicate that the bcache virtual devices have been used as LUKS containers, simply because this was a more pressing focus for the disk management subsystem. Normally they would appear as regular ‘ready to use’ Rockstor devices.
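Independently of the user interface, the result of the make-bcache command above can be checked from the command line, as each virtual bcache device exposes a bcache/state file in sysfs (reporting, for example, clean or dirty once a cache is attached). The following is a minimal sketch; `bcache_states` is a hypothetical helper and its optional argument is only there to allow testing against a fake sysfs tree.

```shell
#!/bin/bash
# Hypothetical helper: print the state of every virtual bcache device.
# $1 optionally overrides the sysfs root (defaults to /sys).
bcache_states() {
    local sysfs_root=${1:-/sys}
    local state_file dev
    for state_file in "$sysfs_root"/block/bcache*/bcache/state; do
        # Skip the unexpanded glob pattern when no bcache devices exist.
        [ -r "$state_file" ] || continue
        dev=${state_file%/bcache/state}
        printf '%s: %s\n' "${dev##*/}" "$(cat "$state_file")"
    done
}

# In the 2 backing device arrangement above this should list bcache0 and bcache1.
bcache_states
```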