@bhsramz Hello again.

This is a rather old version of Rockstor, although your previous forum thread indicated a stable channel version of 3.9.2-30.

But you also mention re-installing on a suspect system disk, so maybe that is your motivation for not pushing your luck with a drive-intensive OS update (which a Rockstor update would bring with it).

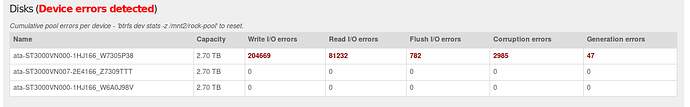

I would advise moving back to the Stable release, especially given the following new capability on the pool details page, added in 3.9.2-35 (assuming your system disk is not suspect):

(The above raid1 pool was able to repair itself in a single subsequent scrub operation, suspected cause: interconnects or controller hw/driver hang)

to address issue:

Which should help to identify which drive is causing your io errors.

That UI element gets its info from the output of the following command (with your homeshare pool name):

btrfs dev stats /mnt2/homeshare
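If that output is long, a small filter can make the failing device stand out. The following is only a sketch: the helper name `nonzero_dev_stats` is made up for illustration, and it assumes the usual `[/dev/sdX].counter_name   value` line layout that `btrfs dev stats` prints.

```shell
# Hypothetical helper: keep only the counters that are not zero.
# Reads `btrfs dev stats` output on stdin, one counter per line, e.g.
#   [/dev/sda].write_io_errs    0
nonzero_dev_stats() {
    # $2 is the counter value; pass the line through only if it is a number > 0.
    awk '$2 ~ /^[0-9]+$/ && $2 > 0 { print }'
}

# Usage (as root), with the pool mount point from this thread:
#   btrfs dev stats /mnt2/homeshare | nonzero_dev_stats
```

An empty result would mean no device has logged errors since the counters were last reset; please still paste the full unfiltered output here.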

You could paste that output here along with an:

ls -la /dev/disk/by-id

as your current pool details page is unable to display the temp canonical to by-id name mapping.
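For reading that listing, the mapping can also be printed one pair per line. This is a rough sketch, not what Rockstor does internally: the function name `map_by_id` is an assumption for illustration, and it relies on `readlink -f` being available (it is in GNU coreutils).

```shell
# Hypothetical helper: print "by-id name -> canonical device" for each symlink.
# The directory defaults to /dev/disk/by-id but can be overridden.
map_by_id() {
    dir="${1:-/dev/disk/by-id}"
    for link in "$dir"/*; do
        # Skip anything that is not a symlink (or an unmatched glob).
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink -f "$link")"
    done
}

# Usage:
#   map_by_id
```

The plain `ls -la` output carries the same information, so either form is fine to paste.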

Your use of raid0, which has no redundancy, trades increased risk (with each disk added) for the gained space: if any one disk dies, it can take the entire pool with it. So with 4 disks in raid0 you are running roughly 4 times the risk associated with a single disk failure, but for the whole pool. Raid1 is a better bet all around, unless of course the primary concern is available space given the above.
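To put a rough number on that, here is a small worked example. It assumes disks fail independently and uses a made-up 5% chance of any one disk failing over some period; both are illustrative assumptions, not measured figures for your drives. The function name `pool_failure_risk` is likewise hypothetical.

```shell
# Chance that at least one of n disks fails, given per-disk probability p:
#   1 - (1 - p)^n
# In raid0 a single failure loses the pool, so this is the pool's risk.
pool_failure_risk() {
    awk -v p="$1" -v n="$2" 'BEGIN { printf "%.4f\n", 1 - (1 - p) ^ n }'
}

pool_failure_risk 0.05 1   # prints 0.0500 (single disk)
pool_failure_risk 0.05 4   # prints 0.1855 (4-disk raid0)
```

So the 4-disk figure comes out a little under 4 times the single-disk risk, which is why "n times the risk" works as a rule of thumb when the per-disk probability is small.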

Yes, this can happen with our current percentage reporting, but it may have been improved since that version of Rockstor.

Potentially. We definitely need more work in that area.

Balance times can be drastically improved by disabling quotas, but again only the stable channel can handle that.

3.9.1-0 was very weak on displaying errors / unmounted / degraded pool states. You may very well have issues that would be made clearer on a newer version given the above referenced issues and a number of others that have also been addressed.

Yes, execute the following command via ssh as the root user; it’s what Rockstor uses internally:

btrfs balance status /mnt2/homeshare

If, as I suspect, you have a dodgy drive, potentially indicated by the io (In/Out) errors, then your pool is in a rather precarious situation, and with raid0 it has no redundancy with which to repair itself.

I can’t spend much time on this but the output of the following commands may help others chip in:

btrfs fi show

and

btrfs fi usage /mnt2/homeshare

and

btrfs dev usage /mnt2/homeshare

The last of which I’m actually in the process of adding to Rockstor currently.
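If it is easier, the three commands above can be gathered into one block of text for pasting. This is just a convenience sketch; the function name `collect_pool_info` and the output file name are made up for illustration, and it needs to be run as root.

```shell
# Hypothetical helper: run the three diagnostic commands for one pool
# mount point and label each section so the output is easy to read.
collect_pool_info() {
    mnt="$1"
    echo "### btrfs fi show";         btrfs fi show 2>&1
    echo "### btrfs fi usage $mnt";   btrfs fi usage "$mnt" 2>&1
    echo "### btrfs dev usage $mnt";  btrfs dev usage "$mnt" 2>&1
}

# Usage, with the pool mount point from this thread:
#   collect_pool_info /mnt2/homeshare > pool-info.txt
```

Error messages are captured too (via `2>&1`), since those are often the most useful part on a pool with problems.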

That would be a very risky practice and would likely cause more problems, as I suspect you have a flaky disk and it would be wise to first identify which one it is. And if it is the newly added one then the situation is all the more precarious, especially given raid0. Raid0 is not a good choice in most scenarios except where the pool is disposable.

Then I would move to identify which is the problematic drive, using the above commands and by looking at each drive’s S.M.A.R.T info (see our S.M.A.R.T howto), running the drives’ self-tests, and checking their S.M.A.R.T error log entries thereafter. Once you have identified the problematic drive, physically remove it. At this point your pool is toast, as raid0 has no redundancy, so you will have to wipe the pool and all its remaining members and start over by creating a new pool from those remaining members. That way you are up and running the quickest.
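For the S.M.A.R.T part, the same checks can be done from the command line with `smartctl` (from smartmontools). The following is a sketch only: `smart_report` is a hypothetical helper name and `/dev/sdX` is a placeholder to be replaced with each pool member in turn.

```shell
# Hypothetical helper: print the health verdict, the error log and the
# self-test log for one drive. Run as root.
smart_report() {
    dev="$1"
    echo "### health: $dev";         smartctl -H "$dev"
    echo "### error log: $dev";      smartctl -l error "$dev"
    echo "### self-test log: $dev";  smartctl -l selftest "$dev"
}

# Usage: start a short self-test first, give it a few minutes to finish,
# then read the logs.
#   smartctl -t short /dev/sdX
#   smart_report /dev/sdX
```

A drive that logs new errors or fails its self-test while the others stay clean is the most likely candidate for removal.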

That’s right, a balance, once initiated, should resume where it left off during shutdown/reboot upon the next boot. But in your case it looks very much like you have a filesystem issue and/or a hardware issue. Again, command outputs (or a newer Rockstor version) should help to pin this down.

Hope that helps, and thanks for helping to support Rockstor via your stable channel subscription. I would advise actually using that subscription, as the stable channel updates are a significant improvement on non-updated iso installs, especially when things go wrong, i.e. drive / pool errors, which in turn can lead to unmounted pools or pools going read-only.