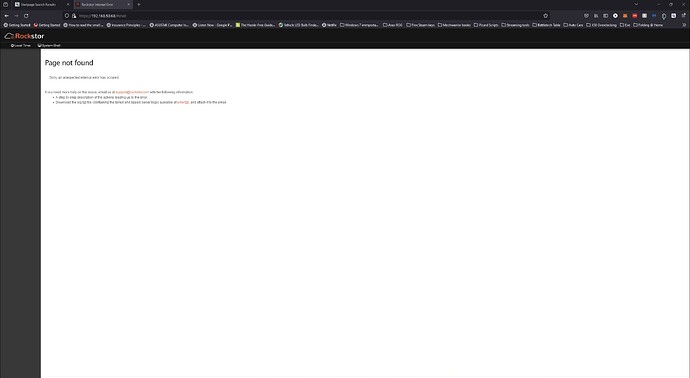

@ScottPSilver Re:

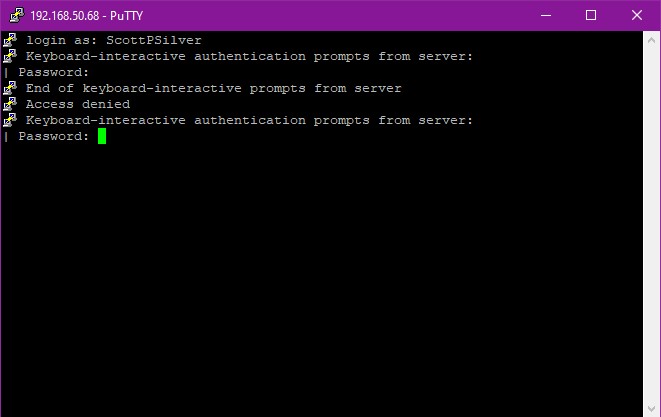

You need to use the ‘root’ user: the password for which was set up by you at this stage of our installer:

https://rockstor.com/docs/installation/installer-howto.html#enter-desired-root-user-password

The ‘root’ user is also the one required to execute many btrfs commands.

There is no choice within our installer to ‘define’ or choose the partition/size: only the OS drive to use. See:

“Select Installation Disk”: Rockstor’s “Built on openSUSE” installer — Rockstor documentation

So I don’t know what you mean there. We have a dedicated system drive with suggested minimum size of 16 GB and 32 GB recommended:

Minimum system requirements: Quick start — Rockstor documentation

- 16 GB drive dedicated to the Operating System (32 GB+ SSD recommended, 5 TB+ ignored by installer). See USB advisory.

Yours looks to be roughly 1/3rd of the minimum, and 1/6th of the recommended.
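To put numbers on that comparison, here is a minimal Python sketch. The 16 GB and 32 GB figures come from the requirements quoted above, and the 5 GB figure is the ROOT pool size reported in this thread. The function name `compare_to_requirements` is made up for illustration. Note that `shutil.disk_usage` only reports what the mounted filesystem sees, which on btrfs is an approximation, so treat this as a rough check and not a replacement for a full btrfs fi show.

```python
import shutil

MINIMUM_GB = 16      # minimum from the quick start doc quoted above
RECOMMENDED_GB = 32  # recommended size from the same doc


def compare_to_requirements(size_gb):
    """Return the size as a fraction of the minimum and recommended sizes."""
    return {
        "fraction_of_minimum": size_gb / MINIMUM_GB,
        "fraction_of_recommended": size_gb / RECOMMENDED_GB,
        "meets_minimum": size_gb >= MINIMUM_GB,
    }


# The 5 GB ROOT pool from this thread.
print(compare_to_requirements(5))

# The same check against whatever is mounted at "/" on the machine running this.
usage = shutil.disk_usage("/")
root_gb = usage.total / 10**9
print(f"/ is {root_gb:.1f} GB with {usage.free / 10**6:.0f} MB free")
print(compare_to_requirements(root_gb))
```

For 5 GB this works out to about 0.31 of the minimum and about 0.16 of the recommended size.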

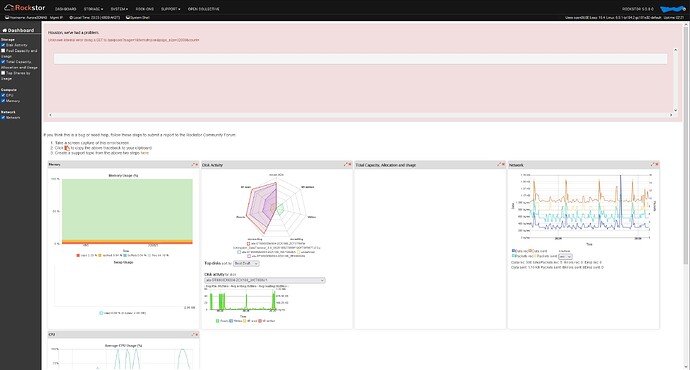

Hence you now have: < 3 MB of free space !!! And:

This would have been apparent to all here on the forum if you had reported a full output of btrfs fi show, which was requested by me on the very first day of this thread, and for several responses thereafter:
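For anyone unsure how to capture that output complete and unedited, here is a minimal Python sketch. It assumes it is run as the ‘root’ user on a machine with the btrfs tools installed; `btrfs fi show` is the short form of `btrfs filesystem show`. The helper name `capture_full_output` is hypothetical and only there for illustration.

```python
import subprocess


def capture_full_output(command):
    """Run a command and return everything it printed, without trimming."""
    try:
        result = subprocess.run(
            command, capture_output=True, text=True, check=False
        )
    except OSError as err:
        # Covers the command being missing or not executable.
        return f"could not run {command[0]}: {err}\n"
    # Keep stderr too: warnings about missing devices are often the useful part.
    return result.stdout + result.stderr


if __name__ == "__main__":
    # Shows every btrfs pool (OS and Data), not just one of them.
    print(capture_full_output(["btrfs", "filesystem", "show"]))
```

Paste the whole result into the thread as-is: the lines that look irrelevant are often the ones others need to see.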

Your response was as follows:

Others can see more than you sometimes!

With an incomplete output of just your Data Pool: using a non-production raid6 redundancy level, which requires lots of hoops to jump through just to write to, as by default it is read-only with our standard kernels.

Now you have a broken OS drive because you have a 5 GB, not 32 GB, OS Pool/drive.

Your OS Pool is likely toast as it was filled and unable to complete a full update: ergo half new / half old as it were. You must clear space as per:

That’s for our Rock-ons ‘rock-ons-root’ share:

https://rockstor.com/docs/interface/overview.html#the-rock-ons-root

Unrelated.

So given you have hosed your OS by not following the recommended, let alone minimum, system guidelines, and are now in a tight spot, but want assistance with a broken pool that is only writable by your advanced use of:

Installing the Stable Kernel Backport:

https://rockstor.com/docs/howtos/stable_kernel_backport.html

This How-to is intended for advanced users only. Its contents are likely irrelevant unless you require capabilities beyond our default openSUSE base. We include these instructions with the proviso that they will significantly modify your system from our upstream base. As such you will be running a far less tested system, and consequently may face more system stability/reliability risks. N.B. Pools created with this newer kernel have the newer free space tree i.e. (space_cache=v2). Future imports require kernels which are equally new/capable (at least ideally).

You are way out on a limb here and likely over-reaching re your existing knowledge to support this configuration: i.e. inability to use ssh without hand-holding. But hey, we are a DIY community here, and experimentation is all good, and you acknowledge re:

So my assumption here is that you have not used the system drive to store anything. If you have, then use another and do a fresh install: ensuring you meet at least the minimum system requirements as per our doc referenced above. You will then at least have a non-toast OS Pool with which to execute requests that have yet to be answered from day one. And if you could also indicate if this is running inside of a VM, as that could also explain why a 32 GB OS drive becomes a 5 GB ‘ROOT’ Pool, leaving you entirely unable to install anything including maintenance updates, let alone advanced options such as backported stable kernels and the like.

So in short, you might want to re-install: detaching all Data drives first to be sure you don’t accidentally select them. We do have a safeguard where drives of 5 TB+ will not be shown as install options, but all yours look to be less than that. Also note I’m working on new installers currently: so better get back to that.

I know this is frustrating: but learning often is - and your feedback here has helped to see where folks go with what we have, and is in part why I’ve introduced such additional restrictions out-of-the-box as not concerning folks with the OS drive at all: as from 5.0.9-0 (but not on update) and all our future installers, the ROOT pool is no longer imported by default. But you would still have broken your system by having a 5 GB ROOT pool. Or is this a bug: if so it would have been nice to have had the evidence as to what led to it. And no recommendation to update would then have been given. Your efforts to enforce the use of the un-recommended btrfs-raid6 (default read-only currently in our installers) indicate you are game, which is great: but there is much time taken in hand-holding and we are a small community trying to do a lot. So I will have to duck out from this thread now as I have yet to receive the output requested on the first day: as it was edited/incomplete. I am also support email incidentally.

So consider saving everyone else’s time, and your own, and re-do your install as per our recommendations. And if it’s still 5 GB then fantastic: we have a reproducer for a bug report. You then have an OS at least as per our recommendations. But you will only have read-only capability, if that, to your existing Pool. And will have to re-tread your prior steps re the stable kernel backports install, but at least you will be more familiar with what you are attempting to do: which is go-it-alone. We inherited btrfs-raid6 ro from openSUSE. I wrote the stable kernel backport doc with the quoted proviso. If that is ignored by folks and subsequent requests are also ignored then more learning can take place, but folks can’t help you here if you do not answer with complete outputs: and use advanced procedures to do what you think is correct and then have no knowledge of having done them:

Not so much, we have all played around here: it’s part of the DIY scene and can be fun, but if you are out of your depth you need to reset/re-install so you at least have an OS to work with. 5 GB does not cut it any longer for boot to snapshot ROOT. And advice (not followed) was given to clear up space at that critical time (by me, twice). We enforce boot to snapshot to give folks a way to boot into a prior system if all fails on the one they have - but that breaks also if the ROOT pool is filled up. And it could have dug you out from many issues if you had only had at least the minimum ROOT pool size. I’m wondering if we are looking at an as yet unseen installer bug actually: speaking of which I better get back to that development.

Try re-installing so you have a working OS: then follow:

“Import unwell Pool” Disks — Rockstor documentation

But as you have already gone so far off track to get your parity raid, you may also need the backported kernel and filesystem howto first.

Hope that helps, and I know you are trying here, but it’s a few minutes to re-install and you have nothing like our recommendations in any element of your system:

- 5 GB ROOT pool

- Parity btrfs-raid6

- Backported kernels and filesystems with warnings against this in HowTo.

- Testing channel (but we are now on RC 4 as of yesterday)

And so you are out on a limb, but without the expertise to contribute fixes / enhancements to improve things for yourself and others here. However you may be perfectly positioned to contribute to our docs re:

Contributions here are always welcome:

Contributing to Rockstor documentation — Rockstor documentation

P.S.:

Starting with a 5 GB ROOT (OS Pool), which would have been evident from day one with a full btrfs fi show output. Always difficult starting out: but you now have quite the perspective. And if you really need parity raid: accepting the known repair problems, use say a mixed profile so you have equal redundancy on the metadata. [EDIT] And don’t enable compression.
