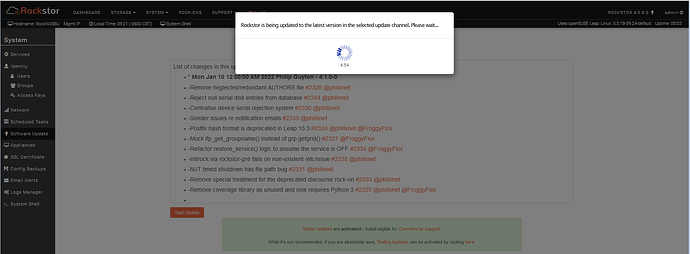

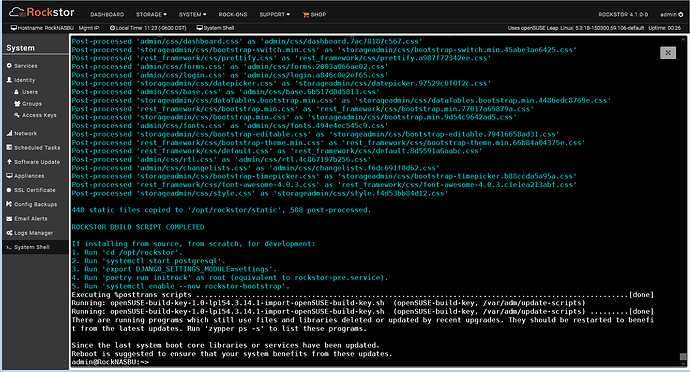

All updates applied before this happened:

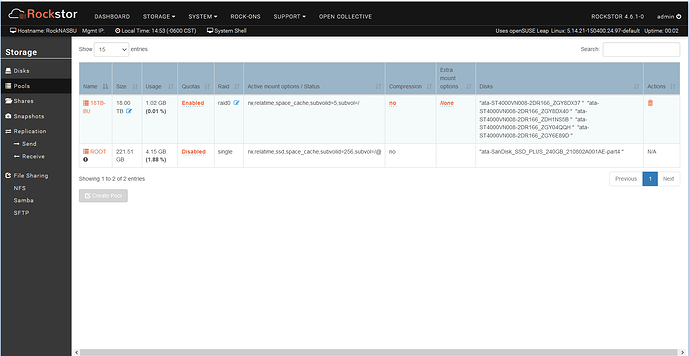

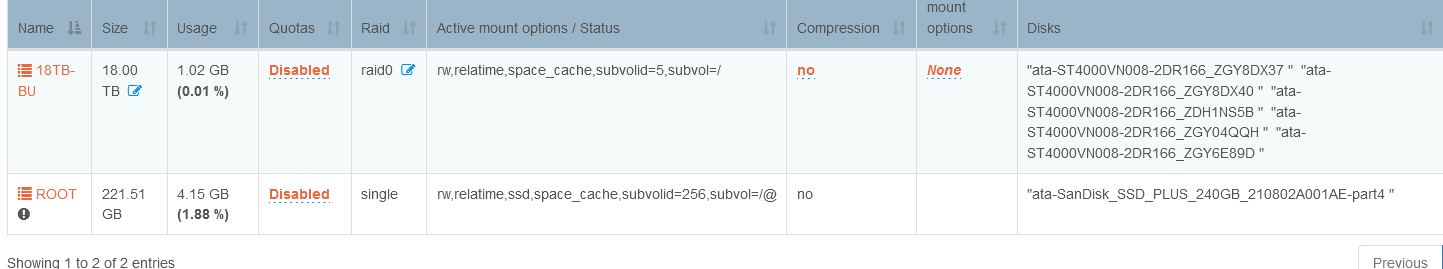

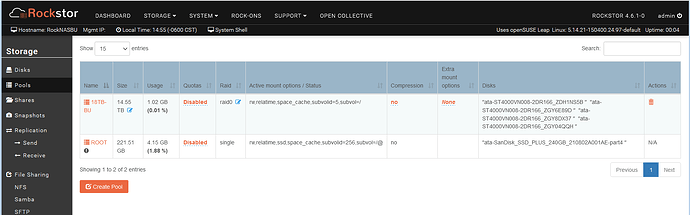

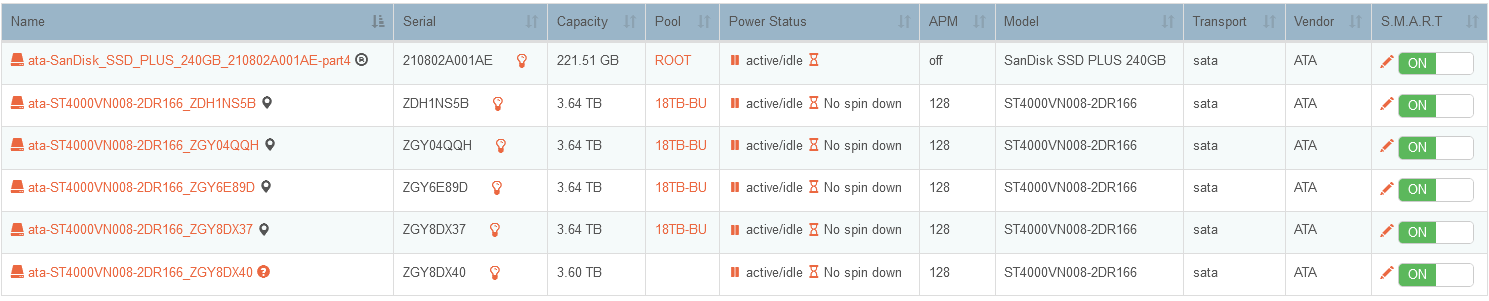

Okay, changed my Backup NAS from two raid0 pools (12TB-3Disk and 4TB-2Disk) to a single 20TB-5Disk setup. All 5 drives are now the same 4TB units.

I went to Pool setup (after clearing all the old setup) and selected all 5 disks for a new Raid0 pool.

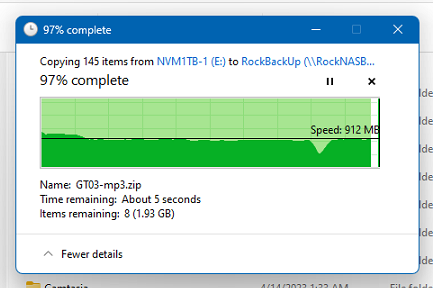

Everything seemed to go fine… the share worked fine and I started transfer speed testing…

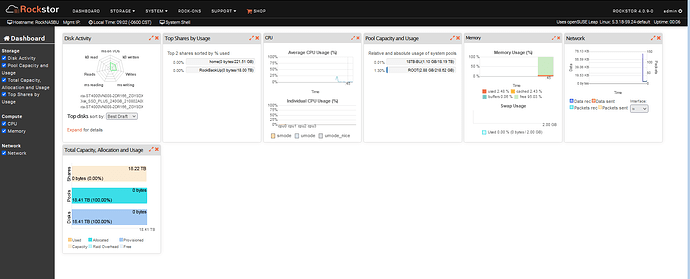

Well, looking at the dashboard, I checked on the write speed of each disk and found one disk had no activity!!!

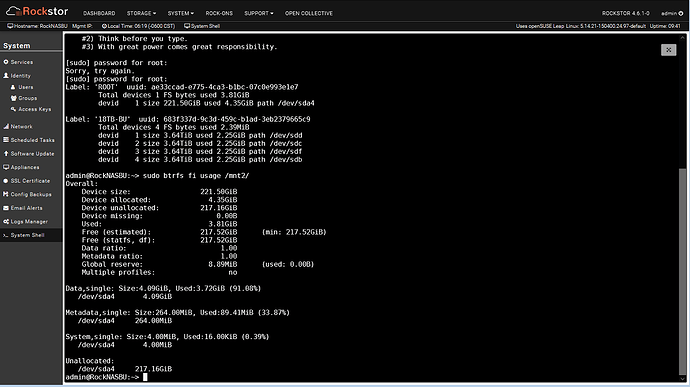

Hmmm, checked the pool and only 4 of the 5 disks had been used in the new Raid0 thing, but the Pool size was correctly reported!?!?!

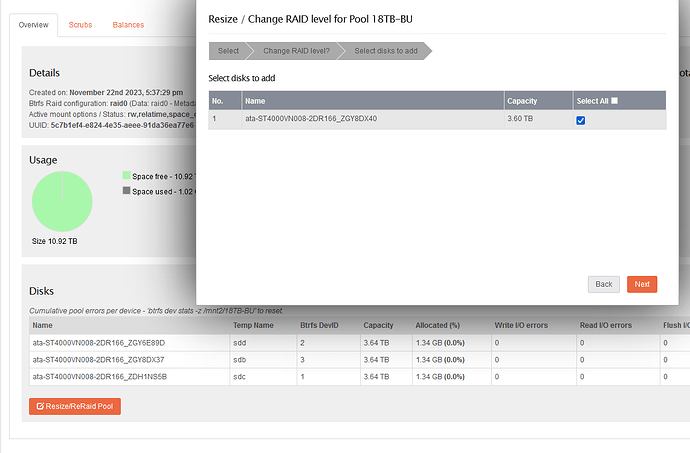

Sooo, I deleted the pool and share and reset all the disks again. Did the same thing and got the same result: only 4 disks used in the pool. I then used the pool resize option to manually add the 5th disk, and that worked.

Now everything seems to be working normally, the disk writes in the Dashboard are all showing up etc.

I have no idea whether some status bit went wrong and it was actually using all 5 disks while the Dashboard just wasn't reporting it, or what. I have no inclination to fill it up in that state to find out!
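One way to tell a reporting glitch from a disk that really is sitting idle would be to read the kernel's own write counters during a transfer instead of trusting the Dashboard. Below is a rough Python sketch of that idea. It assumes the NAS runs Linux and exposes `/proc/diskstats`; the function names and the device names (`sda` to `sde`) are made up for illustration and are not taken from the NAS software.

```python
import time


def sectors_written(diskstats_text):
    """Map device name -> cumulative sectors written, from /proc/diskstats text."""
    result = {}
    for line in diskstats_text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip blank or malformed lines
        # fields[2] is the device name, fields[9] is the sectors-written counter
        result[fields[2]] = int(fields[9])
    return result


def idle_disks(before, after, disks):
    """Return the disks whose sectors-written counter did not change."""
    return [d for d in disks if after.get(d, 0) - before.get(d, 0) == 0]


def find_idle_disks(disks, seconds=10, path="/proc/diskstats"):
    """Sample the counters twice, `seconds` apart, and list disks with no writes."""
    with open(path) as f:
        before = sectors_written(f.read())
    time.sleep(seconds)
    with open(path) as f:
        after = sectors_written(f.read())
    return idle_disks(before, after, disks)
```

Running something like `find_idle_disks(["sda", "sdb", "sdc", "sdd", "sde"])` (hypothetical device names) while a big copy to the share is in progress should return an empty list if all 5 disks are taking writes. If one disk keeps showing up, it really is getting no data and the Dashboard is telling the truth.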

Before I put it back online, let me know if you want screen shots or anything…

Weird…
