phillxnet

February 4, 2019, 6:59pm

1

As this thread is starting retroactively in this now long release cycle I’ll first give some context and move to detailing only the most recent releases. The canonical reference for all code changes is in our open source GitHub repo:

Linux/BTRFS based Network Attached Storage(NAS)

This is the origin of the ‘rockstor’ package. It does not include the Rock-on definition files.

An attempt is made to keep GitHub Release / git tags in line with package release versions but due to a variety of reasons one can lag the other for short periods.

3.9.2.0 was the first release in our current stable channel (identical to the last testing channel release, 3.9.1-16). It would normally have been earmarked for an iso release but this did not happen. This means we are not well represented currently. Our fault entirely, and a shame, but I suspect our next iso release is not far away. It is likely to be based on current stable code (which is looking ripe) and to have openSUSE as its Linux foundation; all prior releases have been CentOS based. See: Rebasing on to openSUSE? and our openSUSE dev notes and status. It will hopefully also kick off a new testing channel release program; here’s hoping, as we have failed in this regard previously.

We used to use the testing channel to develop until we reached a ‘stable point’, and then snapshot this as a known good version to be released in the stable channel. This sustainability model was the result of discussions on this forum, namely the following thread:

Thanks everyone for very useful input and ideas. I am interpreting the response to be largely in support of my proposal, and some of you want us to even charge a bit more.

While there are other services that we plan to offer as Rockstor matures with clear choices and value propositions for users with different needs, we’ll keep the update-channel-subscription pricing model simple, because, the offering is pretty simple IMO.

We’ll start with two choices, a short term(1 year) and a longer term(5…

This proved to be unsustainable as per our prior arrangement and stable subscribers expressed frustration with being ‘left behind’ / under served in comparison to testing which was only rarely broken anyway. So we changed again, as a value add to our stable subscribers, to releasing Rockstor rpm updates to stable channel only; with the intention of picking up the testing channel again when our resources allowed for this. In open source software it is hard to achieve sustainability and we are attempting to find a way. Our stable channel subscribers are currently that ‘way’.

One of my goals within the project is to return to more frequent iso releases and to resurrect the testing channel but that is going to take time and has failed on us before. But I hold out hope and as we attract more contributors / helpers I see it as a more viable possibility for the future than it was in the past.

Only time will tell.

As per the above intro, this thread kicks off by detailing only the most recent changes, as of writing, per version.


phillxnet

February 4, 2019, 7:08pm

2

Merged and released Sep 2018

Thanks to forum member @Flox for this one:

" Briefly, this PR adds support for a new “devices” object in Rockons’ json definition files …"

I.e. a potentially far-reaching enhancement to our currently constrained Rock-on system that enables Rock-on definitions to encompass such things as hardware transcoding configurations.

Issue:

opened 07:49PM - 31 Aug 18 UTC

closed 11:52AM - 22 Sep 18 UTC

I've been interested in trying to help improving the functionalities of the Rockon system following several obstacles I've encountered (multiple containers, custom `docker run` command argument, other `docker run` options) for a while now, but never dared digging through the code.

Recently, however, I started looking into taking advantage of a new functionality of the EmbyServer container: hardware transcoding. Unfortunately, this would require the RockOn to support the `--device` option to `docker run`.

As mentioned in the corresponding issue (https://github.com/rockstor/rockon-registry/issues/152), I was thus thinking to implement support for a new _**Devices**_ object in the JSON files. This object would follow the same structure as the _**Environment**_ object, with `"description"` and `"label"` fields in the JSON file triggering a new page in the Rockon install wizard for the user to input a custom device path (`/dev/dri/renderD128` for instance).

```

{

"description": "path to hardware transcoding device (/dev/dri/renderD128)",

"label": "VAAPI device"

}

```

I started working on it and modifying the files I believe are involved in such a process (https://github.com/FroggyFlox/rockstor-core/tree/RockOn_device_option), but am hitting an obstacle in figuring out what's wrong. The problem I'm facing is that I do not get the Rockon install wizard to open a new page related to the _**Devices**_ object I'm trying to implement.

While I can read and understand more or less the intent behind the Python code, I am not familiar enough with Javascript to even understand whether my issue lies first in the frontend or backend.

Would anyone be kind enough to offer some pointer(s)?

I've been testing the following changes:

https://github.com/FroggyFlox/rockstor-core/tree/RockOn_device_option

With the following JSON file:

https://github.com/FroggyFlox/rockon-registry/blob/emby_dotNET/embyserver.json

Code changes:

rockstor:master ← FroggyFlox:RockOn_device_option

opened 06:41PM - 12 Sep 18 UTC

Fixes #1957

Add support for the docker run `--device` option to Rockons to allow users to add custom device(s) to their Rockon(s), based on the existing support for the docker run 'environment' variables. This PR is now functional and ready for feedback and review.

Briefly, this PR adds support for a new **"devices"** object in Rockons' json definition files, following the same structure as for the **"environment"** variables with "description" and "label" fields in the JSON file (see below).

```

{

"description": "path to hardware transcoding device (/dev/dri/renderD128)",

"label": "VAAPI device"

}

```

This, in turn, triggers the Rockon install wizard to create a new **Device Choice** page, with as many free text input fields as devices listed in the JSON file. The text input in these fields is then validated under the following rules:

1. optional: if blank, validate.

2. if filled, the input must begin with '/dev/' and only contain alphanumerical characters (thanks @phillxnet for your regex definition).
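The two validation rules above can be sketched as follows. This is only an illustration: the regex actually used in the PR is not shown in this thread, so the pattern and the function name `is_valid_device_input` are assumptions. The sketch allows `/` after the `/dev/` prefix so that nested paths such as `/dev/dri/renderD128` are accepted.

```python
import re

# Hypothetical pattern: the PR's real regex is not quoted in this thread.
# '/' is permitted so nested device paths (/dev/dri/renderD128) validate.
DEVICE_PATH_RE = re.compile(r"/dev/[A-Za-z0-9/]+")


def is_valid_device_input(value):
    """Return True for a blank field or a plausible path under /dev/."""
    if value == "":
        return True  # rule 1: the field is optional, blank validates
    # rule 2: must begin with /dev/ and contain only the allowed characters
    return DEVICE_PATH_RE.fullmatch(value) is not None
```

`fullmatch` is used rather than `match` so that trailing characters, including a trailing newline, cause a rejection.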

Testing:

All changes in python files pass Flake8.

The style of the `rockon.js` file was fixed by ESLint following style settings described in the `.eslintrc.js` file in the rockstor-core repo.

The JSON file used for testing is the following, with 3 optional devices listed:

https://github.com/FroggyFlox/rockon-registry/blob/emby_dotNET/embyserver3devices.json

phillxnet

February 4, 2019, 7:14pm

3

Merged and released September 2018

Thanks again to forum member @Flox for another Rock-on related enhancement:

“For some docker containers, it can be useful–if not necessary–to run add arguments to the docker run command.”

Issue:

opened 03:29PM - 21 Sep 18 UTC

closed 12:42PM - 25 Sep 18 UTC

I've encountered a limitation when writing some Rock-on definition files when the latter require specific arguments in their `docker run` call.

From my own experience, this is the case, for instance, for the [jwilder's docker-gen image](https://hub.docker.com/r/jwilder/docker-gen/), but I've encountered another user [encountering the same issue](https://github.com/rockstor/rockon-registry/pull/120#issuecomment-324193880) in the list of PRs in the Rockon registry repo.

As used by @jmhardison in the PR linked above, a workaround is to fork the docker image and customize the entrypoint directly, but this requires another repo to be maintained. Being able to write down these specific `docker run` arguments in the JSON Rockon definition file would be a lot easier.

Code Changes:

rockstor:master ← FroggyFlox:Rockon-AddCmdArgsSupport

opened 07:01PM - 22 Sep 18 UTC

Fixes #1967

This PR replaces the previous #1966 after upstream rebase.

For some docker containers, it can be useful--if not necessary--to run add arguments to the docker run command. Modeled after the "options" object for Rockons' definitions, this commit adds support for such arguments to Rockons by:

1. Fetching arguments from a "cmd_arguments" object at the "container" level in the JSON file. Similar to the current 'options' object, this object must be in a 2-d array: "cmd_arguments": [ ["cowsay", "argument1"] ].

2. Store arguments in storageadmin under a new DContainerArgs table.

3. Extend the docker run call with these arguments after inserting the container's image name.
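As a rough illustration of step 3, the sketch below flattens the 2-d `cmd_arguments` array and appends it after the image name. The function name `build_docker_run_cmd`, the example image name, and the example option are assumptions made for illustration; the real Rockstor code builds its `docker run` call from database records rather than plain lists.

```python
def build_docker_run_cmd(image, options, cmd_arguments):
    """Assemble a docker run argument list (hypothetical helper).

    options and cmd_arguments are 2-d arrays as in the JSON definition,
    e.g. [["cowsay", "argument1"]].
    """
    cmd = ["docker", "run"]
    for option in options:
        cmd.extend(option)  # docker run options go before the image name
    cmd.append(image)
    for args in cmd_arguments:
        cmd.extend(args)  # container arguments go after the image name
    return cmd


print(build_docker_run_cmd(
    "docker/whalesay", [["--net", "host"]], [["cowsay", "argument1"]]
))
```

Placement matters here: anything before the image name is interpreted by `docker run` itself, while anything after it is handed to the container.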

Tested with a [JSON for Docker's Whalesay image](https://gist.githubusercontent.com/FroggyFlox/a6dcf6b3eb7f6c4038ae26daffb919d2/raw/fe2d507b40961c5ebb942f8ee6ebb249c47a89f3/whalesay_test.json).

Passed Flake8's compliance tests.

phillxnet

February 4, 2019, 7:27pm

4

Merged and released October 2018

This was a large one, but in short it enhanced our disk / pool subsystems and Web-UI to function more helpfully in scenarios where a disk has died or is no longer available (missing in btrfs speak). It builds on a prior enhancement that surfaced such conditions within the Web-UI via red flashy warnings etc.

Issue:

opened 10:57AM - 26 Apr 17 UTC

closed 11:50AM - 05 Oct 18 UTC

Thanks to zoombiel in the forum and GitHub users @therealprof and @iFloris (#737) for highlighting this issue. At time of issue creation we have no UI means of addressing the removal of a missing device from a pool. I proposed that we extend our existing 'bin' icon to link to a specialised page to explain the detached status, ie this could be a backup drive that is not permanently attached (likely then the only member of a pool if it is a btrfs pool member at all) or it could be a member of a multi disk pool, in which case there is a high likelihood that the observed situation is a fail condition. We could in turn link to the associated pool details page where it is proposed we implement a "Remove Missing Devices" option which in turn runs the following:

```

btrfs device delete missing mountpoint

```

Reference: https://btrfs.wiki.kernel.org/index.php/Using_Btrfs_with_Multiple_Devices

Section: Using add and delete:

"... if a device is missing or the super block has been corrupted, the filesystem will need to be mounted in degraded mode:"

"btrfs device delete missing /mnt"

"btrfs device delete missing tells btrfs to remove the first device that is described by the filesystem metadata but not present when the FS was mounted."

N.B. As this option depends upon a degraded mount, it could also be presented only under those circumstances.
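A minimal sketch of how such an option might be guarded, assuming a hypothetical helper `delete_missing_cmd` and a caller that already knows whether the pool is mounted degraded. It only builds the command quoted above; it does not run it, and it is not the code from the eventual pr.

```python
def delete_missing_cmd(mount_point, mounted_degraded):
    """Build the 'btrfs device delete missing' command (hypothetical helper).

    Refuses to build the command unless the pool is mounted degraded,
    mirroring the N.B. above.
    """
    if not mounted_degraded:
        raise ValueError("pool must be mounted degraded: %s" % mount_point)
    return ["btrfs", "device", "delete", "missing", mount_point]


print(delete_missing_cmd("/mnt", mounted_degraded=True))
```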

It is favoured that this option be located in the pool UI as it exists at the pool level of analysis: hence the link from disk to pool to emphasise the affect level.

A pre-requisite of this issue is to address pool health reports which in turn will require developing to be able to recognise the 'degraded' pool state and additionally, in a missing drive scenario, offer the option to mount degraded with all the associated warnings, ie the current one shot deal, if the drive count is at minimum, including the missing drive, for the given raid level.

Linking to related issues on pool health reporting: #1531 , #1199 ,

Please update the following forum thread with this issue's resolution:

https://forum.rockstor.com/t/remove-failed-disk-from-pool-no-replacement/3151

Also note that this issue is potentially a duplicate (but with additional implementation details) of GitHub user @therealprof's #737 with additional contributions from @iFloris.

Code Changes:

rockstor:master ← phillxnet:1700_Implement_a_delete_missing_disk_in_pool_UI

opened 01:59PM - 02 Oct 18 UTC

Extend the existing pool details page and its Resize/ReRaid wizard to facilitate the removal of detached / missing disks. Includes a number of bug fixes re Pool edits, mostly concerned with disk add / remove when a pool has one or more detached members. Advise and link on Disks page against detached members of managed pools. Note that detached disks with no managed pool association are unaffected by these changes. I.e. they can be, as before, removed (from db) via a bin icon.

Summary:

- Ensure pool is mounted prior to add / remove / raid change actions and present a user error if this prerequisite is not met.

- Add 'btrfs device delete missing' function to managed pool detached member via the existing 'Remove disks' option within Pool details page 'Resize/ReRaid Pool'.

- Web-UI Disk's page - add map icon to identify "... detached member of a Rockstor managed Pool." tooltip quote.

- Web-UI Disk's page - add exclamation mark "Use the associated Pool page Resize/ReRaid 'Remove disks' option if it is not to be reattached." tooltip quote.

- Web-UI Pool details page - add contextually aware text / link giving advice and optional 'dev delete missing' work around for when no detached disks are known: i.e. importing a degraded pool on a fresh install for disaster recovery.

- Change remaining / resulting disk count validation to account for detached disks.

- Remove redundant balance after disk delete (pool member removal) operation.

- Change disk model temp_name property to return canonical variant of target_name, ie make it redirect role aware.

- Add pool details page Disk table "Temp Name" column (first and currently only use of temp_name disk model property).

- Fix bug where io_error_stats failed for btrfs-in-partition (ie redirect role).

- Add "Replace existing disk (planned option)" placeholder Web-UI text.

- Minor rewording of user facing messages re pool edits.

- Minor variable name refactoring / renaming.

- Manage disk selection within Resize/ReRaid wizard and consequent validation via disk id not disk name, fixes buggy behaviour re detached disk management.

- Add TODO: re running resize_pool() as async task, relevant mostly to disk delete as a time out is generated on all but trivially small pools.

- Add TODO: pool_info() - move 'disks' to dictionary with (devid, size, used) tuple value.

- Add TODO: _update_disk_state() - how to fix a recent btrfs in partition regression (my fault).

Fixes #1700

See issue comments for context and images of added Web-UI elements.

And as the last remaining sub-issue linked in the following super set issues:

Fixes #737

(6 other dependant and now closed issues).

Fixes #1199

(5 other dependant and now closed issues).

@schakrava Ready for Review

Testing:

All affected pool edit options were tested to behave as expected (given known caveats below) on both KVM systems and real hardware.

Caveats:

- When removing a drive live "btrfs fi show" and consequently Rockstor indicate detached / missing pool members. But prior to a reboot any attempt to 'btrfs device delete missing' results in a '... no missing devices..' type error which we do surface. Post reboot delete missing works as intended.

- As "btrfs device delete (missing or otherwise)" initiates an internal balance that can take several hours we experience a time out that breaks the Web-UI feedback and associated db updates for this operation. However this is a related but independent issue which is detailed in existing issue:

"pool resize disk removal unknown internal error and no UI counterpart" #1722

The work around for the time being is to execute the same disk removal operation again where the second attempt (if post internal balance completion) executes as expected due to the initial disk removal having now succeeded and so is skipped, avoiding the cause of the timeout that breaks the otherwise functional (on trivially small pools) btrfs device delete (missing or otherwise) pool operation.

phillxnet

February 4, 2019, 7:34pm

5

Merged and released end of October 2018

Fixed a Dashboard regression issue kindly reported by @juchong

Issue:

opened 11:10AM - 28 Oct 18 UTC

closed 02:35PM - 01 Nov 18 UTC

Thanks to forum member juchong for highlighting this issue. For devices that have only same length by-id names we have irregular or non functional activity in the dashboard disk activity widget. This is due to a disparity between the temp canonical to by-id name mapping employed by the widget and that used by the rest of the system. The widget, for the time being, uses a particularly light weight construct which simply uses the longest (or first if equal name lengths found) by-id name for a given canonical name in the output of 'ls -l /dev/disk/by-id'. However the rest of the system uses the algorithm instantiated within get_dev_byid_name() which is essentially the same but with additional needed safeguards logging / debug info etc. But as from pr/issue:

"improve by-id device name retrieval. Fixes #1936" #1937

applied in stable channel release version 3.9.2-28, which addressed an instability in the by-id name selection for devices that had only multiple same length by-id names, the name selection algorithms diverged. Hence prior to the referenced release the non deterministic bug present only in the rest of the system (only cosmetic side effects known) could cause an irregular mismatch with the mapping used only by the dashboard disk activity widget. And post the indicated fix the by-id name selection, in certain circumstances, would cause no activity to be registered at all for the given device: our regression.

The fix proposed by the author is to normalise the reverse lexicographical sort of by-id options employed in the improved algorithm referenced in the above fix that was used to add determinism (stability) to by-id name selection in the rest of the system to the dashboard widget's by-id map creation as well: thereby re-converging the by-id name selection between the two.

Please update the following forum thread with this issue's resolution:

https://forum.rockstor.com/t/disk-activity-plot-not-working-with-sas-drives/5351

Note that this issue only affects a device if it has both:

1. Multiple by-id names (usual).

2. All by-id names are of equal length.
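The selection rule described in this issue can be sketched as below. This is an illustration only, not the code of get_dev_byid_name() or the dashboard widget: the function name `pick_byid_name` and the example device names are assumptions. Sorting in reverse lexicographical order before taking the longest name makes the choice independent of the order in which names are listed.

```python
def pick_byid_name(byid_names):
    """Pick one by-id name deterministically (hypothetical helper).

    Longest name wins; ties are broken by reverse lexicographical order.
    """
    if not byid_names:
        return None
    ordered = sorted(byid_names, reverse=True)
    # max() returns the first maximal element, so among equal-length names
    # the one that sorts first in reverse lexicographical order is chosen.
    return max(ordered, key=len)


# Two equal-length names, supplied in either order, give the same result.
print(pick_byid_name(["scsi-35000c500a", "wwn-0x5000c500a"]))
print(pick_byid_name(["wwn-0x5000c500a", "scsi-35000c500a"]))
```

Without the sort, a "first if equal length" rule depends on listing order, which is the instability the issue describes for devices with only same length by-id names.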

Code changes:

rockstor:master ← phillxnet:1978_regression_in_dashboard_disk_activity_widge

opened 06:18PM - 28 Oct 18 UTC

Recent improvements / bug fixes in the non dashboard by-id name selection have led to a deviation in algorithms for by-id name resolution, leading to a failure in the disk activity widget due to unknown by-id name selection within that widget. This only happens if all by-id names for a given device are of the same length.

Summary:

- Move dashboard 'temp name -> by-id' map generation to reverse lexicographical to normalise on by-id name selection across the system.

- Add TODO on potential dashboard code simplification in this area: has performance test pre-requisite.

- Add unit tests for both prior and current behaviour of get_byid_name_map() to ensure nature of change is understood.

Fixes #1978

See issue text for further context.

@schakrava Ready for review

Testing:

The included additional unit tests indicate prior and proposed behaviour of the changed code with prior behaviour consistent with the observed/reported issue.

```

./bin/test --settings=test-settings -v 3 -p test_osi*

test_get_byid_name_map (system.tests.test_osi.OSITests) ... ok

test_get_byid_name_map_prior_command_mock (system.tests.test_osi.OSITests) ... ok

...

```

Caveat:

There is currently a failure to build issue in the master branch, db migration / creation related, that was worked around for the development of this issue. It may be pertinent to address this db initialisation / build issue prior to this pr's merge (I believe them to be unrelated). I will open another issue detailing my findings to date and further diagnose its cause: looks to be related to recent db additions in master that break or surface prior hidden issues associated with clean db creation, as in a fresh build from source.

phillxnet

February 4, 2019, 7:39pm

6

Merged and released in November 2018

A source build only issue that led to an improvement / centralisation of our db migration system: the thing that keeps one's settings from update to update. Recent db changes had surfaced a weakness in how we did things.

Issue:

opened 08:04PM - 29 Oct 18 UTC

closed 02:30PM - 10 Nov 18 UTC

This issue only affects fresh builds from source and began at tag 3.9.2-39 but is currently not believed to be due to an error in that commit. Initial thoughts on this issue are that our recent, apparently correct, db additions are triggering an otherwise present but dormant bug in our db creation / preparation mechanism. An initial work around is to be attached to this issue until a proper fix is developed.

The indicator for this issue is the following at the end of an otherwise successful build:

```

Job for rockstor-pre.service failed because the control process exited with error code. See "systemctl status rockstor-pre.service" and "journalctl -xe" for details.

```

with journalctl -xe reporting the line 400 and 402 issues within initrock as summarised:

```

File "/opt/rockstor-dev/src/rockstor/scripts/initrock.py", line 400, in main

Oct 28 16:35:24 rockdev initrock[20076]: run_command(fake_migration_cmd + [db_arg, app, '0001_initial'])

...

CommandException: Error running a command. cmd = /opt/rockstor-dev/bin/django migrate --noinput --fake --database=default storageadmin 0001_initial.

...

'RuntimeError: Error creating new content types. Please make sure contenttypes is migrated before trying to migrate apps individually.'

```

and similarly at line 402:

```

Oct 28 16:35:28 rockdev initrock[20358]: File "/opt/rockstor-dev/src/rockstor/scripts/initrock.py", line 402, in main

Oct 28 16:35:28 rockdev initrock[20358]: run_command(migration_cmd + ['storageadmin'])

```

with its associated exception:

```

cmd = /opt/rockstor-dev/bin/django migrate --noinput storageadmin. rc

= 1. stdout = ['Operations to perform:', ' Apply all migrations: storageadmin', 'Running migrations:', ' Rendering model states... DONE',

'Applying storageadmin.0002_auto_20161125_0051... OK',

'Applying storageadmin.0003_auto_20170114_1332... OK',

'Applying storageadmin.0004_auto_20170523_1140... OK',

'Applying storageadmin.0005_auto_20180913_0923... OK',

'Applying storageadmin.0006_dcontainerargs... OK',

''

...

'RuntimeError: Error creating new content types. Please make sure contenttypes is migrated before trying to migrate apps individually.'

```

The resulting incomplete migrations are as follows:

```

/opt/rockstor-dev/bin/django showmigrations

admin

[ ] 0001_initial

auth

[X] 0001_initial

[ ] 0002_alter_permission_name_max_length

[ ] 0003_alter_user_email_max_length

[ ] 0004_alter_user_username_opts

[ ] 0005_alter_user_last_login_null

[ ] 0006_require_contenttypes_0002

contenttypes

[X] 0001_initial

[ ] 0002_remove_content_type_name

django_ztask

(no migrations)

oauth2_provider

[X] 0001_initial

[ ] 0002_08_updates

sessions

[ ] 0001_initial

sites

[ ] 0001_initial

smart_manager

[ ] 0001_initial

[ ] 0002_auto_20170216_1212

storageadmin

[X] 0001_initial

[X] 0002_auto_20161125_0051

[X] 0003_auto_20170114_1332

[X] 0004_auto_20170523_1140

[X] 0005_auto_20180913_0923

[X] 0006_dcontainerargs

```

Code Changes:

rockstor:master ← phillxnet:1983_regression_-_source_build_fails_to_fully_initialise_db

opened 03:30PM - 04 Nov 18 UTC

Re-order db migration by early --fake-initial migration of contenttypes.

Refactor initial database initialisation to be only within initrock to centralise this mechanism, removes previous db migration redundancy within buildout and some associated legacy db operations and in turn avoids major duplication of db initialisation on initial clean (no db or no .initrock file) builds. Move to only invoking the initrock script (during source build) via systemd dependency execution. This gives cleaner error reporting re failed dependency on rockstor-pre (which normally invokes initrock) and follows / tests normal execution flow.

Consequently avoided runtime error:

"Error creating new content types. Please make sure contenttypes is migrated before trying to migrate apps individually."

on recent first attempt migrations during clean (no db or no .initrock) builds.

Summary:

- Migrate --fake-initial contenttypes before individual apps.

- Remove buildout db prep to centralise within initrock.

- Use systemd execution chain to invoke initrock / rockstor-pre.

- Add systemd services setup step in buildout: required by prior item.

- Add debug logging for db delete within initrock.

- Add debug logging to existing db --fake migrations.

- Remove currently unused gulp-install from buildout, this entry breaks non legacy builds.
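The first summary item, migrating contenttypes before the individual apps, can be sketched as an ordered command list. This is only an illustration: the helper name `migration_commands` and the app list passed to it are assumptions, and the real initrock script also performs `--fake` migrations of each app's 0001_initial that are omitted here. The command strings themselves are those quoted in the issue and pr text.

```python
DJANGO = "/opt/rockstor-dev/bin/django"  # path as quoted in the issue logs


def migration_commands(apps):
    """Return migrate commands with contenttypes first (hypothetical helper)."""
    cmds = [
        # Early --fake-initial of contenttypes avoids the
        # "Error creating new content types" RuntimeError on clean builds.
        [DJANGO, "migrate", "--noinput", "--fake-initial",
         "--database=default", "contenttypes"],
    ]
    for app in apps:
        cmds.append([DJANGO, "migrate", "--noinput", app])
    return cmds


for cmd in migration_commands(["storageadmin"]):
    print(" ".join(cmd))
```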

Fixes #1983

See issue text and comment for development context.

@schakrava Ready for review.

Testing:

After deleting existing systemd links via:

```

rm -f /etc/systemd/system/rockstor-pre.service

rm -f /etc/systemd/system/rockstor.service

rm -f /etc/systemd/system/rockstor-bootstrap.service

systemctl daemon-reload

```

and ensuring we have no pre-existing .initrock file by building directly after a fresh transfer (prior rpm and rockstor dir uninstalled/deleted) we end up with a first time build success with the following showmigrations output:

```

/opt/rockstor-dev/bin/django showmigrations

admin

[ ] 0001_initial

auth

[X] 0001_initial

[X] 0002_alter_permission_name_max_length

[X] 0003_alter_user_email_max_length

[X] 0004_alter_user_username_opts

[X] 0005_alter_user_last_login_null

[X] 0006_require_contenttypes_0002

contenttypes

[X] 0001_initial

[X] 0002_remove_content_type_name

django_ztask

(no migrations)

oauth2_provider

[X] 0001_initial

[ ] 0002_08_updates

sessions

[ ] 0001_initial

sites

[ ] 0001_initial

smart_manager

[ ] 0001_initial

[ ] 0002_auto_20170216_1212

storageadmin

[X] 0001_initial

[X] 0002_auto_20161125_0051

[X] 0003_auto_20170114_1332

[X] 0004_auto_20170523_1140

[X] 0005_auto_20180913_0923

[X] 0006_dcontainerargs

```

which is consistent with prior first time build successes (pre tag 3.9.2-39) and a consistent index / id mapping within django_content_type (ie no id’s skipped) and the following django_migrations contents:

id | app | name | applied
-- | -- | -- | --
1 | contenttypes | 0001_initial | 2018-11-04 11:52:09.046277
2 | contenttypes | 0002_remove_content_type_name | 2018-11-04 11:52:09.080579
3 | auth | 0001_initial | 2018-11-04 11:52:11.620408
4 | oauth2_provider | 0001_initial | 2018-11-04 11:52:11.625451
5 | storageadmin | 0001_initial | 2018-11-04 11:52:11.629292
6 | auth | 0002_alter_permission_name_max_length | 2018-11-04 11:52:13.013702
7 | auth | 0003_alter_user_email_max_length | 2018-11-04 11:52:13.058906
8 | auth | 0004_alter_user_username_opts | 2018-11-04 11:52:13.071466
9 | auth | 0005_alter_user_last_login_null | 2018-11-04 11:52:13.086848
10 | auth | 0006_require_contenttypes_0002 | 2018-11-04 11:52:13.090587
11 | storageadmin | 0002_auto_20161125_0051 | 2018-11-04 11:52:14.680407
12 | storageadmin | 0003_auto_20170114_1332 | 2018-11-04 11:52:14.996502
13 | storageadmin | 0004_auto_20170523_1140 | 2018-11-04 11:52:15.122794
14 | storageadmin | 0005_auto_20180913_0923 | 2018-11-04 11:52:15.488624
15 | storageadmin | 0006_dcontainerargs | 2018-11-04 11:52:15.624022

Indicating our 2 most recent storageadmin migrations, the first non initial migrations in our post south setup to include 'migrations.CreateModel()', have been applied successfully, along with an early application of "contenttypes 0002_remove_content_type_name".

With subsequent (post migration / build) executions of the additional migration related command within this pr resulting in the following:

```

/opt/rockstor-dev/bin/django migrate --noinput --fake-initial --database=default contenttypes

Operations to perform:

Apply all migrations: contenttypes

Running migrations:

No migrations to apply.

```

Tested as this will be executed upon every rockstor-pre (initrock) invocation.

Caveats:

For the time being we have remaining remarked out / redundant / unused content within buildout.cfg; this is intended to aid in reverting these changes were there to be unforeseen side effects once distributed. A later pr can address this kipple.

phillxnet

February 4, 2019, 7:43pm

7

Merged and released November 2018

Another in a long line of quota disabled / quota indeterminate state improvements, but this time focusing on when a pool is imported.

Issue:

opened 06:08PM - 13 Nov 18 UTC

closed 01:31PM - 15 Nov 18 UTC

When attempting to import a pool which has quotas disabled or is in an indeterminate quota state the following error can result:

```

'ERROR: unable to assign quota group: Invalid argument'

```

This error can also arise when creating a new share on a pool that has an indeterminate quota state.

This is due to a mostly transient state where some quota commands work as expected, ie: 'btrfs qgroup show mnt_pt', which we use as an indicator for if quotas are enabled and to find our highest current qgroup, while others, which we normally only execute after first establishing if quotas are enabled, will fail; an example being 'btrfs qgroup assign child parent mnt_pt': hence the referenced error potentially occurring during pool import and share creation.

The proposed fix, to be presented shortly via an associated pr, is to add sensitivity / heed warnings re a quota disabled state (often only displayed for a short period and accompanied by otherwise normal quota enabled behaviour) and additionally make our 'btrfs qgroup assign' wrapper robust to this type of failure.

The latter proposed modification will avoid hard failure on import / share creation during these interim / indeterminate quota states but will result in our db assigned qgroup for the given share being unassigned. This however can self correct over subsequent pool/share updates (usually a few seconds later) or a quota disabled / enabled cycle and is non critical; unlike a pool import / share creation failure. We cannot currently avoid the rather heavy handed proposed latter modification as it has been observed where a pool's prior btrfs quota commands gave no indication; error, warning, or exception, yet an assign quota still returned 'Invalid argument'.

Code Changes:

rockstor:master ← phillxnet:1987_improve_pool_import_with_disabled_or_indeterminate_quota_state

opened 08:17PM - 13 Nov 18 UTC

Add sensitivity / heed warnings re a quota disabled state (often only displayed for a short period and accompanied by otherwise normal quota enabled behaviour) and additionally make our 'btrfs qgroup assign' wrapper fail elegantly in this interim / indeterminate quota state.

Addressed issue could in rare situations also block share creation.

Summary:

- Enhance qgroup_max() re qgroup disabled warnings.

- Enhance are_quotas_enabled() re qgroup disabled warnings.

- Don’t fail import / share creation for failure to quota group assign: log as error and continue.
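The last summary item, logging a failed quota group assign and continuing, might look roughly like the sketch below. It is written for modern Python with an injectable `run` callable so it can be exercised without btrfs; the function name `qgroup_assign` and its signature are assumptions and not Rockstor's actual wrapper.

```python
import logging
import subprocess

logger = logging.getLogger(__name__)


def qgroup_assign(child, parent, mnt_pt, run=subprocess.run):
    """Try 'btrfs qgroup assign'; log and continue on failure.

    Hypothetical sketch: returns True on success, False if the command
    failed (e.g. 'unable to assign quota group: Invalid argument').
    """
    cmd = ["btrfs", "qgroup", "assign", child, parent, mnt_pt]
    try:
        run(cmd, check=True, capture_output=True, text=True)
    except subprocess.CalledProcessError as e:
        # Non critical: the qgroup stays unassigned but import / share
        # creation is allowed to proceed.
        logger.error("qgroup assign failed (%s): %s", " ".join(cmd), e.stderr)
        return False
    return True
```

Returning a boolean rather than raising leaves it to the caller to decide whether to retry on a later pool / share update, in line with the self correcting behaviour described in the issue.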

Fixes #1987

See issue text for more context.

@schakrava Ready for review.

Testing:

During unrelated Rockstor development on docker-ce a larger number of docker service enable / disable cycles were performed. This is suspected as the cause for a then persistent indeterminate quota state that blocked further share creation. See issue:

"docker-ce dictating pool quota enabled disabled status" #1906

for buggy docker-ce btrfs quota interaction.

It was then also noticed that importing certain prior quota disabled pools failed in a related manner.

Post pr the remaining reproducer examples behaved as expected, ie share creation was fixed and imports were successful in the respective instances.

phillxnet

February 4, 2019, 8:54pm

8

(3.9.2-45 by GitHub release and Git tag)

Merged end November 2018

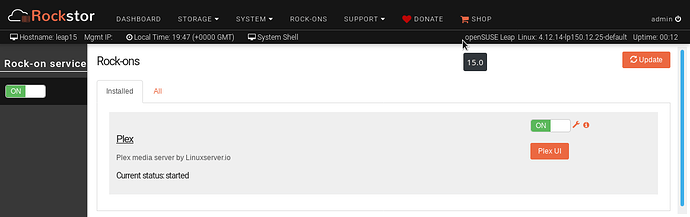

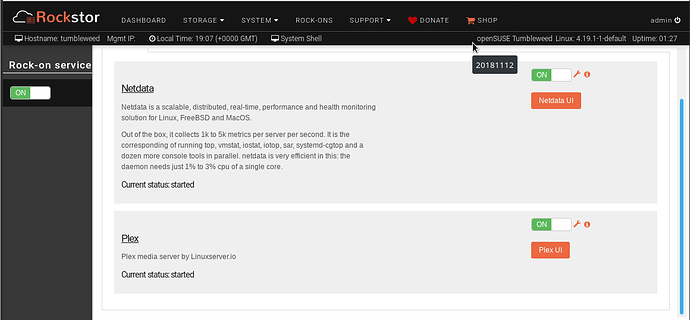

This was another large one which ended up being an umbrella issue for a number of things related to our openSUSE (non legacy) move, including fixing Rock-ons functionality within Leap15.0 (at the time) and Tumbleweed.

Also note the additional distro indicator to the left of the Linux kernel Web-UI header element.

Issue: https://github.com/rockstor/rockstor-core/issues/1989

Code Changes:

rockstor:master ← phillxnet:1989_fix_non_legacy_build/docker_issues_plus_dev_to_rpm_install_mechanism

opened 09:32PM - 19 Nov 18 UTC

Fix a number of the remaining build and docker issues concerning the proposed move to openSUSE as an upstream linux distro base. Includes a fix to facilitate moving from a developer (source) install to an rpm based one: currently this only supports our existing legacy CentOS base, pending the instantiation of an openSUSE rpm build backend along with related distro aware repository config code changes.

Docker specific notes:

Many more modern dockerd invocations require a number of command line arguments. Previously we passed, from the docker.service file, only one: our --data-root target. The included modifications allow for more custom or distro specific requirements to be met via accommodation of any number of arguments (unfiltered). All prior Rockstor specific dockerd arguments are preserved and applied as before.
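As a rough sketch of the argument pass-through described above (not the actual Rockstor docker wrapper): the function name, the `/usr/bin/dockerd` path, and the argument ordering are assumptions for illustration; `--data-root` is the option named in the text.

```python
def build_dockerd_cmd(data_root, extra_args=(), dockerd="/usr/bin/dockerd"):
    """Hypothetical helper: build the dockerd argv used by a wrapper.

    `extra_args` (e.g. whatever the distro's docker.service supplies) are
    passed through unfiltered; the wrapper's own --data-root is always added.
    """
    return [dockerd, *extra_args, "--data-root", data_root]


# Example: a service file that supplies "-H fd://" still gets our data root.
print(build_dockerd_cmd("/mnt2/rockons-root", ["-H", "fd://"]))
```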

Summary:

- Add dependency on python ‘distro’ library.

- Store build system distro info in django settings, the assumption here is we build on our target distro: normally the case.

- Add distro UI element, uses prior 2 items.

- Normalise prior UI subheader linux info formatting.

- Remove prior incorrect data_collector code comment.

- Selectively run postgresql-setup (legacy) or initdb (non legacy) in initrock.

- Update psycopg2 from 2.6 to 2.7.4.

- Normalise on direct paths for commands: avoids redundant fs redirection ie in CentOS root we have "/bin -> /usr/bin" and "/sbin -> /usr/sbin"; as these dir links are not found in our non legacy base move all hard wired command paths using them to their canonical reference.

- Use Django settings for a selection of variably located (distro specific) command paths: again with the assumption that we build on our target distro.

- Fix version indicator and software update page display for dev (source) installs.

- Fix dev (source install) to rpm install transition mechanism - necessarily considered as a re-install so db is wiped during the transition. Note that this, in part, involved the addition of an explicit 'yum install rockstor' command during update, along with ensuring that initrock is re-run on next rockstor.service start.

- Add distro aware docker.service template file selection based on distro.id(); moving fully to a live edit (during Rock-on service enable) rather than build time customization: ie to accommodate for our docker wrapper redirect and its consequent requirement for NotifyAccess=all for Type=notify docker configs. Both included openSUSE templates are taken from their respective distro default installs of docker-ce.

- Establish docker-generic.service failover config for unknown distro ids taken from default upstream docker-ce 18.09 CentOS example.

- Enhance docker wrapper to pass additional arguments to dockerd.

- Minor additional rock-ons-root config exception logging.

- Catch and log harmless reboot/shutdown command exceptions with rc=-15. The exception log reports from these Web-UI initiated events are misleading as they suggest malfunction where there is none as both commands execute as expected with: out='', err='', and rc=-15.
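On the rc=-15 item: when a command is run via Python's `subprocess` module, a negative return code -N means the child was terminated by signal N, and signal 15 is SIGTERM, which is unsurprising for a process that has just asked the system to shut down. A minimal sketch of recognising that case, assuming the (out, err, rc) triple mentioned above; the function name is hypothetical and this is not the Rockstor implementation:

```python
import signal


def is_harmless_power_cmd_exit(out, err, rc):
    """Hypothetical check: True for the benign reboot/shutdown result.

    Matches the case described above: no stdout, no stderr, and a return
    code of -15 (terminated by SIGTERM).
    """
    return rc == -signal.SIGTERM and out == "" and err == ""
```

A caller could log such results at a lower level and re-raise everything else.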

Fixes #1989

See issue text for context and forum reference.

@suman Ready for review. Please note the additional ‘python-distro’ package dependency required of the rpm. My understanding is that the included entry in base-buildout.cfg [rpm-deps] section is sufficient, but I am not certain of this. N.B. my experience so far is that the build system will also require this package installed due to its use in settings.conf.in and its invocation from within initrock. Apologies for the inconvenience here. Also note the use of subprocess to run ‘which’ within settings.conf.in: this makes the assumption that the build system is as per the target system, ie CentOS (currently), with regard to binary locations of the commands in question (‘udevadm’, ‘shutdown’, and ‘chkconfig’ (see #1986 )). The package name of ‘python-distro’ works across all 3 current target distros, CentOS (currently only python2 variant) and both python2 and python3 variants in the openSUSE distros: both currently defaulting / resolving to their python2 versions.
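The build-time path lookup described above can be illustrated as follows. The pr runs ‘which’ via subprocess; this sketch swaps in Python 3's `shutil.which` for brevity, and the function name is hypothetical. As in the pr, it assumes the machine doing the lookup has the same binary locations as the target system.

```python
import shutil


def resolve_command_paths(names):
    """Hypothetical helper: map each command name to its absolute path.

    Commands not found on the current PATH map to None, so a missing
    binary is visible at build time rather than at first use.
    """
    return {name: shutil.which(name) for name in names}


# The three commands named in the pr text.
print(resolve_command_paths(["udevadm", "shutdown", "chkconfig"]))
```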

Testing:

Source to rpm install transition (source install assumed not to be in /opt/rockstor).

Worked on CentOS distro base (only rpms currently available), with caveat that browser (Firefox) prompted for new cert exception upon transition as this is treated as a re-install / fresh install: db is wiped due to unknown state from development / source install. N.B. re-activation of the chosen update channel is also required, again due to this transition having a re-install status (prior db wiped). However the prior yum repo config is unaffected until a selection is made.

Distro adaptive docker config.

Tested on an original legacy CentOS (‘rockstor’ distro.id()) install as well as on current openSUSE Leap 15.0 (opensuse-leap distro.id()) and Tumbleweed (opensuse-tumbleweed distro.id()) installs. In all cases a successful Rock-ons-root config was achieved with the consequent test of installing a Plex Rock-on and ensuring that it auto started on reboot. The Rock-ons service was also successfully enabled and disabled on all systems via the Web-UI services page.
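The distro adaptive selection tested above might look something like the sketch below. The three distro ids and the `docker-generic.service` failover name are from the pr text; the other template file names and the function name are made up for illustration. In practice the id would come from `distro.id()` in the python ‘distro’ library; here it is passed in so the example has no third party dependency.

```python
# Failover template name as given in the pr summary.
GENERIC_TEMPLATE = "docker-generic.service"

# Hypothetical template file names keyed by distro id.
DOCKER_TEMPLATES = {
    "rockstor": "docker-rockstor.service",
    "opensuse-leap": "docker-opensuse-leap.service",
    "opensuse-tumbleweed": "docker-opensuse-tumbleweed.service",
}


def select_docker_template(distro_id):
    """Return the docker.service template for `distro_id`.

    Unknown distro ids fall back to the generic template.
    """
    return DOCKER_TEMPLATES.get(distro_id, GENERIC_TEMPLATE)
```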

Images to follow in comments to illustrate the functioning Rock-on system and the added distro UI element.

The rpm release was short lived due to the following missing dependency issue:

@vesper1978 Thanks for the interest.

I’m not quite ready to make those changes in the repo just yet as I’m still ‘stepping up’ to take on / help out with some of these duties (and don’t want to step on toes) but I’m about to start a new forum thread such as we used to have that explains in less GitHub pull request techno speak how each release differs from the last. And as it goes 3.9.2-47 was used to merge more pending code improvements so I’ll detail in my proposed thread. We’ve had a little h…

phillxnet

February 4, 2019, 9:15pm

9

(Belated GitHub release and Git tag 22nd Feb)

Merged and RPM Released 3rd Feb 2019

Added previously missed rpm dependency in 3.9.2-46.

Added distro awareness to the current rpm repository config code. Essentially the Rockstor code side of setting up openSUSE repositories to move to distributing updates via openSUSE rpms.

Fixed unintended repo file permissions.

Reduce rockstor rpm repository load by moving to an update cadence of 1 hour rather than 1 minute.

Fix a number of bugs affecting openSUSE based source installs re version indication and repository configuration.

Enable source based to rpm based installs when on openSUSE. This previously worked in CentOS as from 3.9.2-46.

Issue: https://github.com/rockstor/rockstor-core/issues/1991

Code Changes:

rockstor:master ← phillxnet:1991_add_non_legacy_distro_aware_repo_configuration

opened 07:03PM - 21 Nov 18 UTC

Adds distro awareness to the existing repository configuration to encompass our next generation openSUSE Leap and Tumbleweed distribution bases. The repository structure assumed is as proposed/detailed in the associated issue text.

Summary:

- Add distro aware repo configuration for openSUSE Leap and Tumbleweed (next gen Rockstor).

- Fix Rockstor NG source to rpm install transition.

- Fix compliance with unconfigured repo. Allows for updates in only one channel i.e. preserving the user option to change the update subscription channel when defaulting to, or having selected, a deprecated or yet to be configured update channel.

- Fix unintended repository file permissions: auto resolves (post patch) by visiting “Software Update” page.

- Fix Rockstor NG version display for source installs.

- Fix missing yum-changelog package dependency: package name tested with yum on legacy and NG distros.

- For dev/source installs (no rockstor rpm installed) list entire changelog of available rpm. Prior use of ‘None’ for date parameter was silently interpreted as ‘all’ in older (legacy) yum-changelog but caused command exceptions in newer openSUSE versions of the same. Manual concurs with new use.

- Trivial code comment addition and typo.

- Changed rockstor repos from 1m to 1h for metadata_expire (to reduce server load).
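To illustrate the `metadata_expire` change in the last item, here is a minimal sketch that renders a yum style repo stanza with a 1 hour expiry. The function name and all values passed to it are placeholders, not the actual Rockstor repo configuration; `metadata_expire` is the yum option named above.

```python
def render_repo_stanza(repo_id, name, baseurl, metadata_expire="1h"):
    """Hypothetical helper: render one stanza of a yum .repo file.

    The default expiry is 1 hour, so clients re-fetch repository metadata
    at most hourly instead of every minute.
    """
    lines = [
        f"[{repo_id}]",
        f"name={name}",
        f"baseurl={baseurl}",
        "enabled=1",
        f"metadata_expire={metadata_expire}",
    ]
    return "\n".join(lines) + "\n"


# Placeholder values only.
print(render_repo_stanza("Example", "Example repo", "http://updates.example.invalid/"))
```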

Fixes #1991

@schakrava Ready for review. See issue text for repo structure assumed within pr.

Git history caveat: I neglected to mention the metadata_expire change in the git commit message. Let me know if you would like for me to re-present this pr due to this omission.

Testing:

A source build, categorised as ‘unknown version’, offers an ‘update’ when any ‘rockstor’ rpm version is available: tested using existing CentOS based repos. Caveat for subsequent ‘upgrade’ is that the source build is assumed NOT to be in /opt/rockstor.

Legacy Rockstor (CentOS based) and Rockstor NG (openSUSE Leap & Tumbleweed based):

Successfully offered and configured both update channels from clean source builds as per repo dir structure proposed/detailed in issue text (unchanged for existing legacy repos).

phillxnet

April 6, 2019, 12:34pm

12

Merged end February 2019

Thanks to @Flox for his ongoing effort to improve our Rock-ons system. This time we have:

Support added for docker labels.

Bug fix for empty label fields.

and finally a fix by me for our unit tests: I broke them in 3.9.2-45 - Sorry devs.

Bug fix for unit test environment

A much belated thanks to @suman for holding everything together behind the scenes.

Happy Rockstoring and may your updates go smoothly.

issues:

opened 07:19PM - 08 Dec 18 UTC

closed 08:41PM - 23 Feb 19 UTC

Many Docker containers now make use of container labels, and allowing the user to specify labels would be very helpful in establishing multi-container and even multi-Rock-on environments.

As there isn't--to the best of my knowledge--support for container labels to `docker update`, this would need to be done at `docker run` time, which in Rockstor could be done either at the RockOn definition stage (using the `options` object) or using the `update` function. As `labels` would be specific to each user's environment, the latter is greatly preferred.

I thus suggest to implement an "Add label" feature from the Rock-On Settings page, similar to the existing "Add Storage" feature. This would also need to support adding several labels at once as multiple labels are usually needed to setup a custom multi-container multi-RockOn environment.

I'm currently finishing a related branch and will prepare the PR quite shortly.

rockstor:master ← FroggyFlox:patch-1

opened 12:06AM - 24 Feb 19 UTC

Add simple if statement to ignore fields with empty values for labels.

Fixes #2018.
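A minimal sketch of the idea behind these two changes, assuming labels are collected as plain strings and applied at `docker run` time via its `--label` option (as the issue text suggests); the function name and the input shape are assumptions, not the merged code:

```python
def label_args(labels):
    """Hypothetical helper: turn user supplied labels into docker run args.

    Empty or whitespace-only fields are ignored, mirroring the
    "ignore fields with empty values" fix.
    """
    args = []
    for label in labels:
        if not label or not label.strip():
            continue  # skip empty form fields
        args.extend(["--label", label.strip()])
    return args


print(label_args(["com.example.role=web", "", "  "]))
```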

opened 08:01PM - 23 Nov 18 UTC

closed 07:47PM - 23 Feb 19 UTC

Since 3.9.2-45 build changes were introduced that added several distro aware project wide settings. These changes, relating in this case to Django settings, were not carried over to our test-settings.conf.in. The result is that our unit tests fail to run due to these missing project settings definitions:

```
./bin/test --settings=test-settings -v 3 -p test_btrfs*
...
AttributeError: 'Settings' object has no attribute 'SHUTDOWN'
```

Code Changes:

https://github.com/rockstor/rockstor-core/pull/1999

rockstor:master ← phillxnet:1993_regression_in_unit_tests_-_environment_outdated_since_3.9.2-45

opened 08:28PM - 23 Nov 18 UTC

Adds previously omitted python imports and associated settings to template file test-settings.conf.in. These same changes were previously only applied to the non testing environment template file settings.conf.in.

Fixes test_btrfs* runs.

Fixes #1993

@schakrava Ready for Review.

Apologies as I recently broke (via omission) the test environment (in pr #1990 ) and this is the fix.
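This kind of omission (a setting added to one template but not the other) can be caught early with a simple check. The sketch below is illustrative only: `missing_settings` is a hypothetical helper, `SHUTDOWN` is the setting named in the traceback, and `UDEVADM` is an assumed name used purely as an example.

```python
from types import SimpleNamespace


def missing_settings(settings, required):
    """Hypothetical check: list required setting names absent from `settings`."""
    return [name for name in required if not hasattr(settings, name)]


# A stand-in settings object that defines SHUTDOWN but not UDEVADM.
test_settings = SimpleNamespace(SHUTDOWN="/sbin/shutdown")
print(missing_settings(test_settings, ["SHUTDOWN", "UDEVADM"]))
```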

Proof of fix (using test_btrfs subset of unit tests):

before pr:

```
./bin/test --settings=test-settings -v 3 -p test_btrfs*
...
AttributeError: 'Settings' object has no attribute 'SHUTDOWN'
```

after pr:

```
./bin/test --settings=test-settings -v 3 -p test_btrfs*
test_balance_status_cancel_requested (fs.tests.test_btrfs.BTRFSTests) ... ok
test_balance_status_finished (fs.tests.test_btrfs.BTRFSTests) ... ok
test_balance_status_in_progress (fs.tests.test_btrfs.BTRFSTests) ... ok
test_balance_status_pause_requested (fs.tests.test_btrfs.BTRFSTests) ... ok
test_balance_status_paused (fs.tests.test_btrfs.BTRFSTests)
Test to see if balance_status() correctly identifies a Paused balance ... ok
test_balance_status_unknown_parsing (fs.tests.test_btrfs.BTRFSTests) ... ok
test_balance_status_unknown_unmounted (fs.tests.test_btrfs.BTRFSTests) ... ok
test_degraded_pools_found (fs.tests.test_btrfs.BTRFSTests) ... ok
test_dev_stats_zero (fs.tests.test_btrfs.BTRFSTests) ... ok
test_device_scan_all (fs.tests.test_btrfs.BTRFSTests) ... ok
test_device_scan_parameter (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_dev_io_error_stats (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_pool_raid_levels_identification (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_property_all (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_property_compression (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_property_ro (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_snap_2 (fs.tests.test_btrfs.BTRFSTests) ... ok
test_get_snap_legacy (fs.tests.test_btrfs.BTRFSTests) ... ok
test_is_subvol_exists (fs.tests.test_btrfs.BTRFSTests) ... ok
test_is_subvol_nonexistent (fs.tests.test_btrfs.BTRFSTests) ... ok
test_parse_snap_details (fs.tests.test_btrfs.BTRFSTests) ... ok
test_scrub_status_cancelled (fs.tests.test_btrfs.BTRFSTests) ... ok
test_scrub_status_conn_reset (fs.tests.test_btrfs.BTRFSTests) ... ok
test_scrub_status_finished (fs.tests.test_btrfs.BTRFSTests) ... ok
test_scrub_status_halted (fs.tests.test_btrfs.BTRFSTests) ... ok
test_scrub_status_running (fs.tests.test_btrfs.BTRFSTests) ... ok
test_share_id (fs.tests.test_btrfs.BTRFSTests) ... ok
test_shares_info_legacy_system_pool_fresh (fs.tests.test_btrfs.BTRFSTests) ... ok
test_shares_info_legacy_system_pool_used (fs.tests.test_btrfs.BTRFSTests) ... ok
test_shares_info_system_pool_post_btrfs_subvol_list_path_changes (fs.tests.test_btrfs.BTRFSTests) ... ok
test_shares_info_system_pool_used (fs.tests.test_btrfs.BTRFSTests) ... ok
test_snapshot_idmap_home_rollback (fs.tests.test_btrfs.BTRFSTests) ... ok
test_snapshot_idmap_home_rollback_snap (fs.tests.test_btrfs.BTRFSTests) ... ok
test_snapshot_idmap_mid_replication (fs.tests.test_btrfs.BTRFSTests) ... ok
test_snapshot_idmap_no_snaps (fs.tests.test_btrfs.BTRFSTests) ... ok
test_snapshot_idmap_snapper_root (fs.tests.test_btrfs.BTRFSTests) ... ok
test_volume_usage (fs.tests.test_btrfs.BTRFSTests) ... ok
----------------------------------------------------------------------
Ran 37 tests in 0.044s

OK
```

phillxnet

October 2, 2019, 3:50pm

15

3.9.2-49

Merged end September 2019

I am chuffed, and somewhat relieved, to finally release what is one of our largest and longest awaited releases for some time now. I present the 81st Rockstor.

In this release we welcome and thank first time Rockstor contributor @Psykar for a 2 in one fix for long standing issues of table sorting; nice:

Thanks also to @Flox for a wide range of non trivial fixes/enhancements thus:

@Flox has also been a major player behind the scenes in fashioning our transition to openSUSE, both by way of extensive testing, suggesting, and correcting (of my mistakes) and in the areas he has focused on. He also recently adopted a forum moderator role which is normally a thankless task; thanks @Flox . And has assisted me in final pre-release testing of the RPMs, which includes our first Leap15.1 & Tumbleweed variants, more on this soon.

And a series of run-of-the-mill, and one non trivial, fixes/enhancements from myself:

Thanks also to @suman for tirelessly assisting with my adoption of the maintainer role.

Due to the large number of changes in this release I have used a different format here to avoid a pages long post. Please do follow the GitHub issue links above for full details (change titles from pull requests, #links from their issues).

One final note: if your Rockstor Web-UI is not offering this version then please consider visiting our shiny new complementary self service Appliance ID manager available at: https://appman.rockstor.com . It may be that your subscription has expired.


phillxnet

October 7, 2019, 11:36am

17

Merged 6th October 2019

This one is predominantly a ‘HOT FIX’ for our Web-UI having 10-30 second pauses in Chrome and similar browsers. Firefox seemed to be unaffected, at least some versions.

I have also included a couple of unrelated contributions to help with addressing our backlog of improvements.

We welcome and thank @magicalyak as a first time rockstor-core contributor who, as many of you will know, is very active in our Rock-ons rockon-registry repo.

We have another move toward openSUSE via the prolific @Flox (Thanks @Flox ) with:

And finally the hot fix for “Chrome Go Slow” from myself @phillxnet

pin python-engineio to 2.3.2 as recent 3.0.0 update breaks gevent. Fixes #1995 @phillxnet

Catchy title that last one I know !!

This last fix was ‘resurrected’ from a prior instance of this happening in source builds way back but an upstream ‘mend’ meant that it was then dropped. So we have now finally pinned this library to a set version. We have ongoing plans to re-vamp, and minimise, our library dependencies and this older version ‘pinning’ will be re-addressed.

Thanks to all our stable subscribers and currently unintended testers (working on that) for helping to support Rockstor’s development.

Specifically I'd like to call out forum members @upapi_rockstor , @Bazza , @Warbucks , @Flox , and @wyoham in the following 2 forum threads:

Web GUI after update to 3.9.2.49 not working properly

Data-collector, again?

for helping to bring to light this more recent Chrome compatibility blunder.


phillxnet

December 16, 2019, 5:32pm

18

3.9.2-51

Merged 4th Dec 2019

I am again chuffed to finally release this next instalment in our Stable Channel updates. Thanks to @p-betula-pendula for an innovative power saving / convenience addition to our scheduled shut down feature:

And apologies to p-betula-pendula for not getting this in and released earlier.

Again thanks to @Flox , our intrepid forum moderator and prolific contributor, for the following:

And finally a few enhancements / fixes from myself @phillxnet :

This clears our backlog of currently viable pull requests and will hopefully also mark the beginning of the next phase of Rockstor as this release has a rough split of CentOS and openSUSE specific fixes to:

Improve our config save / restore capabilities, thanks @Flox , which in turn eases the move from a CentOS install to an openSUSE based one.

Fix / Enable our docker and self update capabilities in our openSUSE Leap15.1 offering, @Flox and me, which in turn nudges this offering closer still to feature parity (but not quite there just yet).

And thanks again to our new and very patient first time contributor p-betula-pendula . I tried to get this enhancement into the 49-50 versions but this didn’t end up happening.

Thanks to all our Stable channel subscribers for helping to keep the lights on.

If you have a Stable channel updates subscription and do not see this update available please check your subscription status and relevant Appliance ID via our recently available ‘self service’ Appman facility: https://appman.rockstor.com/

If all looks well and you are still not offered this update then please PM me on the forum with your current Appliance ID and I will look to what the problem may be. There is a known issue for a small subset of our subscribers which I can only sort if contacted.

Also note that if you had/have updated from our now legacy CentOS testing channel your system may not actually be running what it indicates. This bug does not affect systems that have never been on the testing channel. Run “yum info rockstor” to confirm your installed version.


phillxnet

January 28, 2020, 5:09pm

19

3.9.2-52

Merged 27th Jan 2020

I am glad to announce our slightly late Chinese New Year release by way of a fresh installment to our Stable Channel updates.

We have no first time contributors this time and this release includes the following fixes / improvements from myself and our prolific forum moderator @Flox :

[Config Backup & Restore] Implement backup and restore of rock-ons. Fixes #2065 @Flox

improve/add update channel/appman related text and doc links. Fixes #2106 @phillxnet

revise donate menu item within Web-UI. Fixes #2104 @phillxnet @Flox

pin django-braces to restore django version compatibility. Fixes #2102 @phillxnet

None are openSUSE specific this time, which is rare these days and probably a good sign.

The major improvement / feature-add in this release is @Flox ’s addition of Rock-ons to our config save and restore, intended primarily for use when transitioning a pool from one machine to another. This is a long desired and requested feature that we finally have in place. Both @Flox and I have tested this feature for moving a config and pool from a CentOS Stable Channel rpm install to our still very much in early alpha testing “Build on openSUSE” rpm offering for Leap15.1 in our Testing Channel.

Thanks to all our Stable channel subscribers for helping to keep the lights on.

If you have a Stable channel updates subscription and do not see this update available please check your subscription status and relevant Appliance ID via our recently available ‘self service’ Appman facility: https://appman.rockstor.com/

If all looks well and you are still not offered this update then please PM me on the forum with your current Appliance ID and I will look to what the problem may be. There is a known issue for a small subset of our subscribers which I can only sort if contacted.

Also note that if you had/have updated from our now legacy CentOS testing channel your system may not actually be running what it indicates. This bug does not affect systems that have never been on the testing channel. Run “yum info rockstor” to confirm your installed version.


phillxnet

February 11, 2020, 9:47pm

20

3.9.2-53

Merged 11th Feb 2020

I am again glad to announce the release of another Stable Channel update. This release is particularly interesting as we have not one but two new contributors.

Please join me in thanking forum members @Celtis (jonpwilson) and @def_monk (defmonk0) for joining the growing list of rockstor-core contributors. We also have some contributions from our prolific forum moderator and long time core contributor @Flox and one from myself @phillxnet :

Although there are a lot of changes here, part of the reason this Stable channel release is ‘early’, they are all fairly self explanatory; so I’ll keep this post short and wish you all well with this update.

The only note of caution here is that this update contains the first database update we have had for just over a year (another reason to add no more changes and release early). This one is on me. But this database update has been well tested and the details are in the given issue link above. It is however a trivial database change, so that is on our side.

Thanks again to all our Stable channel subscribers and contributors who help to keep this Open Source show on the road.
